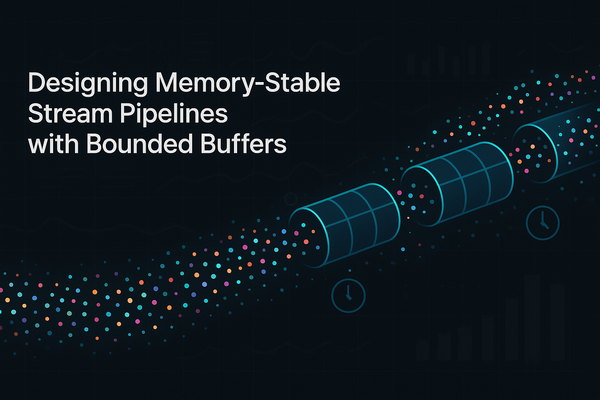

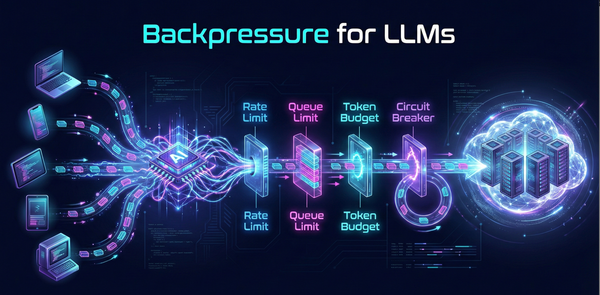

Backpressure for LLMs: Managing Load Spikes and Token Floods in Real-Time AI Services

LLM features usually start life in a happy place: a handful of users, low traffic, and plenty of quota. Latency looks fine, tokens are cheap, logs are quiet. Then a product launch, a marketing campaign, or a new “Ask AI” button lands — and suddenly your once-stable service is drowning in