Flash Endurance: Smart Storage Strategies for Embedded Edge Systems

Storage wear & tear — practical engineering guide from Ever-bowl’s memory pipeline

This post explains how the Ever-bowl platform uses PSRAM as a buffer and a flash-aware firmware stack to extend flash lifetime. It includes concrete implementation pitfalls and solutions, datasheet-backed guidance, and a practical checklist for engineers building similar capture systems.

Why storage matters for always-on edge vision

Edge devices that continuously capture high-frame-rate images or thermal maps face two conflicting needs: storing large amounts of data reliably, and staying alive for years in the field. Flash memory is dense and persistent, but it has finite program/erase (P/E) endurance and erase-before-write semantics. Left unmanaged, frequent small writes (for example, one write per frame) rapidly exhaust flash life. Ever-bowl buffers captures in PSRAM, batches and aligns writes to NOR flash, and performs wear-aware allocation to avoid hot spots. The design balances throughput, reliability, and longevity for real deployments.

The core problem: NOR flash characteristics you must design for

Serial NOR flash devices (Winbond W25Q256 series) typically present:

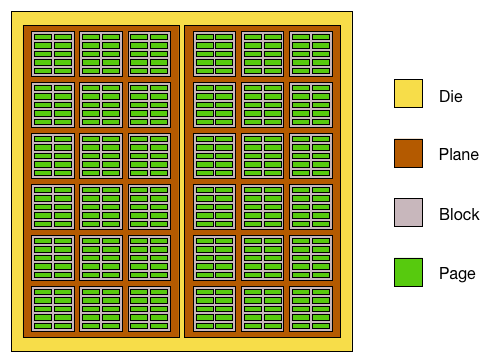

- Sector vs block layout: small programmable pages (e.g., 256 B), erasable sectors (commonly 4 KB) and larger erase blocks (e.g., 64 KB). Erase is performed per sector/block, not per byte.

- Limited P/E cycles: typical NOR flash endurance is in the 10⁴–10⁵ erase cycles range — datasheet numbers are the authoritative reference when sizing lifetime guarantees.

- Erase-before-write semantics: updating small pieces of data often forces erasing a large block, accelerating wear.
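The erase-before-write rule in the list above can be illustrated with a small host-side model. This is a sketch rather than driver code: `nor_sector_t`, `nor_erase_sector`, `nor_program`, and `nor_update` are hypothetical names, and the 4 KB sector size is the common figure quoted above. Programming can only clear bits (1 to 0); only an erase sets them back to 1.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define SECTOR_SIZE 4096u

/* Host-side model of one NOR sector; erased flash reads as 0xFF. */
typedef struct {
    uint8_t  bytes[SECTOR_SIZE];
    uint32_t erase_count;   /* wear accumulated by this sector */
} nor_sector_t;

/* Erase sets every bit in the sector back to 1 and costs one P/E cycle. */
static void nor_erase_sector(nor_sector_t *s) {
    memset(s->bytes, 0xFF, sizeof s->bytes);
    s->erase_count++;
}

/* Programming can only clear bits, so the stored value is (old AND new). */
static void nor_program(nor_sector_t *s, uint32_t off, const uint8_t *src, uint32_t len) {
    assert(off <= SECTOR_SIZE && len <= SECTOR_SIZE - off);
    for (uint32_t i = 0; i < len; i++) {
        s->bytes[off + i] &= src[i];
    }
}

/* Update bytes in place. Returns 1 if a sector erase was required, 0 otherwise. */
static int nor_update(nor_sector_t *s, uint32_t off, const uint8_t *src, uint32_t len) {
    assert(off <= SECTOR_SIZE && len <= SECTOR_SIZE - off);
    int need_erase = 0;
    for (uint32_t i = 0; i < len; i++) {
        /* A bit that is 0 in flash but 1 in the new data cannot be programmed. */
        if ((uint8_t)(~s->bytes[off + i] & src[i]) != 0) { need_erase = 1; break; }
    }
    if (need_erase) {
        uint8_t copy[SECTOR_SIZE];
        memcpy(copy, s->bytes, sizeof copy);   /* read-modify-write of the whole sector */
        memcpy(copy + off, src, len);
        nor_erase_sector(s);
        nor_program(s, 0, copy, SECTOR_SIZE);
    } else {
        nor_program(s, off, src, len);
    }
    return need_erase;
}
```

In this model, changing a single byte from 0x00 to 0x0F costs a full 4 KB erase and reprogram, which is the wear mechanism the rest of this post works to avoid.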

Consequences for capture systems:

- Writing each frame as a separate small file will force repeated erases of the same blocks (hot spots).

- Metadata tables (indices) updated frequently concentrate wear unless rotated or avoided.

- Poor board design, such as signal-integrity problems or improper power sequencing, can cause transient write errors that corrupt blocks and force retries, each of which adds wear.

Ever-bowl pairs a QSPI NOR flash (W25Q256) with parallel PSRAM, attached to the STM32H5's OCTOSPI and Flexible Memory Controller (FMC) interfaces respectively. The hardware enables high-throughput buffering and commits, but the endurance gains depend on firmware: how writes are batched, aligned, and distributed. STMicroelectronics' reference manual confirms OCTOSPI support for up to 200 MHz in octal mode and DMA integration for efficient transfers.

Lifetime Calculations: Quantifying Endurance

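To estimate flash life, assuming writes are spread evenly across the device, use the formula: Lifetime (years) = (Total Cycles × Block Size × Blocks) / (Daily Write Volume × Wear Amplification × 365). Wear amplification is the ratio of bytes erased and reprogrammed to payload bytes written.

Example: For a 32 MB NOR flash (W25Q256: 256 Mbit, 100k P/E cycles, 8,192 sectors of 4 KB), capturing 10 fps at 1 KB/frame (864,000 KB/day):

- Without batching: ~1 sector erase per frame → wear amplification ~4 (4 KB erased per 1 KB of payload, before metadata overhead) → lifetime ~2.6 years, and far less if the erases concentrate on a few hot sectors.

- With a 4 MB PSRAM buffer (batching 4,000 frames): ~1 erase per 4 KB of payload → amplification ~1.1 → lifetime ~9.4 years.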
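The lifetime formula can be sketched as a small helper for trying out different buffer sizes and frame rates. The function names are hypothetical, and the model assumes ideal wear leveling (every sector absorbs an equal share of erases), so treat the result as an upper bound rather than a guarantee.

```c
#include <assert.h>

/* Lifetime in years for a device whose writes are spread evenly over all sectors.
 * cycles:    rated P/E cycles per sector (from the datasheet)
 * sector_kb: erase sector size in KB
 * sectors:   number of sectors in the device
 * daily_kb:  payload written per day in KB
 * wear_amp:  bytes erased and reprogrammed per payload byte (>= 1) */
static double flash_lifetime_years(double cycles, double sector_kb, double sectors,
                                   double daily_kb, double wear_amp) {
    assert(daily_kb > 0.0 && wear_amp >= 1.0);
    double budget_kb = cycles * sector_kb * sectors;   /* total KB the array can absorb */
    double days = budget_kb / (daily_kb * wear_amp);
    return days / 365.0;
}

/* Daily payload in KB for a fixed frame rate and frame size. */
static double daily_write_kb(double fps, double frame_kb) {
    return fps * frame_kb * 86400.0;   /* 86,400 seconds per day */
}
```

A call such as `flash_lifetime_years(100000, 4, 8192, daily_write_kb(10, 1), 1.1)` evaluates one scenario; sweeping `wear_amp` shows how strongly batching drives the result.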

Pattern recognition — how wear happens and how PSRAM helps

Common harmful I/O patterns:

- Repeated small updates to the same sectors (metadata, logs).

- In-place updates (rewrite file header in same area).

- Frequent random writes without aggregation.

PSRAM serves as a volatile "shock absorber": it is fast (~70 ns access time for ISSI devices), has no wear limits, and suits circular buffers fed by DMA captures. Ever-bowl uses PSRAM to ingest DCMI/DMA frames from camera sensors, then commits batched, aligned chunks to flash, reducing erase counts and command overhead. The ISSI IS66WVE4M16E datasheet specifies 1.65–1.95 V operation and 70 ns asynchronous access, making it suitable for intermediate buffering in low-power edge setups. General flash practice points the same way: avoid frequent small writes and use wear leveling to spread cycles evenly.

Ever-bowl’s storage architecture (what, why & how)

Architecture summary

- Capture path: camera sensors → DCMI → DMA writes into a PSRAM circular buffer.

- Threshold: firmware monitors buffer occupancy; when a configurable threshold is reached, it schedules an offload.

- Offload: firmware briefly suspends or throttles capture, then streams large aligned chunks from PSRAM into QSPI NOR flash using optimized QSPI page-program sequences.

- Resume: capture restarts, and firmware updates the indices and wear tables in the flash metadata area.
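The buffer-and-threshold part of this pipeline can be sketched as a byte ring with an occupancy trigger. This is a single-threaded host-side illustration, not Ever-bowl's firmware: the type and function names, the 16 KB capacity, and the 12 KB trigger are all assumptions, and a real DMA producer would need interrupt-safe updates of `used`.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define RING_CAPACITY   (16u * 1024u)   /* illustrative; a PSRAM buffer would be megabytes */
#define OFFLOAD_TRIGGER (12u * 1024u)   /* configurable occupancy threshold */

typedef struct {
    uint8_t  data[RING_CAPACITY];   /* on target this array would be placed in PSRAM */
    uint32_t head;                  /* next byte to write (producer: capture path) */
    uint32_t tail;                  /* next byte to read (consumer: flash offload) */
    uint32_t used;                  /* bytes currently buffered */
} ring_t;

/* Copy a frame in. Returns 0 on success, -1 if it would overrun unread data. */
static int ring_push(ring_t *r, const uint8_t *frame, uint32_t len) {
    if (len > RING_CAPACITY - r->used) return -1;
    uint32_t first = RING_CAPACITY - r->head;      /* contiguous room before the wrap */
    if (first > len) first = len;
    memcpy(&r->data[r->head], frame, first);
    memcpy(&r->data[0], frame + first, len - first);
    r->head = (r->head + len) % RING_CAPACITY;
    r->used += len;
    return 0;
}

/* True once enough data is buffered to justify a batched flash commit. */
static int ring_should_offload(const ring_t *r) {
    return r->used >= OFFLOAD_TRIGGER;
}

/* Drain up to max_len bytes into out. Returns the number of bytes copied. */
static uint32_t ring_pop(ring_t *r, uint8_t *out, uint32_t max_len) {
    uint32_t len = r->used < max_len ? r->used : max_len;
    uint32_t first = RING_CAPACITY - r->tail;
    if (first > len) first = len;
    memcpy(out, &r->data[r->tail], first);
    memcpy(out + first, &r->data[0], len - first);
    r->tail = (r->tail + len) % RING_CAPACITY;
    r->used -= len;
    assert(r->used <= RING_CAPACITY);
    return len;
}
```

Draining in multiples of the flash sector size keeps each commit aligned to an erase boundary.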

Why batching & alignment are essential

- Fewer erase cycles: writing N frames as one contiguous block requires far fewer erases than N per-frame writes. Realistic gains are often orders of magnitude depending on chunking size.

- Higher QSPI efficiency: QSPI write commands have fixed overhead; large writes amortize that overhead and boost throughput.
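Alignment can be made concrete with a helper that splits a commit into page-program operations that never cross a page boundary, since serial NOR parts such as the W25Q family wrap within the page when a program crosses its end. This is a sketch under assumptions: `page_program_fn` is a hypothetical callback standing in for the real QSPI command sequence, and `record_max_chunk` is a stub for host-side experiments.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define PAGE_SIZE   256u
#define SECTOR_SIZE 4096u

/* Hypothetical callback type: issues one page-program command, returns 0 on success. */
typedef int (*page_program_fn)(uint32_t addr, const uint8_t *data, uint32_t len, void *ctx);

/* Round a length up to a whole number of sectors so a commit ends on an erase boundary. */
static uint32_t round_up_to_sector(uint32_t len) {
    return (len + SECTOR_SIZE - 1u) / SECTOR_SIZE * SECTOR_SIZE;
}

/* Split [addr, addr + len) into program operations that stay inside one page each.
 * Returns the number of page-program commands issued, or -1 on the first failure. */
static int program_in_pages(uint32_t addr, const uint8_t *data, uint32_t len,
                            page_program_fn program, void *ctx) {
    assert(program != NULL);
    int ops = 0;
    while (len > 0u) {
        uint32_t room = PAGE_SIZE - (addr % PAGE_SIZE);   /* bytes left in this page */
        uint32_t n = len < room ? len : room;
        if (program(addr, data, n, ctx) != 0) return -1;
        addr += n;
        data += n;
        len  -= n;
        ops++;
    }
    return ops;
}

/* Stub callback for host-side experiments: records the largest chunk it was handed. */
static int record_max_chunk(uint32_t addr, const uint8_t *data, uint32_t len, void *ctx) {
    (void)addr;
    (void)data;
    uint32_t *max_len = (uint32_t *)ctx;
    if (len > *max_len) *max_len = len;
    return 0;
}
```

A page-aligned 4 KB commit becomes 16 full-page programs, while an unaligned 100-byte write starting at offset 200 needs two commands for far less payload, which is the fixed overhead that batching amortizes.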

Flash-level strategies to extend life

Partitioning & isolation

Create partitions for: metadata/index (small, high-update), frame store (append/circular), long-term archive, and logs. Isolate frequently updated data so you can apply special wear mitigation. Ever-bowl uses separate logical partitions for indices vs frame data.

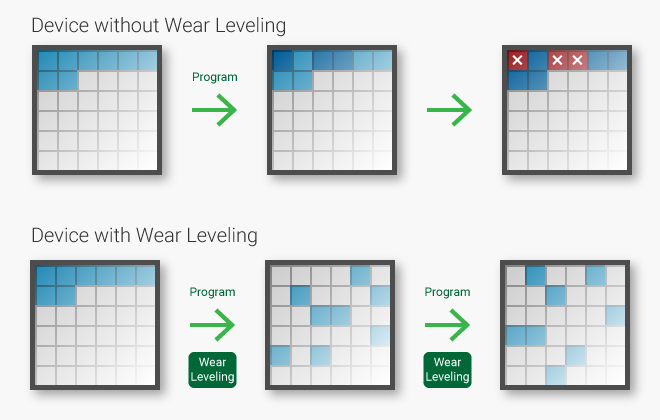
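One way to express such a layout in firmware is a static partition table. The sketch below is illustrative only: the partition sizes are assumptions chosen to fill a 32 MB (8,192-sector) W25Q256, and the names `partition_t`, `k_partitions`, `partition_offset`, and `partitions_valid` are hypothetical, not taken from Ever-bowl's code.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define SECTOR_SIZE 4096u

typedef enum { PART_META, PART_FRAMES, PART_ARCHIVE, PART_LOGS, PART_COUNT } part_id_t;

typedef struct {
    const char *name;
    uint32_t    first_sector;
    uint32_t    sector_count;
} partition_t;

/* Illustrative split of an 8,192-sector device; the sizes are assumptions. */
static const partition_t k_partitions[PART_COUNT] = {
    [PART_META]    = { "meta",    0u,    64u   },   /* small, frequently updated */
    [PART_FRAMES]  = { "frames",  64u,   6080u },   /* append/circular frame store */
    [PART_ARCHIVE] = { "archive", 6144u, 1920u },   /* long-term, rarely rewritten */
    [PART_LOGS]    = { "logs",    8064u, 128u  },   /* append-only logs */
};

/* Translate a partition-relative sector index into a device byte offset. */
static uint32_t partition_offset(part_id_t id, uint32_t rel_sector) {
    assert(id < PART_COUNT && rel_sector < k_partitions[id].sector_count);
    return (k_partitions[id].first_sector + rel_sector) * SECTOR_SIZE;
}

/* Returns 1 if the partitions are contiguous, non-overlapping, and fill the device. */
static int partitions_valid(uint32_t total_sectors) {
    uint32_t next = 0u;
    for (size_t i = 0; i < PART_COUNT; i++) {
        if (k_partitions[i].first_sector != next) return 0;
        next += k_partitions[i].sector_count;
    }
    return next == total_sectors;
}
```

Running `partitions_valid` once at boot catches a table that no longer matches the fitted flash part.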

Pseudo wear-leveling (rotate write zones)

Advance a "next_write_sector" pointer after each commit to distribute writes across the flash. This simple technique is effective for fixed-capacity systems under full control. For instance, on a 32 MB (256 Mbit) W25Q256 with 4 KB sectors, rotating across all 8,192 sectors spreads erases evenly, provided no region is pinned by static data.

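```c
#include <stddef.h>
#include <stdint.h>
#include "stm32h5xx_hal.h"   // HAL_StatusTypeDef, HAL_OK, HAL_ERROR

#define SECTOR_SIZE   4096u
#define TOTAL_SECTORS 8192u  // W25Q256: 256 Mbit = 32 MB / 4 KB

// Placeholder driver hooks (not HAL APIs): wrap your QSPI erase/program command sequences.
extern HAL_StatusTypeDef flash_erase_sector(uint32_t offset);
extern HAL_StatusTypeDef flash_program(uint32_t offset, const uint8_t *data, uint32_t size);

static uint32_t next_write_sector = 0;

static void advance_write_pointer(void) {
    next_write_sector = (next_write_sector + 1u) % TOTAL_SECTORS;
    // Optional: skip sectors listed in a bad-block table
}

// Writes one chunk of up to SECTOR_SIZE bytes to the next sector in the rotation.
HAL_StatusTypeDef write_to_flash(const uint8_t *data, uint32_t size) {
    if (data == NULL || size == 0u || size > SECTOR_SIZE) return HAL_ERROR;
    // Device-relative offset for indirect-mode commands, not the memory-mapped address
    uint32_t offset = next_write_sector * SECTOR_SIZE;
    HAL_StatusTypeDef status = flash_erase_sector(offset);
    if (status == HAL_OK) status = flash_program(offset, data, size);
    advance_write_pointer();  // rotate even on failure so a failing sector is not retried at once
    return status;
}
```

Append-only / circular buffers for frequent small items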

For telemetry or small logs, use append-only areas that wrap; avoid erasing metadata on every update.

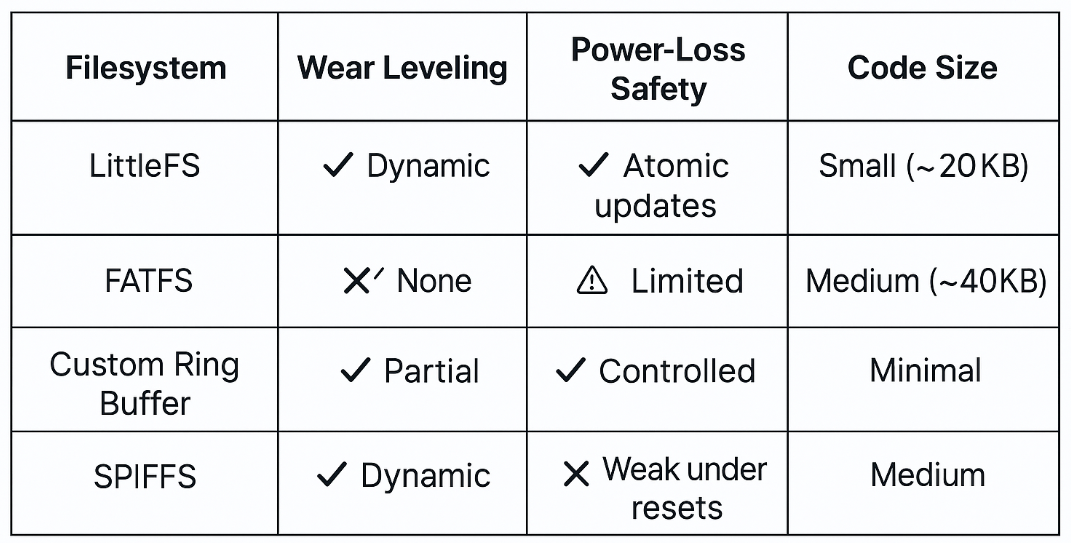
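The append-and-wrap idea can be sketched with a host-side model of a small log region. The names, the 16-byte record size, and the 4-sector region are assumptions for illustration. The point is that an erase happens once per sector's worth of records instead of once per record.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define SECTOR_SIZE        4096u
#define LOG_SECTORS        4u      /* illustrative region size */
#define RECORD_SIZE        16u
#define RECORDS_PER_SECTOR (SECTOR_SIZE / RECORD_SIZE)

typedef struct {
    uint8_t  flash[LOG_SECTORS][SECTOR_SIZE];   /* host-side stand-in for the log partition */
    uint32_t erase_count[LOG_SECTORS];
    uint32_t sector;                            /* sector currently being filled */
    uint32_t slot;                              /* next free record slot in that sector */
} log_area_t;

static void log_erase(log_area_t *a, uint32_t sector) {
    memset(a->flash[sector], 0xFF, SECTOR_SIZE);
    a->erase_count[sector]++;
}

/* Start from a fully erased region with the write position at sector 0, slot 0. */
static void log_init(log_area_t *a) {
    memset(a, 0, sizeof *a);
    for (uint32_t s = 0; s < LOG_SECTORS; s++) log_erase(a, s);
}

/* Append one record. An erase happens only when the write position moves into
 * the next sector, i.e. once per RECORDS_PER_SECTOR appends. */
static void log_append(log_area_t *a, const uint8_t *rec) {
    if (a->slot == RECORDS_PER_SECTOR) {
        a->sector = (a->sector + 1u) % LOG_SECTORS;   /* wrap: the oldest sector is reclaimed */
        a->slot = 0u;
        log_erase(a, a->sector);
    }
    assert(a->slot < RECORDS_PER_SECTOR);
    memcpy(&a->flash[a->sector][a->slot * RECORD_SIZE], rec, RECORD_SIZE);
    a->slot++;
}
```

With 16-byte records and 4 KB sectors, 256 appends share one erase. On real hardware the write position could be recovered after a reset by scanning for the first slot that still reads as all 0xFF.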

Filesystem choice & limitations

Use a flash-aware FS like LittleFS for dynamic wear leveling and bad-block handling. Note: LittleFS provides dynamic wear leveling (it primarily balances wear among free/dynamic blocks) but not full static wear leveling; when you have large static data + a small hot set, design partitions accordingly. Read LittleFS docs for limitations before assuming perfect leveling.

Bad-block handling & verification

Always verify writes and erases post-operation. On persistent failures, mark blocks bad and remap using a mirrored table in reserved flash. Ever-bowl's manager employs conservative error handling. ST community forums recommend integrity checks after power events.
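A read-back check with retry and a mirrored bad-block table might look like the following host-side sketch. It uses a simulated flash with an injectable stuck bit so the failure path can be exercised. All names, the retry count, and the table layout are assumptions, not Ever-bowl's actual manager.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define SECTOR_SIZE 4096u
#define NUM_SECTORS 8u      /* small illustrative device */
#define MAX_RETRIES 2u

typedef struct {
    uint8_t mem[NUM_SECTORS][SECTOR_SIZE];
    uint8_t stuck[NUM_SECTORS];   /* fault injection: 1 = bit 0 of byte 0 is stuck at 0 */
    uint8_t bad_a[NUM_SECTORS];   /* bad-block table, primary copy */
    uint8_t bad_b[NUM_SECTORS];   /* mirrored copy (would live in reserved flash) */
} sim_flash_t;

static void sim_erase(sim_flash_t *f, uint32_t s) {
    memset(f->mem[s], 0xFF, SECTOR_SIZE);
    if (f->stuck[s]) f->mem[s][0] &= 0xFE;   /* the stuck bit survives the erase */
}

static void sim_program(sim_flash_t *f, uint32_t s, const uint8_t *d, uint32_t n) {
    for (uint32_t i = 0; i < n; i++) f->mem[s][i] &= d[i];   /* program only clears bits */
}

/* Erase, program, read back. Returns 0 on success, -1 after marking the sector bad. */
static int write_verified(sim_flash_t *f, uint32_t s, const uint8_t *d, uint32_t n) {
    assert(s < NUM_SECTORS && n <= SECTOR_SIZE);
    for (uint32_t attempt = 0; attempt <= MAX_RETRIES; attempt++) {
        sim_erase(f, s);
        sim_program(f, s, d, n);
        if (memcmp(f->mem[s], d, n) == 0) return 0;   /* read-back matches */
    }
    f->bad_a[s] = 1;   /* persistent failure: record it in both table copies */
    f->bad_b[s] = 1;
    return -1;
}

/* Write to the first sector at or after *s that is not marked bad; updates *s. */
static int write_with_remap(sim_flash_t *f, uint32_t *s, const uint8_t *d, uint32_t n) {
    for (uint32_t tried = 0; tried < NUM_SECTORS; tried++) {
        uint32_t cur = (*s + tried) % NUM_SECTORS;
        if (f->bad_a[cur] || f->bad_b[cur]) continue;   /* either copy can veto a sector */
        if (write_verified(f, cur, d, n) == 0) { *s = cur; return 0; }
    }
    return -1;   /* no usable sector left */
}
```

Treating a sector as bad when either table copy says so is a conservative choice: a mirror that was only half-updated before a power loss still protects the data.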

Takeaways & Practical Checklist

- Buffer Bursts: Use PSRAM for fast, wear-free buffering; commit large aligned chunks to NOR. (ISSI specs: 70 ns access).

- Partition Data: Isolate hot metadata from bulk frames to apply targeted leveling.

- Rotate & Level: Implement zone rotation and LittleFS, but review docs for static data limits. (GitHub: littlefs-project).

- Verify & Handle Errors: Add post-write verification, bad-block tables (mirrored), and power-loss recovery.

- PCB Best Practices: Maintain strict signal integrity (SI) with length-matched traces and proper termination; follow TI guidelines for high-speed memory.

- Calculate Lifetime: Use formulas to size buffers; test with real workloads.

- Code Integration: Start with STM32 HAL for QSPI/FMC; add custom wear logic.

About Ever-bowl

Ever-bowl develops robust edge vision systems integrating image, thermal, and proximity sensing. The storage strategies here enhance field reliability, minimize returns, and support long-term data for analytics and ML. Hardware schematics and firmware from Ever-bowl underpin these recommendations.

Sources & Further Reading

- Winbond W25Q256JW Datasheet

- ISSI IS66WVE4M16E PSRAM Datasheet

- STM32H5 Reference Manual

- LittleFS Project Docs

- TI High-Speed Interface Layout Guidelines

- Macronix AN on NOR Endurance

- Ever-bowl Schematic and Firmware Excerpts

Key Citations

- STM32 Wear Leveling for 3 Million Double Writes

- FAT Filesystem and Flash Endurance

- Would Writing to the Same Flash Address Reduce Lifespan

- What Happens in Power Outage During NOR Flash Erase

- Understanding Life Expectancy of Flash Storage

- Program/Erase Cycling Endurance in NOR Flash

- Improving NAND Flash Lifetime by Balancing Page Endurance

- High-Speed Interface Layout Guidelines

- K2G PCB Guidelines for QSPI

- TN-25-09: Layout Guidelines – Serial NOR Flash