Queue vs. Direct Task Notify Performance Trade-offs in FreeRTOS ITC

Modern embedded systems demand efficient inter-task communication (ITC) mechanisms that balance performance with reliability. In high-performance sensor systems every microsecond counts, especially in real-time applications where hardware interrupts must trigger rapid processing pipelines. This article examines two fundamental FreeRTOS ITC approaches, traditional queues and direct task notifications, and the performance implications that can make or break your system's responsiveness.

The Challenge: When Hardware Speed Meets Software Reality

In precision sensor systems, the gap between hardware capability and software overhead becomes painfully apparent. Consider a thermal imaging sensor generating frames at 30 FPS: each frame must be captured, processed, and stored within a 33.3 ms window. Traditional ITC mechanisms often introduce latencies that accumulate across the processing pipeline, ultimately constraining overall system throughput.
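To make the frame budget concrete, the following host-side sketch computes the frame period and the share of it spent on ITC. The function names and the operation counts used with them are illustrative assumptions, not measurements from the system described here.

```c
#include <assert.h>
#include <stdint.h>

/* Frame period in microseconds for a given frame rate (integer division). */
static uint32_t frame_period_us(uint32_t fps)
{
    assert(fps > 0u);
    return 1000000u / fps;
}

/* Total ITC time per frame for a hypothetical number of ITC operations
 * per frame and a per-operation latency in microseconds. */
static uint32_t itc_time_per_frame_us(uint32_t ops_per_frame, uint32_t latency_us)
{
    return ops_per_frame * latency_us;
}

/* Share of the frame budget spent on ITC, in tenths of a percent. */
static uint32_t itc_budget_permille(uint32_t fps, uint32_t ops_per_frame,
                                    uint32_t latency_us)
{
    return (itc_time_per_frame_us(ops_per_frame, latency_us) * 1000u)
           / frame_period_us(fps);
}
```

For example, `itc_budget_permille(30, 100, 25)` reports how much of a 30 FPS frame would go to 100 hypothetical operations of 25μs each.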

Understanding the Fundamentals

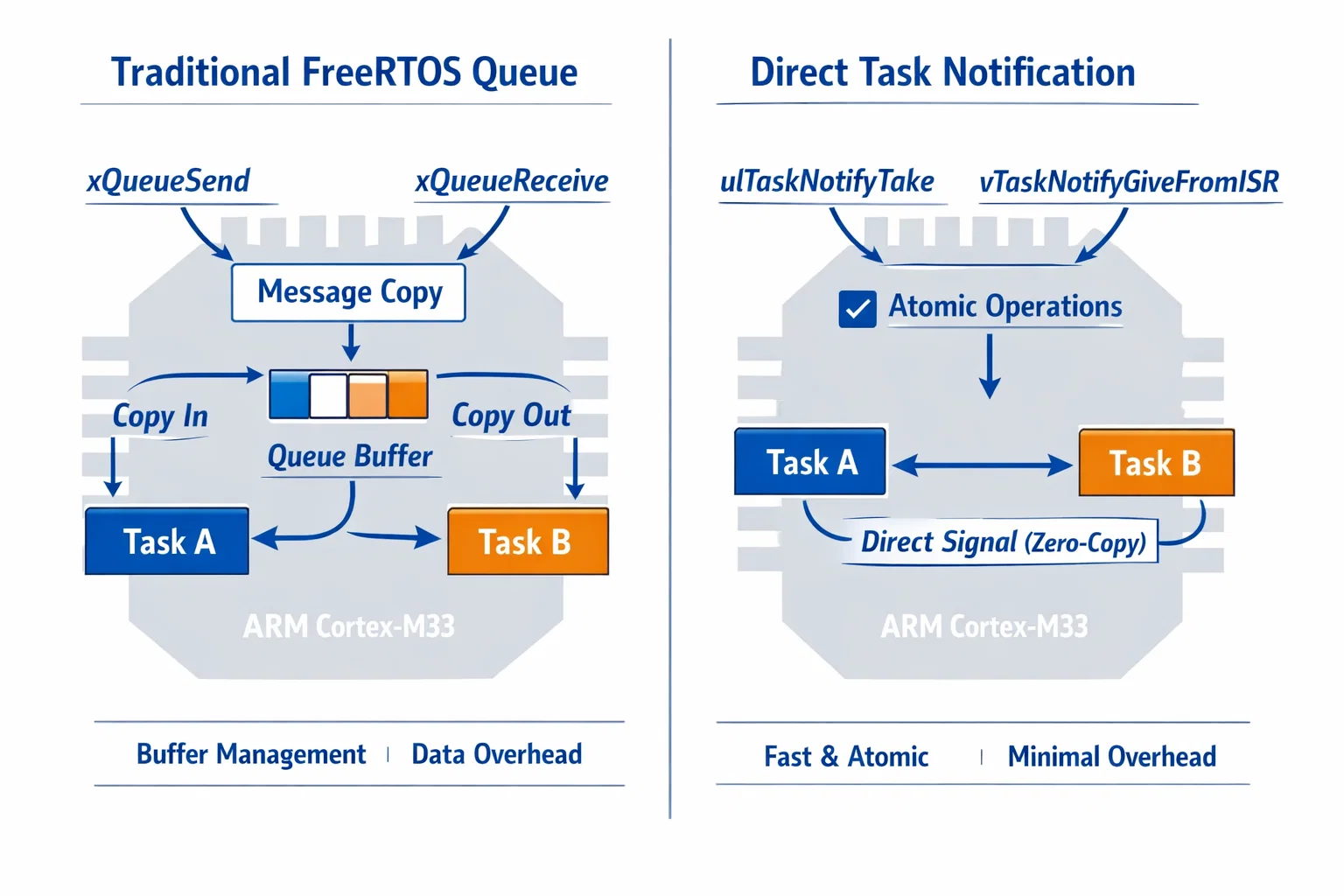

FreeRTOS Queue Mechanism

Queues represent FreeRTOS's traditional approach to inter-task communication. They provide a robust, feature-rich solution for passing data between tasks with built-in synchronization and buffering capabilities.

Core Characteristics:

- Message Storage: Queues maintain internal buffers to store message copies

- Data Copying: Messages are copied into and out of queue storage

- Multiple Producers/Consumers: Support multiple tasks sending and receiving

- Blocking Operations: Tasks can block waiting for queue space or messages

- Memory Overhead: Each queue requires dedicated storage for message buffers
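The copy-in, copy-out behavior listed above can be illustrated with a small host-side model. This is a simplified sketch, not FreeRTOS's implementation: the `model_queue_*` names are hypothetical, and a full queue simply returns 0 here, whereas a real queue can block the sender for a timeout.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define MODEL_QUEUE_LENGTH 4u
#define MODEL_ITEM_SIZE    sizeof(uint32_t)

/* Host-side model of a fixed-length, copy-by-value FIFO. */
typedef struct {
    uint8_t storage[MODEL_QUEUE_LENGTH * MODEL_ITEM_SIZE];
    size_t head;   /* index of the oldest stored item */
    size_t count;  /* number of items currently stored */
} model_queue_t;

static void model_queue_init(model_queue_t *q)
{
    assert(q != NULL);
    memset(q, 0, sizeof *q);
}

/* Copies the item into queue storage; returns 0 if the queue is full. */
static int model_queue_send(model_queue_t *q, const void *item)
{
    assert(q != NULL && item != NULL);
    if (q->count == MODEL_QUEUE_LENGTH) {
        return 0;
    }
    size_t tail = (q->head + q->count) % MODEL_QUEUE_LENGTH;
    memcpy(&q->storage[tail * MODEL_ITEM_SIZE], item, MODEL_ITEM_SIZE);
    q->count++;
    return 1;
}

/* Copies the oldest item out of queue storage; returns 0 if empty. */
static int model_queue_receive(model_queue_t *q, void *out)
{
    assert(q != NULL && out != NULL);
    if (q->count == 0u) {
        return 0;
    }
    memcpy(out, &q->storage[q->head * MODEL_ITEM_SIZE], MODEL_ITEM_SIZE);
    q->head = (q->head + 1u) % MODEL_QUEUE_LENGTH;
    q->count--;
    return 1;
}
```

Because the item is copied on send, the sender can reuse or overwrite its own variable immediately; the cost is one copy in and one copy out per message.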

Direct Task Notification System

Introduced in FreeRTOS v8.2.0, direct task notifications provide a lightweight alternative for simple signaling scenarios. The notification value and state live in the receiving task's control block (TCB), so no separate queue object is needed. Since FreeRTOS v10.4.0, each task holds an array of notification values rather than a single one.

Core Characteristics:

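- Zero Copy: No message payload is copied; only a 32-bit notification value is updated

- Minimal Memory: Uses existing task control block storage

- Single Receiver: A notification targets one specific task; several tasks or ISRs may notify it, but only that task can wait on it

- Lightweight Updates: The notification value is set, incremented, or bit-ORed inside a short critical section

- Faster Unblocking: Less kernel bookkeeping than a queue when waking the waiting task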
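When used through the give/take calls, the notification value behaves like a counter. The sketch below is a non-blocking host-side model of that behavior, assuming the documented semantics of `ulTaskNotifyTake` (it returns the value before it is cleared or decremented). The `model_*` names are hypothetical, and the real call blocks while the value is zero.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Host-side stand-in for the notification value held in a task's TCB. */
typedef struct {
    uint32_t notify_value;
} model_task_t;

/* Models a "give": increments the target task's notification value. */
static void model_notify_give(model_task_t *task)
{
    assert(task != NULL);
    task->notify_value++;
}

/* Models a non-blocking "take". Returns the value as it was before the
 * call. With clear_on_exit set, the value is reset to zero (binary
 * semaphore style); otherwise it is decremented (counting style). */
static uint32_t model_notify_take(model_task_t *task, int clear_on_exit)
{
    assert(task != NULL);
    uint32_t before = task->notify_value;
    if (before != 0u) {
        task->notify_value = clear_on_exit ? 0u : before - 1u;
    }
    return before;
}
```

With clear-on-exit, several gives that arrive before the task runs collapse into one wake-up whose return value says how many events were pending.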

Performance Analysis: Real-World Impact

Our analysis of high-performance camera systems reveals significant performance differences between these ITC mechanisms. These measurements come from ARM Cortex-M33 implementations running at 250MHz, representing typical industrial IoT processing capabilities.

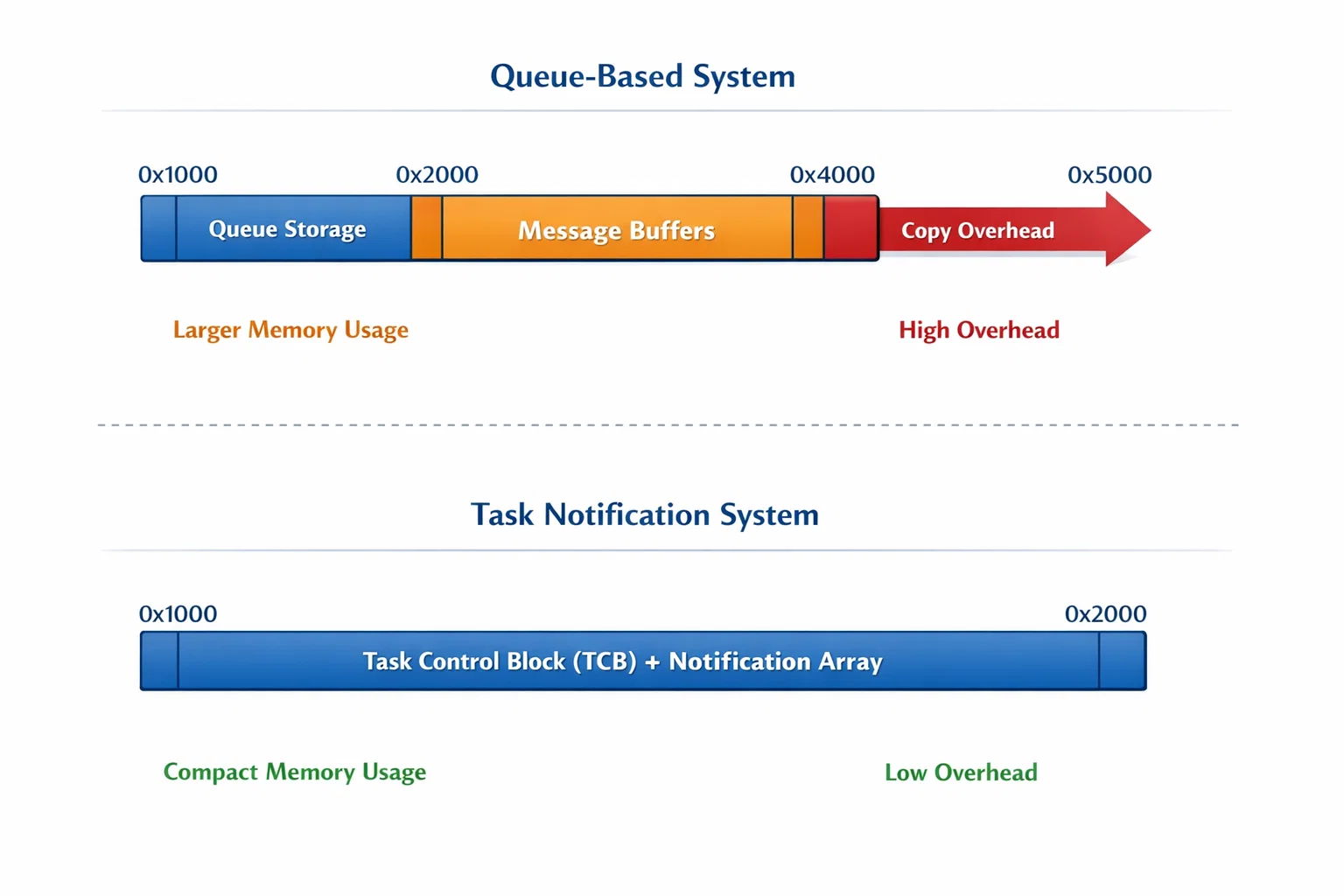

Memory Footprint Comparison

Queue Implementation:

// Traditional queue approach - storage overhead

QueueHandle_t transmission_queue;

transmission_queue = xQueueCreate(8, sizeof(transmission_msg_t));

// Message storage: 8 × 20-byte messages = 160 bytes, plus the queue's own control structure

Direct Task Notification:

// Zero additional memory required

ulTaskNotifyTake(pdTRUE, portMAX_DELAY);

// Memory usage: 0 bytes (uses existing TCB)

The memory savings become substantial in systems with multiple communication channels. A typical sensor processing pipeline might require 10-15 communication paths; at 160 bytes of message storage each, queues consume roughly 1.6-2.4 KB before counting their control structures, while notifications use no memory beyond the TCB.
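The footprint arithmetic can be sketched as follows, using the 8 × 20-byte queue from the example above. The `control_bytes` parameter is an assumption standing in for the size of the queue's control structure, which depends on the port and configuration; pass 0 to count message storage only.

```c
#include <assert.h>
#include <stddef.h>

/* Bytes of message storage for one queue: length × item size. */
static size_t queue_storage_bytes(size_t length, size_t item_size)
{
    assert(item_size > 0u);
    return length * item_size;
}

/* Total bytes for a pipeline of identical queues. control_bytes is a
 * hypothetical per-queue overhead for the queue's control structure. */
static size_t pipeline_queue_bytes(size_t channels, size_t length,
                                   size_t item_size, size_t control_bytes)
{
    return channels * (queue_storage_bytes(length, item_size) + control_bytes);
}
```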

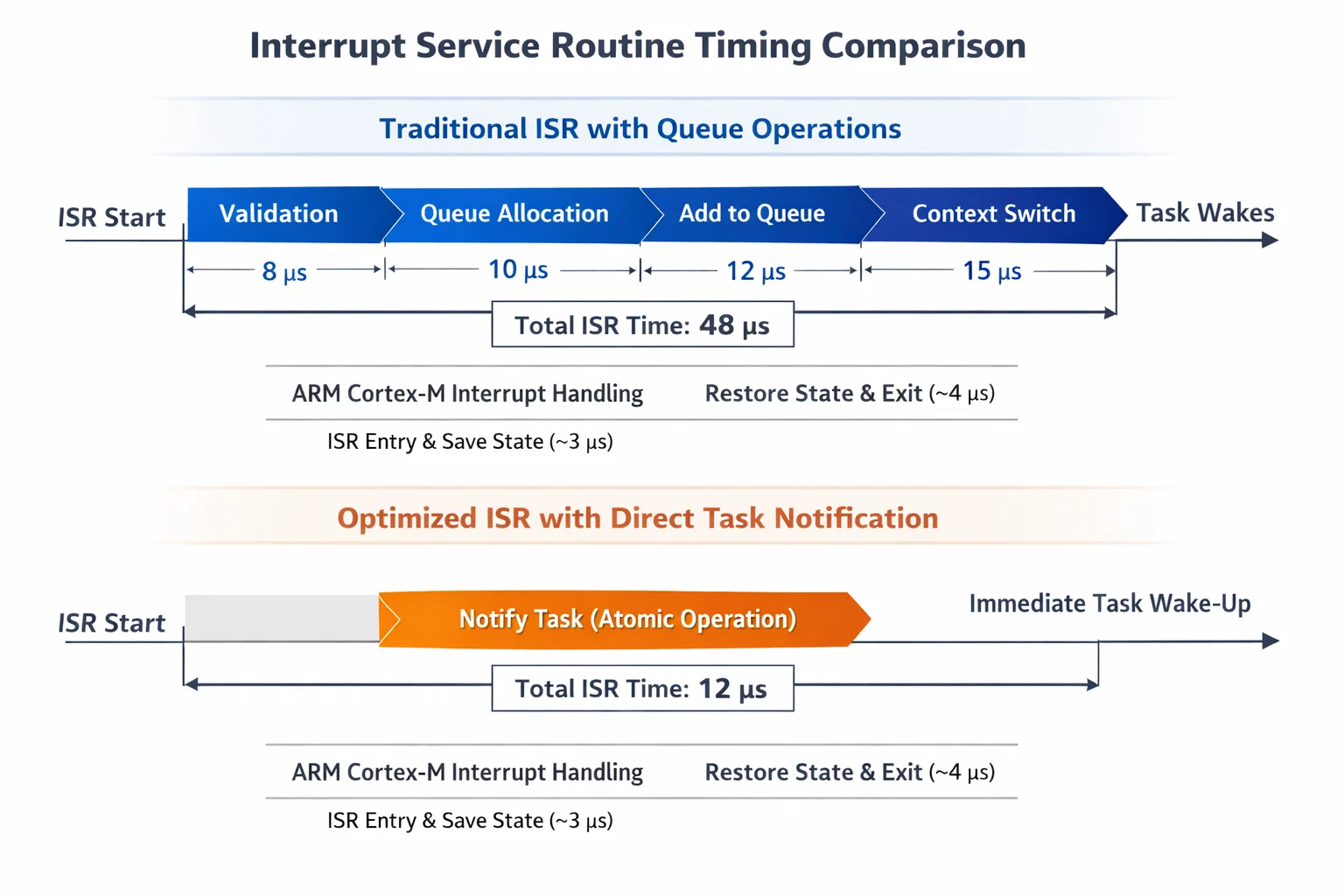

Latency Measurements

Our performance instrumentation reveals striking latency differences:

- Queue Operations: 15-25μs average latency (including copy operations)

- Direct Notifications: 2-5μs average latency (atomic operations only)

This roughly 5x improvement matters in time-sensitive applications. Reducing ITC latency from 25μs to 5μs saves 20μs per operation, so for a 30 FPS camera system the gain within each 33.3 ms frame scales with the number of ITC operations: a pipeline performing 100 operations per frame recovers about 2 ms, headroom that can go to additional processing or higher frame rates.
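The per-frame saving depends on how many ITC operations a frame involves. A short host-side sketch of that arithmetic follows; the function names are hypothetical and the operation counts are placeholders to replace with your own figures.

```c
#include <assert.h>
#include <stdint.h>

/* Microseconds saved per frame when every ITC operation drops from
 * queue_us to notify_us. */
static uint32_t saved_us_per_frame(uint32_t ops_per_frame,
                                   uint32_t queue_us, uint32_t notify_us)
{
    assert(queue_us >= notify_us);
    return ops_per_frame * (queue_us - notify_us);
}

/* Number of operations per frame needed to reach a target saving,
 * rounded up. */
static uint32_t ops_needed_for_saving(uint32_t target_us,
                                      uint32_t queue_us, uint32_t notify_us)
{
    assert(queue_us > notify_us);
    uint32_t per_op = queue_us - notify_us;
    return (target_us + per_op - 1u) / per_op;
}
```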

Context Switch Overhead

Task notifications excel in interrupt-driven scenarios. Sending to a queue from an ISR copies the message into queue storage and updates the queue's bookkeeping, whereas a notification only updates a value in the target task's TCB. The calling pattern in the ISR is the same for both:

// Queue from ISR - copies the message into queue storage

BaseType_t xHigherPriorityTaskWoken = pdFALSE;

xQueueSendFromISR(queue, &message, &xHigherPriorityTaskWoken);

portYIELD_FROM_ISR(xHigherPriorityTaskWoken);

// Direct notification from ISR - increments the task's notification value, no payload copy

BaseType_t xHigherPriorityTaskWoken = pdFALSE;

vTaskNotifyGiveFromISR(task_handle, &xHigherPriorityTaskWoken);

portYIELD_FROM_ISR(xHigherPriorityTaskWoken);

Architectural Trade-offs

When to Choose Direct Task Notifications

Ideal Scenarios:

- Simple Signaling: Boolean events, counters, or completion flags

- High-Frequency Operations: Interrupt-driven processing pipelines

- Memory-Constrained Systems: Where every byte matters

- One-to-One Communication: Direct producer-consumer relationships

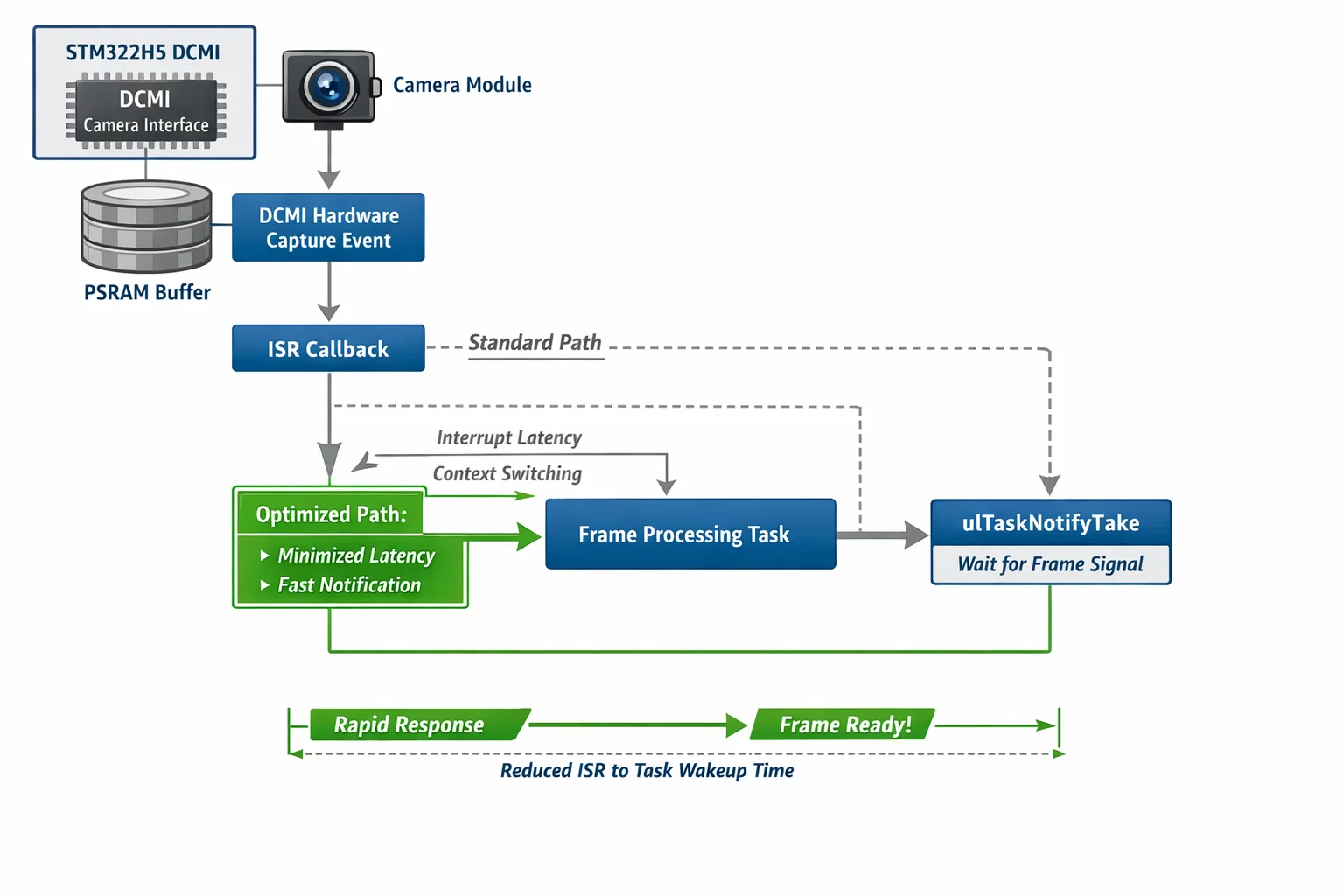

Real Implementation Example:

In our camera system, frame capture completion uses direct notifications to minimize ISR latency:

// Frame processing task waits for notification

void frame_processing_task(void *pvParameters) {

for (;;) {

ulTaskNotifyTake(pdTRUE, portMAX_DELAY);

// Process frame immediately

}

}

// ISR notifies completion with minimal overhead

void HAL_DCMI_FrameEventCallback(DCMI_HandleTypeDef *hdcmi) {

BaseType_t xHigherPriorityTaskWoken = pdFALSE;

vTaskNotifyGiveFromISR(frame_processing_task_handle,

&xHigherPriorityTaskWoken);

portYIELD_FROM_ISR(xHigherPriorityTaskWoken);

}

When Queues Remain Essential

Critical Requirements:

- Complex Data Structures: Multi-field messages or variable-length data

- Buffering: Handling burst traffic or producer-consumer rate mismatches

- Multiple Producers: Several tasks contributing to the same processing pipeline

- Message Ordering: FIFO guarantees for sequential processing

Implementation Example:

Storage systems benefit from queues when multiple sensors generate data asynchronously:

typedef struct {

uint32_t sensor_id;

uint32_t timestamp;

uint8_t *data_buffer;

size_t data_length;

} sensor_data_t;

QueueHandle_t storage_queue = xQueueCreate(16, sizeof(sensor_data_t));
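One consequence of this message layout is worth spelling out: a queue copies the struct by value, so only the `data_buffer` pointer is copied, not the bytes it points to. The host-side sketch below models that flat copy with `memcpy`; `copy_like_queue` is a hypothetical helper, not a FreeRTOS call.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

typedef struct {
    uint32_t sensor_id;
    uint32_t timestamp;
    uint8_t *data_buffer;
    size_t data_length;
} sensor_data_t;

/* Models what a by-value queue copy does to a message: a flat copy of
 * the struct. The pointer is duplicated; the payload is not. */
static sensor_data_t copy_like_queue(const sensor_data_t *msg)
{
    assert(msg != NULL);
    sensor_data_t copy;
    memcpy(&copy, msg, sizeof copy);
    return copy;
}
```

The producer therefore has to keep the buffer valid and unmodified until the consumer has finished with it, for instance by handing out buffers from a pool.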

Optimization Strategies

Hybrid Approaches

Sophisticated systems often combine both mechanisms strategically. Fast notification paths handle time-critical signaling, while queues manage complex data flows:

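// One flag is shared by both calls; each FromISR call only ever sets it to pdTRUE

BaseType_t xHigherPriorityTaskWoken = pdFALSE;

// Fast path: immediate processing notification

vTaskNotifyGiveFromISR(urgent_task_handle, &xHigherPriorityTaskWoken);

// Slow path: detailed processing through queue

xQueueSendFromISR(processing_queue, &complex_message, &xHigherPriorityTaskWoken);

// Request a context switch on ISR exit if either call woke a higher-priority task

portYIELD_FROM_ISR(xHigherPriorityTaskWoken);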

Performance Tuning Guidelines

- Profile Before Optimizing: Measure actual latencies on your target hardware

- Consider System Load: Performance characteristics change under different CPU utilization

- Memory vs Speed Trade-offs: Direct notifications save memory but limit functionality

- Scalability Planning: Design communication patterns that accommodate future requirements
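For the profiling step, one common approach on Cortex-M parts is to read a free-running 32-bit cycle counter before and after the operation and convert the difference to time. The sketch below covers only the arithmetic and runs on a host. It assumes such a counter is available on your part (the DWT cycle counter is a typical candidate), and the function names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Elapsed cycles between two reads of a free-running 32-bit counter.
 * Unsigned subtraction gives the right answer across one wraparound. */
static uint32_t elapsed_cycles(uint32_t start, uint32_t end)
{
    return (uint32_t)(end - start);
}

/* Converts a cycle count to nanoseconds for a given core clock. */
static uint64_t cycles_to_ns(uint32_t cycles, uint32_t cpu_hz)
{
    assert(cpu_hz > 0u);
    return ((uint64_t)cycles * 1000000000ull) / cpu_hz;
}
```

At 250 MHz one cycle is 4 ns, so a 32-bit counter wraps about every 17 seconds, which is far longer than any single ITC operation being measured.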

Hoomanely's Technical Innovation

These performance optimizations directly support Hoomanely's mission to revolutionize pet healthcare through continuous monitoring. In pet health sensing applications, rapid response to physiological changes can be life-critical. By implementing optimized ITC patterns, sensor systems can achieve sub-millisecond response times for emergency detection while maintaining energy efficiency for 24/7 operation.

Hoomanely's edge AI processing relies on these high-performance communication patterns to deliver real-time health insights. The combination of direct task notifications for critical signaling and selective queue usage for complex data processing enables their biosense AI engine to process multiple sensor streams simultaneously without compromising response times.

Key Takeaways

Performance Implications:

- Direct task notifications provide roughly 5x lower latency for simple signaling

- Memory savings of 100+ bytes per communication channel

- Optimized ISR performance for interrupt-driven systems

Design Decisions:

- Choose notifications for simple, high-frequency signaling

- Reserve queues for complex data structures and multi-producer scenarios

- Consider hybrid approaches for comprehensive system optimization

Implementation Guidelines:

- Profile actual performance in target hardware conditions

- Design communication patterns to match data complexity requirements

- Balance memory efficiency with functional requirements

The choice between queues and direct task notifications represents a fundamental trade-off in embedded systems design. Understanding these performance characteristics enables developers to build responsive, efficient systems that meet the demanding requirements of modern IoT and edge computing applications. Whether building the next generation of health monitoring devices or industrial sensor networks, these optimization strategies form the foundation of high-performance embedded software architecture.