When Optimization Breaks: The Debug vs Release Performance Paradox

In embedded systems development, there's a particularly frustrating class of bugs that most developers eventually encounter: code that works perfectly in debug mode but mysteriously fails when compiled for release. At Hoomanely, where we develop precision pet health monitoring devices with clinical-grade accuracy, this phenomenon has taught us valuable lessons about optimization trade-offs that directly impact our mission-critical IoT systems.

The Optimization Divide

Our edge AI devices capture and process thousands of sensor readings per minute, transmitting vital health data to our cloud infrastructure. When developing these systems, we routinely encounter the classic embedded systems challenge: debug builds (-O0) work flawlessly, but release builds (-Os) introduce subtle failures that can compromise data integrity.

Understanding the Fundamental Difference

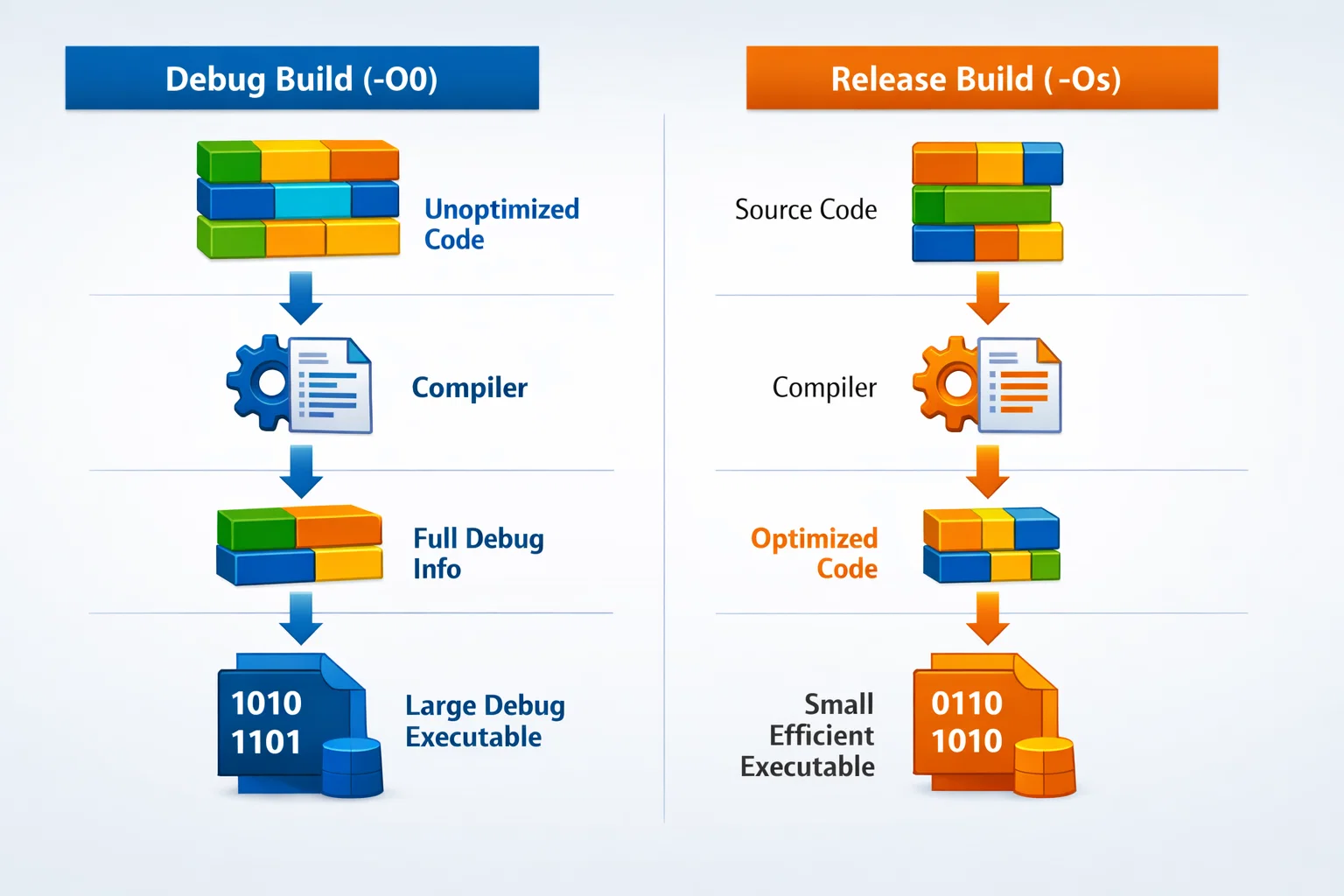

The distinction between debug and release optimizations runs deeper than simple performance gains. Debug builds prioritize developer experience and debugging capability, while release builds focus on runtime efficiency and binary size reduction. This philosophical divide creates distinct execution environments that can expose latent bugs.

Debug Mode Characteristics (-O0 -g3):

- No optimization transformations

- Full debugging symbols retained

- Predictable execution order

- Stack frame preservation

- Local variables kept in memory for their whole scope (a side effect of -O0, not a language guarantee)

Release Mode Characteristics (-Os -g0):

- Aggressive size optimization

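- Function inlining where it does not grow the code (unlike -O3, -Os generally avoids size-increasing transformations such as loop unrolling)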

- Dead code elimination

- Register allocation optimization

- Stack slot reuse and reordering of local variables

Real-World Case Study: Memory Management in Pet Monitoring Devices

Our systems process multiple data streams simultaneously – thermal imaging, accelerometry, and environmental sensors. During development, we encountered a challenging optimization bug that perfectly illustrates this phenomenon.

The Problem Manifestation

In debug mode, our external PSRAM (Pseudo-Static Random Access Memory) operations executed flawlessly. The following code pattern worked consistently:

```c
// PSRAM test pattern that worked in debug
uint8_t testPattern[4096];
uint8_t readBuffer[4096];

// Fill test pattern
for (uint32_t i = 0; i < sizeof(testPattern); i++) {
    testPattern[i] = (uint8_t)(i & 0xFF);
}

// Direct PSRAM write
for (uint32_t i = 0; i < sizeof(testPattern); i++) {
    *((__IO uint8_t *)(PSRAM_BASE_ADDRESS + i)) = testPattern[i];
}
```

However, when the same code was compiled with -Os, data corruption occurred sporadically. It showed up as incorrect sensor readings that could affect our health monitoring accuracy.

Root Cause Analysis

The optimization-related failure stemmed from multiple factors:

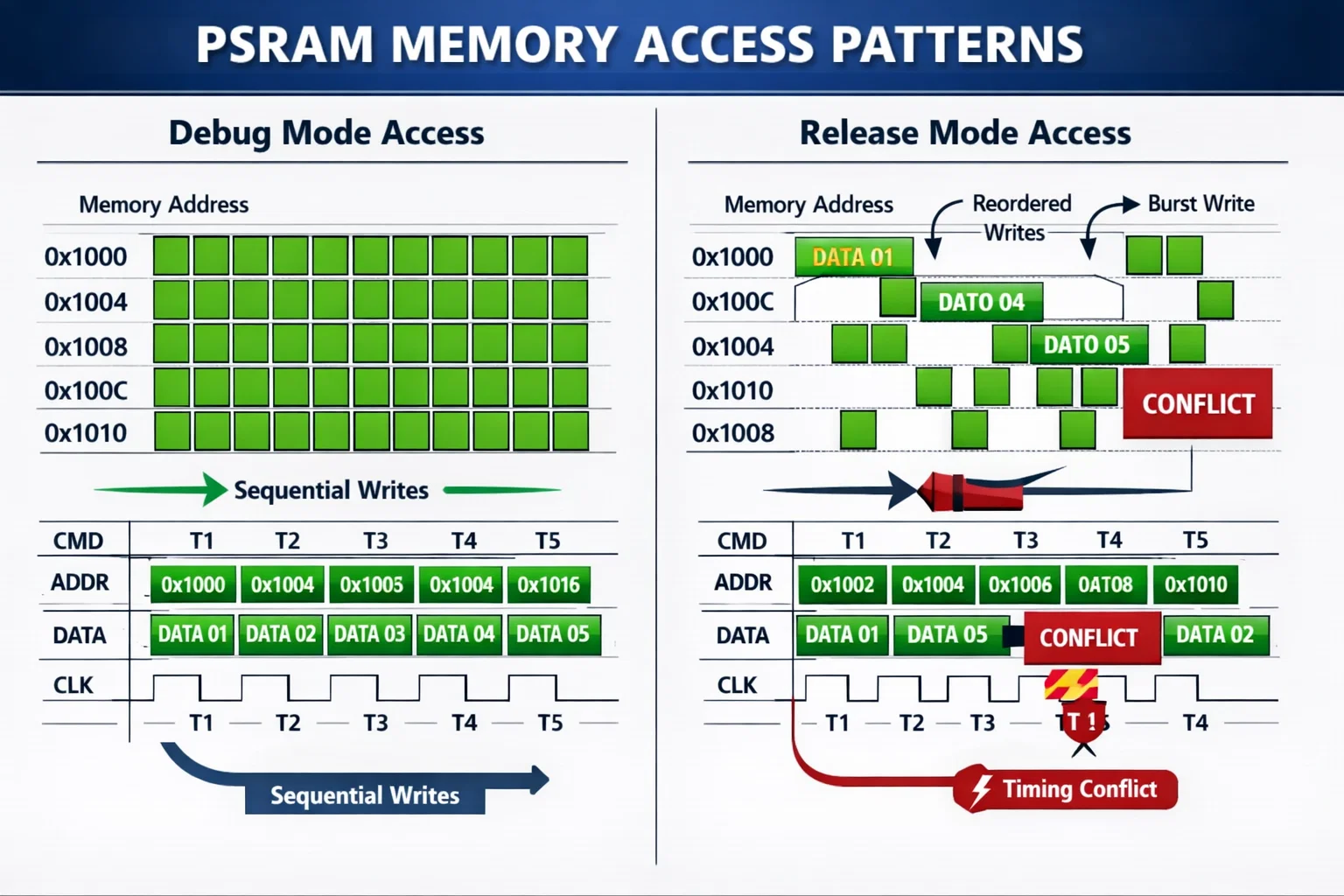

1. Memory Access Timing

Release optimization tightened the write loop and was free to reorder the surrounding non-volatile accesses, so write transactions reached the PSRAM interface faster than it could reliably handle. Debug mode's unoptimized execution added enough instruction overhead between writes to mask this hardware constraint.

2. Volatile Qualifier Omission

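Where accesses to the PSRAM window went through pointers that were not volatile-qualified, the compiler optimized away reads it considered redundant, assuming the memory content remained static. Our memory-mapped PSRAM interface required volatile semantics to ensure that every access actually reached the hardware.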

3. Stack Layout Changes

Optimization altered local variable placement, changing cache behavior and memory alignment, which affected DMA transfer reliability.
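
One common way to make DMA buffers insensitive to stack layout changes is to move them off the stack and fix their alignment explicitly. The following is a minimal sketch of that idea, not the fix used in our firmware. It assumes a 32-byte cache line (check the reference manual for the actual part), and the names `DMA_ALIGNMENT`, `dma_rx_buffer`, `dma_rx_buffer_get`, and `is_dma_aligned` are hypothetical.

```c
#include <assert.h>
#include <stdalign.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Assumed cache-line size; this is an illustration value, not a datasheet figure. */
#define DMA_ALIGNMENT   32u
#define DMA_BUFFER_SIZE 4096u

/* The buffer length must be a whole number of cache lines. */
static_assert(DMA_BUFFER_SIZE % DMA_ALIGNMENT == 0u,
              "DMA buffer size must be a multiple of the alignment");

/* Static storage with explicit alignment: placement no longer depends on
 * how the optimizer lays out a function's stack frame. */
static alignas(DMA_ALIGNMENT) uint8_t dma_rx_buffer[DMA_BUFFER_SIZE];

/* Returns true when both the start address and the length are multiples
 * of DMA_ALIGNMENT. */
bool is_dma_aligned(const void *addr, size_t len)
{
    return ((uintptr_t)addr % DMA_ALIGNMENT == 0u) &&
           (len % DMA_ALIGNMENT == 0u);
}

/* Accessor for the (hypothetical) receive buffer. */
uint8_t *dma_rx_buffer_get(void)
{
    return dma_rx_buffer;
}
```

A driver could call `is_dma_aligned()` on every buffer before starting a transfer, so that a misplaced buffer fails loudly in all build modes instead of only under -Os.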

The Solution Strategy

We implemented a multi-layered approach:

Memory Barrier Implementation:

```c
// Added memory barriers for hardware synchronization
__DSB(); // Data Synchronization Barrier
__ISB(); // Instruction Synchronization Barrier
```
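
Barriers matter most at the point where software hands data over to hardware. The sketch below places one at the end of a block write. `HW_BARRIER`, `USE_CMSIS_BARRIERS`, and `hw_write_block` are hypothetical names. On target, the macro is assumed to expand to the CMSIS intrinsics shown above. The host fallback uses a C11 fence so the example compiles off-target, but that fence only orders CPU accesses and is not a substitute for `__DSB()` on real hardware.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#ifdef USE_CMSIS_BARRIERS
/* Hypothetical switch: the target build defines it after including the
 * CMSIS device header that provides __DSB() and __ISB(). */
#define HW_BARRIER() do { __DSB(); __ISB(); } while (0)
#else
/* Host fallback so the sketch compiles and runs off-target. */
#include <stdatomic.h>
#define HW_BARRIER() atomic_thread_fence(memory_order_seq_cst)
#endif

/* Copy a block to a memory-mapped destination, then issue the barrier so
 * the writes are ordered before whatever hardware step the caller starts next. */
void hw_write_block(volatile uint8_t *dst, const uint8_t *src, size_t len)
{
    assert(dst != NULL && src != NULL);

    for (size_t i = 0; i < len; i++) {
        dst[i] = src[i];   /* volatile store: emitted once, in program order */
    }
    HW_BARRIER();
}
```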

Proper Volatile Usage:

```c
// Corrected memory-mapped access
volatile uint8_t* psram_ptr = (volatile uint8_t*)PSRAM_BASE_ADDRESS;
*psram_ptr = test_value;
```
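
To check that volatile access behaves the same at every optimization level, the write can be paired with a read-back. This is a minimal sketch. `psram_pattern_test` is a hypothetical helper, and it takes the base address as a parameter so the same code can run against an ordinary array on a host machine.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Write an incrementing byte pattern through a volatile pointer, then read
 * it back. Returns the number of mismatching bytes (0 means pass). */
size_t psram_pattern_test(volatile uint8_t *base, size_t len)
{
    assert(base != NULL);
    size_t mismatches = 0;

    for (size_t i = 0; i < len; i++) {
        base[i] = (uint8_t)(i & 0xFFu);
    }

    for (size_t i = 0; i < len; i++) {
        /* volatile forces a real load; the compiler may not reuse the
         * value it stored in the first loop. */
        if (base[i] != (uint8_t)(i & 0xFFu)) {
            mismatches++;
        }
    }
    return mismatches;
}
```

On target, the call would look like `psram_pattern_test((volatile uint8_t *)PSRAM_BASE_ADDRESS, 4096)`. Note that volatile only constrains the compiler. If a data cache sits in front of the PSRAM, the read-back may still be served from cache.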

Optimization-Aware Design:

- Implemented hardware abstraction layers that work consistently across optimization levels

- Added explicit timing controls independent of compiler optimization

- Used function attributes to control optimization on critical code sections
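
As an illustration of the last point, the sketch below exempts a single function from optimization. `NO_OPTIMIZE` and `psram_copy_unoptimized` are hypothetical names. The attributes themselves are compiler-specific: `optimize("O0")` is a GCC attribute and `optnone` is its Clang counterpart. GCC's documentation describes `optimize` as intended mainly for debugging, so treat this as a diagnostic aid or a stopgap, not a guarantee of timing.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Per-function opt-out from optimization; expands to nothing on
 * compilers that support neither attribute. */
#if defined(__clang__)
#define NO_OPTIMIZE __attribute__((optnone))
#elif defined(__GNUC__)
#define NO_OPTIMIZE __attribute__((optimize("O0")))
#else
#define NO_OPTIMIZE
#endif

/* Byte-wise copy into a memory-mapped region, compiled without
 * optimization even when the rest of the image is built with -Os. */
NO_OPTIMIZE
void psram_copy_unoptimized(volatile uint8_t *dst, const uint8_t *src, size_t len)
{
    assert(dst != NULL && src != NULL);

    for (size_t i = 0; i < len; i++) {
        dst[i] = src[i];
    }
}
```

If a routine only works with this attribute applied, that is a strong hint that it depends on timing or on a missing volatile or barrier, and that the underlying cause still needs fixing.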

The Performance vs Reliability Balance

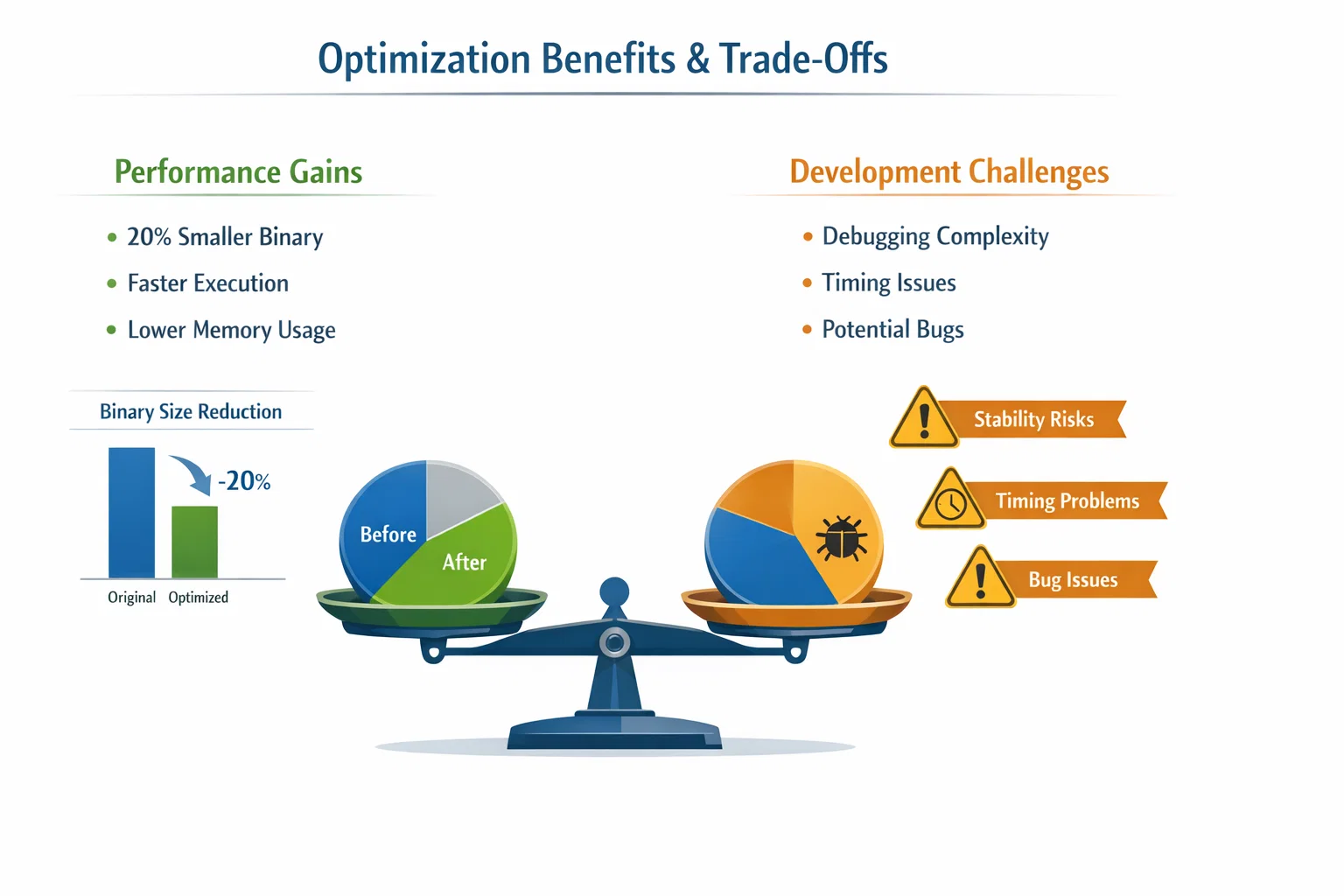

Our optimization journey revealed that aggressive size optimization (-Os) delivered significant benefits for our resource-constrained devices:

Binary Size Reduction: ~20%

- Smaller code footprint allows more space for sensor data buffering

- Fewer flash pages erased and written per firmware update thanks to smaller program images

- Lower memory requirements enable more sophisticated AI algorithms

Link-Time Optimization (LTO) Benefits:

- Cross-module optimization eliminated redundant function calls

- Improved inlining decisions across compilation unit boundaries

- Better constant propagation through the entire program

However, these gains came with debugging complexity and potential stability risks that required careful mitigation.

Best Practices for Optimization-Resilient Code

Through our development process, we established several principles:

1. Hardware Interface Discipline

Always use volatile qualifiers for memory-mapped registers and ensure proper memory barriers around hardware operations.

2. Timing-Independent Logic

Avoid relying on execution timing for correctness. Use explicit synchronization mechanisms instead of depending on debug mode's slower execution.
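
One way to express this is to poll a status flag against an explicit tick source instead of relying on how long a loop happens to take. This is a minimal sketch: `wait_for_flag` and `tick_source_fn` are hypothetical names, and `fake_tick` is a host stand-in for a hardware counter (for example one driven by a timer interrupt) so the example runs off-target.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical tick source: returns a free-running, wrapping counter. */
typedef uint32_t (*tick_source_fn)(void);

/* Host stand-in for a hardware tick counter: advances by one per call. */
static uint32_t fake_tick_count;
static uint32_t fake_tick(void)
{
    return fake_tick_count++;
}

/* Poll until (*status_reg & ready_mask) is non-zero or timeout_ticks have
 * elapsed. Returns true if the flag was seen, false on timeout. */
bool wait_for_flag(volatile const uint32_t *status_reg, uint32_t ready_mask,
                   tick_source_fn now, uint32_t timeout_ticks)
{
    assert(status_reg != NULL && now != NULL);
    const uint32_t start = now();

    for (;;) {
        if ((*status_reg & ready_mask) != 0u) {
            return true;
        }
        /* Unsigned subtraction stays correct when the counter wraps. */
        if ((uint32_t)(now() - start) >= timeout_ticks) {
            return false;
        }
    }
}
```

Because the timeout is measured in ticks, not loop iterations, the function behaves the same whether the loop body compiles to three instructions or thirty.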

3. Optimization Testing Strategy

- Test critical paths at the release optimization level (-Os in our case) early in development

- Use static analysis tools to identify potential optimization-sensitive code

- Implement comprehensive automated testing across all optimization levels
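
A self-test that reports which optimization mode it was built with makes it easier to confirm that each level was actually exercised. This is a minimal sketch, assuming GCC or Clang, which predefine `__OPTIMIZE__` and `__OPTIMIZE_SIZE__`. The function names and the checksum check are hypothetical placeholders for real test content.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdio.h>
#include <string.h>

/* Label for the optimization mode this translation unit was built with. */
const char *build_optimization_mode(void)
{
#if defined(__OPTIMIZE_SIZE__)
    return "size";   /* -Os or -Oz */
#elif defined(__OPTIMIZE__)
    return "speed";  /* any other optimizing level, such as -O1 or -O2 */
#else
    return "none";   /* -O0 */
#endif
}

/* Simple additive checksum used as the behavior under test. */
uint32_t checksum(const uint8_t *data, size_t len)
{
    assert(data != NULL);
    uint32_t sum = 0;
    for (size_t i = 0; i < len; i++) {
        sum += data[i];
    }
    return sum;
}

/* Runs the check and logs the build mode. Returns the number of failures. */
int run_self_test(void)
{
    static const uint8_t sample[] = { 0x01, 0x02, 0x03, 0xFA };
    int failures = (checksum(sample, sizeof sample) == 0x100u) ? 0 : 1;

    printf("self-test [%s]: %s\n", build_optimization_mode(),
           failures ? "FAIL" : "PASS");
    return failures;
}
```

Building the same source once per level (for example with -O0, -O1, and -Os) and running `run_self_test()` in each build gives a simple regression check across optimization modes.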

4. Progressive Optimization Approach

- Start with -O1 to identify basic optimization issues

- Graduate to -Os only after thorough validation

- Use function-level optimization attributes to fine-tune critical sections

Our proactive approach to optimization challenges reinforces our commitment to precision healthcare technology. By solving these fundamental engineering problems, we create a more robust foundation for our pet health ecosystem, ensuring that every sensor reading, every thermal image, and every behavioral pattern contributes to longer, healthier lives for pets.

Key Takeaways

The debug vs release performance paradox teaches us that optimization is not merely about speed – it's about understanding the fundamental assumptions our code makes about execution environment. Success requires:

- Disciplined hardware interface design that remains consistent across optimization levels

- Comprehensive testing strategies that validate behavior under all compilation modes

- Proactive optimization planning rather than reactive debugging

For embedded systems developers working on precision applications, these lessons are particularly crucial. The cost of optimization-induced bugs in healthcare monitoring systems extends beyond development time – it directly impacts the reliability of life-critical functionality.

In our journey to transform pet healthcare through technology, mastering these optimization challenges represents more than technical excellence. It demonstrates our commitment to the foundational engineering discipline that enables truly reliable IoT health monitoring systems.