Aligning the Invisible: The Science Behind Stereo Camera Sensor Calibration

Introduction

When we look at an object, our brain effortlessly merges two slightly different images from our eyes to perceive depth and distance. Replicating this ability in machines, however, is anything but simple. At Hoomanely, where we design intelligent pet wellness systems, stereo vision plays a crucial role in understanding depth, shape, and spatial relationships. From mapping a pet’s movement to estimating distance and gaze, accurate stereo alignment forms the foundation of reliable perception.

Stereo camera calibration is the science of teaching two sensors to see as one. Through intrinsic and extrinsic calibration, pixel fusion, and proximity correction, we transform raw, misaligned data into precise 3D reconstructions that capture the world with high fidelity. This post dives deep into the math, engineering, and intuition behind aligning what’s invisible.

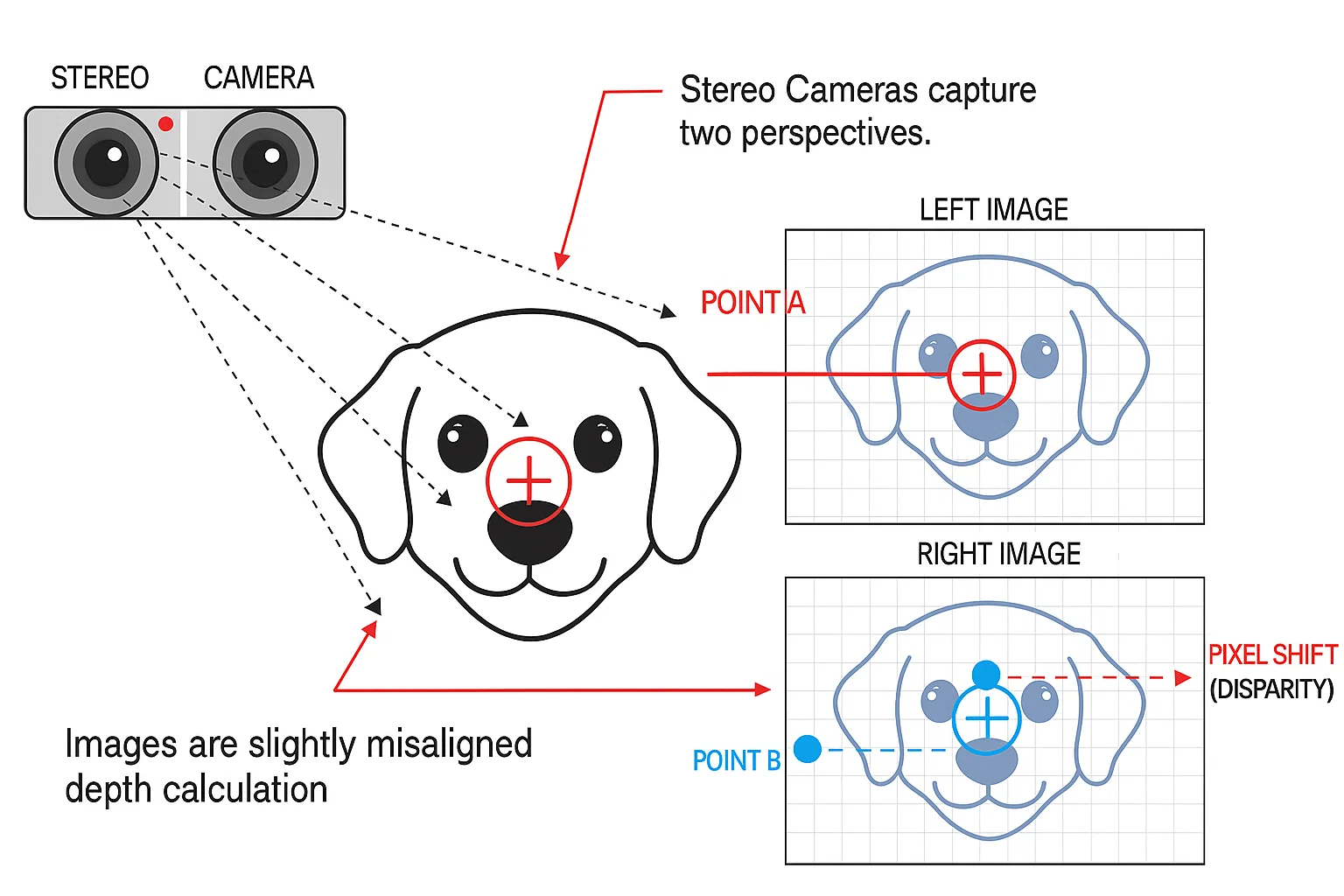

1. The Problem: Two Eyes, Two Realities

Every camera has its own personality - a unique lens curvature, sensor size, focal length, and optical center. Even if two cameras are rigidly mounted, they don’t agree on what a point in space looks like. For instance, a single pixel representing a dog’s left eye in the left frame might correspond to a slightly offset pixel in the right frame. Part of that offset is the disparity we want to measure; the rest is misalignment between the sensors. That misalignment - often just a few pixels - can completely distort depth perception.

In our case, accurate alignment was critical. A 2–3 pixel mismatch could mean the difference between smooth depth reconstruction and jittery, unreliable data.

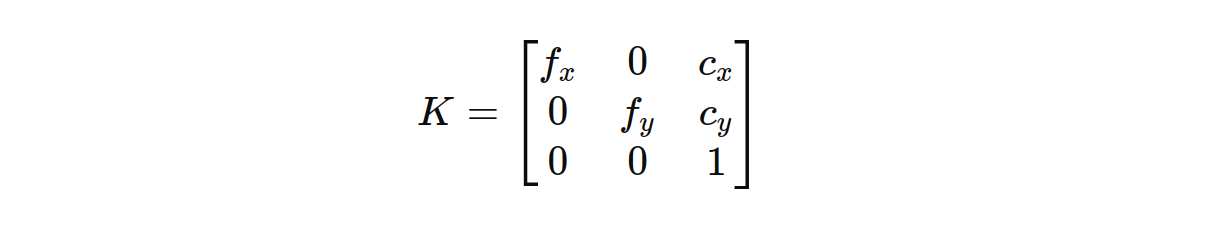

2. Intrinsic Calibration: Teaching Each Camera About Itself

Intrinsic calibration helps us understand the internal geometry of a camera - its focal length, optical center, and lens distortion. We start by photographing a checkerboard pattern at various angles and distances. Using OpenCV’s calibration routines, we solve for each camera’s intrinsic matrix, shown here in its standard zero-skew form:

$$
K = \begin{bmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix}
$$

Here, $f_x$ and $f_y$ are the focal lengths (in pixel units), and $(c_x, c_y)$ is the optical center, also in pixels. The same routines estimate the lens distortion coefficients; correcting for them ensures that straight lines in the real world remain straight in the image.
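To make the role of the intrinsic parameters concrete, here is a minimal sketch of how they map a 3D point in camera coordinates to a pixel. The numeric values are made up for illustration and are not taken from our calibration; the `project` helper is hypothetical, and lens distortion is ignored.

```python
# Illustrative intrinsics (assumed values, not from a real calibration).
FX, FY = 800.0, 800.0   # focal lengths in pixels
CX, CY = 320.0, 240.0   # optical center in pixels


def project(point_cam, fx=FX, fy=FY, cx=CX, cy=CY):
    """Project a 3D point (camera coordinates, meters) to pixel coordinates.

    This is the pinhole model: multiply by the intrinsic matrix
    [[fx, 0, cx], [0, fy, cy], [0, 0, 1]] and divide by depth.
    Lens distortion is not modeled here.
    """
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point must be in front of the camera (z > 0)")
    u = fx * x / z + cx
    v = fy * y / z + cy
    return u, v


# A point on the optical axis lands on the optical center.
center_pixel = project((0.0, 0.0, 2.0))

# A point 10 cm to the side at 1 m depth moves 80 px from the center column.
offset_pixel = project((0.10, -0.05, 1.0))
```

Note how the focal length acts as a scale factor between metric offsets and pixel offsets: a small error in $f_x$ or $c_x$ shifts every projected point, which is why each camera is calibrated individually before the pair is aligned.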

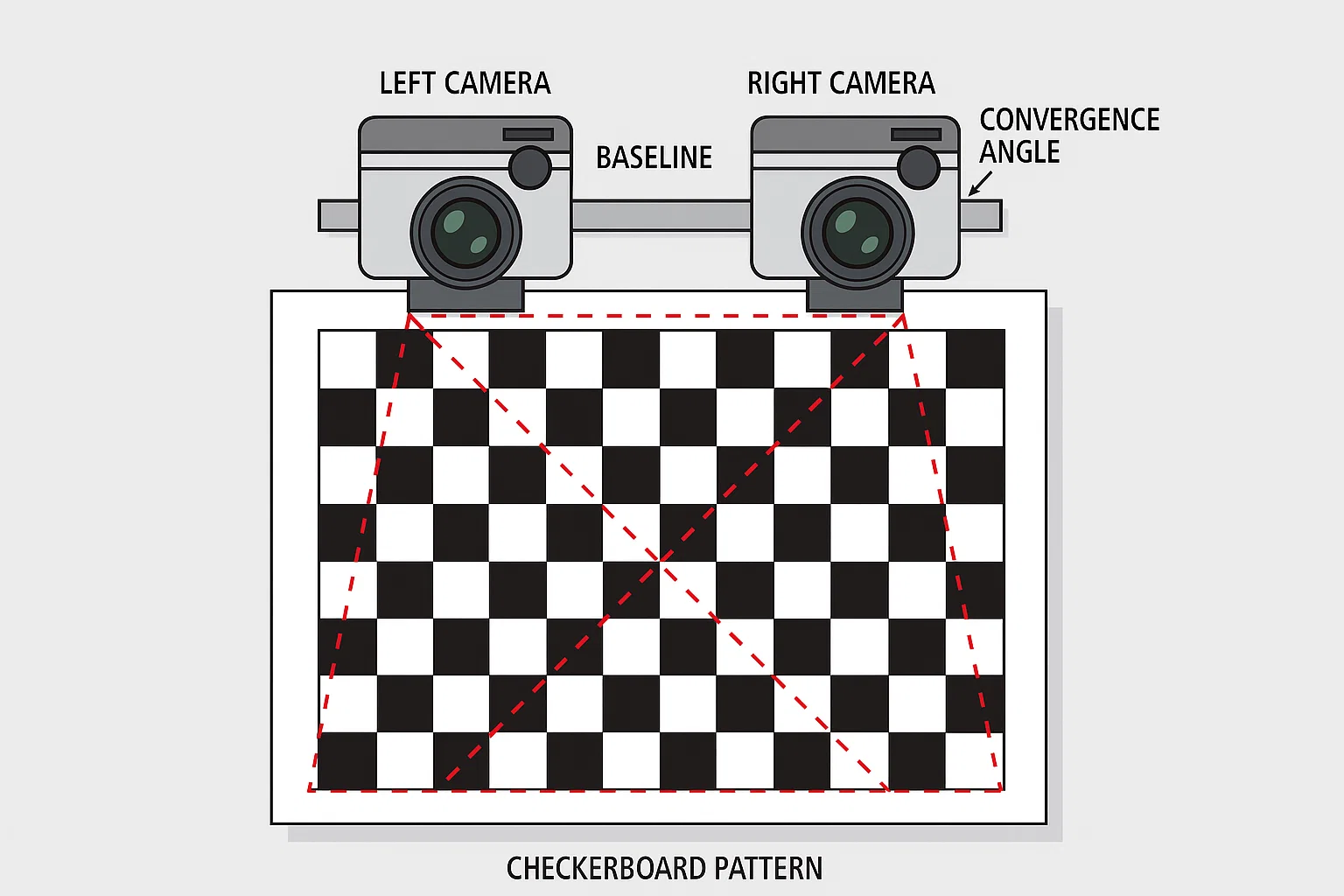

3. Extrinsic Calibration: Teaching the Cameras About Each Other

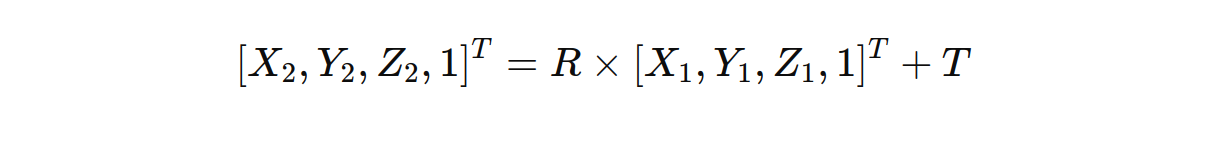

Once we know how each camera behaves individually, the next challenge is to find their spatial relationship - rotation (R) and translation (T) between them. This is extrinsic calibration, and it’s what allows one camera’s coordinate system to be mapped onto the other.

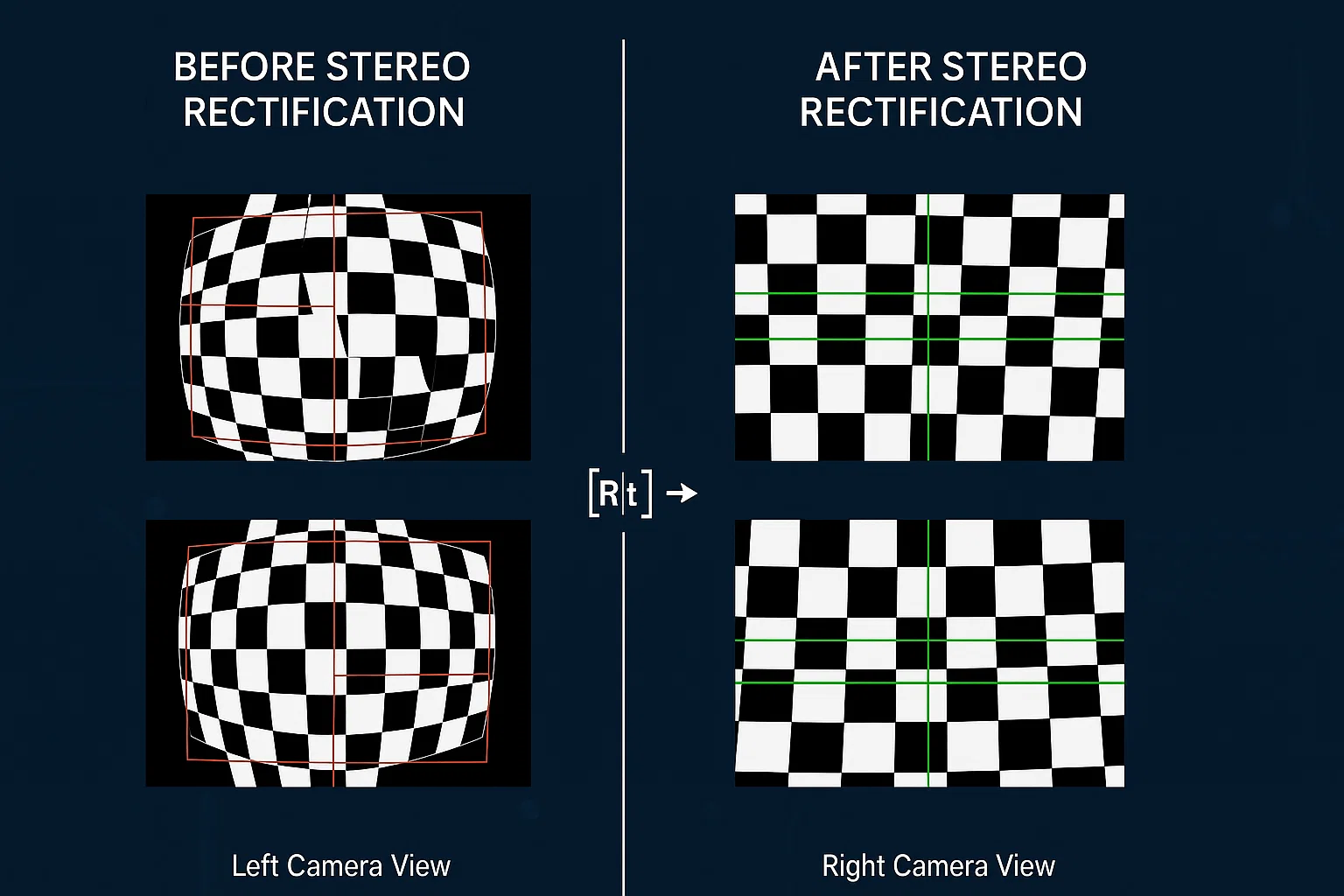

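For our setup, we used a dual-sensor rig. By simultaneously capturing checkerboard images from both cameras, we computed the rigid transformation between them, written here in homogeneous form:

$$
M = \begin{bmatrix} R & T \\ \mathbf{0}^\top & 1 \end{bmatrix}
$$

where $R$ is a 3×3 rotation matrix and $T$ is a 3×1 translation vector, so a point $X$ expressed in one camera’s coordinates maps into the other’s as $X' = R X + T$.

This relationship enables stereo rectification - aligning the two image planes so that corresponding points lie on the same horizontal lines.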
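As a small illustration of what the rotation and translation do, the sketch below applies them to a single 3D point. The rig values are hypothetical (parallel cameras 6 cm apart), and the direction of the mapping, from left-camera coordinates into the right camera's frame, is an assumption chosen for the example.

```python
def transform_point(R, T, p):
    """Apply X' = R * X + T using plain lists (R is 3x3, T and p have length 3)."""
    return tuple(
        sum(R[i][j] * p[j] for j in range(3)) + T[i]
        for i in range(3)
    )


# Hypothetical rig: no relative rotation, 6 cm baseline along the x axis.
R_IDENTITY = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
]
T_BASELINE = [-0.06, 0.0, 0.0]

# A point 10 cm right of the left camera's axis, 1 m away.
point_left = (0.10, 0.0, 1.0)

# The same physical point, expressed in the right camera's coordinates.
point_right = transform_point(R_IDENTITY, T_BASELINE, point_left)
```

In a real rig the rotation is never exactly the identity, which is why rectification is needed before corresponding points can be searched for along a single image row.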

4. Pixel Fusion: Making Two Images One

With calibration complete, we can now merge both views into a unified coordinate space. This pixel fusion step transforms points from one image into the other camera’s frame using the calibration matrices. Each point is projected and matched to compute a disparity map, which encodes depth information.
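For a rectified pair, the link between disparity and depth is the standard relation Z = f · B / d, where f is the focal length in pixels, B the baseline, and d the disparity in pixels. The sketch below uses assumed values (800 px focal length, 6 cm baseline) to show how strongly a small disparity error affects depth; the helper name is hypothetical.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Depth in meters from disparity in a rectified stereo pair: Z = f * B / d.

    Returns None for non-positive disparity, where depth is undefined.
    """
    if disparity_px <= 0:
        return None
    return focal_px * baseline_m / disparity_px


FOCAL_PX = 800.0     # assumed focal length in pixels
BASELINE_M = 0.06    # assumed distance between the cameras in meters

# Depth for a correctly matched point with 48 px of disparity (about 1 m).
depth_true = depth_from_disparity(48.0, FOCAL_PX, BASELINE_M)

# The same point if misalignment removes 2 px of disparity.
depth_off = depth_from_disparity(46.0, FOCAL_PX, BASELINE_M)

# Relative depth error caused by the 2 px mismatch (a little over 4%).
relative_error = (depth_off - depth_true) / depth_true
```

Because depth is inversely proportional to disparity, the same pixel error costs more depth accuracy the farther away the object is.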

However, real-world conditions - like small shifts in sensor mount or lens expansion - introduce residual misalignments. To correct these, we introduced a proximity-based correction mechanism. Using data from our proximity sensor, we dynamically adjust the alignment scale to maintain consistency across varying object distances.
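One plausible way to think about distance-dependent alignment is sketched below. It is not our production mechanism: it only assumes a rectified pair, a known distance reading, and the same made-up focal length and baseline as an example rig. All function names are hypothetical.

```python
def expected_disparity_px(distance_m, focal_px, baseline_m):
    """Horizontal offset (pixels) expected between views at a given distance."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return focal_px * baseline_m / distance_m


def align_right_to_left(u_right, distance_m, focal_px=800.0, baseline_m=0.06):
    """Shift a right-image column into left-image coordinates.

    Assumes a rectified pair, where u_left = u_right + disparity, and a
    distance reading (for example from a proximity sensor) for the object.
    """
    return u_right + expected_disparity_px(distance_m, focal_px, baseline_m)


# The required shift grows quickly as the object gets closer.
shift_near = expected_disparity_px(0.3, 800.0, 0.06)   # object at 30 cm
shift_far = expected_disparity_px(1.0, 800.0, 0.06)    # object at 1 m

# Column 200 in the right image, for an object 1 m away.
u_left = align_right_to_left(200.0, 1.0)
```

The point of the sketch is that a single fixed offset cannot align the two views at every distance, so an independent distance measurement gives the system something to correct against.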

5. Quantitative Impact: When Math Meets Precision

Before calibration, the average pixel alignment error between stereo frames was around 2.8 pixels RMS. After calibration, with rectification and proximity correction applied, this dropped to 0.4 pixels RMS - a reduction of just over 85%.
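For readers who want to reproduce this kind of figure on their own rig, the sketch below shows how an RMS alignment error and the percentage reduction can be computed. The helper names are hypothetical, and the only real numbers used are the 2.8 and 0.4 pixel values quoted above.

```python
import math


def rms(errors):
    """Root-mean-square of per-point pixel alignment errors."""
    errors = list(errors)
    if not errors:
        raise ValueError("need at least one error value")
    return math.sqrt(sum(e * e for e in errors) / len(errors))


def percent_reduction(before, after):
    """Relative reduction from `before` to `after`, in percent."""
    return 100.0 * (before - after) / before


# Reduction implied by the RMS values reported in this post.
reduction = percent_reduction(2.8, 0.4)
```

RMS is used rather than a plain average because it penalizes large outliers, which are exactly the mismatches that produce visible jitter in a depth map.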

This precision directly enhances depth accuracy, ensuring consistent disparity estimation even in complex scenes with fur texture, occlusions, or motion.

6. Real-World Benefit: Seeing the World in True Depth

Accurate stereo calibration enables precise depth estimation - a key ingredient in building systems that understand space as humans do. Whether it’s measuring distance to a bowl, tracking movement, or estimating shape contours, calibration ensures every pixel has meaning.

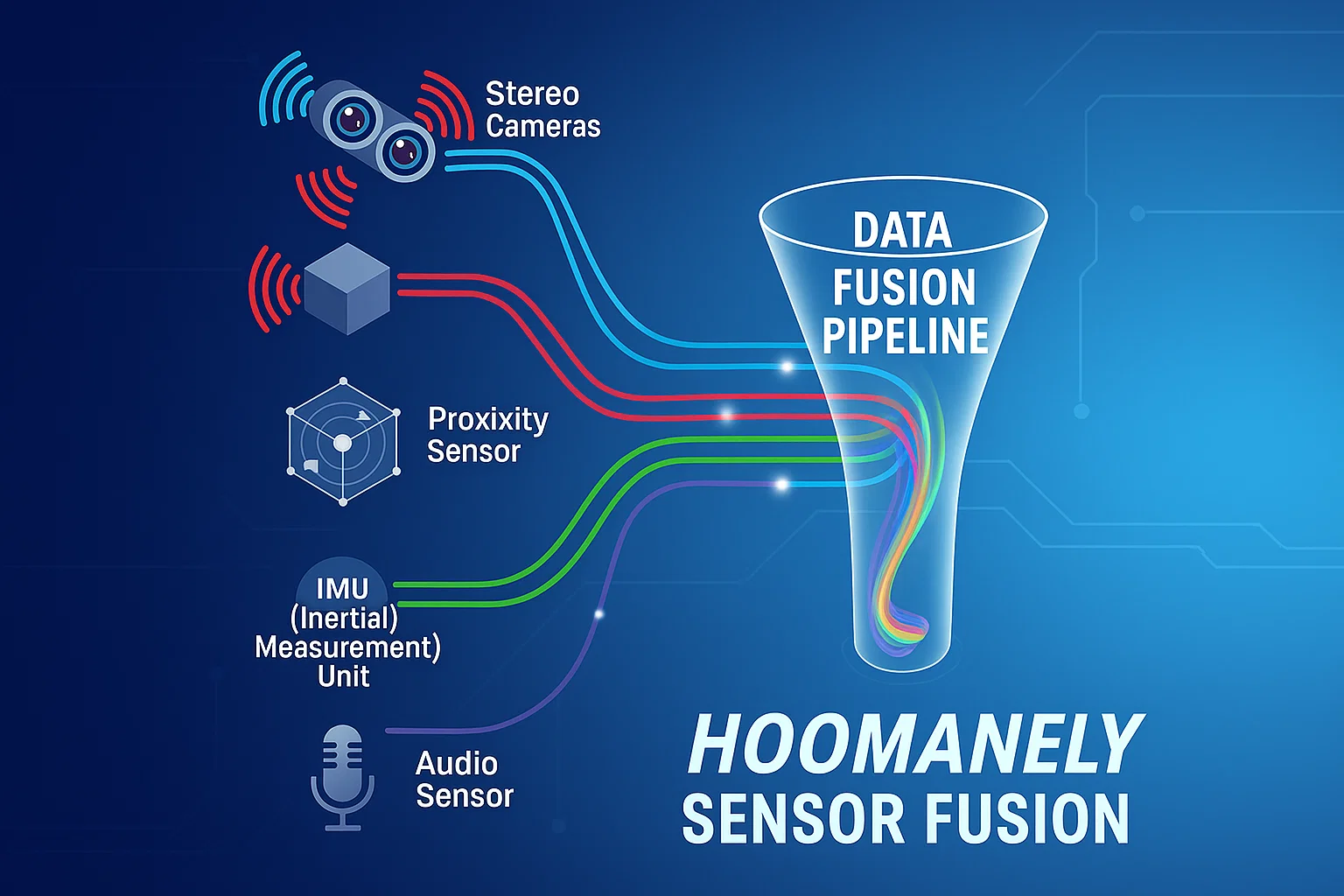

At Hoomanely, this is just one piece of a larger picture. We build products that combine multiple sensing modalities - from cameras and proximity sensors to IMUs and microphones - into a unified, intelligent perception system. Stereo vision calibration lays the foundation for this sensor fusion, ensuring that every signal aligns perfectly in time and space.

7. Key Takeaways

- Intrinsic calibration corrects each camera’s internal distortions.

- Extrinsic calibration aligns the two cameras spatially.

- Pixel fusion creates a coherent 3D view through disparity mapping.

- Proximity correction maintains depth accuracy across varying distances.

- Result: Alignment error reduced by ~85%, enabling high-fidelity spatial understanding.

- Hoomanely Impact: Stereo calibration is one of many foundations supporting our broader sensor fusion ecosystem, integrating depth, motion, and audio for holistic pet perception.

Author’s Note

At Hoomanely, every solved calibration matrix and every millimeter of alignment strengthens our vision of empathetic AI - machines that see, sense, and respond intelligently. By aligning the invisible - the pixels, depth, and geometry behind the scenes - we create technology that perceives the world as it truly is. Because true understanding begins when all sensors work together in harmony.