Beyond the Dropdown: Engineering a Seamless Onboarding

Onboarding friction kills conversion. When building Hoomanely's pet parent onboarding flow, we discovered that one seemingly simple question - "What breed is your pet?"—can cause unexpected dropoff. Pet parents would pause, scroll through endless dropdown menus, type partial breed names that didn't match our taxonomy, or worse, abandon the flow entirely. We needed a solution that respected the user's time while maintaining data accuracy.

The answer wasn't just adding autocomplete or better search. We eliminated the question altogether by predicting the breed from the pet's photo - a photo users were already uploading as part of onboarding. This post explores the technical journey behind that decision: the methods we evaluated, the tradeoffs we navigated, and why a multimodal LLM approach fit our constraints better than purpose-built computer vision models.

The Problem Space: Why Breed Selection Matters

Pet breed isn't vanity metadata. At Hoomanely - a platform helping pet parents manage their pet's health, nutrition, and care - breed information drives personalised recommendations. A Labrador's dietary needs differ from a Chihuahua's. Breed-specific health risks inform preventive care suggestions. Insurance quotes, grooming schedules, even behavioral training content all benefit from accurate breed data.

But collecting this data through traditional UI patterns created problems:

Decision paralysis: Dropdowns with 200+ dog breeds overwhelm users. The American Kennel Club recognises 200 dog breeds alone; add mixed breeds, and regional variations, and the taxonomy explodes.

Input errors: Users misspell breed names, use colloquial terms ("German Shepherd" vs "Alsatian"), or select the wrong breed from visually similar options.

Mixed breed ambiguity: For multi-breed pets, users don't know which breed to prioritise or whether to select "Mixed" and lose specificity.

Mobile friction: Scrolling and searching on mobile devices during onboarding adds cognitive load when users are already uploading photos, entering names, and providing other details.

The photo upload step was already mandatory—we needed it for the pet's profile. If we could extract breed information from that image, we'd eliminate an entire friction point while improving data quality.

Evaluating Technical Approaches

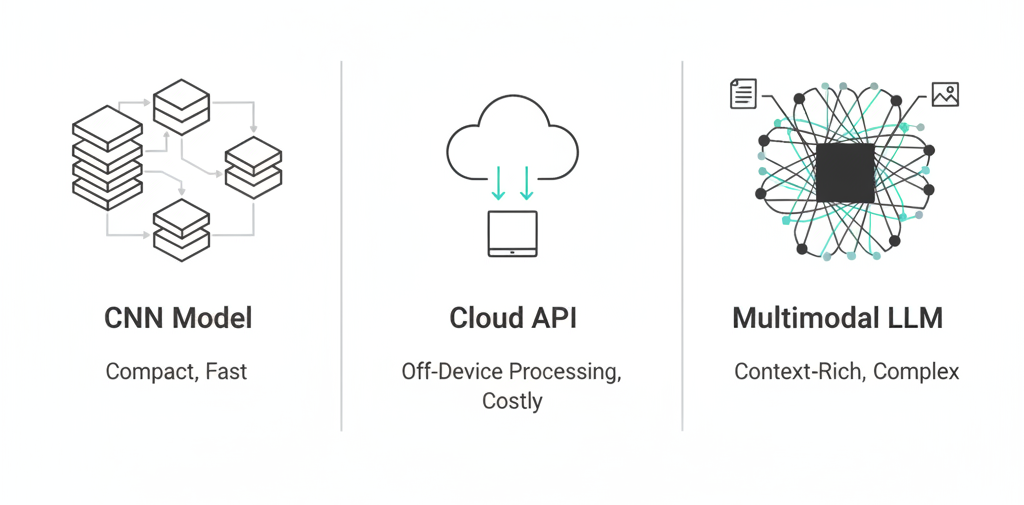

We evaluated three distinct approaches for image-based breed detection, each with different architectural philosophies and operational tradeoffs.

Approach 1: Specialised CNN Models

Purpose-built convolutional neural networks trained specifically on pet breed datasets represent the traditional computer vision approach. Models like Stanford's Dogs Dataset-trained ResNets or fine-tuned EfficientNets achieve impressive accuracy on breed classification tasks.

Pros:

- High accuracy on known breeds (90%+ on standardised datasets)

- Fast inference (50-200ms on GPU, 200-500ms on CPU)

- Small model size (10-100MB), suitable for edge deployment

- Deterministic outputs with confidence scores

Cons:

- Rigid taxonomy—adding new breeds requires retraining

- Poor handling of mixed breeds or edge cases

- Requires maintaining separate models for dogs vs cats

- Struggles with unusual angles, occlusions, or low-quality photos

- No contextual reasoning (can't handle "this is a puppy German Shepherd")

Approach 2: Vision APIs (Cloud Vision, Recognition)

Cloud-based computer vision services from Google, AWS, and Microsoft offer pre-trained object and animal detection with breed classification capabilities.

Pros:

- Zero model maintenance

- Handle diverse image qualities

- Regular updates and improvements

- Simple API integration

Cons:

- Higher latency (300-800ms including network round-trip)

- Per-request costs add up at scale

- Limited customisation for specific needs

- Inconsistent breed taxonomies across providers

- No explanation capability when predictions seem wrong

Approach 3: Multimodal LLMs

Large language models with vision capabilities (GPT-4V, Claude with vision, Llama Vision) can process images and generate structured responses in natural language.

Pros:

- Flexible outputs—not constrained to predefined breed lists

- Handles ambiguity gracefully ("appears to be a Lab-Golden mix")

- Single model works across species

- Can provide reasoning or ask clarifying questions

- Easy to refine prompts without retraining

Cons:

- Higher latency (1-2 seconds for full inference)

- More expensive per request than specialised models

- Non-deterministic outputs require parsing

- Larger infrastructure requirements

Why We Chose Llama Vision

The decision to use Llama Vision model wasn't about raw performance metrics—it was about product fit and operational flexibility.

Reasoning Over Recognition

Pure classification models output a breed label. Llama Vision outputs reasoning: "This appears to be a Golden Retriever mix, possibly with Labrador, based on the coat texture and ear shape." For edge cases—and user trust—this matters. When users see "Golden Retriever / Lab Mix", they understand the system's logic. A dropdown pre-filled with "Golden Retriever" (the highest confidence class from a CNN) feels presumptuous and wrong.

Graceful Degradation

Multimodal LLMs handle uncertainty naturally. When encountering an unusual breed or poor photo quality, the model can respond with "Unable to determine specific breed—appears to be a medium-sized terrier mix." This preserves the onboarding flow while signaling data quality issues. Specialised models either force a low-confidence guess or fail entirely.

Operational Simplicity

One model handles breed prediction, and edge cases. We don't maintain separate pipelines, breed taxonomies, or retraining schedules. Prompt engineering lets us refine outputs—like asking for top 3 breed possibilities or handling specific edge cases—without touching model weights.

Cost-Latency Tradeoff We Could Accept

Our onboarding flow isn't latency-critical. Users upload a photo, then continue entering their pet's name, age, and medical history—actions taking 30-60 seconds. A 2-second breed prediction happening asynchronously doesn't block the user. We display a tasteful loading state while the prediction runs in the background.

Running Llama Vision model on AWS proves to be cheaper than Cloud Vision APIs at scale. The model runs on our infrastructure, avoiding vendor lock-in and external API dependencies.

The Tradeoffs We Accepted

This choice wasn't without compromises:

Latency ceiling: Even optimised, LLM inference won't match CNN speeds. For applications requiring <200ms responses, this approach doesn't work.

Output parsing overhead: LLMs return natural language, requiring regex or structured prompting to extract breed labels. We use schema-enforced generation (JSON mode) to mitigate this, but it adds complexity.

Non-determinism: The same image might occasionally produce slightly different outputs.

Infrastructure requirements: Running 11B parameter models needs GPU instances. For startups without ML infrastructure, this is a higher entry barrier than calling a Vision API.

Key Takeaways

Choosing between specialised models and multimodal LLMs isn't about which is "best"—it's about which tradeoffs align with your product constraints.

Use CNNs when:

- Latency is critical (<200ms requirements)

- Your taxonomy is fixed and well-defined

- You need deterministic outputs

- Cost per prediction must be minimal

Use Cloud Vision APIs when:

- You lack ML infrastructure

- Volume is low-to-medium (<100K monthly predictions)

- You value zero-maintenance solutions

Use multimodal LLMs when:

- You need flexible, evolving outputs

- Edge case handling matters more than raw speed

- You can tolerate 1-3 second latencies

- Natural language reasoning improves UX

For Hoomanely, eliminating onboarding friction while maintaining breed data quality required a solution that understood ambiguity and communicated reasoning. Llama Vision fit that need. As the technology matures—with faster inference, better structured outputs, and lower costs—we expect this class of models to expand into more latency-sensitive applications.