Beyond Traditional Augmentation: Training Edge Models for Real-World Chaos

When your AI model performs flawlessly in testing but stumbles in production, you're likely facing one of computer vision's most persistent challenges: domain shift. At Hoomanely, we encountered this exact problem with our dog segmentation model deployed on the Everbowl, our edge device designed to monitor pet health and behavior. Here's how we leveraged transformer-based image generation to bridge that gap—and why synthetic data became our unexpected ally.

The Problem: When Perfect Training Data Isn't Enough

Our segmentation model had been trained on a diverse, high-quality dataset: thousands of dog images captured under controlled conditions, with professional lighting and varied backgrounds. The model's performance metrics looked impressive. But when we deployed it to the Everbowl, reality hit differently.

The Everbowl operates in real homes: dim kitchens at dawn, bright backyards at noon, cluttered living rooms with mixed lighting. The camera sensors, mounting angles, and lens characteristics introduced their own signature to every frame. Our model, trained on pristine data, struggled to maintain consistent segmentation accuracy in these unpredictable conditions.

This is domain shift in action—the gap between training distribution and deployment reality. The model had learned patterns that didn't generalize to our specific hardware and use cases. We needed training data that reflected the actual visual conditions our device would encounter.
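One rough way to put a number on this kind of gap is to compare a simple image statistic between the training set and device captures. The sketch below is an illustration only, not the method used here: it assumes grayscale images stored as nested lists, and the helper names (`brightness_histogram`, `total_variation`) and toy pixel values are made up.

```python
from collections import Counter

def brightness_histogram(images, bins=8):
    """Normalized histogram of per-image mean brightness (pixel values 0..255)."""
    counts = Counter()
    for img in images:
        pixels = [p for row in img for p in row]
        mean = sum(pixels) / len(pixels)
        counts[min(int(mean * bins / 256), bins - 1)] += 1
    total = sum(counts.values())
    return [counts[b] / total for b in range(bins)]

def total_variation(p, q):
    """Total variation distance between two histograms: 0 = identical, 1 = disjoint."""
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))

# Toy data (made-up values): bright "studio" images vs. mixed "device" images.
studio = [[[200, 210], [190, 220]], [[180, 185], [175, 190]]]
device = [[[40, 50], [30, 60]], [[200, 190], [210, 205]]]

shift = total_variation(brightness_histogram(studio), brightness_histogram(device))
print(f"brightness shift: {shift:.2f}")
```

Brightness is only one axis of shift. Lens distortion, viewing angle, and scene clutter would each need their own statistic, or a learned feature space.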

Exploring the Solution Space

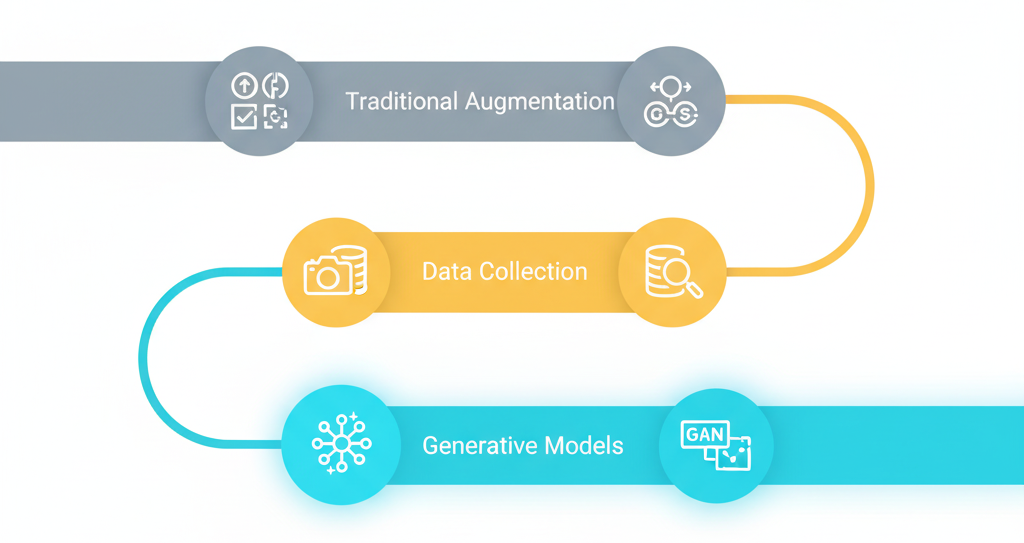

We considered several paths forward:

Traditional data augmentation offered the quickest route—geometric transforms, color jittering, noise injection. But these techniques operate within constrained boundaries. Rotating an image or adjusting brightness doesn't fundamentally change the scene composition, lighting physics, or camera characteristics. We needed something more adaptive.
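For reference, the three classical techniques named above can be sketched in a few lines. This is a simplified, dependency-free illustration on a toy grayscale image; the function names and parameter defaults are assumptions, and a real pipeline would typically use an image library instead.

```python
import random

def hflip(img):
    """Mirror each row left to right (the segmentation mask must be flipped too)."""
    return [row[::-1] for row in img]

def jitter_brightness(img, rng, max_delta=40):
    """Add one random offset to every pixel, clamped to 0..255."""
    delta = rng.randint(-max_delta, max_delta)
    return [[max(0, min(255, p + delta)) for p in row] for row in img]

def add_noise(img, rng, sigma=5.0):
    """Add independent Gaussian noise to each pixel, clamped to 0..255."""
    return [[max(0, min(255, round(p + rng.gauss(0, sigma)))) for p in row]
            for row in img]

def augment(img, rng):
    """Apply a random flip, then brightness jitter, then pixel noise."""
    if rng.random() < 0.5:
        img = hflip(img)
    return add_noise(jitter_brightness(img, rng), rng)

rng = random.Random(0)
image = [[10, 50, 90], [20, 60, 100]]  # toy 2x3 grayscale image
augmented = augment(image, rng)
print(augmented)
```

Every output is still the same scene: same dog, same background, same light sources. That is the boundary the paragraph above describes.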

Collecting more Everbowl images seemed obvious. We could deploy devices, gather thousands of real-world captures, and label them manually. But this approach had serious friction: deployment time, annotation costs, privacy considerations, and the sheer logistics of capturing enough diverse scenarios. We needed hundreds of examples across lighting conditions, dog breeds, poses, and backgrounds. The timeline was untenable.

Synthetic data generation presented a third option—using AI models to create new training images that mimicked our deployment conditions. Recent advances in diffusion models and transformer architectures had demonstrated remarkable capability in generating photorealistic images with fine-grained control. Could we generate images that carried the visual signature of Everbowl captures?

Why Generative Models Made Sense

We chose to explore transformer-based generative models for a few compelling reasons that aligned with our constraints and technical requirements.

Domain adaptation through learned priors. Modern generative models learn rich visual priors from massive datasets, then allow fine-tuning on smaller, domain-specific collections. This meant we could start with models that understood general image composition, lighting physics, and object relationships, then guide them toward the specific visual characteristics of Everbowl imagery. We didn't need thousands of labeled images—just enough to teach the model what "Everbowl-style" meant.

Controllable generation. Unlike classical augmentation, generative models could produce entirely new compositions while maintaining semantic consistency. We could specify poses, lighting conditions, backgrounds, and even subtle details like lens distortion or sensor noise patterns. This controllability meant we could deliberately fill gaps in our training distribution—underrepresented breeds, challenging lighting scenarios, or unusual camera angles.
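To make "deliberately filling gaps" concrete, here is a minimal sketch of enumerating generation conditions as a grid. It assumes the generator is driven by text prompts, which this post does not state; the attribute values, the prompt wording, and the `build_prompts` helper are all hypothetical, and no model is called.

```python
from itertools import product

# Hypothetical attribute values; a real list would come from gaps found in the dataset.
CONDITIONS = {
    "breed": ["labrador", "pug"],
    "lighting": ["dim kitchen at dawn", "bright backyard at noon"],
    "pose": ["eating", "lying down"],
}

def build_prompts(conditions):
    """Yield one (attributes, prompt) pair per combination of attribute values."""
    keys = list(conditions)
    for values in product(*(conditions[k] for k in keys)):
        attrs = dict(zip(keys, values))
        prompt = (f"a {attrs['breed']} {attrs['pose']}, "
                  f"{attrs['lighting']}, captured by a fixed camera")
        yield attrs, prompt

prompts = list(build_prompts(CONDITIONS))
print(len(prompts), "combinations")
for attrs, prompt in prompts[:2]:
    print(prompt)
```

Keeping the attributes alongside each prompt means every generated image arrives already tagged with the condition it was meant to cover, which makes it easy to check coverage later.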

Rapid iteration and scaling. Once fine-tuned, these models could generate synthetic training data in hours rather than weeks. We could experiment with different augmentation strategies, test their impact on model performance, and iterate quickly. The feedback loop between data generation and model training shortened dramatically.

Quality that matters for segmentation. Here's what surprised us: the images didn't need to be perfect. Segmentation models focus on boundaries, shapes, and spatial relationships, not the minute texture details that might concern a human evaluator. As long as the generated images maintained plausible geometry and lighting behavior, they provided a valuable training signal. The model learned to recognize dogs under Everbowl conditions, even if individual whiskers weren't photorealistic.
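A common way to check whether synthetic images actually help is to score predicted masks against ground truth on held-out real device captures using intersection over union (IoU). The post does not say which metric was used, so treat this as an illustrative sketch; `mask_iou` and the toy masks are made up.

```python
def mask_iou(pred, target):
    """Intersection over union of two binary masks given as nested 0/1 lists."""
    inter = union = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            inter += p & t
            union += p | t
    return inter / union if union else 1.0  # two empty masks count as a perfect match

pred   = [[0, 1, 1],
          [0, 1, 1]]
target = [[0, 0, 1],
          [0, 1, 1]]
print(mask_iou(pred, target))  # 3 overlapping pixels / 4 in the union = 0.75
```

IoU depends only on which pixels are labeled as dog, so it rewards correct shape and boundaries and is indifferent to texture realism in the training images.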

Why This Matters for Edge AI

This approach solved more than just a data scarcity problem. It addressed fundamental challenges in deploying AI to edge devices.

Hardware-specific adaptation. Different camera sensors, lenses, and mounting positions create distinct visual signatures. Generative models let us adapt training data to specific hardware without collecting massive per-device datasets. As we iterate on Everbowl hardware, we can quickly generate appropriate training data for new configurations.

Handling the long tail. Edge deployment means encountering rare but important scenarios—dogs in unusual poses, edge-case lighting, partial occlusions. These appear infrequently in collected data but matter for user experience. Synthetic generation let us deliberately oversample these challenging cases during training.
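Oversampling rare cases can be as simple as weighting each training sample by the inverse frequency of its scenario. The sketch below assumes every sample carries a scenario tag; the tag names, dataset sizes, and the `inverse_frequency_weights` helper are hypothetical.

```python
import random
from collections import Counter

def inverse_frequency_weights(tags):
    """Weight each sample by 1 / (count of its tag) so every tag gets equal total mass."""
    counts = Counter(tags)
    return [1.0 / counts[t] for t in tags]

# Hypothetical scenario tags for a toy dataset: 8 common samples, 2 rare ones.
tags = ["normal"] * 8 + ["occluded"] * 2
weights = inverse_frequency_weights(tags)

rng = random.Random(0)
batch = rng.choices(range(len(tags)), weights=weights, k=1000)  # sample indices
drawn = Counter(tags[i] for i in batch)
print(drawn)
```

With these weights the rare "occluded" samples are drawn about as often as the common ones, even though they make up only a fifth of the dataset. In practice a softer weighting is often preferable so that the model does not overfit to a handful of rare images.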

Privacy preservation. Home monitoring devices face inherent privacy concerns. Generating synthetic training data reduces our dependence on collecting and storing actual footage from users' homes. We can train robust models while minimizing the collection of potentially sensitive real-world data.

Continuous improvement. As we identify failure modes in production, we can rapidly generate targeted synthetic data to address specific weaknesses. The model evolves without waiting for organic data collection to capture those scenarios.

Impact and Insights

Integrating synthetically augmented training data transformed our segmentation model's real-world performance. The model became substantially more robust to the lighting variations, camera angles, and environmental complexity that characterize actual Everbowl deployments. It maintained consistent accuracy across the diverse scenarios we couldn't adequately represent in our original training set.

But beyond the technical wins, this experience reshaped how we think about data for edge AI. The traditional paradigm—collect data, label data, train model—assumes you can easily gather representative samples from your deployment environment. Edge devices break this assumption. They operate in environments you can't fully control or predict, on hardware with specific characteristics, facing scenarios that emerge only after deployment.

Generative models offer a different paradigm: learn the deployment domain characteristics from limited real samples, then systematically expand your training distribution to cover the space your model will actually encounter. It's not about replacing real data—it's about making real data go further.

Key Takeaways:

- Domain shift between training and deployment environments is a critical challenge for edge AI systems

- Transformer-based generative models enable controlled synthetic data generation that preserves deployment domain characteristics

- Fine-tuning on limited real-world samples allows generative models to replicate hardware-specific visual signatures

- Synthetic augmentation addresses data scarcity, long-tail scenarios, and privacy concerns simultaneously

- For edge devices, adaptive training data strategies are as important as model architecture choices