Boosting Edge AI with Latent Audio Generation

Edge AI is transforming how devices interact with the real world, from smart speakers to pet care devices. A critical challenge in deploying these models is robustness across varied acoustic environments. Collecting large labeled datasets helps, but real-world constraints often limit data availability, especially in specialised domains such as pet sounds or rare events. This is where audio augmentation becomes essential: it lets models learn from synthetic variations of existing recordings, improving generalisation without expanding the physical dataset. Unlike image augmentation, however, audio augmentation has to deal with signals that are temporal, spectrally rich, and highly sensitive to distortion, which makes it a non-trivial task. Exploring advanced augmentation strategies is therefore crucial to building accurate, reliable, and deployable edge AI models.

The Challenge of Audio Augmentation

Audio data presents unique challenges for augmentation that images or text rarely encounter:

- Temporal dependencies: Sounds evolve over time, and small shifts in rhythm or pitch can drastically alter meaning. For instance, a short bark and a yip may differ only in subtle millisecond-scale timing and pitch cues.

- Frequency content: Audio contains harmonics, overtones, and background subtleties that must be preserved. Standard transformations like adding noise or pitch shifting can compromise these critical features.

- Sensitivity to denoising pipelines: Many real-world systems include noise suppression or echo cancellation, meaning naïve augmentations may be filtered out entirely.

- Limited labeled datasets: Domain-specific applications often have few recordings, making traditional data-hungry generative methods impractical.

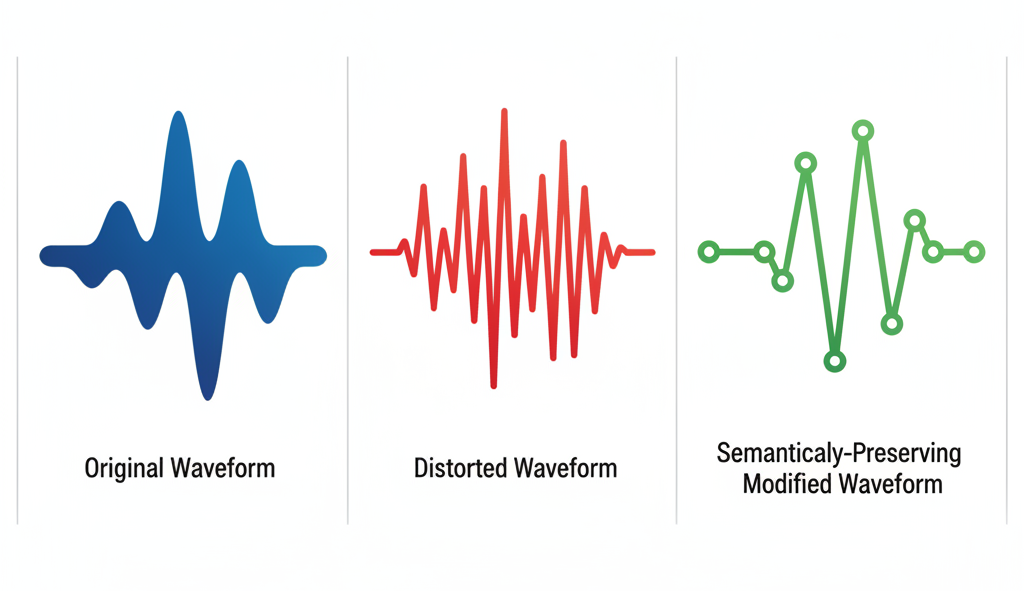

The consequence: building a robust audio model isn’t just about feeding more data—it’s about generating synthetic samples that maintain semantic fidelity while introducing natural variation.

Generic Approaches to Audio Augmentation

1. Signal-Level Transformations

- Time stretching, pitch shifting, volume scaling, noise injection.

- Use cases: works well for speech datasets or environmental sounds.

- Limitations: often incompatible with edge systems that include noise cancellation, and can distort semantic features in sensitive domains such as animal vocalisations.
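To make the signal-level family concrete, here is a minimal NumPy sketch of three such transforms. The function names are illustrative and not taken from any library. Noise is white Gaussian at a chosen signal-to-noise ratio, and the speed change is naive linear-interpolation resampling, which (unlike a proper time stretch) also shifts pitch by the same factor. Dedicated implementations such as `librosa.effects.time_stretch` and `librosa.effects.pitch_shift` decouple the two.

```python
import numpy as np

def scale_volume(x: np.ndarray, gain_db: float) -> np.ndarray:
    """Scale amplitude by a gain in decibels (+20 dB = 10x amplitude)."""
    return x * (10.0 ** (gain_db / 20.0))

def add_noise_at_snr(x: np.ndarray, snr_db: float, rng: np.random.Generator) -> np.ndarray:
    """Add white Gaussian noise so that mean signal power / noise power matches snr_db."""
    signal_power = np.mean(x ** 2)
    noise_power = signal_power / (10.0 ** (snr_db / 10.0))
    return x + rng.standard_normal(x.shape) * np.sqrt(noise_power)

def naive_speed_change(x: np.ndarray, rate: float) -> np.ndarray:
    """Resample by linear interpolation; rate > 1 shortens the clip.
    Because playback speed changes, pitch shifts by the same factor."""
    n_out = int(round(len(x) / rate))
    new_positions = np.linspace(0, len(x) - 1, n_out)
    return np.interp(new_positions, np.arange(len(x)), x)

if __name__ == "__main__":
    sr = 16_000
    t = np.arange(sr) / sr
    bark_like = 0.5 * np.sin(2 * np.pi * 800 * t)  # stand-in for a real recording
    rng = np.random.default_rng(0)
    noisy = add_noise_at_snr(bark_like, snr_db=10.0, rng=rng)
    louder = scale_volume(bark_like, gain_db=6.0)
    faster = naive_speed_change(bark_like, rate=1.25)
    print(len(faster))  # 12800
```

Each transform acts directly on the waveform, which is exactly why a downstream noise suppressor may undo or mangle the added variation.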

2. Spectrogram-Level Augmentation

- Manipulating spectrograms using masking, frequency scaling, or warping.

- Use cases: improves model invariance to frequency shifts or partial data loss.

- Limitations: transforms can produce unrealistic artifacts when inverted back to waveform, affecting downstream classification.
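Masking is the simplest of these spectrogram operations to illustrate. The sketch below applies one SpecAugment-style frequency mask and one time mask to a magnitude spectrogram stored as a `(freq_bins, frames)` NumPy array. Mask widths are drawn uniformly up to caller-chosen limits; `spec_mask` is a hypothetical helper, not a library function, and frequency scaling and warping are not shown.

```python
import numpy as np

def spec_mask(spec: np.ndarray, max_freq_width: int, max_time_width: int,
              rng: np.random.Generator, fill: float = 0.0) -> np.ndarray:
    """Apply one frequency mask and one time mask; returns a masked copy."""
    out = spec.copy()
    n_freq, n_time = out.shape
    # Frequency mask: f consecutive bins starting at f0.
    f = rng.integers(0, min(max_freq_width, n_freq) + 1)
    f0 = rng.integers(0, n_freq - f + 1)
    out[f0:f0 + f, :] = fill
    # Time mask: t consecutive frames starting at t0.
    t = rng.integers(0, min(max_time_width, n_time) + 1)
    t0 = rng.integers(0, n_time - t + 1)
    out[:, t0:t0 + t] = fill
    return out

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    spec = np.abs(rng.standard_normal((64, 100)))  # stand-in for a real spectrogram
    masked = spec_mask(spec, max_freq_width=8, max_time_width=10, rng=rng)
    print((masked == 0).sum(), "cells masked")
```

Masking is usually applied to the model's input features directly; if a masked spectrogram is instead inverted back to a waveform, the zeroed bands and frames become abrupt spectral holes and silent gaps, one way the artifacts described above can arise.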

3. Generative Audio Models

- GANs, VAEs, and diffusion models trained on raw audio or spectrograms.

- Use cases: can create entirely new audio samples or class-conditioned sounds.

- Limitations: require large datasets, compute, and careful training to avoid mode collapse or unnatural artifacts.

Takeaway: Existing methods either compromise realism or require large datasets and substantial compute, leaving a gap for small-scale, high-fidelity augmentation.

Why Traditional Approaches Fall Short for Specialised Dog Sounds

While many audio augmentation techniques exist, not every method is suitable for domain-specific edge applications like identifying dog behaviours. Traditional signal-level transformations, such as time stretching, pitch shifting, or adding random noise, are easy to implement but offer superficial variation. For example, adding Gaussian noise or slightly changing pitch may increase dataset size, but in our use case, such modifications risk destroying the semantic characteristics of distinct dog sounds like barks, yips, growls, or eating noises. Since edge systems often include denoising or noise suppression pipelines, these artificially modified clips could be filtered out or misrepresented, rendering them ineffective for training robust models.

Spectrogram-level manipulations provide more sophisticated transformations, such as frequency masking or warping, which can improve invariance in certain audio models. However, these techniques are generally class-agnostic and do not guarantee preservation of subtle characteristics specific to animal sounds. They may introduce artifacts when converting back to waveform, which can negatively impact model performance in a domain where minor temporal and spectral cues matter.

Generative models like GANs, VAEs, or full-scale diffusion approaches have the potential to create entirely new audio samples. While powerful, they come with practical limitations: they typically require large datasets to learn meaningful distributions, are computationally expensive, and often need class conditioning or text guidance. In our context, where the dataset is small and unlabeled, these methods risk producing outputs that either do not align with the specific types of dog sounds we need or are unstable and noisy for edge deployment.

Our approach, latent audio-to-audio diffusion using a pretrained codec, addresses these challenges. By operating in the latent space of a pretrained autoencoder, it generates synthetic audio that stays semantically faithful to the original clips while introducing controlled, realistic variation. It does not add arbitrary noise to the waveform, alter pitch, or require class labels. Instead, it creates new variations that preserve the specific identity of each sound type, keeping the synthetic dataset relevant for downstream training of our edge models.

Positioning Our Approach

For edge deployments the goal is clear:

- Generate synthetic audio that preserves semantic content (bark, yip, eating sound, growl)

- Maintain compatibility with edge noise suppression

- Operate effectively on limited datasets

The adopted strategy is latent audio-to-audio augmentation via pretrained audio codecs and latent-space diffusion models:

- Pretrained Audio Autoencoder (Codec)
  - Maps waveform into a compact latent representation
  - Preserves temporal and spectral structure
  - Acts as a denoiser and dimensionality reducer

- Latent-Space Diffusion
  - Introduces controlled variations in latent space
  - Generates semantically similar but non-identical audio
  - Avoids direct waveform perturbation, ensuring denoiser compatibility

This method merges data efficiency with semantic fidelity, producing augmented audio that is ready for edge inference pipelines.

The approach uses pretrained neural audio codecs that stay frozen during augmentation, which helps keep the system from overfitting or drifting away from real audio statistics. Each clip is mapped into a latent representation that captures both frequency and temporal structure. A lightweight 1D UNet diffusion model operates in this latent space and learns to reconstruct clean latents from slightly perturbed ones. Because it avoids large-scale GANs and raw-waveform diffusion, the method remains trainable on small datasets.
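The training objective is not spelled out here. A common choice, assumed for this sketch, is the DDPM noise-prediction loss applied to codec latents, with $E$ the frozen codec encoder, $x$ an audio segment, $\beta_t$ a fixed noise schedule, and $\epsilon_\theta$ the 1D UNet; only $\theta$ is trained:

$$
\begin{aligned}
z_0 &= E(x), \qquad \bar\alpha_t = \prod_{s=1}^{t} (1 - \beta_s),\\
z_t &= \sqrt{\bar\alpha_t}\, z_0 + \sqrt{1 - \bar\alpha_t}\, \epsilon, \qquad \epsilon \sim \mathcal{N}(0, I),\\
\mathcal{L}(\theta) &= \mathbb{E}_{x,\, t,\, \epsilon}\left[ \lVert \epsilon - \epsilon_\theta(z_t, t) \rVert_2^2 \right],\\
\hat z_0 &= \frac{z_t - \sqrt{1 - \bar\alpha_t}\, \epsilon_\theta(z_t, t)}{\sqrt{\bar\alpha_t}}.
\end{aligned}
$$

The last line shows why predicting the noise is equivalent to reconstructing the clean latent. "Slightly perturbed" latents correspond to small $t$, where $\bar\alpha_t$ is close to 1 and $z_t$ stays close to $z_0$.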

Key design principles:

- Latent-space augmentation: Ensures variations are semantically consistent and survive noise-suppression pipelines.

- Audio-to-audio diffusion: Starts from an existing latent rather than pure noise, focusing on controlled variability rather than generative novelty.

- Segment-level training: Breaks audio into temporal windows, multiplying the effective number of training examples without introducing synthetic artifacts.

- Inference strategy: Multiple synthetic variants are produced per original clip, balancing dataset expansion with semantic fidelity.
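The audio-to-audio idea and the per-clip variants can be sketched in NumPy as an SDEdit-style procedure: noise an existing latent part of the way along the diffusion chain, then run reverse DDPM steps back to step 0. Everything here is an assumption for illustration: the linear beta schedule, the `strength` parameter, the helper names, and `eps_model`, a hypothetical stand-in for the trained 1D UNet (any callable that returns predicted noise). The `segment` helper shows the windowing idea on any array whose last axis is time.

```python
import numpy as np

def segment(x, window, hop):
    """Split an array along its last (time) axis into overlapping windows."""
    n = x.shape[-1]
    return [x[..., s:s + window] for s in range(0, n - window + 1, hop)]

def linear_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Linear DDPM noise schedule (assumed; not specified in the text)."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    alphas = 1.0 - betas
    return betas, alphas, np.cumprod(alphas)

def audio_to_audio(z0, eps_model, strength, rng, num_steps=1000):
    """Partially noise latent z0, then denoise it with ancestral DDPM steps.

    strength in [0, 1] is the fraction of the chain traversed:
    0 returns z0 unchanged; larger values allow larger departures from it.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be in [0, 1]")
    betas, alphas, alpha_bars = linear_schedule(num_steps)
    t_start = int(round(strength * num_steps))
    if t_start == 0:
        return z0.copy()
    t = t_start - 1
    # Closed-form forward process: jump directly from z0 to step t.
    z = np.sqrt(alpha_bars[t]) * z0 + np.sqrt(1.0 - alpha_bars[t]) * rng.standard_normal(z0.shape)
    # Reverse process from step t down to 0 using the predicted noise.
    for step in range(t, -1, -1):
        eps_hat = eps_model(z, step)
        mean = (z - betas[step] / np.sqrt(1.0 - alpha_bars[step]) * eps_hat) / np.sqrt(alphas[step])
        noise = rng.standard_normal(z.shape) if step > 0 else 0.0  # no noise on the final step
        z = mean + np.sqrt(betas[step]) * noise
    return z

def make_variants(z0, eps_model, n_variants=4, strength=0.3, seed=0):
    """Produce several distinct variants of one latent from a single seeded RNG stream."""
    rng = np.random.default_rng(seed)
    return [audio_to_audio(z0, eps_model, strength, rng) for _ in range(n_variants)]
```

With a placeholder such as `lambda z, t: np.zeros_like(z)` the functions run end to end, but only a trained noise predictor produces meaningful variants. In a full pipeline each variant would then be decoded with the frozen codec decoder, and `strength` is the knob that trades novelty against fidelity to the original clip.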

The result is synthetic audio that, from the perspective of downstream classifiers, behaves much like real data while being novel enough to improve model robustness.

Impact on Edge Models

By introducing realistic variations, the augmented data helps the student model:

- Generalise better to new acoustic environments.

- Avoid overfitting to limited training samples.

- Achieve higher prediction accuracy on rare or subtle events (like soft yips or eating noises).

Key Takeaways

- Audio augmentation is critical for improving edge AI robustness.

- Traditional methods often fail on small datasets or in denoised environments.

- Latent audio-to-audio diffusion provides a data-efficient, semantically faithful alternative.

- Pretrained audio codecs combined with latent-space diffusion allow controlled variability without compromising signal quality.

- Edge AI systems benefit from improved generalisation, reduced overfitting, and deployment-ready synthetic data.

- This approach demonstrates a pragmatic intersection of generative modelling and edge ML, bridging data scarcity and real-world robustness.