Bounded Intelligence: Operating AI Systems That Remain Stable, Predictable, and Trustworthy

As AI systems move from experimental features to core product capabilities, the definition of “good intelligence” changes. In early stages, intelligence is judged by fluency, creativity, or raw problem-solving ability. In production systems, those qualities matter far less than consistency, predictability, and control.

A production AI system does not operate in isolation. It runs inside distributed infrastructure, under fluctuating traffic, partial failures, retries, cost ceilings, and strict latency expectations. In that environment, intelligence without boundaries becomes volatile. Small changes in timing or context can produce meaningfully different outputs. Parallel executions can diverge. Costs can drift. User trust can erode—not because the AI is incorrect, but because it behaves inconsistently.

Bounded intelligence is the discipline of designing AI systems that remain stable under real conditions. It focuses on intentional constraints: on context, time, concurrency, authority, and failure behavior. These constraints do not weaken AI systems. They make them reliable enough to earn long-term trust.

The Core Idea: Intelligence Must Operate Within Explicit Limits

Bounded intelligence starts with a simple premise: In production, every AI capability is a finite resource that must be governed.

This includes:

- How much information the model is allowed to see

- How long it is allowed to think

- How many times it is allowed to run

- What parts of the system it is allowed to influence

- How it behaves when conditions are not ideal

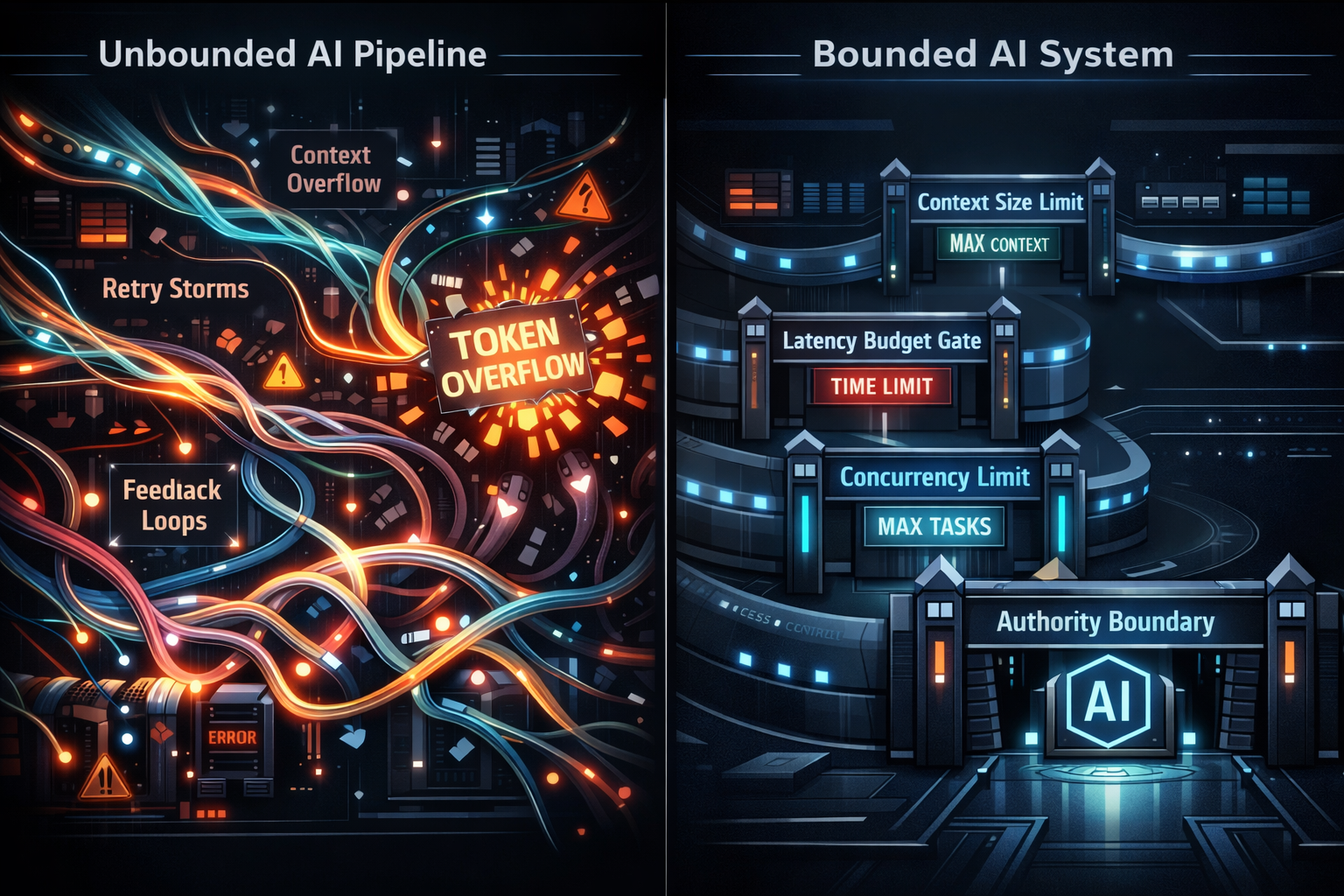

Unbounded systems implicitly assume best-case execution. Bounded systems assume retries, contention, partial failure, and human unpredictability as normal operating conditions.

Rather than asking, “Can the model answer this?”, bounded intelligence asks:

- Can it answer within a defined time window?

- Can it answer consistently across retries?

- Can it answer without destabilizing surrounding systems?

- Can it answer without exceeding its intended authority?

These questions define production readiness.

Context Is a Budgeted Resource

Context is often treated as an unlimited well. When answers degrade, the instinctive response is to add more:

- More retrieval chunks

- More conversation history

- More system instructions

- More tool outputs

This approach works briefly, then collapses under scale.

Why Context Must Be Bounded

Unbounded context introduces several systemic risks:

- Latency amplification as retrieval and prompt assembly grow

- Signal dilution, where relevant information is drowned in noise

- Non-determinism, where small timing differences produce different context windows

- Retry divergence, where the same logical action sees different inputs

Bounded intelligence reframes context as a fixed envelope, not an elastic buffer.

Practical Context Bounding

A bounded context strategy typically includes:

- Hard caps on total tokens per request

- Explicit prioritization rules (recent > relevant > historical)

- Tiered retrieval (primary evidence first, summaries later)

- Intent-level context snapshots that remain stable across retries

If required information does not fit within the budget, the system does not stretch. It degrades intentionally—by summarizing, narrowing scope, or asking for clarification.

The goal is not maximal recall. It is repeatable understanding.
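The budgeting rules above can be sketched in a few lines. This is a minimal illustration, not a reference implementation: the `ContextItem` type, the numeric `tier` scheme, and the precomputed `tokens` field are all assumptions made for the example, and a real system would count tokens with its model's own tokenizer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ContextItem:
    text: str
    tier: int    # assumed scheme: 0 = primary evidence, higher = lower priority
    tokens: int  # assumed to be precomputed by the caller


def pack_context(items: list[ContextItem], budget: int) -> tuple[list[ContextItem], list[ContextItem]]:
    """Fill a fixed token budget in priority order.

    Returns (included, dropped). The ordering is deterministic, so the
    same inputs always produce the same context window.
    """
    # sorted() is stable: items within a tier keep the caller's order.
    ordered = sorted(items, key=lambda item: item.tier)
    included, dropped, used = [], [], 0
    for item in ordered:
        if used + item.tokens <= budget:
            included.append(item)
            used += item.tokens
        else:
            # Over budget: skip this item but keep trying smaller ones.
            dropped.append(item)
    return included, dropped


items = [
    ContextItem("older conversation summary", tier=2, tokens=300),
    ContextItem("latest user message", tier=0, tokens=120),
    ContextItem("top retrieval chunk", tier=1, tokens=400),
    ContextItem("second retrieval chunk", tier=1, tokens=450),
]
included, dropped = pack_context(items, budget=900)
```

The `dropped` list is returned rather than discarded so the caller can degrade on purpose, for example by summarizing the dropped items or asking the user to narrow the request.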

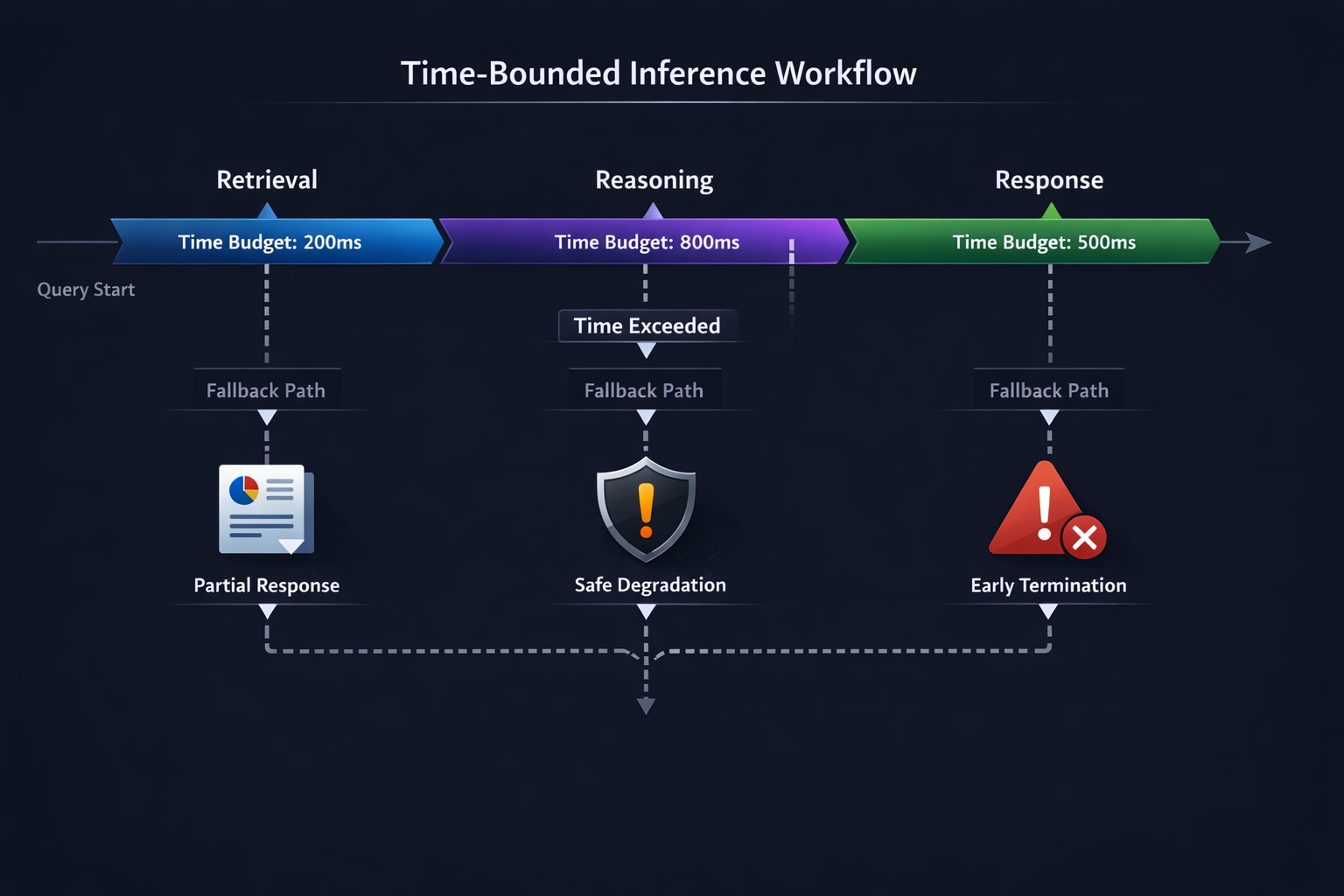

Time Is Part of Semantic Correctness

Latency is often framed as a performance concern. In AI systems, it is also a correctness constraint.

An answer delivered too late may be factually accurate yet semantically wrong:

- The user has already taken another action

- State has changed

- A retry has been triggered

- The interaction context has shifted

Bounded intelligence treats time as a first-class input.

Time-Bounded Inference

Production-grade AI systems enforce:

- End-to-end latency budgets

- Per-stage deadlines (retrieval, reasoning, post-processing)

- Early termination with safe partial outputs

- Explicit “no-answer” or “deferred” states

Rather than blocking indefinitely, bounded systems return the best possible answer within the allowed window. This keeps AI aligned with real user timelines, not theoretical model limits.

Predictable timing is often more valuable than deeper reasoning delivered inconsistently.
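As a rough sketch of an end-to-end latency budget with an explicit deferred state, the example below wraps a model call in `asyncio.wait_for`. The `slow_model_call` function and the `Answer` type are hypothetical stand-ins invented for illustration. Per-stage deadlines could be layered on the same way, by giving each stage its own timeout.

```python
import asyncio
from dataclasses import dataclass
from typing import Optional


@dataclass
class Answer:
    status: str                 # "ok" or "deferred"
    text: Optional[str] = None


async def slow_model_call(prompt: str, delay: float) -> str:
    # Hypothetical stand-in for a real model call.
    await asyncio.sleep(delay)
    return f"answer to: {prompt}"


async def answer_within(prompt: str, delay: float, budget_s: float) -> Answer:
    """Return the model's answer, or an explicit deferred state on timeout."""
    try:
        text = await asyncio.wait_for(slow_model_call(prompt, delay), timeout=budget_s)
        return Answer(status="ok", text=text)
    except asyncio.TimeoutError:
        # wait_for cancels the pending call; the miss is reported, not hidden.
        return Answer(status="deferred")


fast = asyncio.run(answer_within("q1", delay=0.01, budget_s=0.5))
slow = asyncio.run(answer_within("q2", delay=0.5, budget_s=0.05))
```

Here `fast` completes inside its budget, while `slow` comes back as a `deferred` state that the caller can render honestly instead of blocking.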

Concurrency Defines System Stability

Concurrency is where AI systems encounter real-world physics. Mobile reconnects, background jobs, retries, and user bursts all converge here.

Without bounds, concurrency introduces:

- Duplicate inferences

- Conflicting outputs

- Multiplicative cost growth as duplicates and retries stack

- Inconsistent user experiences

Designing Bounded Concurrency

Bounded intelligence enforces limits such as:

- Fixed inference concurrency per user or session

- Shared execution keys to deduplicate identical intents

- Cancellation of stale or superseded requests

- Backpressure instead of uncontrolled queue growth

Retries are treated as normal, expected behavior—not exceptional cases. The system is designed so that executing the same logical AI action multiple times produces the same observable result.

Stability emerges not from eliminating concurrency, but from shaping it deliberately.
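One way to picture shared execution keys combined with a concurrency cap is a small single-flight wrapper. The sketch below is illustrative only: `BoundedExecutor`, the key format, and the `infer` coroutine are hypothetical names, and it does not cover cancellation of superseded requests or backpressure.

```python
import asyncio


class BoundedExecutor:
    """Deduplicates in-flight work by execution key and caps concurrency."""

    def __init__(self, max_concurrent: int) -> None:
        self._semaphore = asyncio.Semaphore(max_concurrent)
        self._in_flight = {}   # execution key -> running asyncio task
        self.executions = 0    # how many times work actually ran

    async def run(self, key, work):
        existing = self._in_flight.get(key)
        if existing is not None:
            # Same logical intent is already running: share its result.
            return await existing
        task = asyncio.ensure_future(self._execute(work))
        self._in_flight[key] = task
        try:
            return await task
        finally:
            self._in_flight.pop(key, None)

    async def _execute(self, work):
        async with self._semaphore:   # hard cap on concurrent inferences
            self.executions += 1
            return await work()


async def demo():
    # Created inside the running event loop on purpose.
    executor = BoundedExecutor(max_concurrent=2)

    async def infer():
        await asyncio.sleep(0.01)     # hypothetical model call
        return "summary-v1"

    calls = [executor.run("user42:summarize", infer) for _ in range(5)]
    results = await asyncio.gather(*calls)
    return results, executor.executions


results, executions = asyncio.run(demo())
```

Five concurrent requests for the same intent share one execution, so all five callers observe the same result and the model is paid for once.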

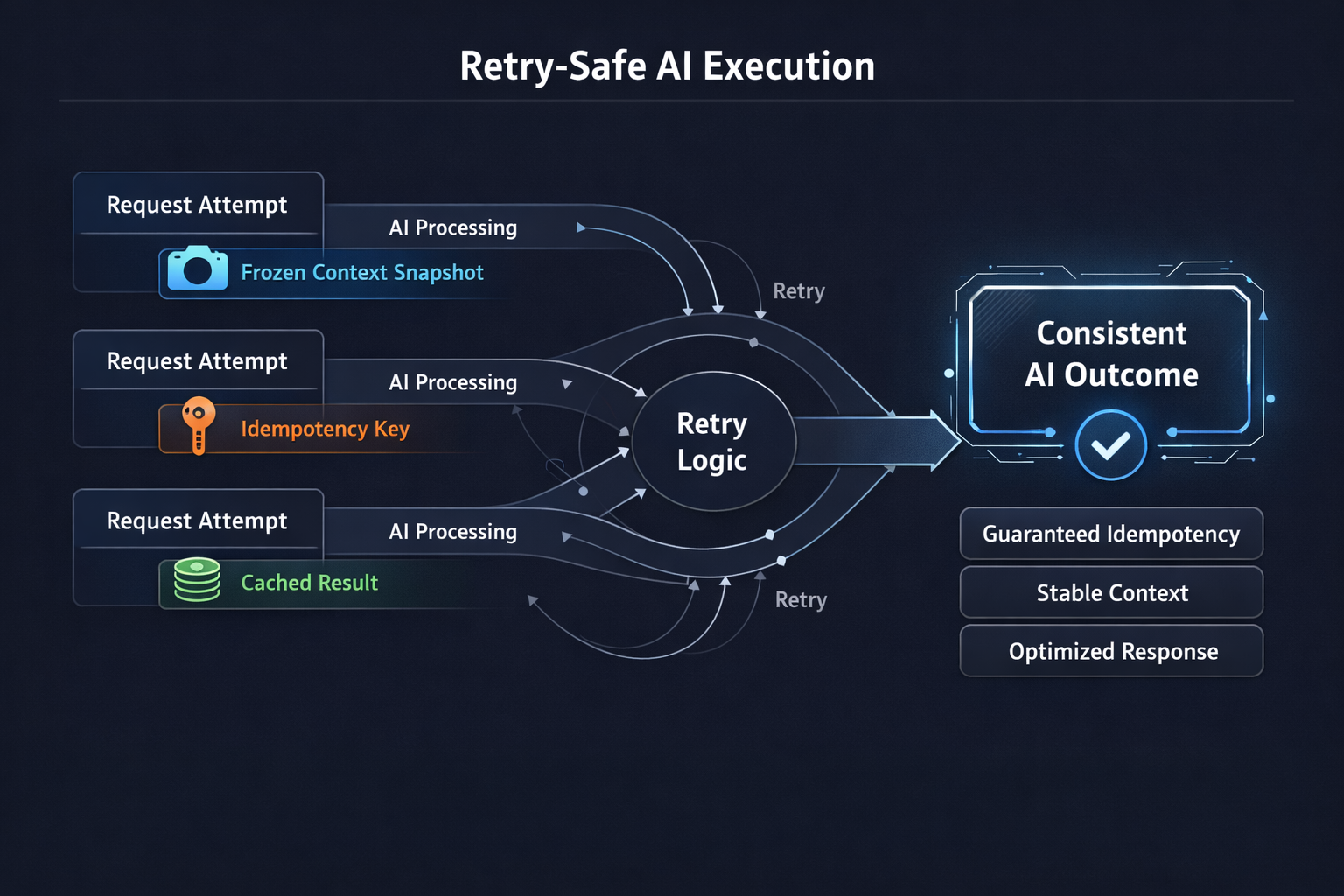

Retries Must Preserve Meaning

Distributed systems retry by default. AI systems must be designed around that assumption; retry safety cannot be layered on afterward.

The Meaning Drift Problem

When retries are unbounded:

- Context can change subtly between executions

- Model stochasticity can introduce variation

- Tool calls may repeat with unintended effects

- Costs can multiply invisibly

Bounded intelligence prevents retries from altering meaning.

Retry-Safe AI Design

This typically involves:

- Idempotency keys tied to logical user intent

- Frozen context snapshots per action

- Cached or memoized outputs reused across retries

- Separation between inference execution and result commitment

The principle is simple but strict: Retries may repeat computation, but must not change outcomes.
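A minimal sketch of that principle follows, assuming an in-memory store and a deliberately noisy stand-in model. `RetrySafeRunner`, `noisy_model`, and the snapshot fields are hypothetical; a production system would keep committed results in durable shared storage.

```python
import hashlib
import json
import random


def idempotency_key(user_id: str, intent: str, context_snapshot: dict) -> str:
    """Derive a stable key from the logical intent and its frozen context."""
    payload = json.dumps(
        {"user": user_id, "intent": intent, "context": context_snapshot},
        sort_keys=True,   # key must not depend on dict ordering
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class RetrySafeRunner:
    def __init__(self, model) -> None:
        self._model = model
        self._committed = {}   # idempotency key -> committed output
        self.model_calls = 0

    def run(self, user_id: str, intent: str, context_snapshot: dict) -> str:
        key = idempotency_key(user_id, intent, context_snapshot)
        if key in self._committed:
            return self._committed[key]   # retry: reuse the committed result
        self.model_calls += 1
        output = self._model(intent, context_snapshot)   # inference step
        # Commitment step: the first result stored for a key wins.
        return self._committed.setdefault(key, output)


def noisy_model(intent: str, context: dict) -> str:
    # Hypothetical stochastic model: a fresh call may differ each time.
    return f"{intent}: option {random.randint(1, 1000)}"


runner = RetrySafeRunner(noisy_model)
snapshot = {"doc_ids": [3, 7], "as_of": "2024-01-01T00:00:00Z"}
first = runner.run("u1", "summarize", snapshot)
retry = runner.run("u1", "summarize", snapshot)
```

Even though the stand-in model is random, `retry` returns exactly what `first` returned, because the snapshot is frozen and the outcome is committed once per key.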

Authority Must Be Explicitly Limited

One of the most important boundaries in production AI systems is authority.

AI is exceptionally good at generating recommendations, explanations, and insights. Problems arise when those outputs silently cross into decision-making power.

Advisory vs Authoritative Intelligence

Bounded intelligence enforces a clear separation:

- AI systems generate advice, insights, and suggestions

- Deterministic systems apply rules, validations, and state changes

- Every mutation of system state passes through non-AI checks

This separation ensures that:

- AI errors do not directly corrupt state

- Decisions remain explainable and auditable

- Governance and compliance remain intact

AI informs the system. It does not replace it.
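The separation can be illustrated with a small gate in which a model-produced suggestion only reaches state after deterministic checks. Everything here is an assumption for the sake of the example: the `Suggestion` shape, the `issue_credit` action, and the policy limits are invented, not drawn from any real system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Suggestion:
    action: str
    amount: float
    rationale: str   # kept for audit; never used to authorize anything


ALLOWED_ACTIONS = {"issue_credit"}   # hypothetical policy
MAX_CREDIT = 50.0                    # hypothetical policy limit


def validate(suggestion: Suggestion) -> list[str]:
    """Deterministic, non-AI checks. Returns a list of rule violations."""
    errors = []
    if suggestion.action not in ALLOWED_ACTIONS:
        errors.append(f"action not permitted: {suggestion.action}")
    if not 0 < suggestion.amount <= MAX_CREDIT:
        errors.append("amount outside policy limit")
    return errors


def apply_suggestion(state: dict, suggestion: Suggestion) -> tuple[dict, list[str]]:
    """Mutate state only if every rule passes; otherwise return it unchanged."""
    errors = validate(suggestion)
    if errors:
        return state, errors
    new_state = {**state, "credits_issued": state["credits_issued"] + suggestion.amount}
    return new_state, []


state = {"credits_issued": 0.0}
ok_state, ok_errors = apply_suggestion(
    state, Suggestion("issue_credit", 20.0, "late delivery"))
bad_state, bad_errors = apply_suggestion(
    state, Suggestion("issue_credit", 500.0, "model was overconfident"))
```

The rejected suggestion leaves `state` untouched and produces a list of violated rules, which keeps the decision explainable and auditable.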

Degradation Is a Designed Capability

Every AI system will encounter:

- Slow retrieval

- Partial data

- Model unavailability

- Cost or quota limits

Bounded intelligence treats degradation as a first-class design requirement, not an afterthought.

Intentional Degradation Paths

Well-designed degradation may include:

- Reduced answer scope instead of speculation

- Summaries instead of detailed reasoning

- Cached insights instead of fresh inference

- Clear user prompts for missing information

What bounded systems must never do is pass off a guess as an answer or block indefinitely. Degradation is visible, explainable, and predictable.

This preserves user trust even when conditions are imperfect.
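A degradation path of this kind might look like the following sketch, where every response carries a `mode` label so the fallback is visible to the caller. The `Response` type, the `ModelUnavailable` error, and the in-memory cache are hypothetical simplifications.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Response:
    mode: str   # "fresh", "cached", or "needs_input"
    text: str


class ModelUnavailable(Exception):
    """Hypothetical error raised when inference cannot run."""


def respond(question: str, infer: Callable[[str], str], cache: dict) -> Response:
    """Try fresh inference, then a cached insight, then ask the user."""
    try:
        text = infer(question)
        cache[question] = text
        return Response("fresh", text)
    except ModelUnavailable:
        pass   # fall through to the degraded paths below
    if question in cache:
        return Response("cached", cache[question])
    return Response(
        "needs_input",
        "I can't answer that right now. Could you narrow the question or try again later?",
    )


def healthy(question: str) -> str:
    return f"Detailed answer to {question!r}"


def down(question: str) -> str:
    raise ModelUnavailable()


cache = {}
r1 = respond("battery status", healthy, cache)   # model is up
r2 = respond("battery status", down, cache)      # model is down, cache hit
r3 = respond("firmware notes", down, cache)      # model is down, cache miss
```

Because the mode is part of the response, the interface can tell the user whether an answer is fresh, cached, or unavailable instead of guessing.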

At Hoomanely, bounded intelligence is treated as a foundational design principle rather than a reactive safeguard.

AI systems are built to operate close to real-world signals—user interactions, device data, and behavioral patterns—where variability is inherent. In such environments, stability comes from constraints:

- Fixed inference budgets

- Clear separation between insight and action

- Time-aware decision pipelines

- Observable degradation behavior

The emphasis is not on extracting maximum intelligence from every request, but on delivering consistent intelligence across long-running user relationships.

Bounded intelligence ensures that AI remains dependable as usage scales, features evolve, and systems grow more interconnected.

The Outcomes of Bounded Intelligence

When AI systems are intentionally bounded, several effects compound over time:

- Latency distributions tighten

- Costs become predictable and controllable

- Outputs stabilize across sessions and retries

- Failures become understandable rather than mysterious

- Engineering teams regain operational confidence

Most importantly, users learn to trust the system—not because it is always perfect, but because it behaves consistently and transparently.

Takeaways

- Bounded intelligence is a production discipline, not a model limitation

- Context, time, concurrency, and authority must be explicitly constrained

- Retries are normal; divergence is not

- Advisory AI must remain separate from authoritative system state

- Graceful degradation is a feature, not a fallback

- Predictability beats occasional brilliance in real systems

AI systems earn trust not through spectacular outputs, but through reliable behavior over time.