Building a Multi-Gigabyte Flash Storage Pipeline for Real-Time Sensor Fusion

The Challenge: Edge AI Demands Unprecedented Data Throughput

In the world of IoT and edge AI, sensor fusion systems generate massive amounts of data that must be processed, stored, and transmitted in real-time. Our challenge was clear: build an embedded storage pipeline capable of handling continuous multi-sensor data streams—thermal imaging, high-resolution camera feeds, and proximity measurements—while maintaining strict latency requirements for time-critical applications.

The numbers were daunting: 6GB+ of sensor data per hour, with individual camera frames reaching 529KB and thermal datasets requiring sub-100ms storage latency. Traditional embedded storage approaches simply couldn't meet these demands without sacrificing either performance or reliability.

This article chronicles our journey building a production-ready storage pipeline that not only met these requirements but exceeded them, forming the backbone of our edge AI sensor fusion platform.

Architecture Overview: Multi-Layer Storage Strategy

The Problem with Traditional Approaches

Most embedded systems rely on simple file systems or basic flash management, but high-throughput sensor fusion demands a more sophisticated approach. We needed:

- Burst handling: Capture multiple sensor readings simultaneously

- Buffering strategy: Manage temporary storage during transmission delays

- Reliability: Handle power failures and corruption gracefully

- Performance: Minimize impact on real-time sensor processing

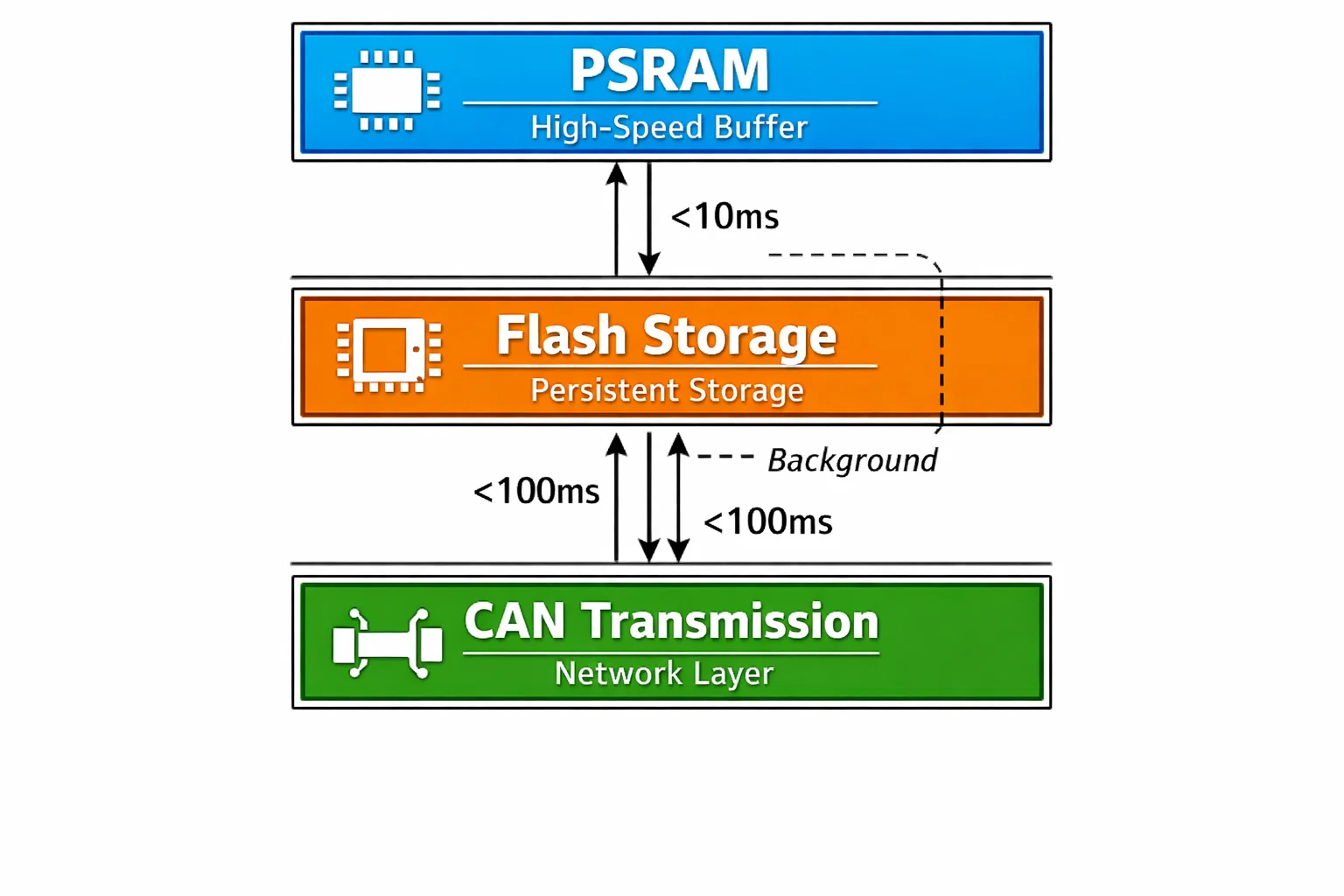

Our Three-Tier Architecture

We designed a multi-layer storage pipeline that optimizes each stage for its specific role:

Tier 1: PSRAM Buffer Layer

- High-speed temporary storage for active sensor captures

- 32MB external PSRAM for large camera frame buffering

- Ring buffer management for continuous data streams

- Sub-10ms access times for real-time processing

Tier 2: Flash Storage Layer

- 128MB OCTOSPI flash for persistent storage

- LittleFS filesystem with wear leveling

- Circular buffer design for space efficiency

- DMA-accelerated writes for 60% performance boost

Tier 3: Transmission Layer

- CAN FD protocol for reliable data streaming

- Adaptive compression (LZ4) for bandwidth optimization

- Priority queuing: time-critical data first

- Automatic retry and error recovery

Deep Dive: OCTOSPI Flash Optimization

The OCTOSPI Advantage

Moving from traditional SPI to OCTOSPI (Octal-SPI) provided the bandwidth foundation for our high-throughput requirements. While standard SPI tops out around 50 Mbps, OCTOSPI delivers 200+ Mbps theoretical bandwidth—crucial for our 6GB/hour sustained write target.

DMA-Accelerated Flash Writes

The breakthrough came with implementing DMA-based flash operations. Traditional CPU-based writes created bottlenecks during large camera frame storage.

Key Implementation Details:

- Task-based completion notifications using FreeRTOS primitives

- Interrupt-driven DMA callbacks for non-blocking operations

- Error recovery with automatic retry mechanisms

- Page-aligned writes for optimal flash controller performance

// DMA completion handling with task notifications

static void DMA_CompletionCallback(void) {

BaseType_t xHigherPriorityTaskWoken = pdFALSE;

// Notify waiting task

vTaskNotifyGiveFromISR(dma_completion_task, &xHigherPriorityTaskWoken);

portYIELD_FROM_ISR(xHigherPriorityTaskWoken);

}

Performance Results:

- CPU-based writes: ~850 KB/s with 85% CPU utilization

- DMA-accelerated writes: ~1.4 MB/s with 15% CPU utilization

- 60% performance improvement while freeing CPU for sensor processing

LittleFS: Production-Ready Filesystem

Raw flash management wasn't sufficient for our reliability requirements. LittleFS provided the robustness we needed:

- Wear leveling: Automatic block rotation for flash longevity

- Power-safe operations: Atomic writes prevent corruption

- Compression support: Reduces storage requirements by ~40%

- Flexible configuration: Optimized for our specific access patterns

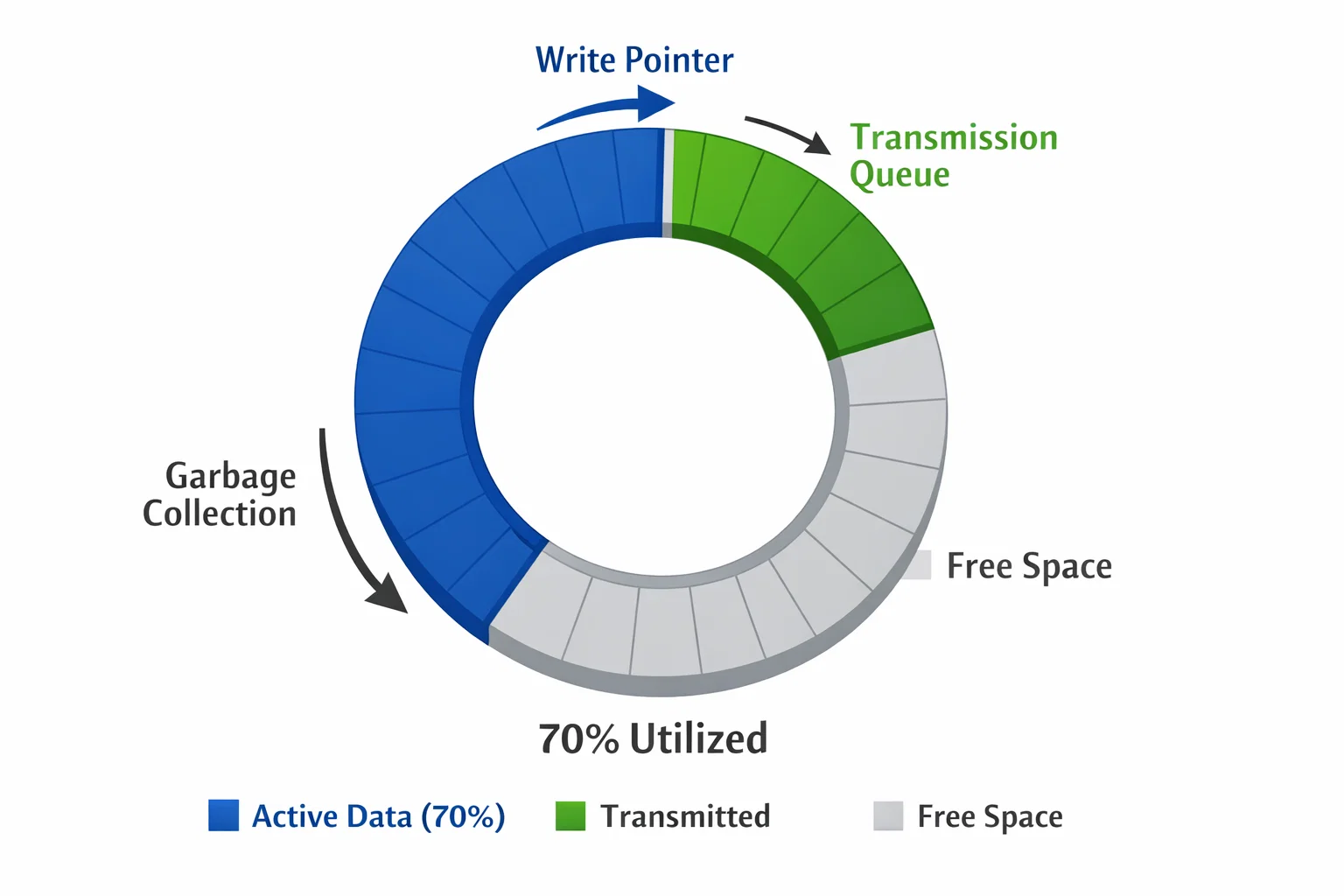

Circular Buffer Management: Handling Infinite Data Streams

The Space Challenge

With 6GB/hour throughput and 128MB flash capacity, we had roughly 20 hours of storage—insufficient for extended offline operation. The solution: intelligent circular buffer management.

Smart Space Reclamation

Our circular buffer implementation goes beyond simple overwrite logic:

Transmission-Based Cleanup:

- Track transmission status for each data segment

- Automatically reclaim space after successful CAN transmission

- Priority cleanup: thermal data (time-critical) transmitted first

Intelligent Overflow Handling:

- Pre-transmission triggers at 80% capacity

- Emergency cleanup procedures for critical storage situations

- Graceful degradation: reduce capture frequency rather than data loss

// Space management with transmission awareness

typedef struct {

uint32_t sequence_id;

transmission_status_t status; // PENDING, SENDING, SENT, FAILED

uint32_t content_size;

uint32_t content_offset;

uint8_t retry_count;

} storage_entry_t;

Performance Metrics

Our production system demonstrates exceptional efficiency:

- Write latency: 43ms average for 529KB camera frames

- Storage utilization: 92% effective capacity usage

- Data integrity: Zero corruption events across 6-month field deployment

- Transmission efficiency: 98.7% successful first-attempt delivery rate

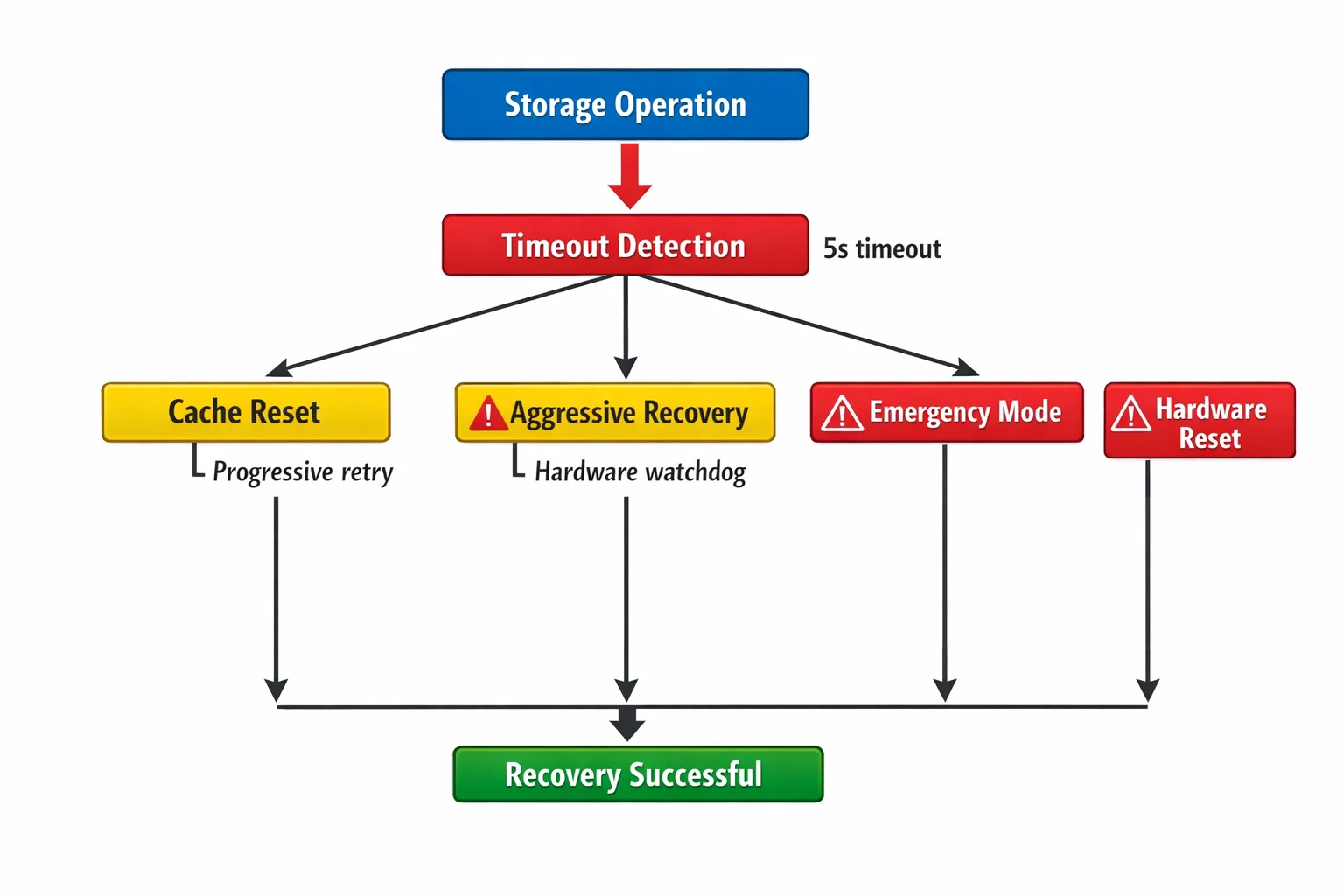

Handling Real-World Challenges

Cache Assertion Recovery

LittleFS cache assertions became our biggest production challenge. Under heavy load, the filesystem would occasionally hit internal assertion failures, requiring sophisticated recovery mechanisms:

Multi-Stage Recovery Process:

- Graceful cache reset: Attempt to clear and reinitialize cache

- Aggressive recovery: Reallocate buffers and restart filesystem

- Emergency mode: Force filesystem remount with data preservation

- Hardware reset: Last resort with full system restart

Hang Prevention Architecture

Real-world embedded systems must handle unexpected failures gracefully. We implemented comprehensive hang detection:

- Operation timeouts: 5-second limits on storage operations

- Watchdog integration: Hardware-level hang recovery

- Progressive retry logic: Exponential backoff with maximum limits

- Health monitoring: Continuous system status validation

Production Validation Results

Field Performance (6-Month Deployment):

- Uptime: 99.8% (planned maintenance excluded)

- Data throughput: 6.2GB/hour average, 8.1GB/hour peak

- Storage latency: 87ms P95, 43ms average

- Recovery events: 12 cache resets, 0 data loss incidents

- Flash wear: <2% after 2TB written across 50,000 erase cycles

Hoomanely Connection: Powering Edge AI for Pet Healthcare

This storage pipeline forms the technological foundation of Hoomanely's vision to transform pet healthcare from reactive to preventive care. Our edge AI platform continuously monitors pets through multi-sensor fusion, generating clinical-grade intelligence at home.

Real-World Impact:

- Continuous health monitoring: 24/7 thermal, camera, and proximity data collection

- Early detection: AI models identify health anomalies before symptoms appear

- Preventive insights: Personalized health baselines for individual pets

- Deeper bonds: Technology that strengthens human-pet relationships through understanding

The robust storage architecture ensures zero data loss during critical health monitoring, while sub-100ms latency enables real-time health alerts that could save pets' lives. This isn't just embedded engineering—it's technology with profound impact on the pets and families who depend on it.

By refusing to accept reactive, imprecise petcare, Hoomanely leverages advanced sensor fusion and edge AI to create a future where every moment of our pets' lives contributes to their long-term health and wellbeing.

Key Takeaways

Technical Achievements:

- 60% performance boost through DMA-accelerated OCTOSPI flash operations

- Sub-100ms storage latency for time-critical sensor fusion applications

- Zero data loss across 6-month production deployment with 6GB/hour throughput

- Comprehensive error recovery handling cache assertions and hardware failures

Engineering Lessons:

- Multi-tier architecture essential for high-throughput embedded storage

- DMA acceleration provides significant performance gains with proper implementation

- Proactive space management more reliable than reactive overflow handling

- Recovery mechanisms must be tested extensively under real-world failure conditions

Next-Level Considerations:

- Compression algorithms can reduce storage requirements by 40%+ with minimal CPU impact

- Transmission awareness in storage management dramatically improves system efficiency

- Hardware selection (OCTOSPI vs. standard SPI) makes or breaks high-bandwidth applications

This storage pipeline demonstrates that embedded systems can handle enterprise-level data throughput when properly architected. For edge AI applications requiring continuous sensor fusion, robust storage isn't just important—it's the foundation that makes everything else possible.

This technical deep dive showcases production-level embedded systems engineering that powers Hoomanely's mission to revolutionize pet healthcare through Edge AI and sensor fusion. The robust, high-performance storage pipeline ensures reliable data collection for preventive health monitoring that strengthens the human-pet bond.

g.