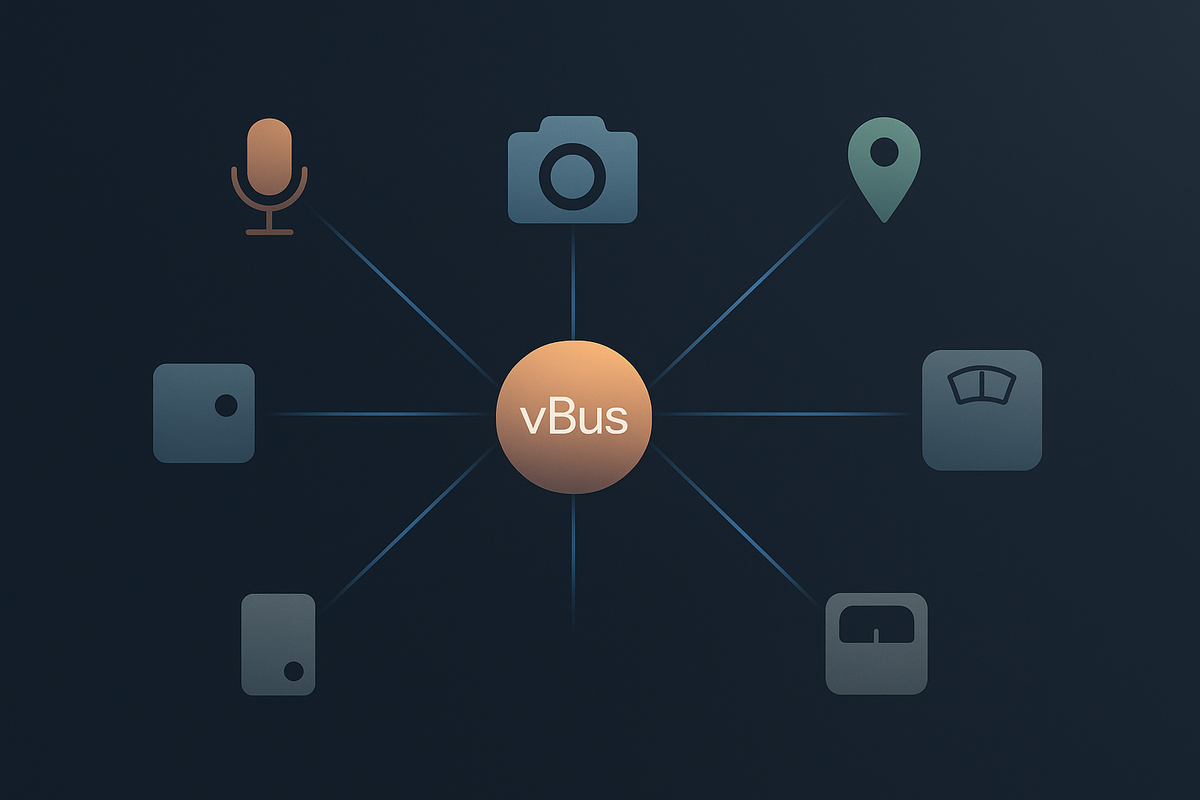

Building vBus: A Universal Sensor Protocol for Smart Pet Products

The Problem That Started Everything

At Hoomanely, we set out to build connected pet products that could genuinely understand pet behavior and health through multiple sensors working together.

Trackers needed to monitor activity, detect vocalizations, track location, and communicate over long distances.

Smart bowls needed to measure food consumption with precision, detect presence, capture video, and monitor audio.

The smart hub needed to orchestrate all incoming data, including data from its own sensors, and run edge compute on it in real time.

Each product required fundamentally different sensor types operating at wildly different scales—from discrete events to continuous streams.

The Initial Approach Failed

We started conventionally, with a different protocol for each sensor type: the standard approach, built on proven technology.

What went wrong:

Each sensor spoke a different protocol. Our microcontroller ran out of peripheral interfaces. We added converters. Firmware complexity exploded. Memory became scarce.

Battery-powered devices couldn't sleep effectively: they were constantly polling different interfaces and waking for every interrupt. We were off our power target by an order of magnitude.

Priority handling was impossible. Health alerts got blocked by diagnostic data. Software-level solutions added complexity without solving the fundamental issue.

Adding a new sensor took weeks: new driver code, new message formats, new filtering logic, extensive testing.

The smart bowl's sensor crystallized the problem: high-frequency data saturated the bus, preventing other sensors from communicating. We faced an impossible trade-off between precision and reliability.

The Realization

We weren't fighting individual technical challenges; we were fighting the wrong architecture. Multiple incompatible protocols forced us to treat fundamentally different sensors uniformly, while preventing effective coordination.

We needed one communication layer that could handle any sensor, regardless of its native protocol, while preserving each sensor's unique characteristics.

Semantic Transport Layer Fusion

The breakthrough insight: What if message semantics could participate in hardware-level routing decisions?

Traditional protocols separate concerns. The transport layer moves bytes; the application layer interprets meaning. This is elegant but wasteful: every received message wakes the CPU and consumes power for deserialization, and only then gets discarded if it is irrelevant.

vBus inverts this model by encoding semantic information directly into transport-layer addressing. Message type and subtype aren't just payload metadata; they're routing primitives that hardware can filter on before the CPU wakes.

The Three-Tier Semantic Hierarchy

Layer 1: Message Type (Command/Response/Event)

Layer 2: Message Subtype (specific capability identifier)

Layer 3: Structured Payload (capability-specific data)

This hierarchy maps directly to how multi-sensor systems work:

- Commands represent control flow (configuration, actuation)

- Responses close control loops (acknowledgment, status)

- Events represent data flow (sensor readings, state changes)
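To make the three layers concrete, here is a minimal host-side Python sketch of a message. It is a model for illustration, not vBus firmware: the numeric `MsgType` values, the 8-bit subtype range, and the subtype constants `SET_SAMPLE_RATE` and `WEIGHT_READING` are hypothetical.

```python
from dataclasses import dataclass
from enum import IntEnum


class MsgType(IntEnum):
    """Layer 1: which flow a message belongs to (numeric values are illustrative)."""

    COMMAND = 0   # control flow: configuration, actuation
    RESPONSE = 1  # closes a control loop: acknowledgment, status
    EVENT = 2     # data flow: sensor readings, state changes


@dataclass(frozen=True)
class Message:
    msg_type: MsgType  # Layer 1: message type
    subtype: int       # Layer 2: capability identifier (assumed to fit in 8 bits)
    payload: bytes     # Layer 3: capability-specific data, opaque to the transport

    def __post_init__(self) -> None:
        if not 0 <= self.subtype <= 0xFF:
            raise ValueError("subtype must fit in 8 bits")


# Hypothetical subtype numbers, for illustration only.
SET_SAMPLE_RATE = 0x10
WEIGHT_READING = 0x21

# A command and its response share a subtype; the event carries sensor data.
cmd = Message(MsgType.COMMAND, SET_SAMPLE_RATE, (50).to_bytes(2, "little"))
ack = Message(MsgType.RESPONSE, SET_SAMPLE_RATE, b"\x00")
evt = Message(MsgType.EVENT, WEIGHT_READING, (1234).to_bytes(4, "little", signed=True))
```

Keeping the payload opaque at this level is what allows the first two layers to be acted on without ever decoding Layer 3.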

By making this distinction first-class in the protocol, we enable:

- Hardware filtering by intent: "Wake me only for events, not commands"

- Priority inheritance: Event urgency encoded in routing, not payload

- Zero-copy discrimination: Discard irrelevant messages without deserialization

Framing: Intelligent Message Structure

Hardware-Level Filtering

By encoding message semantics in the transport-layer addressing, components can filter at the hardware level. A battery-powered processor that ignores certain message types never wakes its CPU for them: the hardware filter rejects those messages, so they never trigger an interrupt.
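A common hardware mechanism for this is a mask-and-match acceptance filter; CAN controllers, for example, typically provide filters of this kind. The sketch below assumes a hypothetical 29-bit identifier layout and a node that wants only events addressed to it. The names `make_id` and `accepts` and the bit positions are illustrative, not the actual vBus layout.

```python
# Hypothetical 29-bit identifier layout, assumed for this sketch only:
#   bits 28-26 priority | 25-24 message type | 23-16 destination | 15-8 source | 7-0 subtype
PRIORITY_SHIFT, TYPE_SHIFT, DEST_SHIFT, SRC_SHIFT = 26, 24, 16, 8
TYPE_MASK = 0x3 << TYPE_SHIFT
DEST_MASK = 0xFF << DEST_SHIFT
COMMAND, RESPONSE, EVENT = 0, 1, 2


def make_id(priority: int, msg_type: int, dest: int, src: int, subtype: int) -> int:
    return (
        (priority << PRIORITY_SHIFT)
        | (msg_type << TYPE_SHIFT)
        | (dest << DEST_SHIFT)
        | (src << SRC_SHIFT)
        | subtype
    )


def accepts(frame_id: int, mask: int, match: int) -> bool:
    """Mask-and-match acceptance test of the kind bus controllers apply in hardware.

    Only the identifier bits selected by `mask` are compared; the payload is never read.
    """
    return (frame_id & mask) == (match & mask)


# A battery-powered node at address 0x01 that only wants events addressed to it.
MY_ADDR = 0x01
filter_mask = TYPE_MASK | DEST_MASK
filter_match = (EVENT << TYPE_SHIFT) | (MY_ADDR << DEST_SHIFT)

frames = [
    (make_id(2, EVENT, MY_ADDR, 0x10, 0x21), b"\x00\x10"),  # event for us: accepted
    (make_id(1, COMMAND, MY_ADDR, 0x20, 0x10), b"\x32"),    # command for us: rejected
    (make_id(2, EVENT, 0x02, 0x10, 0x21), b"\x00\x20"),     # event for another node: rejected
]

# Only accepted frames would raise an interrupt and wake the CPU.
woken = [payload for frame_id, payload in frames if accepts(frame_id, filter_mask, filter_match)]
```

The rejected frames are discarded on identifier bits alone, which is the "zero-copy discrimination" described above.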

The addressing structure embeds:

Priority levels | Destination/Source | Message Subtype

The trade-off: A small amount of overhead per message enables orders of magnitude more power savings through avoided processing.
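One way to lay these fields out is to concatenate them into a single integer identifier, priority first. This sketch assumes specific widths (3-bit priority, 2-bit message type from the three-tier hierarchy, and 8 bits each for destination, source, and subtype) that sum to 29 bits, the size of a CAN-style extended identifier. `VbusId`, `pack`, and `unpack` are hypothetical names.

```python
from typing import NamedTuple

# Hypothetical field widths, most significant first. They sum to 29 bits so the
# identifier would fit a CAN-style extended ID. Both choices are assumptions.
FIELDS = (("priority", 3), ("msg_type", 2), ("dest", 8), ("src", 8), ("subtype", 8))
ID_BITS = sum(width for _name, width in FIELDS)


class VbusId(NamedTuple):
    priority: int
    msg_type: int
    dest: int
    src: int
    subtype: int


def pack(ident: VbusId) -> int:
    """Concatenate the fields, with priority in the most significant bits."""
    value = 0
    for (name, width), field in zip(FIELDS, ident):
        if not 0 <= field < (1 << width):
            raise ValueError(f"{name}={field} does not fit in {width} bits")
        value = (value << width) | field
    return value


def unpack(value: int) -> VbusId:
    """Inverse of pack(): peel fields off from the least significant end."""
    fields = []
    for _name, width in reversed(FIELDS):
        fields.append(value & ((1 << width) - 1))
        value >>= width
    return VbusId(*reversed(fields))


reading = VbusId(priority=1, msg_type=2, dest=0x01, src=0x10, subtype=0x21)
raw = pack(reading)
```

Because every field sits at a fixed bit offset, a filter or arbiter can act on it with a mask and a compare instead of a parse.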

Priority-Based Routing

The protocol supports multiple priority levels:

- Highest: Emergency events, critical health alerts

- High: Real-time sensor data requiring immediate action

- Medium: Regular sensor readings, status updates

- Low: Diagnostic data, background telemetry

High-priority messages preempt lower-priority processing at the hardware level through built-in arbitration, ensuring critical alerts are delivered quickly even under heavy data load.
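Built-in arbitration of this kind can be modeled on CAN-style bitwise arbitration, where 0 is the dominant bit and the numerically lowest identifier wins. Whether vBus uses exactly this mechanism is an assumption here; the sketch only shows why placing priority in the most significant identifier bits makes the most urgent message win. `make_id`, the bit layout, and the priority constants are hypothetical.

```python
ID_BITS = 29  # assumed identifier width (CAN-style extended ID)

# The four priority levels listed above; a lower value means more urgent.
EMERGENCY, REALTIME, REGULAR, DIAGNOSTIC = 0, 1, 2, 3


def make_id(priority: int, msg_type: int, dest: int, src: int, subtype: int) -> int:
    # Hypothetical layout with priority in the top bits so it decides arbitration first.
    return (priority << 26) | (msg_type << 24) | (dest << 16) | (src << 8) | subtype


def arbitrate(contenders: list[int]) -> int:
    """Simulate bitwise arbitration on a wired-AND bus (0 = dominant, as on CAN).

    Nodes transmit their identifiers MSB first. A node that sends a recessive 1
    but reads back a dominant 0 has lost and stops transmitting.
    """
    active = list(contenders)
    for bit in range(ID_BITS - 1, -1, -1):
        bus_level = min((frame_id >> bit) & 1 for frame_id in active)  # any 0 wins the bit
        active = [frame_id for frame_id in active if ((frame_id >> bit) & 1) == bus_level]
    if len(active) != 1:
        raise ValueError("identifiers contending on one bus must be unique")
    return active[0]


diagnostics = make_id(DIAGNOSTIC, 2, 0x01, 0x02, 0x01)
reading = make_id(REGULAR, 2, 0x01, 0x10, 0x21)
health_alert = make_id(EMERGENCY, 2, 0x01, 0x30, 0x7F)

winner = arbitrate([diagnostics, reading, health_alert])
```

The alert wins even though its source address is the largest of the three, because priority is compared first. Under this CAN-style model, priority decides which frame wins the next arbitration round; it does not cut off a frame that is already being transmitted.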

Multi-Layer Transport: Right Tool for Each Job

Unified Semantics, Optimized Transports

The same message structure works across multiple physical layers:

High-speed bus: Handles time-critical sensor data such as video frames, high-frequency motion data, and continuous analog streams. Provides hardware filtering, large payloads, and built-in error detection with automatic retransmission.

Low-speed control bus: Manages device configuration, status polling, and firmware updates. Uses a simple two-wire interface with support for multiple devices, flow control, and packet error checking.

Critical insight: Priority levels traverse transport boundaries intact. A high-priority message on the low-speed bus gets promoted appropriately when bridged to the high-speed bus. Message urgency is preserved end-to-end.
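A bridge can preserve urgency by reading the priority carried in the low-speed packet, re-encoding it into the high-speed identifier, and forwarding the most urgent packets first. In this sketch the low-speed packet header (priority, message type, source, subtype, one byte each), the identifier layout, and the `Bridge` class are all hypothetical.

```python
import heapq
from itertools import count


def make_id(priority: int, msg_type: int, dest: int, src: int, subtype: int) -> int:
    # Hypothetical high-speed identifier layout, priority in the top bits.
    return (priority << 26) | (msg_type << 24) | (dest << 16) | (src << 8) | subtype


class Bridge:
    """Forwards low-speed packets to the high-speed bus, most urgent first."""

    def __init__(self, dest: int) -> None:
        self.dest = dest
        self._queue: list[tuple[int, int, int, bytes]] = []
        self._seq = count()  # tie-breaker: FIFO order within one priority level

    def on_low_speed_packet(self, packet: bytes) -> None:
        if len(packet) < 4:
            raise ValueError("packet shorter than its header")
        # Hypothetical low-speed header: priority, message type, source, subtype.
        priority, msg_type, src, subtype = packet[:4]
        frame_id = make_id(priority, msg_type, self.dest, src, subtype)
        heapq.heappush(self._queue, (priority, next(self._seq), frame_id, packet[4:]))

    def drain(self) -> list[tuple[int, bytes]]:
        """Pop queued frames in priority order, ready for high-speed transmission."""
        sent = []
        while self._queue:
            _priority, _seq, frame_id, payload = heapq.heappop(self._queue)
            sent.append((frame_id, payload))
        return sent


bridge = Bridge(dest=0x01)
bridge.on_low_speed_packet(bytes([3, 2, 0x02, 0x01]) + b"\xaa")  # diagnostic, arrived first
bridge.on_low_speed_packet(bytes([0, 2, 0x30, 0x7F]) + b"\x01")  # health alert, arrived second
sent = bridge.drain()
```

The alert leaves the bridge first despite arriving second, and its identifier carries the same priority it had on the low-speed bus.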

Capability Discovery: Dynamic and Continuous

Self-Describing Components

When a component initializes, it broadcasts a structured capability descriptor:

Capabilities Advertised:

- Data streams offered (type, rate, precision)

- Commands supported (configuration parameters)

- Power modes available (performance vs. battery trade-offs)

- Protocol version and feature flags

The receiving system doesn't need prior knowledge of this specific sensor combination. It discovers capabilities and dynamically routes them to interested consumers.
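A capability descriptor, and the routing step that consumes it, might look like the following. The field names, the string `kind` used for matching, and the `Router` class are illustrative assumptions rather than the vBus wire format.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class StreamCap:
    subtype: int          # Layer 2 subtype the stream is published under
    kind: str             # what the data is, e.g. "weight" (illustrative)
    rate_hz: float
    precision_bits: int


@dataclass
class CapabilityDescriptor:
    node: int
    protocol_version: int
    feature_flags: int
    streams: list[StreamCap] = field(default_factory=list)
    commands: list[str] = field(default_factory=list)     # configurable parameters
    power_modes: list[str] = field(default_factory=list)


class Router:
    """Routes advertised streams to consumers by kind; no per-sensor configuration."""

    def __init__(self) -> None:
        self.interests: dict[str, list[str]] = {}
        self.routes: dict[tuple[int, int], list[str]] = {}

    def want(self, consumer: str, kind: str) -> None:
        self.interests.setdefault(kind, []).append(consumer)

    def on_descriptor(self, desc: CapabilityDescriptor) -> None:
        # Streams nobody asked for are simply not routed.
        for stream in desc.streams:
            consumers = self.interests.get(stream.kind)
            if consumers:
                self.routes[(desc.node, stream.subtype)] = list(consumers)


router = Router()
router.want("consumption_model", "weight")
router.on_descriptor(
    CapabilityDescriptor(
        node=0x10,
        protocol_version=1,
        feature_flags=0,
        streams=[StreamCap(0x21, "weight", 10.0, 16), StreamCap(0x30, "audio_level", 50.0, 12)],
        commands=["sample_rate"],
        power_modes=["low_power", "full"],
    )
)
```

In this sketch a consumer must declare interest before the descriptor arrives; a fuller router would also re-scan known descriptors when a new interest is registered.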

Key insight: The capability descriptor itself is versioned and extensible. Future sensors can advertise capabilities that don't exist today, and old firmware can gracefully ignore unknown capabilities while utilizing known ones.
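Extensibility of this kind is commonly achieved with a tag-length-value (TLV) encoding: because every entry carries its own length, a decoder can step over tags it does not recognize. The sketch assumes a 1-byte tag and a 1-byte length; the tag numbers in `KNOWN_TAGS` and the packed stream format are hypothetical.

```python
import struct

# Tags this (older) firmware understands; the numbers are hypothetical.
KNOWN_TAGS = {0x01: "protocol_version", 0x02: "feature_flags", 0x10: "stream"}


def encode(entries: list[tuple[int, bytes]]) -> bytes:
    """Tag-length-value: 1-byte tag, 1-byte length, then `length` bytes of value."""
    out = bytearray()
    for tag, value in entries:
        if len(value) > 0xFF:
            raise ValueError("value too long for a 1-byte length")
        out += bytes([tag, len(value)]) + value
    return bytes(out)


def decode_known(blob: bytes) -> tuple[dict[str, list[bytes]], list[int]]:
    """Collect entries with known tags; step over unknown ones using their length."""
    known: dict[str, list[bytes]] = {}
    skipped: list[int] = []
    i = 0
    while i < len(blob):
        if i + 2 > len(blob):
            raise ValueError("truncated entry header")
        tag, length = blob[i], blob[i + 1]
        value = blob[i + 2 : i + 2 + length]
        if len(value) != length:
            raise ValueError("truncated entry value")
        i += 2 + length
        if tag in KNOWN_TAGS:
            known.setdefault(KNOWN_TAGS[tag], []).append(value)
        else:
            skipped.append(tag)  # a capability from newer firmware: ignored, not an error
    return known, skipped


# A descriptor from a newer sensor, including a tag (0x7E) this decoder has never seen.
blob = encode([
    (0x01, b"\x02"),
    (0x02, b"\x00"),
    (0x10, struct.pack("<BHB", 0x21, 10, 16)),  # subtype, rate in Hz, precision bits
    (0x7E, b"future capability"),
])
known, skipped = decode_known(blob)
```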

Continuous Renegotiation

Capabilities adapt to runtime conditions:

- Battery state changes → Renegotiate sampling rates and power modes

- Thermal conditions → Adjust duty cycles and disable high-power features

- Network congestion → Switch from raw data streams to processed summaries

- Feature activation → Request higher fidelity only when needed

This negotiation happens through the same Command/Response protocol—no separate configuration channel required.
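As a sketch of renegotiation over Command/Response, here a consumer requests a sample rate and the sensor replies with the rate it actually granted given its battery state. The subtype `SET_SAMPLE_RATE`, the battery bands, and the rate ceilings in `MAX_RATE` are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass
from enum import IntEnum


class MsgType(IntEnum):
    COMMAND = 0
    RESPONSE = 1
    EVENT = 2


SET_SAMPLE_RATE = 0x10  # hypothetical subtype


@dataclass(frozen=True)
class Message:
    msg_type: MsgType
    subtype: int
    payload: bytes


class SensorNode:
    """Grants the requested rate, clamped to what the current power budget allows."""

    MAX_RATE = {"full": 200, "medium": 50, "low": 5}  # hypothetical ceilings in Hz

    def __init__(self) -> None:
        self.battery = "full"
        self.rate_hz = 100

    def handle(self, msg: Message) -> Message:
        if msg.msg_type is not MsgType.COMMAND or msg.subtype != SET_SAMPLE_RATE:
            raise ValueError("unsupported message")
        requested = int.from_bytes(msg.payload, "little")
        self.rate_hz = min(requested, self.MAX_RATE[self.battery])
        # The Response carries the granted value, closing the control loop.
        return Message(MsgType.RESPONSE, SET_SAMPLE_RATE, self.rate_hz.to_bytes(2, "little"))


def request_rate(node: SensorNode, hz: int) -> int:
    reply = node.handle(Message(MsgType.COMMAND, SET_SAMPLE_RATE, hz.to_bytes(2, "little")))
    return int.from_bytes(reply.payload, "little")


node = SensorNode()
first = request_rate(node, 100)   # battery full: request granted as-is
node.battery = "low"
second = request_rate(node, 100)  # same request after the battery drops
```

Because the Response carries the granted value rather than a bare acknowledgment, the consumer learns the outcome of the negotiation without a separate status query.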

Dual-Capability Sensor Abstraction

Demand-Driven Operation

A single physical sensor can advertise multiple capabilities simultaneously.

A weight sensor might advertise:

- Raw readings at high frequency (for calibration)

- Consumption events (threshold-based detection)

- Continuous monitoring (averaged readings at lower frequency)

Consumers subscribe to the capability that matches their needs. The sensor responds to actual demand.

The efficiency gain: When no consumer subscribes to raw data, the sensor never transmits it. When the battery is low, high-frequency capabilities can be disabled while keeping essential event detection active.

This is demand-driven sensor operation where data production automatically matches consumption.
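Demand-driven operation can be sketched as a sensor that checks its subscriber lists before producing each output. The capability names follow the weight-sensor example above; the class name, the event threshold, the averaging window, and the rule that only `raw` is shed on low battery are assumptions for illustration.

```python
from typing import Optional


class WeightSensor:
    """Hypothetical demand-driven weight sensor: it emits only what someone subscribed to."""

    CAPABILITIES = ("raw", "consumption_event", "average")
    HIGH_RATE = {"raw"}       # shed when the battery is low
    EVENT_THRESHOLD_G = 5.0   # assumed weight drop that counts as eating
    AVG_WINDOW = 10           # assumed samples per averaged reading

    def __init__(self) -> None:
        self.subscribers: dict[str, set[str]] = {cap: set() for cap in self.CAPABILITIES}
        self.low_battery = False
        self._prev: Optional[float] = None
        self._window: list[float] = []

    def subscribe(self, consumer: str, capability: str) -> None:
        self.subscribers[capability].add(consumer)

    def unsubscribe(self, consumer: str, capability: str) -> None:
        self.subscribers[capability].discard(consumer)

    def active(self) -> set[str]:
        """Capabilities with at least one subscriber that the power budget allows."""
        return {
            cap
            for cap, subs in self.subscribers.items()
            if subs and not (self.low_battery and cap in self.HIGH_RATE)
        }

    def on_sample(self, grams: float) -> list[tuple[str, float]]:
        """Process one reading; return only the messages that would be transmitted."""
        active = self.active()
        out: list[tuple[str, float]] = []
        if "raw" in active:
            out.append(("raw", grams))
        if "consumption_event" in active and self._prev is not None:
            eaten = self._prev - grams
            if eaten >= self.EVENT_THRESHOLD_G:
                out.append(("consumption_event", eaten))
        self._prev = grams
        if "average" in active:
            self._window.append(grams)
            if len(self._window) == self.AVG_WINDOW:
                out.append(("average", sum(self._window) / self.AVG_WINDOW))
                self._window.clear()
        return out


sensor = WeightSensor()
sensor.subscribe("bowl_app", "consumption_event")
quiet = [sensor.on_sample(g) for g in (500.0, 500.0, 480.0)]  # only the 20 g drop is sent
sensor.subscribe("calibration", "raw")
with_raw = sensor.on_sample(480.0)
sensor.low_battery = True
shed = sensor.on_sample(470.0)  # raw is dropped, the consumption event still goes out
```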

Why This Matters Beyond Pet Tech

The innovations in vBus generalize to any multi-sensor, power-constrained system:

- Wearables: Activity trackers, health monitors, AR glasses

- IoT devices: Smart home sensors, environmental monitoring

- Automotive: Sensor fusion for ADAS, in-cabin monitoring

- Industrial: Distributed sensor networks in manufacturing

The common thread: diverse sensors, real-time requirements, power constraints, evolving feature sets.

Impact on Development

Before vBus: Adding a new sensor type took weeks of integration work.

After vBus: Adding a new sensor takes days. Define its capabilities, implement the sensor interface, and test the integration.

The unlock: Engineers focus on sensor innovation rather than communication infrastructure.

Conclusion

vBus solved our immediate problem: connecting diverse sensors within and across our products. The real value is long-term: rapid experimentation with new sensors, seamless field updates, and a platform that scales from prototype to production.

The innovation is in the integration: how semantic information flows through hardware filtering, how capabilities negotiate continuously, how synchronization happens as a byproduct of normal communication, how one protocol handles everything from precision measurements to video streams.

For Hoomanely, this means rapid product iteration. For the industry, this demonstrates a new model for building multi-sensor systems that are both powerful and practical.

We're not just connecting sensors. We're redefining how sensors communicate.