Designing Audio Anomaly Detection for Pets: A Real‑World Engineering Blueprint

Detecting when something "sounds wrong" during a pet's mealtime seems simple - until you try to engineer it. Background noise, human speech, utensils clinking, bowls moving, fans humming, and wildly different pet eating styles create a dense acoustic jungle. Within this chaos, the goal is to identify subtle anomalies: hesitation, discomfort, choking-like patterns, sudden stops, or unusually loud gulps.

At Hoomanely, where we build multi-sensor systems like EverSense and EverBowl, audio becomes a silent guardian. We don’t mention the hardware often, but it matters in the background - enabling real-world, edge‑deployed models that must run reliably in kitchens, balconies, farms, apartments, and everything in between.

This article breaks down the engineering blueprint behind a robust, production-ready audio anomaly detection pipeline for pets. The approach is generic enough for any consumer IoT setup, yet deeply shaped by lessons from deploying real systems in the wild.

1. The Real Problem: Audio Is Messy

In controlled lab conditions, anomaly detection looks elegant. In homes, it quickly becomes survival engineering.

Challenges engineers face:

- Heterogeneous noise: fans, TV, human speech, traffic, utensils.

- Pet variability: different jaw sizes, eating speeds, chewing rhythms.

- Room acoustics: echoey kitchens vs soft-carpeted living rooms.

- Hardware limits: small microphones, low-power processors, small models.

The goal isn’t to eliminate all noise - it’s to extract consistent embeddings that tell normal vs abnormal behavior apart.

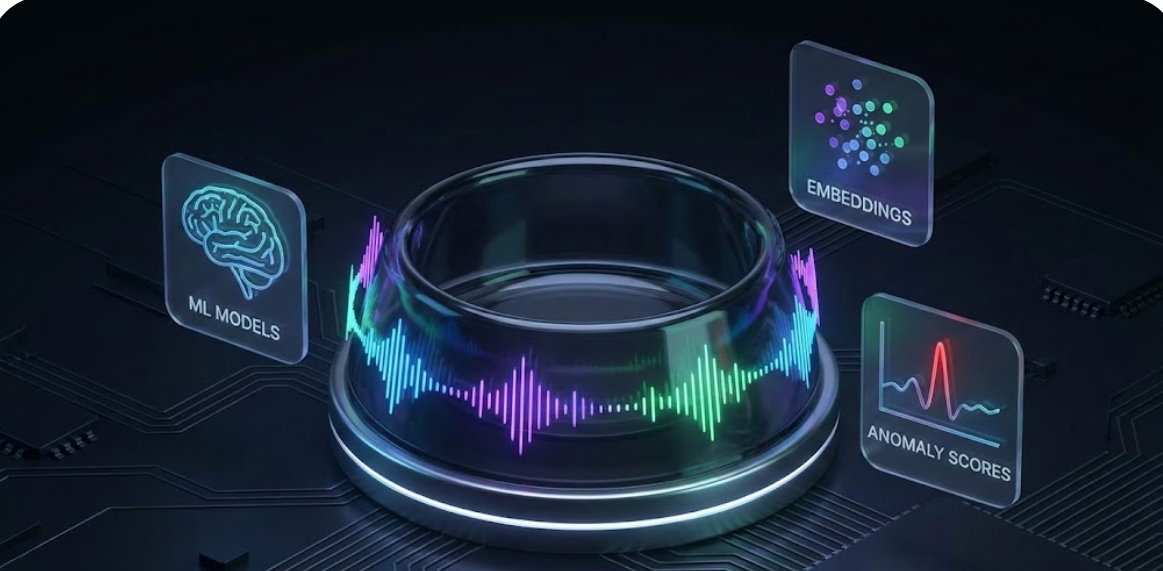

2. Our Approach: A Two‑Stage System

The most reliable blueprint is a two‑stage pipeline:

- Eating Activity Classifier - detects whether the sound corresponds to eating.

- Anomaly Scorer - learns what “normal” eating looks like for this specific pet.

Many systems mix both into one model, but separating them makes everything easier: explainability, debugging, personalization, and scalability.
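A minimal sketch of that separation. Both models are reduced to plain callables, so each stage's output stays visible for every window. The `run_two_stage` function, the `WindowResult` record, the 0.8 threshold, and the toy energy-based stand-ins are hypothetical placeholders, not the production models.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Sequence

import numpy as np


@dataclass
class WindowResult:
    index: int
    p_eating: float                  # Stage 1 output
    anomaly: Optional[float] = None  # Stage 2 output; None when Stage 1 gated the window out


def run_two_stage(
    windows: Sequence[np.ndarray],
    classify: Callable[[np.ndarray], float],
    score: Callable[[np.ndarray], float],
    p_threshold: float = 0.8,
) -> List[WindowResult]:
    """Run the eating classifier on every window and the anomaly scorer only on eating windows."""
    results = []
    for i, window in enumerate(windows):
        p = classify(window)
        anomaly = score(window) if p >= p_threshold else None
        results.append(WindowResult(i, p, anomaly))
    return results


# Hypothetical toy stand-ins: "eating" means high energy, anomaly is distance from energy 1.0
def toy_classify(w: np.ndarray) -> float:
    return float(min(1.0, np.mean(w ** 2)))


def toy_score(w: np.ndarray) -> float:
    return float(abs(np.mean(w ** 2) - 1.0))


windows = [np.full(10, v) for v in (0.1, 1.0, 2.0)]
for r in run_two_stage(windows, toy_classify, toy_score):
    print(r)
```

Because each record keeps both outputs, a surprising result can be traced to either the classifier or the scorer independently.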

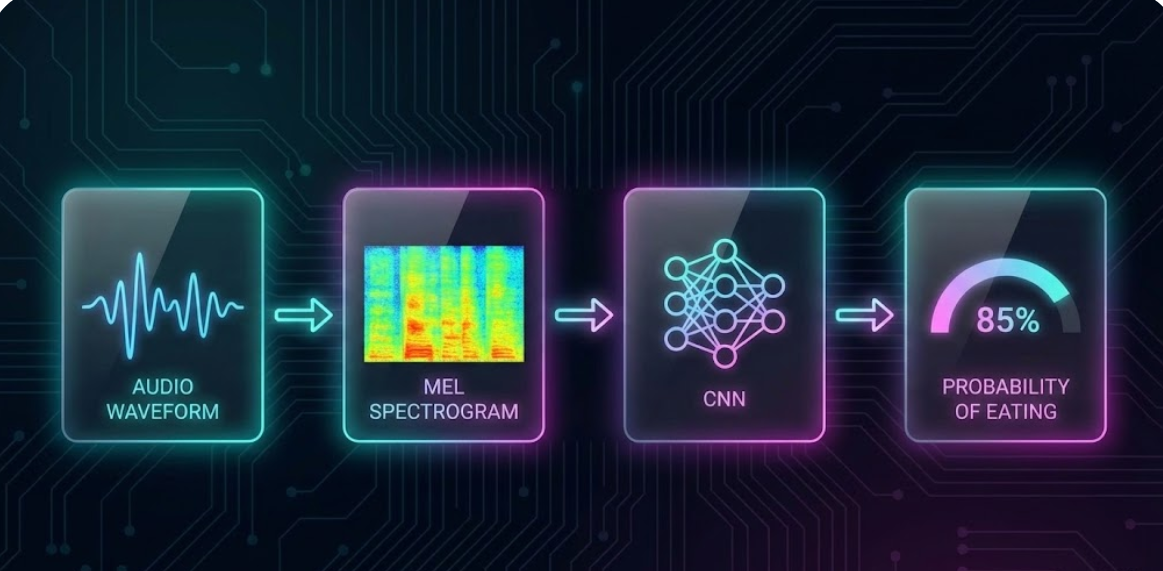

3. Stage 1 - Classifying Eating vs Non‑Eating

We start with a simple, reproducible recipe.

Step 1: Audio → Mel-Spectrogram

The audio is cut into short, overlapping frames (hop of roughly 20–40 ms), and each frame's spectrum is mapped onto 64–128 Mel bins.

This gives us a time–frequency image.
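A minimal NumPy sketch of this step. It assumes 16 kHz mono audio, a 40 ms Hann window, a 20 ms hop, and 64 HTK-style Mel bins. These parameter values and the helper names are illustrative; in practice a library routine such as `librosa.feature.melspectrogram` does the same job.

```python
import numpy as np


def hz_to_mel(f):
    # HTK-style Mel scale
    return 2595.0 * np.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_filterbank(sr, n_fft, n_mels, fmin=0.0, fmax=None):
    """Triangular filters mapping an rfft power spectrum onto n_mels bands."""
    fmax = fmax if fmax is not None else sr / 2.0
    # n_mels + 2 equally spaced points on the Mel scale give the band edges
    hz_points = mel_to_hz(np.linspace(hz_to_mel(fmin), hz_to_mel(fmax), n_mels + 2))
    fft_freqs = np.fft.rfftfreq(n_fft, d=1.0 / sr)
    fb = np.zeros((n_mels, fft_freqs.size))
    for m in range(n_mels):
        left, center, right = hz_points[m], hz_points[m + 1], hz_points[m + 2]
        rising = (fft_freqs - left) / (center - left)
        falling = (right - fft_freqs) / (right - center)
        fb[m] = np.clip(np.minimum(rising, falling), 0.0, None)
    return fb


def log_mel_spectrogram(audio, sr=16000, win_ms=40, hop_ms=20, n_mels=64, eps=1e-10):
    """Return a (n_mels, n_frames) log-Mel 'image' of a mono signal."""
    win = int(sr * win_ms / 1000)
    hop = int(sr * hop_ms / 1000)
    if len(audio) < win:
        raise ValueError("audio is shorter than one analysis window")
    n_fft = int(2 ** np.ceil(np.log2(win)))  # next power of two >= window length
    window = np.hanning(win)
    n_frames = 1 + (len(audio) - win) // hop
    frames = np.stack([audio[i * hop:i * hop + win] * window for i in range(n_frames)])
    power = np.abs(np.fft.rfft(frames, n=n_fft, axis=1)) ** 2  # (n_frames, n_fft // 2 + 1)
    mel = power @ mel_filterbank(sr, n_fft, n_mels).T           # (n_frames, n_mels)
    return np.log(mel + eps).T                                  # log compression
```

For one second of 16 kHz audio, this yields a 64 × 49 array: 64 Mel bins by 49 frames.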

Step 2: CNN for Local Acoustic Patterns

Eating sounds contain bursts, crunches, and rhythmic textures. A small CNN excels here: its local filters pick up these short patterns, and it stays lightweight enough for the edge.

Step 3: Output = P(eating)

We only pass high-confidence windows downstream.
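To make Steps 2 and 3 concrete, here is a NumPy sketch of a forward pass through a deliberately tiny, untrained CNN: one 3×3 convolution layer with 64 filters, ReLU, global average pooling, and a logistic output for P(eating). The `TinyEatingCNN` name, layer sizes, random weights, and the 0.8 threshold are assumptions for illustration; a real model would be trained before deployment. The pooled hidden activations also serve as a 64-D embedding, in the spirit of using the last hidden layer.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


class TinyEatingCNN:
    """Hypothetical single-conv-layer classifier; weights are random, not trained."""

    def __init__(self, n_filters=64, kernel=3, seed=0):
        rng = np.random.default_rng(seed)
        self.kernel = kernel
        self.conv_w = rng.normal(0.0, 0.1, size=(n_filters, kernel, kernel))
        self.conv_b = np.zeros(n_filters)
        self.head_w = rng.normal(0.0, 0.1, size=n_filters)
        self.head_b = 0.0

    def embed(self, log_mel):
        """(n_mels, n_frames) log-Mel patch -> (n_filters,) embedding."""
        # All k x k patches: shape (n_mels - k + 1, n_frames - k + 1, k, k)
        patches = sliding_window_view(log_mel, (self.kernel, self.kernel))
        # Valid 2-D cross-correlation with every filter -> (n_filters, H', W')
        fmap = np.einsum("hwij,fij->fhw", patches, self.conv_w)
        fmap += self.conv_b[:, None, None]
        fmap = np.maximum(fmap, 0.0)   # ReLU
        return fmap.mean(axis=(1, 2))  # global average pool

    def predict_proba(self, log_mel):
        """Return (P(eating), embedding) for one window."""
        z = self.embed(log_mel)
        logit = self.head_w @ z + self.head_b
        return 1.0 / (1.0 + np.exp(-logit)), z


model = TinyEatingCNN()
windows = np.random.default_rng(1).normal(size=(5, 64, 150))  # 5 stand-in log-Mel windows
probs = np.array([model.predict_proba(w)[0] for w in windows])
keep = probs >= 0.8  # only high-confidence eating windows go to Stage 2
```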

4. Stage 2 - Embeddings + Personalized Anomaly Detection

Once we isolate eating windows, we embed them.

Why embeddings?

Embeddings compress acoustic behavior into a vector capturing:

- chewing force

- frequency distribution

- rhythm consistency

- texture of crunch

For anomaly detection, embeddings are gold.

Step 1: Extract zₜ (64–128D embedding)

We use the last hidden layer of the CNN or a small GRU.

Step 2: Build a pet-specific baseline

For each pet, collect ~10–20 normal meals.

Compute:

- mean vector μ

- covariance matrix Σ

Together, these define "normal eating behavior" for that pet.
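A minimal NumPy sketch of building the baseline from the embeddings of those normal meals, stacked row-wise. When the number of baseline windows is small relative to the 64–128 embedding dimensions, the raw sample covariance can be singular. This sketch therefore shrinks it toward its diagonal and adds a tiny ridge. That regularization, the `shrinkage` value, and the function name are assumptions, not part of the method described above.

```python
import numpy as np


def build_baseline(Z, shrinkage=0.1, ridge=1e-6):
    """Z: (n_windows, d) embeddings from normal meals -> (mu, Sigma)."""
    Z = np.asarray(Z, dtype=float)
    mu = Z.mean(axis=0)                # mean vector μ
    S = np.cov(Z, rowvar=False)        # (d, d) sample covariance
    # Assumed regularization: blend with the diagonal, add a small ridge so Sigma stays invertible
    Sigma = (1.0 - shrinkage) * S + shrinkage * np.diag(np.diag(S))
    Sigma += ridge * np.eye(Z.shape[1])
    return mu, Sigma


# Example: 20 windows of 64-D embeddings (random stand-ins for real data)
Z = np.random.default_rng(0).normal(size=(20, 64))
mu, Sigma = build_baseline(Z)
```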

Step 3: Score new meals

We compute anomaly scores using something like:

import numpy as np
score = (z_t - μ) @ np.linalg.inv(Σ) @ (z_t - μ)  # squared Mahalanobis distance

This gives a continuous, per-window anomaly estimate.
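The one-line score can be vectorized over all windows of a meal. This sketch solves against a Cholesky factor of Σ instead of forming Σ⁻¹ explicitly, which is the usual numerically safer route. It returns the squared Mahalanobis distance; take the square root for the distance itself. The function name is illustrative.

```python
import numpy as np


def mahalanobis_sq(Z, mu, Sigma):
    """Squared Mahalanobis distance of each row of Z (n, d) from the baseline (mu, Sigma)."""
    L = np.linalg.cholesky(Sigma)   # Sigma = L @ L.T (requires positive definite Sigma)
    diff = np.atleast_2d(Z) - mu    # (n, d)
    # Solve L @ y = diff.T; then sum(y**2) per column equals diff Sigma^-1 diff^T
    y = np.linalg.solve(L, diff.T)  # (d, n)
    return np.sum(y ** 2, axis=0)
```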

Step 4: Final meal score

Aggregate across all eating windows.

Common aggregation strategies:

- percentile thresholding

- max-pooling

- temporal smoothing

This final score determines whether the meal was normal or unusual.
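The three aggregation options can be sketched in a single function. The method names, the 95th percentile, the 3-window smoothing length, and the function name are assumptions for illustration. Whichever option is used, its output would then be compared against a per-pet threshold.

```python
import numpy as np


def meal_score(window_scores, method="percentile", q=95, smooth=3):
    """Collapse per-window anomaly scores for one meal into a single number."""
    s = np.asarray(window_scores, dtype=float)
    if method == "percentile":
        # Percentile thresholding: less dominated by a single spike than the max
        return float(np.percentile(s, q))
    if method == "max":
        # Max-pooling: the single most anomalous window decides
        return float(s.max())
    if method == "smoothed_max":
        # Temporal smoothing (moving average) first, then the peak, so only
        # anomalies that persist across neighbouring windows score high
        k = min(smooth, s.size)
        return float(np.convolve(s, np.ones(k) / k, mode="valid").max())
    raise ValueError(f"unknown method: {method}")
```

A one-window spike such as `[0, 0, 0, 10, 0]` scores 10 under `"max"` but only about 3.3 under `"smoothed_max"`, which is why smoothing suits conservative alerting.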

5. Why Personalization Matters

Dogs differ from one another - sometimes more than humans do.

A large-breed dog’s eating pattern can be 10× louder and crunchier than a toy breed’s. Using a single global model leads to:

- frequent false alarms

- poor sensitivity, with anomalies missed for quieter eaters

- poor user trust

Personalization fixes this.

At Hoomanely, personalization is embedded across many pipelines - thermal calibration, identity recognition, food volume estimation - and audio anomaly detection aligns with that philosophy.

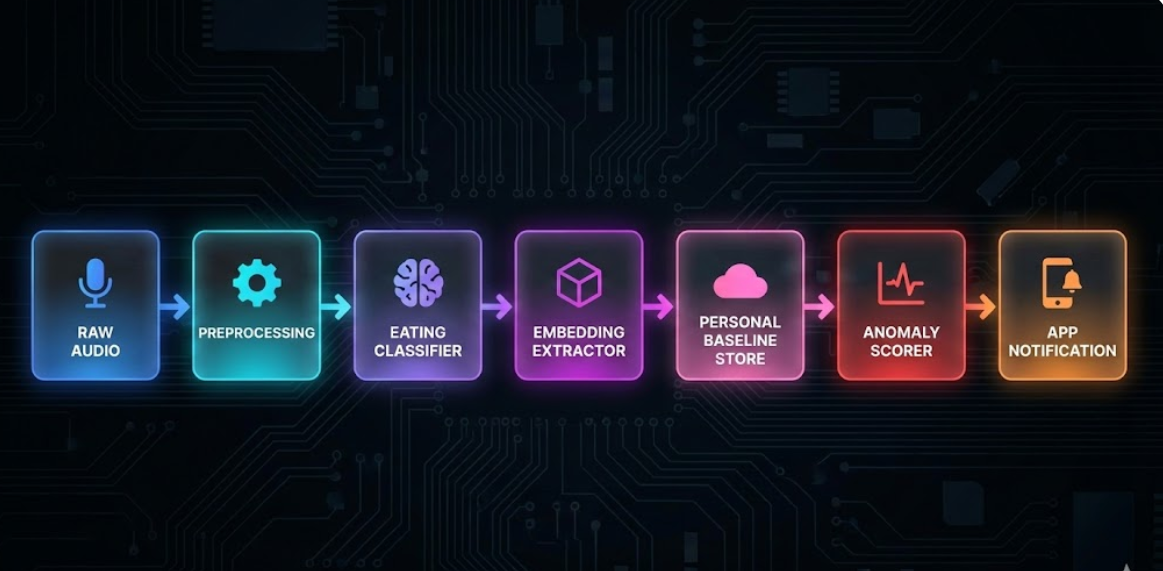

6. Engineering the Full Pipeline (End-to-End)

Here’s the architecture many real-world systems converge to, broken down by where each step runs:

- On-device: preprocessing, eating detection, embedding extraction.

- Cloud: baseline storage, anomaly scoring, dashboarding.

- App: alerting, timeline visualization.

Keeping the heavy computation on-device protects privacy and keeps latency low. Cloud storage helps track trends over weeks and months.

7. Practical Considerations That Matter

1. Windowing strategy

Short windows capture details, long windows capture rhythm.

Mixed-window strategies that combine both scales give the model fine detail and rhythm at the same time.
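A minimal framing sketch for a mixed-window strategy. It assumes 16 kHz audio, 0.5 s short windows, and 3 s long windows, both with 50% overlap. These durations and the `frame_signal` helper are illustrative choices, not tuned values.

```python
import numpy as np


def frame_signal(audio, sr, win_s, hop_s):
    """Split a 1-D signal into overlapping windows: (n_windows, win_samples)."""
    win = int(round(win_s * sr))
    hop = int(round(hop_s * sr))
    if len(audio) < win:
        return np.empty((0, win))
    n = 1 + (len(audio) - win) // hop
    # Row i holds samples [i * hop, i * hop + win)
    idx = np.arange(win)[None, :] + hop * np.arange(n)[:, None]
    return audio[idx]


sr = 16000
audio = np.zeros(10 * sr)                                         # 10 s placeholder clip
short_windows = frame_signal(audio, sr, win_s=0.5, hop_s=0.25)    # detail: crunches, gulps
long_windows = frame_signal(audio, sr, win_s=3.0, hop_s=1.5)      # rhythm: chewing cadence, pauses
```

Short-window embeddings and long-window features can then be scored separately or concatenated per long window.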

2. Noise suppression

You don’t need perfect denoising.

You need consistency.

Use:

- simple band-pass filters

- log-Mel compression

- per-window energy normalization
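Log-Mel compression is the same log-compressed Mel transform used as the Stage 1 input, so this sketch covers the other two steps for a single window. It assumes a 100 Hz to 6 kHz pass band, which is a placeholder rather than a measured band for eating sounds. It also uses a crude FFT-mask band-pass instead of a designed filter such as a Butterworth. The function names are hypothetical.

```python
import numpy as np


def bandpass_fft(window, sr, low_hz=100.0, high_hz=6000.0):
    """Zero out FFT bins outside [low_hz, high_hz] (a crude, zero-phase band-pass)."""
    spectrum = np.fft.rfft(window)
    freqs = np.fft.rfftfreq(len(window), d=1.0 / sr)
    spectrum[(freqs < low_hz) | (freqs > high_hz)] = 0.0
    return np.fft.irfft(spectrum, n=len(window))


def normalize_energy(window, target_rms=0.1, eps=1e-8):
    """Scale a window to a fixed RMS so loud and quiet rooms look alike to the model."""
    rms = np.sqrt(np.mean(window ** 2))
    return window * (target_rms / (rms + eps))


def preprocess_window(window, sr=16000):
    # Band-pass first so out-of-band noise does not distort the energy estimate
    return normalize_energy(bandpass_fft(window, sr))
```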

3. Handling bowl movement

Bowl drags produce strong low-frequency noise.

Either add them as a separate class in the eating classifier or filter them out before embedding.
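If bowl drags are filtered out rather than modeled as their own class, one simple heuristic is to flag windows whose spectral energy is concentrated at low frequencies. The 150 Hz cutoff and the 0.6 ratio are placeholder assumptions that would need tuning on recorded drags. The function names are hypothetical.

```python
import numpy as np


def low_freq_ratio(window, sr, cutoff_hz=150.0):
    """Fraction of a window's spectral power below cutoff_hz."""
    power = np.abs(np.fft.rfft(window)) ** 2
    freqs = np.fft.rfftfreq(len(window), d=1.0 / sr)
    total = power.sum()
    return float(power[freqs < cutoff_hz].sum() / total) if total > 0 else 0.0


def looks_like_bowl_drag(window, sr=16000, cutoff_hz=150.0, max_ratio=0.6):
    # Assumed rule: mostly low-frequency energy means drag, not chewing
    return low_freq_ratio(window, sr, cutoff_hz) > max_ratio
```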

4. When to alert users

We found it’s best to:

- alert only on sustained anomalies

- avoid alerts for the first 2–3 meals during personalization
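Both rules can be sketched as a small policy object. It stays silent during a warm-up period, then alerts only when several recent meals exceed the threshold. Here "sustained" is interpreted as "2 of the last 3 meals", and the warm-up is set to 3 meals. That interpretation, the threshold, and the `AlertPolicy` name are hypothetical choices.

```python
from collections import deque


class AlertPolicy:
    """Hypothetical conservative alerting rule over per-meal anomaly scores."""

    def __init__(self, threshold, warmup_meals=3, window=3, min_hits=2):
        self.threshold = threshold
        self.warmup_meals = warmup_meals
        self.min_hits = min_hits
        self.meals_seen = 0
        self.recent = deque(maxlen=window)  # True/False for each recent post-warm-up meal

    def update(self, meal_score):
        """Record one meal's score; return True if the user should be alerted."""
        self.meals_seen += 1
        if self.meals_seen <= self.warmup_meals:
            return False  # still personalizing: neither alert nor count the meal
        self.recent.append(meal_score > self.threshold)
        return sum(self.recent) >= self.min_hits
```

A single anomalous meal never triggers an alert on its own; a second one within the window does.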

5. False positives hurt trust

Anomaly detection must be conservative, especially when the users are pet parents: every false alarm about their pet's health erodes trust in future alerts.

8. How We Use This Internally

Within Hoomanely’s ecosystem, this pipeline supports early detection of unusual eating events - hesitation, rapid eating, or sudden pauses. While the blog keeps things generic, the internal system integrates audio anomaly scores with EverSense depth sensing and EverBowl eating logs, creating a multi-modal health profile.

This contributes to our mission: building intelligent pet health systems that understand behavior, nutrition, and wellness as naturally as humans do.

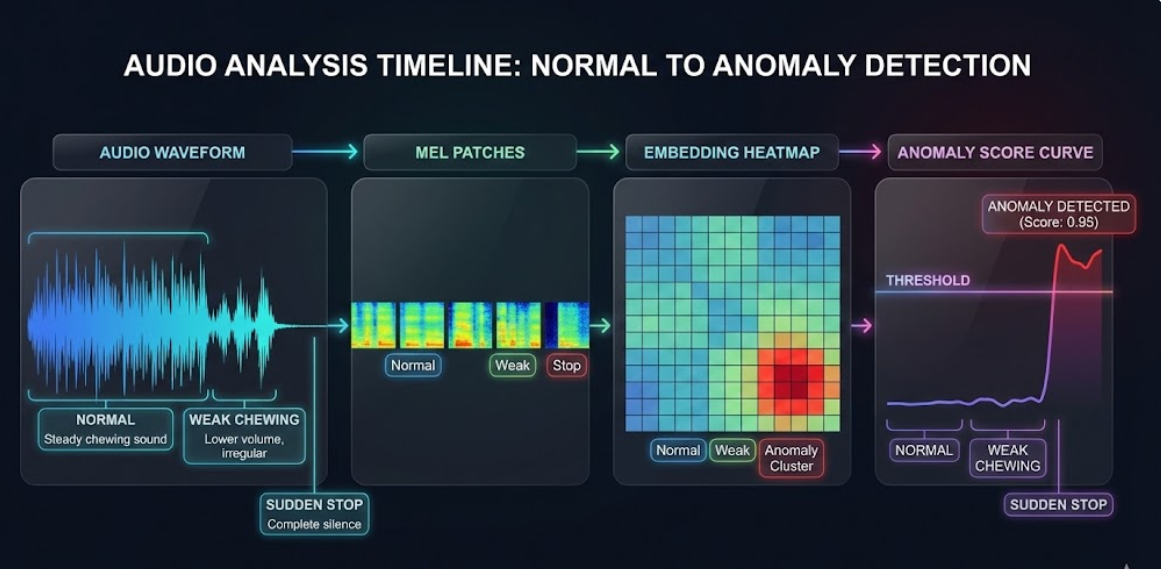

9. Example Timeline: From Raw Audio to Alert

Let’s look at a simplified real-world timeline.

Window 0–3s: Detect high P(eating) → embeddings → normal

Window 3–6s: Sudden silence → high anomaly

Window 6–9s: Weak chewing → moderate anomaly

Aggregate → threshold → user notification.

10. Key Takeaways

- Real-world anomaly detection is more about robustness than sophistication.

- Using a two-stage approach (activity → anomaly) massively simplifies engineering.

- Embeddings + personalization unlock high accuracy across diverse pets.

- Smart windowing, normalization, and conservative alerting build user trust.

- Multi-modal integration (audio + thermal + depth) creates a powerful health signal.