Fixing Onboarding by Reducing Uncertainty, Not Steps

Problem

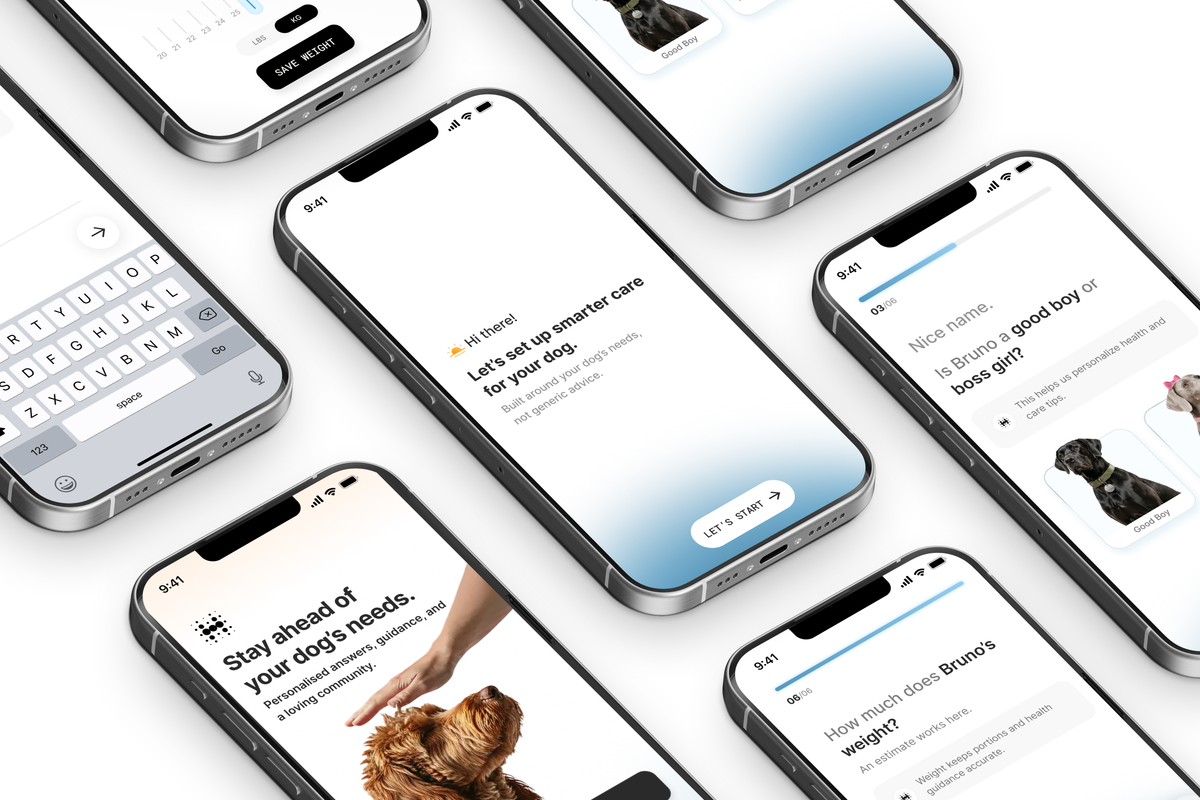

Personalisation was central to Everwiz. To deliver meaningful insights, especially breed-specific guidance, we needed users to share accurate profile details during onboarding. That meant collecting information like the pet’s name, photo, breed, age, weight, and medical conditions.

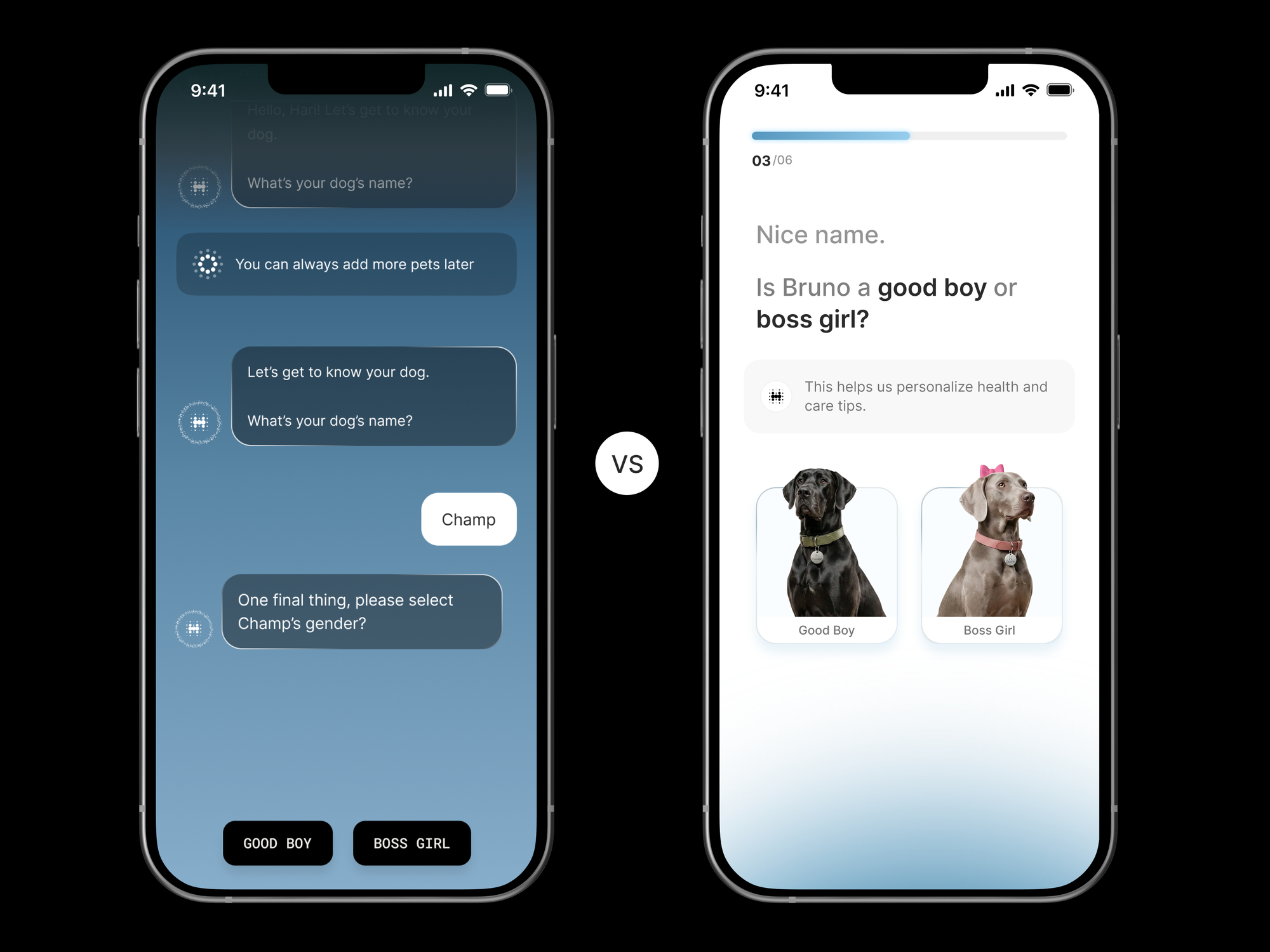

The original onboarding flow was designed to feel friendly. We framed it as a conversational, chat-style experience, almost like talking to an AI assistant. Questions appeared one at a time. The system asked for the pet’s name, then prompted users to upload a photo for AI breed analysis. If the model couldn’t confidently identify the breed, we asked users to type it manually. We followed that with chat-style insights about the breed, then continued with date of birth, weight, and medical conditions.

On paper, this sounded engaging. In reality, it introduced friction we didn’t fully anticipate.

The numbers made the problem clear:

- Onboarding completion hovered at 60–70%

- Completing the flow took more than 2 minutes, yet drop-offs occurred at almost every step

This suggested speed wasn’t the core issue. Users were already willing to spend the time, but their uncertainty grew with every question.

What we observed was a growing sense of anxiety as the flow progressed. Each new question appeared without any indication of how many were left. Users had no idea when the onboarding would end or what they’d get at the end of it. As we increased the number of questions to strengthen personalisation, the experience started to feel endless.

Our challenge was clear:

How do we keep onboarding quick while still collecting the information needed to personalise the Everwiz experience?

Approach

The obvious solution would have been to reduce questions or push data collection to later. But personalisation is core to Everwiz. Weak onboarding data would weaken everything downstream, from recommendations to insights.

So instead of asking what we could remove, we reframed the problem:

How can we make the same information feel easier to give?

The focus shifted from reducing screens to reducing uncertainty. We believed users would complete onboarding if:

- They knew how many steps were involved

- They could see progress as they moved forward

- Each input felt quick and intentional

Process

1. Understanding the Drop-Off Pattern

Drop-offs weren’t concentrated at one specific step. They happened across almost every question. This suggested the problem wasn’t usability or complexity; it was structural.

The chat interface made every question feel like it could be followed by many more. Conversations don’t have clear endings, and that ambiguity worked against task completion.

2. Moving Away From a Chat-Style Flow

We decided to let go of the conversational UI entirely.

Onboarding wasn’t a conversation; it was a setup task. Users weren’t looking to interact with AI; they wanted to finish setting up their profile.

So we redesigned the experience as a clear, step-based flow with a defined start and end.

3. Introducing Progress Visibility

We added a progress indicator that showed:

- Total number of steps

- Current step

- Steps remaining

This immediately reduced anxiety. Users no longer wondered how long onboarding would last; they could see it.
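The three values the indicator shows (total, current, remaining) can all be derived from one ordered list of steps plus a current position. The following is a minimal Python sketch under that assumption; the `OnboardingProgress` class and the step names are hypothetical, based on the profile fields described earlier rather than taken from the Everwiz codebase.

```python
from dataclasses import dataclass

# Hypothetical step list, based on the profile fields described above.
STEPS = ["Name", "Photo", "Breed", "Date of birth", "Weight", "Medical conditions"]


@dataclass
class OnboardingProgress:
    steps: list[str]
    current: int = 0  # zero-based index of the step currently on screen

    @property
    def total(self) -> int:
        return len(self.steps)

    @property
    def remaining(self) -> int:
        # Steps still ahead after the current one.
        return self.total - self.current - 1

    def label(self) -> str:
        # One-based position, as a user would see it: "Step 3 of 6".
        return f"Step {self.current + 1} of {self.total}"

    def advance(self) -> None:
        # Move forward, but never past the final step.
        if self.current < self.total - 1:
            self.current += 1


progress = OnboardingProgress(STEPS)
progress.advance()
progress.advance()
print(progress.label(), "-", progress.remaining, "remaining")  # Step 3 of 6 - 3 remaining
```

The key property is that `total` is known before the first question, which a chat-style flow cannot show. If a step is conditional (for example, manual breed entry only when photo analysis is inconclusive), one option is to count it up front so the total never grows mid-flow.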

4. Reducing Effort Per Step

We carefully evaluated every question and reduced friction wherever possible:

- Minimised the number of steps without compromising personalisation

- Used pre-selected inputs instead of free-text fields

- Redesigned date and weight pickers to be faster and more intuitive

- Used images only when they added real value, such as photo uploads for breed analysis

The goal wasn’t to collect less data, but to make each interaction feel lighter.
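One way to combine two of the ideas above, pre-selected inputs and photo-based breed analysis, is to let a confident model guess pre-fill a breed picker. When the model isn't confident, the same picker appears with nothing selected. This minimal Python sketch works under stated assumptions: the breed list, the 0.8 threshold, and `build_breed_picker` are all hypothetical, since this write-up doesn't describe how the model’s output is wired into the form.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical breed list; a real picker would use a longer, searchable list.
BREEDS = ["Beagle", "Golden Retriever", "Labrador Retriever", "Pug"]

# Hypothetical cut-off; the actual confidence threshold is not stated.
CONFIDENCE_THRESHOLD = 0.8


@dataclass
class BreedPicker:
    options: list[str]
    preselected: Optional[str]  # None means the user picks from the list


def build_breed_picker(prediction: Optional[tuple[str, float]]) -> BreedPicker:
    """Turn a (breed, confidence) guess from photo analysis into picker state."""
    if prediction is not None:
        breed, confidence = prediction
        # Pre-select only when the model is confident and the breed is a known option.
        if confidence >= CONFIDENCE_THRESHOLD and breed in BREEDS:
            return BreedPicker(options=BREEDS, preselected=breed)
    # Low confidence, unknown breed, or no photo: show the list with nothing chosen,
    # so the user still selects rather than types.
    return BreedPicker(options=BREEDS, preselected=None)


print(build_breed_picker(("Pug", 0.93)).preselected)     # Pug
print(build_breed_picker(("Beagle", 0.41)).preselected)  # None
```

In this sketch the user always confirms a choice from a list instead of typing, so both the confident and the uncertain path stay a single tap or search away.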

5. Separating Momentum From Insight

Previously, breed insights were shown mid-flow in a chat format. While informative, they slowed users down.

In the new flow:

- Insights were delivered after onboarding completion

- Onboarding focused on progress, not explanation

This helped users stay in motion.

6. Validating Through User Testing

User testing confirmed what analytics suggested:

- The flow felt faster, even when time spent was similar

- Progress visibility increased confidence

- Inputs felt more decisive and less draining

Only after validating this did we launch the redesigned onboarding.

Results

The impact was immediate.

- Onboarding completion (Signup → UserCreated): 94.7%

Up from the earlier 60–70% range. Nearly every user who signs up now completes their profile.

- Install → Signup conversion: 56%

Up from the previous 35–40% average, so roughly 16–21 percentage points more installs now enter the funnel.

These gains compound across the journey. More installs turn into signups, and more signups reach activation with complete, high-quality profile data.
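The compounding can be made concrete by multiplying the two stage rates reported above. This back-of-envelope Python calculation assumes the stages are sequential and measured on comparable cohorts. It is derived from the published figures, not a separately measured end-to-end metric.

```python
# Stage conversion rates reported above; ranges are given as (low, high).
before = {"install_to_signup": (0.35, 0.40), "signup_to_profile": (0.60, 0.70)}
after = {"install_to_signup": 0.56, "signup_to_profile": 0.947}


def end_to_end(install_to_signup: float, signup_to_profile: float) -> float:
    # Share of installs that end with a completed profile:
    # rates of sequential funnel stages multiply.
    return install_to_signup * signup_to_profile


low = end_to_end(before["install_to_signup"][0], before["signup_to_profile"][0])
high = end_to_end(before["install_to_signup"][1], before["signup_to_profile"][1])
new = end_to_end(after["install_to_signup"], after["signup_to_profile"])

print(f"Before: {low:.0%} to {high:.0%} of installs")  # Before: 21% to 28% of installs
print(f"After: {new:.0%} of installs")                 # After: 53% of installs
```

On these figures, roughly 1.9 to 2.5 times as many installs end with a completed profile, which is larger than either stage’s improvement on its own.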

Importantly, this improvement didn’t come from shortening onboarding dramatically.

It came from making the experience predictable and finite.

Takeaways

- Time spent doesn’t guarantee completion

Users will drop off even after investing time if the path ahead feels unclear.

- Conversational UI isn’t always ideal for setup flows

What feels friendly can create uncertainty in task-driven experiences.

- Progress indicators reduce anxiety

Visibility into what’s left builds confidence.

- Clarity beats creativity in onboarding

Direct questions and decisive inputs outperform clever interactions.

- Better onboarding doesn’t require fewer screens

It requires fewer unknowns.

A Note on Hoomanely

At Hoomanely, our mission is to build technology that understands pets as individuals. Strong personalisation starts with strong foundations, and onboarding is where those foundations are formed.

This redesign reinforced a simple truth:

Human-centred design isn’t about sounding human; it’s about making people feel in control.

By reducing uncertainty without compromising data quality, we strengthened both the onboarding experience and Everwiz’s personalisation engine.