Golden Journeys: Synthetic Conversations as Regression Tests for AI Assistants

AI assistants are becoming deeply embedded across apps, devices, and internal tools. They read context, recall previous turns, guide users through troubleshooting, analyze data, and invoke tools on behalf of the user. Yet the systems beneath them—models, prompts, routing layers, retrieval indexes, formatting logic, safety filters—are in constant motion. A simple prompt refinement, a quiet model upgrade, a small schema update, or a retrained retriever can subtly shift the assistant’s behavior.

Users don’t notice these changes in isolation. They notice when a familiar flow suddenly “feels different.” The assistant becomes vague in turn five. It forgets context. It offers steps that no longer align with documentation. It over-refuses safe queries. It calls the wrong tool. These aren’t catastrophic failures; they’re micro-regressions that confound teams because they emerge only in the space between turns.

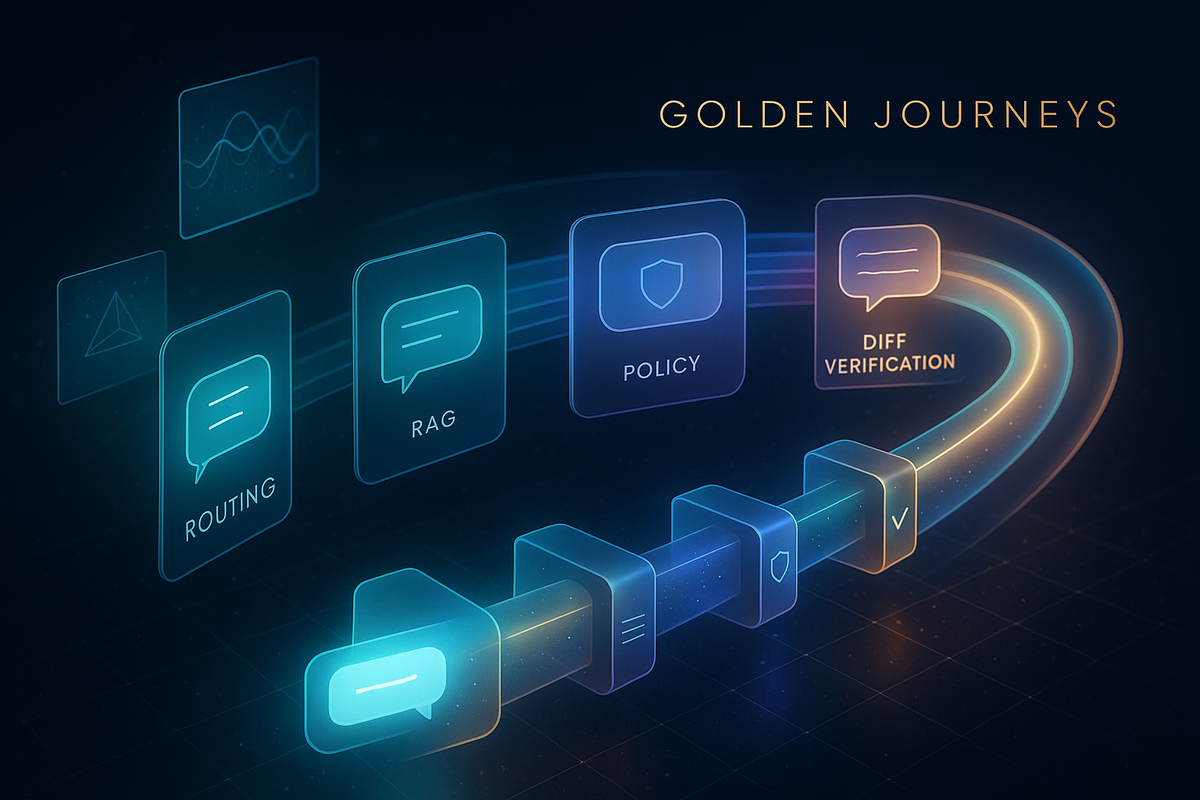

Golden Journeys are a way to bring order to that chaos. They are curated, synthetic, multi-turn conversations that define how your assistant should behave across its most important scenarios. Each journey is serialized, replayed deterministically, evaluated structurally and semantically, and incorporated into CI/CD. Instead of guessing whether a model changed or a routing tweak affected reasoning, Golden Journeys give engineering teams precise, reproducible evidence.

They turn “the assistant is acting weird” into “turn 4 of this journey failed for a clear reason.”

And when used consistently, they become one of the strongest quality guarantees you can add to an AI-driven platform.

Why AI Assistants Drift in Subtle, Hard-to-Debug Ways

Traditional software regresses in familiar patterns. Bad merges break APIs. Incorrect schemas cause crashes. Unhandled exceptions make functions fail. With LLM-based assistants, regressions are rarely so explicit. They manifest in tone, structure, reasoning, step sequencing, retrieval quality, and context carryover.

The source of drift often comes from many small changes that blend together:

Prompt revisions

As teams add new safety instructions, refine clarity, adjust verbosity, or update formatting, they change how the model interprets user intent and how it structures multi-step outputs.

Model shifts

Vendors may roll out silent updates. Even deterministic settings like temperature 0 aren’t enough to guarantee identical behavior across versions. The reasoning pattern underneath can shift in subtle but meaningful ways.

Routing changes

Scaling decisions—moving certain flows onto cheaper models, adjusting thresholds, or using faster variants—directly affect follow-up responses and task sequencing.

Retrieval variations

Changes to chunk sizes, ranking methods, document structure, or embeddings can degrade recall in specific flows even when total accuracy numbers remain stable.

Post-processing refactors

JSON parsers, formatting layers, metadata extraction, and chain-of-thought suppression logic all influence downstream turns.

Safety-layer evolution

A stricter classifier or newer policy may push previously normal queries into a "refusal" zone or require additional verification steps.

Tool integration

Small changes in tool signatures, naming, or argument structures break tool-dependent flows without immediately failing any conventional tests.

None of these are easily caught by unit or integration tests. They are multi-turn, context-rich regressions that appear only when a user walks through a real scenario.

Golden Journeys target exactly these cases.

What Golden Journeys Really Are

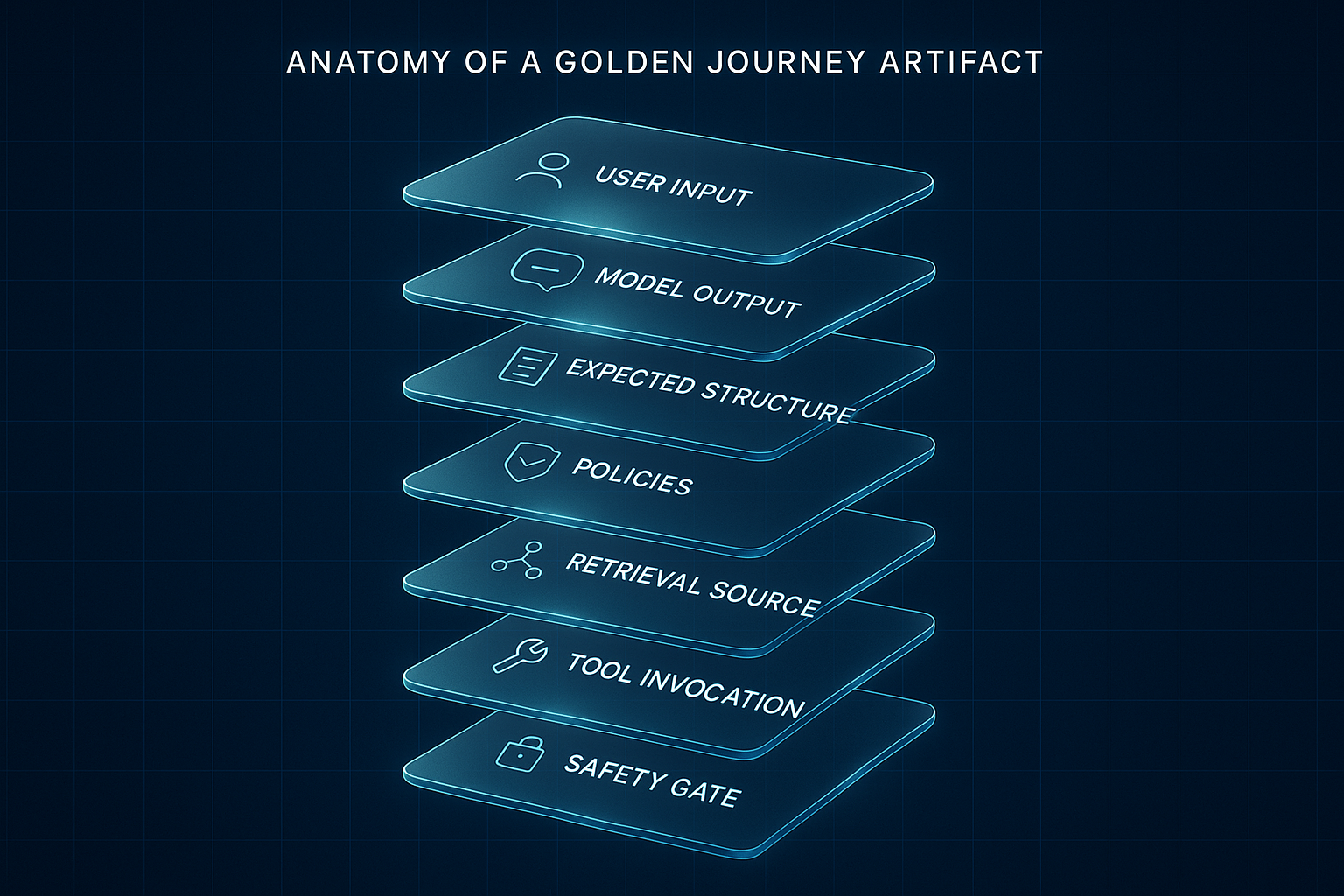

A Golden Journey is a serialized, multi-turn conversation designed to test whether your assistant behaves predictably across the entire flow. Unlike brittle “golden answers” or single-prompt tests, a journey encodes expectations at multiple levels:

- The shape and structure of responses

- The reasoning steps the assistant should take

- Policies it must follow

- Retrieval it must use

- Tools it must call

- Safety conditions that must not trigger

- Model routing decisions that must remain consistent

- Semantic meaning that must be preserved even if wording varies

Journeys don’t require exact text matching. Instead, they assert patterns, structure, intent, and metadata. This gives them longevity even as your system evolves.

A good journey represents a real scenario: onboarding, troubleshooting, planning, interpretation of data, or contextual guidance. For a system like Hoomanely’s assistant, that includes flows such as interpreting EverSense activity patterns, explaining feeding behavior captured by EverBowl, or helping users resolve device issues. But the concept is entirely generic and applies to any AI assistant operating in multi-turn mode.

When your system works correctly, Golden Journeys pass quietly. When something subtle breaks, they produce high-signal diffs that make the cause obvious.

Why Golden Journeys Matter for Engineering Teams

Golden Journeys create a shared understanding between engineering, ML, QA, and product teams. Each journey encodes what “correct behavior” means in the context of your product. This bridges silos naturally:

- Backend teams use journeys to verify prompt formatting, routing, post-processing, and tool execution.

- ML teams use journeys to validate retrieval quality, semantic robustness, safety behavior, and hallucination boundaries.

- QA teams use journeys to elevate conversational testing beyond manual, ad-hoc checks.

- Product managers use journeys as living artifacts of expected user experience.

The greatest value of Golden Journeys is that they turn qualitative evaluations (“it feels off”) into quantitative signals (“structure missing at turn 3”). They scale organizational intuition into engineering rigor.

How to Design a Golden Journey That Will Last

Good journeys are not transcripts—they are specifications. They define what should remain stable even as the assistant becomes more powerful, more concise, or more context-aware.

Represent Genuine User Behavior

The journey should feel like a real conversation: incomplete sentences, backtracking, clarifying questions, and interruptions. Avoid overly formal exchanges. Real users don’t speak in polished, structured sentences.

Capture Intent and Structure, Not Keywords

A journey shouldn’t fail because the assistant used synonyms. What matters is whether the assistant:

- Offered a correct troubleshooting sequence

- Asked clarifying questions at the right time

- Recalled earlier context

- Used the correct data

- Chose an appropriate tone

- Performed or avoided a tool call

- Cited relevant knowledge when required

This approach makes the journey resilient across model upgrades.

Layer Expectations Thoughtfully

Each turn may specify expectations such as:

- Structural elements

- Follow-up prompts

- Policies (e.g., “no internal implementation details”)

- Retrieval context

- Safety boundaries

- Routing constraints

- Tool behavior

These expectations define the “contract” for the journey.

Keep Journeys Focused

A journey should validate a scenario, not a feature set. Too many expectations turn journeys brittle; too few make them uninformative. The right balance takes experience, and it improves as your library of journeys grows.

Inside a Golden Journey Runner

A Golden Journey runner is the engine that brings these scenarios to life inside CI/CD. It loads the journey, replays it turn by turn, applies normalization, performs diffing, and generates reports.

Loader

Discovers journey files, validates structure, resolves shared configs, and prepares execution context.

Executor

Sends each turn to the AI gateway, capturing:

- Model output

- Routing decisions

- Retrieval traces

- Tool calls

- Safety flags

- Latency and token usage (optional)

This is where realism comes in. The journey uses the same gateway path users hit.

Normalizer

Cleans the model output so comparisons are stable:

- Normalizes punctuation

- Structures lists

- Parses JSON tool calls

- Extracts markdown

- Removes formatting artifacts

Normalization prevents false failures.

Diff Engine

The core of the system.

It performs:

- Structural comparison

- Semantic similarity evaluation

- Policy enforcement

- Safety rule checks

- Retrieval validation

- Routing verification

- Tool-call alignment

Golden Journeys work because this diff engine is flexible enough to allow healthy variation yet strict enough to detect behavioral regressions.

Reporter

Produces human-friendly HTML reports, journey summaries, and turn-level annotations. These reports enable fast debugging, even for subtle regressions.

Integrating Golden Journeys Into CI/CD

The real power of Golden Journeys emerges when they become part of your continuous deployment lifecycle. They prevent subtle behavioral regressions from reaching production—even if each change seems small.

When to Run Journeys

- Prompt/template changes

- Model routing updates

- New model rollouts

- KB restructuring

- Safety classifier modifications

- Tool schema updates

- Gateway refactors

- Nightly drift checks

- Weekly deep evaluations

This ensures behavior remains stable across all layers.

Fast, Live, and Deep Modes

A mature implementation uses three execution modes:

Fast Mode

Lightweight. Uses cached or stubbed responses to detect schema or post-processing regressions.

Live Mode

Uses real model calls. Detects semantic, structural, and retrieval drift.

Deep Mode

Runs multiple models, fallback paths, heavy RAG scenarios, and safety evaluations. Useful for weekly test cycles.

Failure Thresholds

Not all deviations are harmful. Journeys should:

- Fail on structural or safety violations

- Fail on incorrect routing or retrieval

- Warn on stylistic deviations

A smart diff engine prevents pipelines from breaking over harmless variations.

Organizing Your Journey Suite

Journeys can be arranged by category:

- Core user flows

- Troubleshooting

- RAG-heavy conversations

- Long-term memory scenarios

- Safety-related flows

- Model-routing checks

- Personalization flows

This makes it easier to expand, audit, or prune journeys over time.

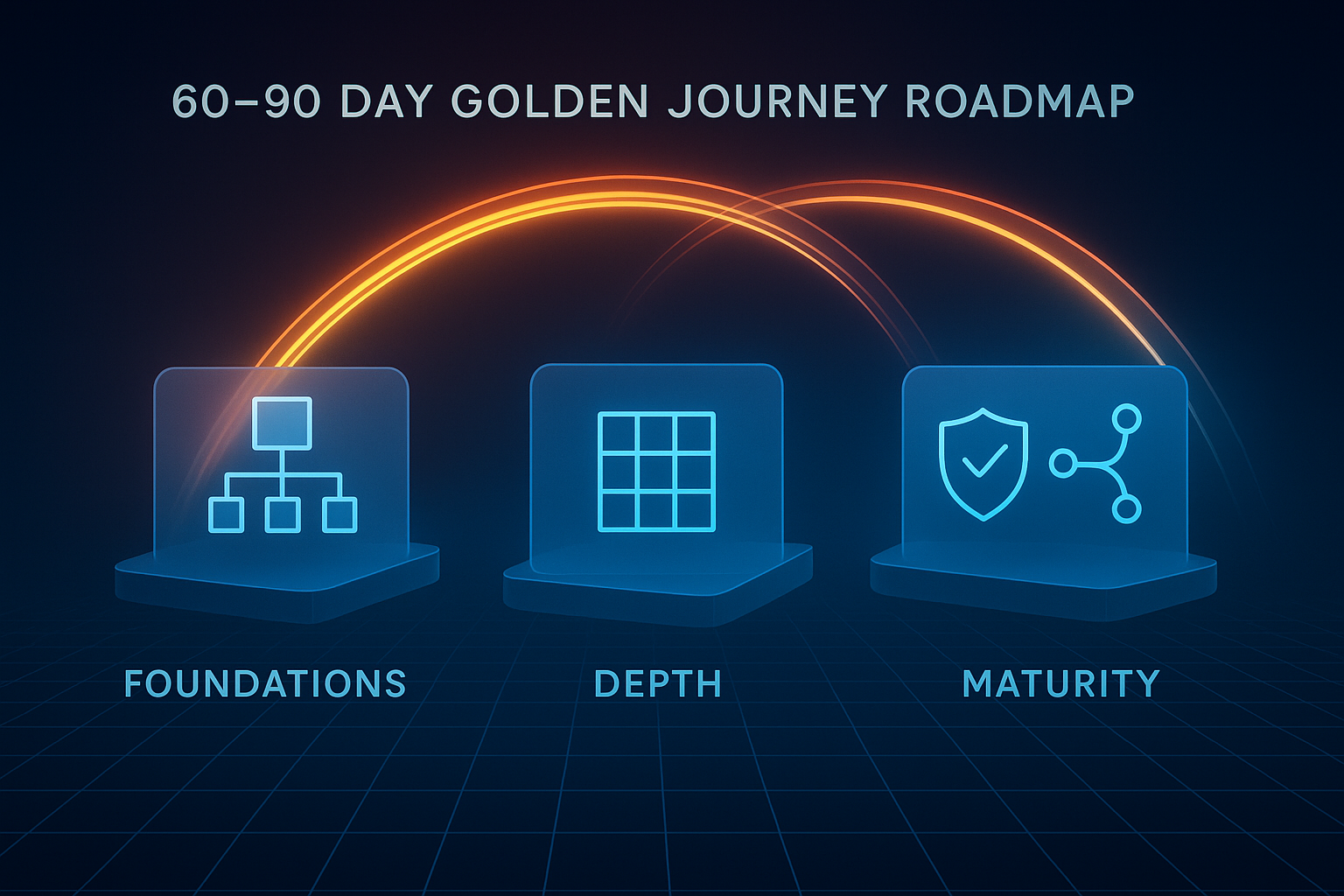

Rolling Out a Golden Journey Framework in 60–90 Days

A pragmatic rollout timeline looks like this:

Phase 1 — Foundations

Define schema

Build loader + executor

Write first 20 journeys

Add deterministic sandbox mode

Integrate fast-mode checks into CI

Phase 2 — Depth

Add semantic and metadata diffing

Implement HTML reporting

Add nightly live walks

Increase journey count to 60

Phase 3 — Maturity

Introduce multi-model evaluation

Add safety and privacy journeys

Test fallbacks

Expand to 100+ journeys

Visualize journey coverage

By the end of this period, your assistant becomes noticeably more stable and predictable—even as the underlying AI stack continues to evolve rapidly.

A Realistic Regression Caught by Golden Journeys

A troubleshooting flow for a blinking LED on a connected device initially worked perfectly. It retrieved the correct KB chunk, offered targeted steps, and escalated appropriately.

Then a refactor changed metadata fields used by the retriever. Retrieval still looked “correct” for generic queries, but in this specific case, an important chunk lost relevance ranking. The assistant shifted from LED-specific guidance to generic troubleshooting recommendations.

Manually, this regression looked like harmless variation. But the Golden Journey encoded expectations:

- Retrieval must use LED-state KB

- Output must contain LED-state interpretation

- Follow-up must diagnose based on color

When replayed, the journey caught the deviation instantly. The regression was fixed before reaching production.

This is the power of Golden Journeys: they elevate subtle conversational problems into clear, actionable diffs.

Hoomanely’s assistant guides pet parents through understanding activity patterns, feeding schedules, sensor anomalies, and device states. These flows rely on synchronized knowledge from EverSense, EverBowl, and the app’s RAG system. Firmware updates, new KB docs, additional model routing strategies, and expanded insights all introduce noise.

Golden Journeys ensure that the assistant’s reasoning, explanations, troubleshooting sequences, and safety guidelines remain dependable, structured, and accurate—no matter how fast the ecosystem evolves. They preserve product trust and conversational clarity as new capabilities come online.

Key Takeaways

Golden Journeys transform conversational AI reliability from guesswork into engineering discipline.

- They capture multi-turn behavior that isolated tests can’t see.

- They validate structure, semantics, routing, retrieval, tools, and safety.

- They integrate into CI/CD to detect real regressions early.

- They scale from a dozen journeys to hundreds without failing on harmless variation.

- They offer a 60–90 day path to full maturity.

- They strengthen the stability and predictability of AI assistants in fast-moving ecosystems like Hoomanely.

Golden Journeys ensure that as your AI evolves, your user experience doesn’t deteriorate. They convert vague “it feels wrong” feedback into precise test failures—and that change transforms how teams build, ship, and trust AI behavior.