How We Shrank Python Environment Size for Edge Devices

Introduction

Running Python applications on edge devices is fundamentally different from deploying them on servers or developer laptops. These devices - whether embedded compute modules, IoT gateways, or sensor hubs - operate under strict storage ceilings and must deploy updates reliably without exhausting disk space. At Hoomanely, our edge systems process audio, thermal, and motion data locally, often on devices with only 4–8GB of storage, where the operational runtime, logs, and ML models all compete for space.

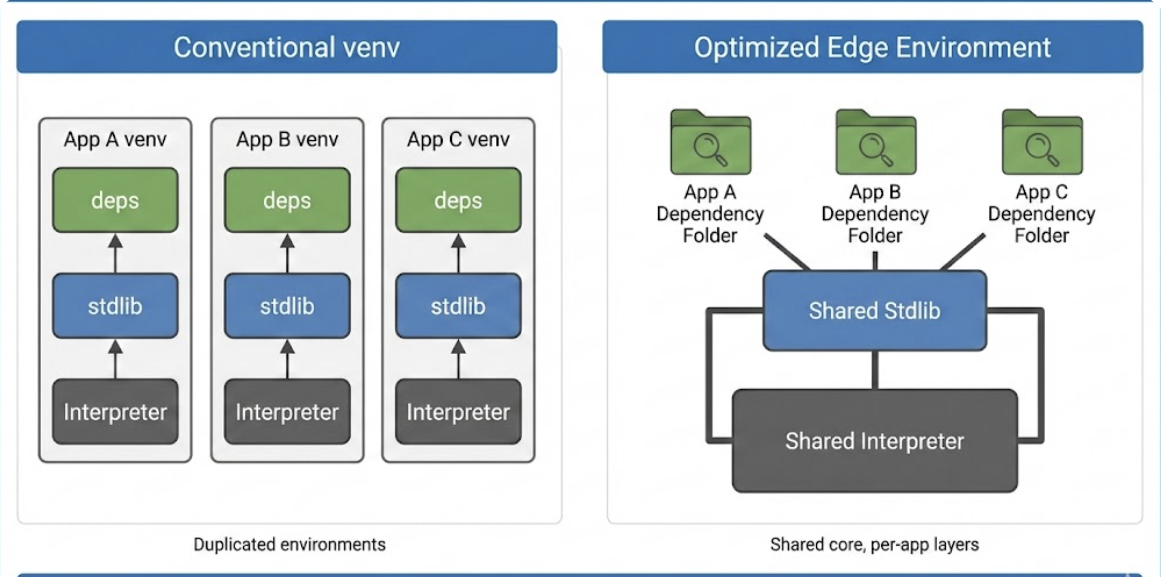

A conventional python -m venv virtual environment frequently consumes 300–600MB, creating unnecessary duplication of interpreters, standard libraries, and dependency trees. For edge systems, this is neither efficient nor sustainable. This article presents a structured, professional evaluation of production‑grade alternatives to Python virtual environments, followed by the solution architecture that best meets the constraints of modern edge deployments.

The Problem: Environment Isolation vs Storage Constraints

Isolation is essential for Python applications - it ensures version consistency, dependency stability, and predictable behavior across devices. However, environment isolation typically duplicates:

- Python interpreter binaries

- Standard library files

- Dependency folders and metadata

On constrained filesystems, duplication becomes the primary bottleneck. The challenge, therefore, is to maintain isolation without redundancy.

Goal: Provide isolated, reproducible Python environments that minimize storage usage and simplify deployment across thousands of field devices.

How We Reduced Our Python Environment Footprint

Rather than exploring alternatives academically, this section outlines the strategic engineering decisions we made to bring our Python environment size down by a substantial amount while maintaining isolation, reliability, and ML‑stack compatibility across all Hoomanely edge devices.

This is not a tutorial or a list of options - it is the actual, deliberate sequence of architectural improvements we implemented to achieve a lean, reproducible Python runtime suitable for constrained embedded platforms.

1. Establishing a Shared Runtime Foundation

Using the system Python as the base runtime avoids interpreter duplication entirely. Applications load their own dependency folders atop the system installation via PYTHONPATH.

Advantages

- Zero interpreter duplication

- Smaller footprint than venv

- Straightforward deployment

Limitations

- Requires consistent system‑level Python across all devices

- Minor risk of unintentional coupling if not version‑locked

This forms the foundation for more modular solutions.
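The version-consistency limitation above can be guarded against when an application starts. The following is a minimal sketch, assuming each application records the interpreter series it was built against; `EXPECTED_VERSION`, `check_interpreter`, and `require_interpreter` are illustrative names, not part of any library.

```python
import sys

# Assumption: the application was built and tested against this interpreter series.
EXPECTED_VERSION = (3, 11)


def check_interpreter(expected=EXPECTED_VERSION, actual=None):
    """Return True if the interpreter's major.minor matches the expected pair."""
    if actual is None:
        actual = sys.version_info[:2]
    return tuple(actual) == tuple(expected)


def require_interpreter(expected=EXPECTED_VERSION):
    """Raise RuntimeError early instead of failing later on an incompatible wheel."""
    if not check_interpreter(expected):
        found = ".".join(str(part) for part in sys.version_info[:2])
        wanted = ".".join(str(part) for part in expected)
        raise RuntimeError(f"Python {found} found, but dependencies target {wanted}")
```

Calling `require_interpreter()` at the top of an entry point turns silent coupling to the system Python into an explicit, early failure.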

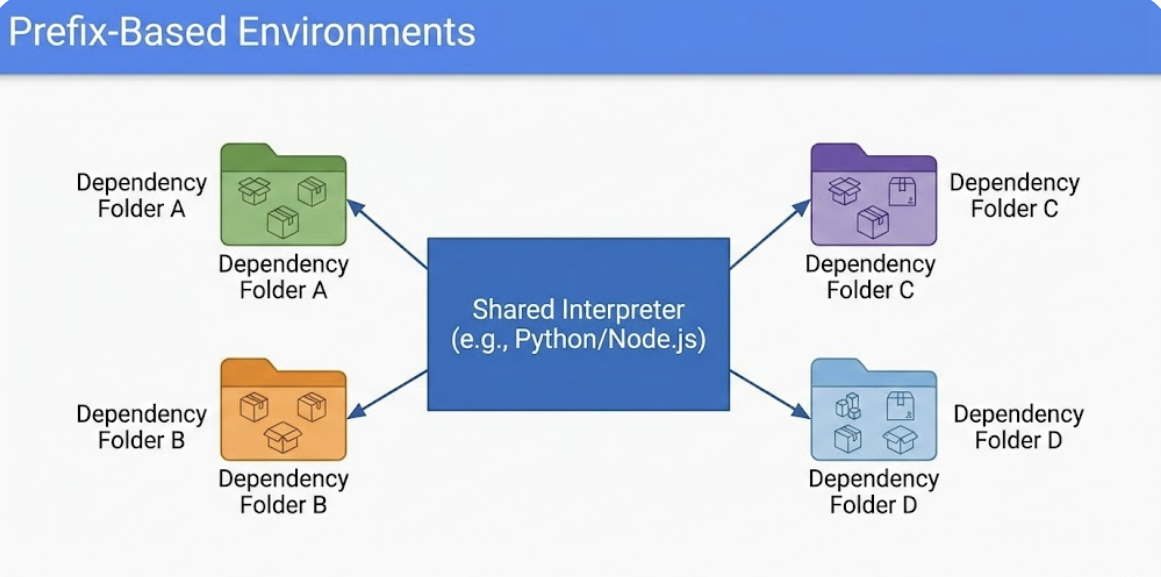

2. Introducing Lightweight, Prefix‑Based Dependency Environments (Core Improvement)

The most effective and scalable pattern for edge deployments is using per‑application dependency prefixes. Instead of replicating a full venv, only application‑specific dependencies are stored in isolated folders.

How It Works

Dependencies are installed using:

pip install --prefix=/opt/app1/ -r requirements.txt

Applications run using the shared interpreter but reference isolated dependency directories:

PYTHONPATH=/opt/app1/lib/python3.11/site-packages python3 main.py
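The same launch step can be scripted. This is a minimal sketch, assuming the POSIX layout shown above (`<prefix>/lib/pythonX.Y/site-packages`); some distributions patch that layout, so it should be verified on the target image. The helper names `prefix_site_packages`, `build_env`, and `run_app` are illustrative.

```python
import os
import subprocess
import sys
from pathlib import PurePosixPath


def prefix_site_packages(prefix, version=None):
    """Return the site-packages directory under a pip --prefix install.

    Assumes the layout <prefix>/lib/pythonX.Y/site-packages.
    """
    major, minor = version or sys.version_info[:2]
    return str(PurePosixPath(prefix) / "lib" / f"python{major}.{minor}" / "site-packages")


def build_env(prefix, version=None, base_env=None):
    """Copy the environment and point PYTHONPATH at this application's prefix only."""
    env = dict(os.environ if base_env is None else base_env)
    env["PYTHONPATH"] = prefix_site_packages(prefix, version)
    return env


def run_app(prefix, script, version=None):
    """Run the script with the current interpreter and the isolated dependency path."""
    return subprocess.run([sys.executable, script], env=build_env(prefix, version), check=True)
```

Overwriting `PYTHONPATH` rather than appending to it keeps a stale value from another service out of the application's import path.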

Why This Architecture Works Exceptionally Well

- Major reduction in storage vs venv (often more than half)

- No interpreter duplication

- Reproducible across thousands of deployments

- Modular - each service updates independently

- Alignment with modern immutable‑infrastructure principles

This is now the standardized environment strategy across Hoomanely's edge compute stack.

3. Considering Containers for Higher‑Capacity Hardware

Containerization ensures strict isolation and reproducibility, but may not always be optimal on low‑storage devices.

Pros

- Strong isolation boundaries

- Easy version control

Cons

- Base image size (150–300MB+)

- Runtime overhead

- Not supported on all embedded Linux distributions

Containers remain suitable for higher‑tier gateways with more storage, but prefix‑based environments remain more efficient for ultra‑constrained devices.

4. Using Frozen Builds Where Applicable

Python's zipapp or tools like PyInstaller bundle code into portable executables. These work well for:

- CLI utilities

- Single‑purpose micro‑scripts

However, for ML workloads or native‑library‑heavy stacks, these techniques introduce significant build complexity and often increase size rather than reducing it.

They remain valid options, but not generalizable for edge ML deployments.
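For the small-utility case, the standard library's `zipapp` module is enough on its own. The sketch below builds a throwaway one-file tool in a temporary directory and runs it; the tool name and contents are made up for illustration, and it assumes a pure-Python utility with no native extensions.

```python
import subprocess
import sys
import tempfile
import zipapp
from pathlib import Path


def build_zipapp(source_dir, target):
    """Bundle a directory containing __main__.py into a single .pyz archive."""
    zipapp.create_archive(source_dir, target=target, interpreter="/usr/bin/env python3")
    return Path(target)


def demo():
    """Build and run a throwaway utility; returns what it printed."""
    with tempfile.TemporaryDirectory() as tmp:
        src = Path(tmp) / "hello_tool"
        src.mkdir()
        (src / "__main__.py").write_text('print("hello from zipapp")\n')
        archive = build_zipapp(src, Path(tmp) / "hello_tool.pyz")
        result = subprocess.run(
            [sys.executable, str(archive)], capture_output=True, text=True, check=True
        )
        return result.stdout.strip()
```

The archive still relies on an interpreter being present on the device, which is why it pairs naturally with a shared system Python.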

Why Prefix‑Based Environments Became Our Standard Across All Edge Deployments

After applying engineering criteria - footprint, reproducibility, update simplicity, compatibility, and runtime stability - prefix‑based dependency folders paired with a shared interpreter emerged as the most reliable architecture.

Key Technical Advantages

1. Meaningful Storage Optimization

- venv size: 450–600MB depending on ML packages

- prefix env size: often 120–200MB depending on dependency mix

Most of the savings come from avoiding duplicated copies of:

- Python binaries

- Standard library copies

- Repeated shared object files
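Figures like these are easy to reproduce on a build machine. Here is a minimal sketch for comparing two directory trees, assuming apparent file sizes are a good enough proxy for on-disk usage (block rounding and filesystem compression are ignored); both function names are illustrative.

```python
import os


def tree_size_bytes(root):
    """Sum the sizes of regular files under root, skipping symlinks."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if not os.path.islink(path):
                total += os.path.getsize(path)
    return total


def savings_percent(baseline_bytes, candidate_bytes):
    """Percentage by which the candidate is smaller than the baseline."""
    if baseline_bytes <= 0:
        raise ValueError("baseline must be positive")
    return 100.0 * (baseline_bytes - candidate_bytes) / baseline_bytes
```

Applied to the ranges quoted above, the reduction spans roughly 56% (450MB vs 200MB) to 80% (600MB vs 120MB), depending on which ends of the ranges are paired.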

2. Clear Isolation Without Redundancy

Every application maintains its own dependency set, preventing version conflicts.

3. Predictable Deployments Across Fleets

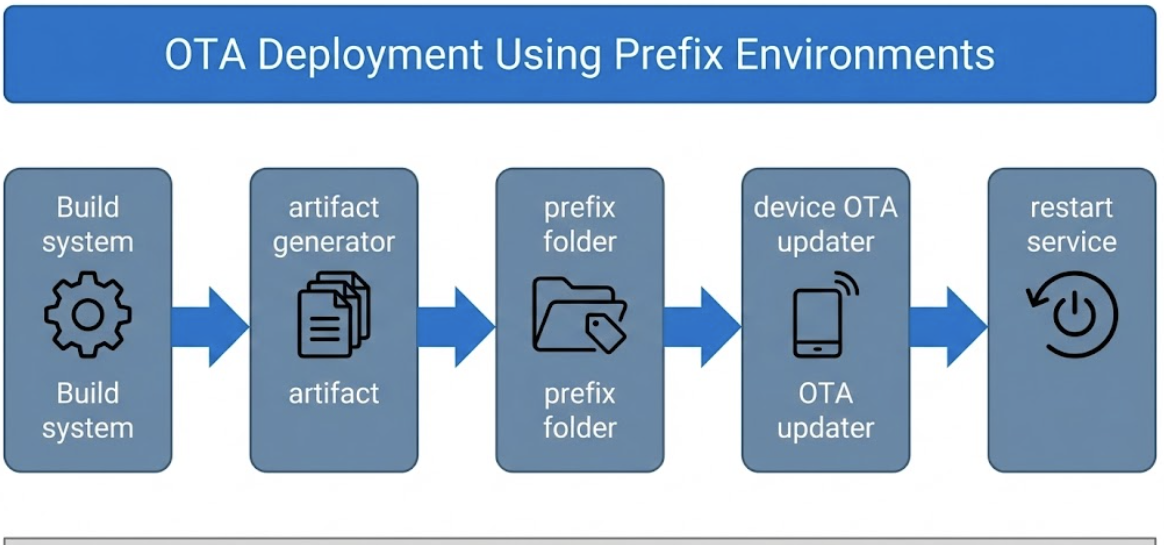

Prefix folders are copied as‑is during OTA deployment, ensuring consistent environments on every bowl or collar in production.
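One way to check that a copied prefix really is identical on every device is to compare a content digest computed at build time with one computed after deployment. This is a sketch under that assumption, not a description of our OTA pipeline; `tree_digest` is an illustrative name.

```python
import hashlib
import os


def tree_digest(root):
    """Return a SHA-256 digest over relative paths and file contents under root."""
    digest = hashlib.sha256()
    for dirpath, dirnames, filenames in os.walk(root):
        dirnames.sort()  # make the traversal order deterministic
        for name in sorted(filenames):
            path = os.path.join(dirpath, name)
            rel = os.path.relpath(path, root).replace(os.sep, "/")
            digest.update(rel.encode("utf-8"))
            digest.update(b"\0")
            if os.path.islink(path):
                # Hash the link target rather than following it.
                digest.update(os.readlink(path).encode("utf-8"))
            else:
                with open(path, "rb") as handle:
                    for chunk in iter(lambda: handle.read(1 << 20), b""):
                        digest.update(chunk)
            digest.update(b"\0")
    return digest.hexdigest()
```

Because the digest covers only relative paths and contents, it stays stable when the same prefix is unpacked under a different parent directory.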

4. Compatibility With Heavier Scientific Libraries

Unlike musl‑based systems such as Alpine, where pre‑built wheels for scientific packages are less widely available, or fully frozen builds, this method supports:

- PyTorch

- NumPy/SciPy

- OpenCV

- Custom native extensions

5. Fast and Safe OTA Updates

Updates involve swapping a single dependency directory rather than reinstalling environments on‑device - reducing downtime and risk.
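A common way to make such a swap safe is to keep each dependency release in its own directory and repoint a symlink atomically. The sketch below assumes that layout and a POSIX filesystem; the symlink approach and the name `activate_release` are illustrative assumptions, not a description of our updater.

```python
import os


def activate_release(release_dir, current_link):
    """Atomically repoint current_link (a symlink, or absent) at release_dir.

    A temporary symlink is created next to the target and renamed over it.
    On POSIX, os.replace uses rename(2), which is atomic within one filesystem,
    so readers see either the old release or the new one, never a missing path.
    """
    tmp_link = f"{current_link}.tmp-{os.getpid()}"
    if os.path.lexists(tmp_link):
        os.remove(tmp_link)  # clear a leftover from an interrupted update
    os.symlink(release_dir, tmp_link)
    os.replace(tmp_link, current_link)
```

Rolling back is the same call with the previous release directory, provided that directory is kept on disk until the new one has been verified.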

Additional Optimizations for Ultra‑Low Storage Devices

To further enhance efficiency in production environments, we apply the following optimizations:

1. Strip Bytecode and Test Artifacts

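Removing *.pyc files, __pycache__ directories, bundled test suites, and unneeded metadata trims space further. Python regenerates bytecode on import when the directory is writable, so this trades a little startup time for storage.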
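A pruning pass of this kind can run on the build machine before the prefix is packaged. This is a minimal sketch; `PRUNE_DIR_NAMES` is an assumption that must be validated against the actual dependency set, since a few packages import from their `tests` directories at runtime.

```python
import os
import shutil

# Assumption: directories with these names are safe to delete for your packages.
PRUNE_DIR_NAMES = frozenset({"__pycache__", "tests"})


def prune_tree(root, dir_names=PRUNE_DIR_NAMES):
    """Delete bytecode caches, stray .pyc/.pyo files, and test directories.

    Returns the list of removed paths.
    """
    removed = []
    for dirpath, dirnames, filenames in os.walk(root, topdown=True):
        for name in list(dirnames):
            target = os.path.join(dirpath, name)
            if name in dir_names and not os.path.islink(target):
                shutil.rmtree(target)
                dirnames.remove(name)  # do not descend into the deleted directory
                removed.append(target)
        for name in filenames:
            if name.endswith((".pyc", ".pyo")):
                target = os.path.join(dirpath, name)
                os.remove(target)
                removed.append(target)
    return removed
```

Running the application's own smoke tests against the pruned prefix is the simplest way to confirm nothing required was removed.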

2. Prefer Pre‑Built Wheels

pip install --only-binary :all: -r requirements.txt

Wheels avoid build toolchains and reduce footprint.
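The wheel-only rule combines naturally with the prefix install in a build script. The following is a sketch with illustrative function names; adding `--no-compile`, which tells pip not to write bytecode at install time, is an extra assumption rather than something stated above.

```python
import subprocess
import sys


def pip_install_command(prefix, requirements, python=sys.executable):
    """Build a pip command that installs wheels only into an application prefix."""
    return [
        python, "-m", "pip", "install",
        "--only-binary", ":all:",  # refuse source distributions
        "--no-compile",            # do not write .pyc files during install
        "--prefix", prefix,
        "-r", requirements,
    ]


def install(prefix, requirements):
    """Run the install; raises CalledProcessError if pip fails,
    for example when a package has no compatible wheel."""
    subprocess.run(pip_install_command(prefix, requirements), check=True)
```

A failure here is useful information: it identifies a dependency that would otherwise pull a compiler toolchain into the build.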

3. Consolidate Shared Native Libraries

Native .so files used across apps can live in /usr/lib to avoid duplication.
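Finding consolidation candidates can be automated by hashing shared objects across application prefixes. This sketch only reports byte-identical files; whether a given library can actually be relocated depends on how the wheel links against it, and Python extension modules themselves need to stay inside their package. The function names are illustrative.

```python
import hashlib
import os
from collections import defaultdict


def file_sha256(path):
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_duplicate_shared_objects(roots):
    """Map content hash -> sorted paths for .so files found more than once."""
    by_hash = defaultdict(list)
    for root in roots:
        for dirpath, _dirnames, filenames in os.walk(root):
            for name in filenames:
                if name.endswith(".so") or ".so." in name:
                    path = os.path.join(dirpath, name)
                    if os.path.isfile(path) and not os.path.islink(path):
                        by_hash[file_sha256(path)].append(path)
    return {key: sorted(paths) for key, paths in by_hash.items() if len(paths) > 1}
```

Passing every application prefix, for example `["/opt/app1", "/opt/app2"]` (the second path is hypothetical), yields groups of identical libraries worth a closer look.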

4. Separate Runtime vs Model Storage

Keeping ML models in a dedicated storage partition simplifies cleanup and updates.

Key Takeaways

- Traditional Python virtual environments are unsuitable for constrained edge devices due to high redundancy.

- Optimal edge deployments need isolation without duplicating system components.

- Prefix‑based dependency environments provide the best balance of:

  - Minimal storage usage

  - Strong isolation

  - Reproducibility

  - ML‑stack compatibility

  - OTA‑friendly deployment

- This architecture is now standard across Hoomanely’s edge compute systems.