Intelligent Conversation Memory: A 2-Level Summary System

Imagine this: You're chatting with an AI vet about your anxious rescue dog, Luna. Over hours, you've discussed her separation anxiety, dietary restrictions, medication schedules, and behavioural patterns. Then the next day, you ask a follow-up question about her progress—and the AI responds as if you're strangers meeting for the first time.

This isn't a hypothetical scenario. It's the fundamental limitation of conversational AI today.

Most chat systems face a brutal tradeoff: either maintain full conversation history (hitting token limits and degrading performance), or discard older context (losing critical continuity). For pet health conversations, this isn't just frustrating—it's potentially dangerous. A forgotten allergy mention or overlooked symptom pattern could lead to harmful advice.

At Hoomanely, where we're building an AI-powered healthcare assistant for pet parents, this problem is existential. Our conversations aren't casual chitchat; they carry longitudinal information. Pet parents return days or weeks later expecting the AI to remember Luna's anxiety triggers, her medication changes, and that time she ate something she shouldn't have.

We needed a system that could maintain conversation continuity across days or weeks without hitting LLM context limits. The solution? A hierarchical memory architecture that mimics how human memory works, compressing details while preserving meaning.

Why Traditional Approaches Fall Short

Before diving in, let's look at the obvious approaches and where each one falls short:

Full history retrieval sounds ideal but runs into hard limits. LLMs may offer large context windows, but in practice you don't want to fill them entirely with conversation transcripts. You also need room for other context, such as the pet's details and information relevant to the current question. And the closer you get to the context limit, the more latency you pay on every request.

Sliding window approaches (keeping only the last N messages) work for customer support but fail catastrophically for healthcare. If someone mentions a critical detail in message 3, and you're now at message 12, that information is gone. In veterinary contexts, early symptom mentions are often crucial for later diagnosis.
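To make the failure mode concrete, here is a minimal sketch of a last-N window. The function name `sliding_window`, the window size of 5, and the 12-message conversation are illustrative assumptions, not the production implementation.

```python
from collections import deque


def sliding_window(messages, n):
    """Keep only the last n messages, discarding everything older."""
    window = deque(maxlen=n)  # a bounded deque drops the oldest item on overflow
    for message in messages:
        window.append(message)
    return list(window)


# Hypothetical 12-message conversation; message 3 carries the critical detail.
conversation = [f"message {i}" for i in range(1, 13)]
conversation[2] = "message 3: Luna is allergic to chicken"

recent = sliding_window(conversation, n=5)

# By message 12 the allergy mention has already fallen out of the window.
allergy_still_visible = any("allergic" in m for m in recent)
```

With these inputs the window holds messages 8 through 12, so `allergy_still_visible` is `False`: nothing in the prompt tells the model about the allergy any more.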

Simple summarisation (periodically compressing history into a single summary) creates its own problems. As conversations grow, that one summary becomes a bottleneck—either too vague to be useful or too detailed to fit in the context window. You end up summarising the summary, losing fidelity with each compression.

The real challenge isn't just remembering—it's remembering hierarchically, the way humans do.

The Landscape of Memory Solutions

When we started architecting our memory system, we surveyed the field. The industry has converged on a few dominant patterns, each with distinct tradeoffs.

Vector Embeddings & Semantic Search

The trendy approach: embed all messages as vectors, store them in a vector database, and retrieve semantically similar context for each new query.

The appeal is obvious—you get "smart" retrieval that finds relevant past conversations even if they used different words. A question about "stomach upset" might retrieve earlier discussions about "digestive issues" or "vomiting."

The reality is messier. Semantic similarity doesn't capture temporal causality—the fact that Event A happened before Event B matters in healthcare conversations. A symptom mentioned last week is more relevant than a similar symptom from three months ago that was resolved. Vector search treats time as just another dimension, not as the organising principle.

More fundamentally, embeddings lose the narrative thread. You get snippets of relevant past conversations, but not the story of what happened. "Luna had diarrhoea" retrieved from week 3 isn't as useful as knowing "Luna had diarrhoea in week 3, we tried a diet change in week 4, symptoms improved by week 5, then recurred in week 7 after stress."
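A toy sketch of that gap, using the Luna timeline from the paragraph above. The `Snippet` type and both helper functions are hypothetical, and the similarity scores are hand-picked for illustration rather than produced by a real embedding model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Snippet:
    week: int
    text: str
    similarity: float  # hand-picked illustrative score, not from a real model


snippets = [
    Snippet(3, "Luna had diarrhoea", 0.91),
    Snippet(4, "Tried a diet change", 0.62),
    Snippet(5, "Symptoms improved", 0.70),
    Snippet(7, "Symptoms recurred after stress", 0.84),
]


def top_k_by_similarity(items, k):
    """What a similarity-ranked retriever returns: the k best-scoring snippets."""
    return sorted(items, key=lambda s: s.similarity, reverse=True)[:k]


def chronological(items):
    """The full story, ordered by when things happened."""
    return sorted(items, key=lambda s: s.week)


retrieved_weeks = [s.week for s in top_k_by_similarity(snippets, k=2)]
timeline_weeks = [s.week for s in chronological(snippets)]
```

Here the top-2 retrieval returns weeks 3 and 7, the two symptom mentions, and skips the diet change and the improvement in between. The chronological view keeps all four beats in order.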

For healthcare, temporal coherence matters more than semantic similarity.

Fixed-Window with Keyword Extraction

A pragmatic middle ground: keep a sliding window of recent messages, but extract keywords or "facts" from older conversations and store them separately.

"Luna is allergic to chicken" becomes a stored fact. "Owner is concerned about separation anxiety" becomes another fact. These facts get injected alongside the recent message window.

This approach scales better than full history and preserves some long-term context. But it reduces rich conversations to bullet points. Nuance dies in extraction.

When a pet parent says "Luna seems anxious again," the system knows there's a history of anxiety discussions (stored fact), but not the progression—what was tried, what worked, what patterns emerged. Facts without narrative context lead to repetitive advice.
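As a rough sketch of this pattern, stored facts are prepended to a short window of recent messages. The `build_context` helper, the prompt layout, and the window size of 4 are assumptions made for illustration.

```python
def build_context(facts, recent_messages, window=4):
    """Combine extracted facts with the last `window` messages into one prompt string."""
    fact_block = "\n".join(f"- {fact}" for fact in facts)
    recent_block = "\n".join(recent_messages[-window:])
    return f"Known facts:\n{fact_block}\n\nRecent messages:\n{recent_block}"


facts = [
    "Luna is allergic to chicken",
    "Owner is concerned about separation anxiety",
]

# Hypothetical conversation: ten earlier turns, then the new message.
messages = [f"turn {i}" for i in range(1, 11)] + ["Luna seems anxious again"]

context = build_context(facts, messages)
```

The resulting context tells the model that anxiety has come up before, but nothing in it records what was tried or how Luna responded, which is exactly the gap described above.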

The Missing Ingredient: Hierarchical Compression

What all these approaches miss is that human memory doesn't work through retrieval—it works through layered abstraction.

You don't remember every word of a conversation from last month. You remember themes, outcomes, and emotional beats. You compress details into progressively higher-level summaries as time passes.

A conversation from yesterday? You remember specifics. A conversation from last week? You remember the gist. A conversation from last month? You remember it happened and what mattered.

This is the insight we built around.

Two-Level Memory Architecture

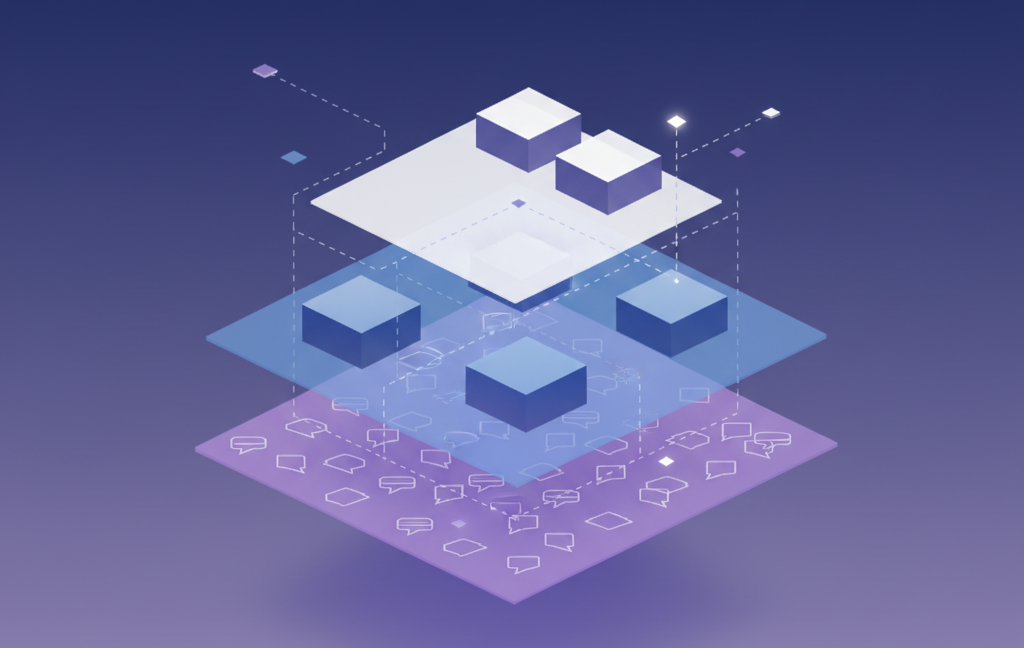

We built a system inspired by human memory formation: immediate recall for recent events, consolidated summaries for older context, and high-level patterns for long-term understanding.

The L1 Layer: Tactical Summaries

The first layer captures conversation chunks—typically 5-10 message exchanges grouped by natural conversation boundaries. Think of these as short-term memory snapshots.

When a conversation crosses the threshold (say, 10 unsummarised message pairs accumulate), we trigger L1 generation. The system extracts:

- Core themes discussed in that chunk (e.g., "Introduced new grain-free diet, monitoring for allergies")

- User concerns and emotional state (anxious about symptoms, seeking reassurance)

- Actionable items or recommendations given

Each L1 summary includes metadata: which messages it covers (via timestamp), how many exchanges it represents, and its creation time. This metadata becomes critical for the next layer.
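A minimal sketch of what an L1 record and its trigger could look like. The field names, the `L1_THRESHOLD` constant, and the `build_l1` helper are assumptions for illustration; the actual extraction of themes and concerns would be done by an LLM call that is not shown here.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

L1_THRESHOLD = 10  # assumed: unsummarised message pairs needed to trigger L1


@dataclass
class L1Summary:
    themes: list            # core themes discussed in the chunk
    concerns: list          # user concerns and emotional state
    recommendations: list   # actionable items or advice given
    covers_from: datetime   # timestamp of the first covered exchange
    covers_to: datetime     # timestamp of the last covered exchange
    exchange_count: int     # how many exchanges this summary represents
    created_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


def should_generate_l1(unsummarised_pairs, threshold=L1_THRESHOLD):
    """True once enough unsummarised exchanges have accumulated."""
    return unsummarised_pairs >= threshold


def build_l1(exchange_timestamps, themes, concerns, recommendations):
    """Attach coverage metadata to content a summariser has already extracted."""
    return L1Summary(
        themes=themes,
        concerns=concerns,
        recommendations=recommendations,
        covers_from=min(exchange_timestamps),
        covers_to=max(exchange_timestamps),
        exchange_count=len(exchange_timestamps),
    )


# Hypothetical chunk: ten exchanges, one per minute.
timestamps = [datetime(2024, 1, 1, 12, m, tzinfo=timezone.utc) for m in range(10)]
summary = build_l1(
    timestamps,
    themes=["Introduced new grain-free diet, monitoring for allergies"],
    concerns=["Anxious about symptoms, seeking reassurance"],
    recommendations=["Monitor for allergic reactions"],
)
```

Recording the covered time range on each summary is what lets a later stage work out which exchanges are already accounted for.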

The beauty of L1 summaries is specificity. They preserve details because they're covering a small window—think "remember what happened Tuesday afternoon" rather than "remember what happened this year."

The L2 Layer: Strategic Memory

The second layer consolidates multiple L1 summaries into higher-order insights. Once enough L1 summaries accumulate (say, three or more), we trigger L2 generation.

L2 summaries don't just concatenate L1s—they extract patterns:

- Recurring themes across conversations (e.g., "Ongoing anxiety management, tried three different approaches")

- Temporal progressions (how symptoms evolved, what interventions were attempted)

- Cross-topic connections (diet changes correlating with behaviour improvements)

- Long-term user concerns (persistent worries, communication preferences)

This layer includes its own metadata: which L1 summaries were processed, a block index for tracking consolidation state, and version information for handling updates.
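The consolidation step might be sketched as follows. The `L2Summary` fields mirror the metadata described above, but the names, the threshold of 3, and the `extract_patterns` callback (standing in for an LLM call) are assumptions rather than the production design.

```python
from dataclasses import dataclass

L2_THRESHOLD = 3  # assumed: unconsolidated L1 summaries needed to trigger L2


@dataclass
class L2Summary:
    patterns: list        # recurring themes, progressions, cross-topic links
    source_l1_ids: list   # which L1 summaries were processed
    block_index: int      # position of this block in the consolidation sequence
    version: int = 1      # bumped if the block is ever regenerated


def pending_l1_ids(all_l1_ids, existing_l2):
    """L1 summaries not yet folded into any L2 block, in original order."""
    consumed = {i for block in existing_l2 for i in block.source_l1_ids}
    return [i for i in all_l1_ids if i not in consumed]


def maybe_consolidate(all_l1_ids, existing_l2, extract_patterns, threshold=L2_THRESHOLD):
    """Create the next L2 block if enough unconsolidated L1 summaries exist."""
    pending = pending_l1_ids(all_l1_ids, existing_l2)
    if len(pending) < threshold:
        return None
    return L2Summary(
        patterns=extract_patterns(pending),
        source_l1_ids=pending,
        block_index=len(existing_l2),
    )


def stub_patterns(l1_ids):
    # Placeholder for the LLM call that would extract cross-summary patterns.
    return [f"pattern across {len(l1_ids)} summaries"]


too_few = maybe_consolidate(["l1-a", "l1-b"], [], stub_patterns)
first_block = maybe_consolidate(["l1-a", "l1-b", "l1-c"], [], stub_patterns)
repeat = maybe_consolidate(["l1-a", "l1-b", "l1-c"], [first_block], stub_patterns)
```

Because each block records its source L1 identifiers, running the consolidation again over the same inputs produces nothing new: `repeat` is `None`.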

The L2 layer is where we capture the "story arc" of a pet parent's journey—not just isolated conversations, but the narrative thread connecting them.

Tradeoffs & Design Decisions

No architecture is perfect. Here's what we optimised for and what we sacrificed.

What We Gained

Temporal coherence: Unlike vector search, our system preserves the timeline. The AI knows what happened first, what was tried next, and how things progressed. Healthcare conversations demand this.

Graceful degradation: As conversations grow longer, context quality doesn't fall off a cliff; it compresses gradually. A 100-message conversation gives you L2 + L1 + recent messages, which is richer than what a 10-message conversation provides, yet it fits within the same token budget.

Cross-session persistence: When a pet parent returns after days or weeks, the system rebuilds context seamlessly. There's no "cold start" problem because summaries are persistent, not ephemeral.

Predictable performance: Token usage is bounded and predictable. We never risk hitting context limits or having to frantically prune history.
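One way to get that bound is to give each layer a fixed budget when assembling the prompt. The sketch below assumes a crude word-count proxy for tokens and per-layer budgets of 200, 300, and 500; both are illustrative choices, not measured values.

```python
def approx_tokens(text):
    """Crude token estimate: whitespace-separated words (an assumption, not a real tokenizer)."""
    return len(text.split())


def take_newest_within(items, budget):
    """Keep the newest items that fit the budget, returned in chronological order."""
    kept, used = [], 0
    for item in reversed(items):
        cost = approx_tokens(item)
        if used + cost > budget:
            break
        kept.append(item)
        used += cost
    return list(reversed(kept))


def assemble_context(l2_summaries, l1_summaries, recent_messages, budgets=(200, 300, 500)):
    """Build the context as L2 + L1 + recent messages, each capped by its own budget."""
    l2_budget, l1_budget, recent_budget = budgets
    return (
        take_newest_within(l2_summaries, l2_budget)
        + take_newest_within(l1_summaries, l1_budget)
        + take_newest_within(recent_messages, recent_budget)
    )


# Hypothetical long conversation: far more material than the budgets allow.
l2 = [" ".join(["theme"] * 100)] * 5
l1 = [" ".join(["detail"] * 40)] * 30
recent = [" ".join(["word"] * 50)] * 100

context = assemble_context(l2, l1, recent)
total_tokens = sum(approx_tokens(part) for part in context)
```

However long the underlying history gets, `total_tokens` can never exceed the sum of the three budgets, which is what makes the cost predictable.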

What We Sacrificed

Perfect recall: We can't retrieve every specific detail from 50 messages ago. If someone asks "what exact time did I say Luna vomited on Tuesday?" we might not have that—it might have been compressed away in summarisation.

We made this tradeoff consciously. Healthcare conversations need patterns and progression more than perfect timestamps. If exact recall matters (medication schedules, specific test results), we store that separately as structured data.

Semantic flexibility: Unlike embedding-based systems, we can't magically retrieve context based on conceptual similarity. Our system is chronologically organised—it excels at "what happened over time" but doesn't do "find all mentions of X across history."

Again, deliberate. For pet health, timeline matters more than topic clustering.

Summarisation latency: Generating summaries costs time—a few hundred milliseconds per summary operation. Systems that just retrieve pre-existing messages are faster.

But this latency is backloaded (happens between conversations, not during them) and the tradeoff for continuity is worth it. Pet parents don't notice a 200ms delay between sessions; they absolutely notice when the AI forgets context.

Why This Fits Our Use Case

Hoomanely's conversations have specific characteristics that make hierarchical summarisation ideal:

Longitudinal by nature: Pet health isn't transactional. It's ongoing monitoring and pattern recognition. A system designed for continuity over time aligns perfectly.

High-stakes context: Missing context isn't just annoying; it could lead to bad advice. We need reliable continuity of the important details more than we need raw speed or verbatim recall.

Narrative structure: Pet parents tell stories. "Luna's been anxious since the move" isn't a fact to extract—it's a narrative beat. Our system preserves story arcs because it's built around narrative compression, not fact extraction.

What We Learned Building This

Compression is an Art

Early iterations compressed too aggressively. We'd lose critical details in L1 generation. We learned that tactical summaries need to be "lossy but faithful"—they simplify wording but preserve meaning and specifics.

L2 summaries can be more aggressive—they extract themes, not details. Finding the right compression ratio for each layer took iteration and qualitative evaluation.

State Management is Critical

One of the hardest problems wasn't the summarisation itself—it was ensuring we never double-summarise content and always maintain consistent state across concurrent operations.

We learned to treat summarisation as a state machine problem, not just an LLM problem. Coverage tracking, atomic updates, and idempotency became as important as prompt engineering.
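A minimal in-memory sketch of coverage tracking with idempotent summarisation. The `CoverageTracker` class and `summarise_once` helper are hypothetical names, and a real deployment would need the same guarantees from its database (for example, conditional writes) rather than a process-local lock.

```python
import threading


class CoverageTracker:
    """Tracks which message ids are already covered by a summary."""

    def __init__(self):
        self._lock = threading.Lock()
        self._covered = set()

    def claim(self, message_ids):
        """Atomically claim ids not yet covered; returns only the newly claimed ones."""
        with self._lock:
            fresh = [m for m in message_ids if m not in self._covered]
            self._covered.update(fresh)
            return fresh

    def release(self, message_ids):
        """Undo a claim, for example when summarisation fails."""
        with self._lock:
            self._covered.difference_update(message_ids)


def summarise_once(tracker, message_ids, summarise):
    """Summarise only messages nobody has claimed; safe to call repeatedly."""
    fresh = tracker.claim(message_ids)
    if not fresh:
        return None  # everything is already covered, nothing to do
    try:
        return summarise(fresh)
    except Exception:
        tracker.release(fresh)  # let a later attempt pick these up again
        raise


calls = []


def fake_summarise(ids):
    # Stand-in for the LLM call; records what it was asked to summarise.
    calls.append(list(ids))
    return f"summary of {len(ids)} messages"


tracker = CoverageTracker()
first = summarise_once(tracker, [1, 2, 3], fake_summarise)
retry = summarise_once(tracker, [1, 2, 3], fake_summarise)
overlap = summarise_once(tracker, [3, 4, 5], fake_summarise)
```

The retry is a no-op, and the overlapping request only summarises messages 4 and 5, so no message is ever summarised twice.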

Pet-Specific Isolation is Non-Negotiable

In multi-pet households, context bleeding is catastrophic. Early testing showed that without strict isolation, summaries would occasionally conflate details about different pets.

We learned that partition design and query filtering aren't just performance optimisations—they're correctness requirements for healthcare applications.
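The idea can be sketched with a store that is keyed by pet and offers no way to read across pets. The `SummaryStore` class, the `(user_id, pet_id)` key, and the second pet "milo" are all hypothetical, chosen only to illustrate the isolation property.

```python
from collections import defaultdict


class SummaryStore:
    """Summaries partitioned by (user_id, pet_id); reads never cross partitions."""

    def __init__(self):
        self._partitions = defaultdict(list)

    def add(self, user_id, pet_id, summary):
        self._partitions[(user_id, pet_id)].append(summary)

    def for_pet(self, user_id, pet_id):
        """Return a copy of one pet's summaries; there is no 'all pets' query."""
        return list(self._partitions.get((user_id, pet_id), []))


store = SummaryStore()
store.add("user-1", "luna", "Ongoing separation anxiety, allergic to chicken")
store.add("user-1", "milo", "Recovering from a sprained paw")

luna_context = store.for_pet("user-1", "luna")
milo_context = store.for_pet("user-1", "milo")
unknown_context = store.for_pet("user-1", "rex")
```

Making the pet identifier part of the key, rather than an optional filter, means a forgotten `WHERE` clause cannot leak one pet's history into another's context.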

Users Don't Notice Good Memory (But They Notice Bad Memory)

When the system works, it's invisible. Pet parents just experience conversations that feel continuous. They don't think "wow, great memory system"—they think "this AI understands Luna."

But when memory fails—when the AI forgets something important or asks a question already answered—trust breaks immediately. Memory systems are high-stakes plumbing: you only notice them when they break.

Key Takeaways

The "infinite context" problem isn't really about context windows—it's about how we organise and compress memory.

Vector embeddings, RAG, and sliding windows each solve specific problems well. But for longitudinal, high-stakes conversations like healthcare, hierarchical summarisation aligns with how humans naturally compress and recall information over time.

Our two-level architecture preserves what matters: temporal progression, narrative coherence, and graceful scaling. It sacrifices perfect recall and semantic flexibility, but those aren't critical for our use case.

Building effective AI memory isn't about cramming more tokens into prompts. It's about understanding how different memory patterns serve different conversational needs, and architecting systems that mirror how humans actually remember—with layers, compression, and context-aware detail.

For Hoomanely, this system is foundational to our mission. Pet parents shouldn't have to repeat themselves, and AI shouldn't forget what matters.