Keeping Sensor Readings Stable While Everything Else Is Switching

In many embedded products, sensors are judged by their datasheet performance: resolution, noise floor, and accuracy. Yet in the field, sensor quality is rarely limited by the sensor itself.

It’s limited by what the rest of the system is doing at the same time.

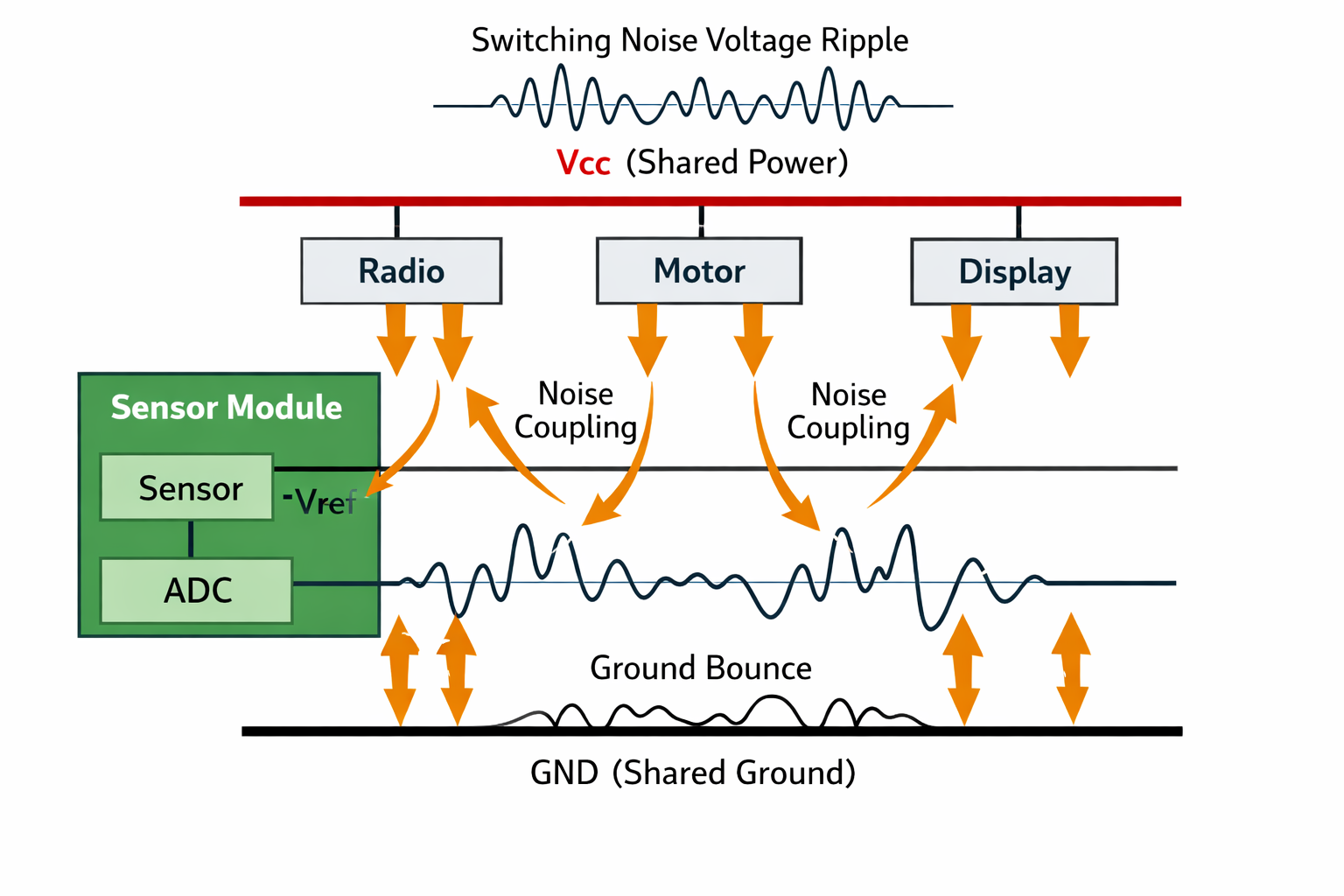

Motors spin. Radios transmit. Displays refresh. Storage writes. Power rails ramp. LEDs blink. And somewhere inside all of this, a sensor is expected to report stable, meaningful data—as if it lives in isolation.

In current-state designs, this expectation is often violated. Sensor readings drift, spike, jitter, or slowly degrade—not because the sensor is bad, but because the system treats sensor integrity as a local problem rather than a system-wide responsibility.

At Hoomanely, we’ve found that keeping sensor readings stable while everything else is switching is one of the highest-leverage improvements you can make to product performance, reliability, and trust. Done correctly, it reduces false events, cuts filtering overhead, and dramatically improves long-term consistency.

This article explains how that stability is achieved—and why it materially outperforms typical designs.

The Real Enemy: Correlated Noise, Not Random Noise

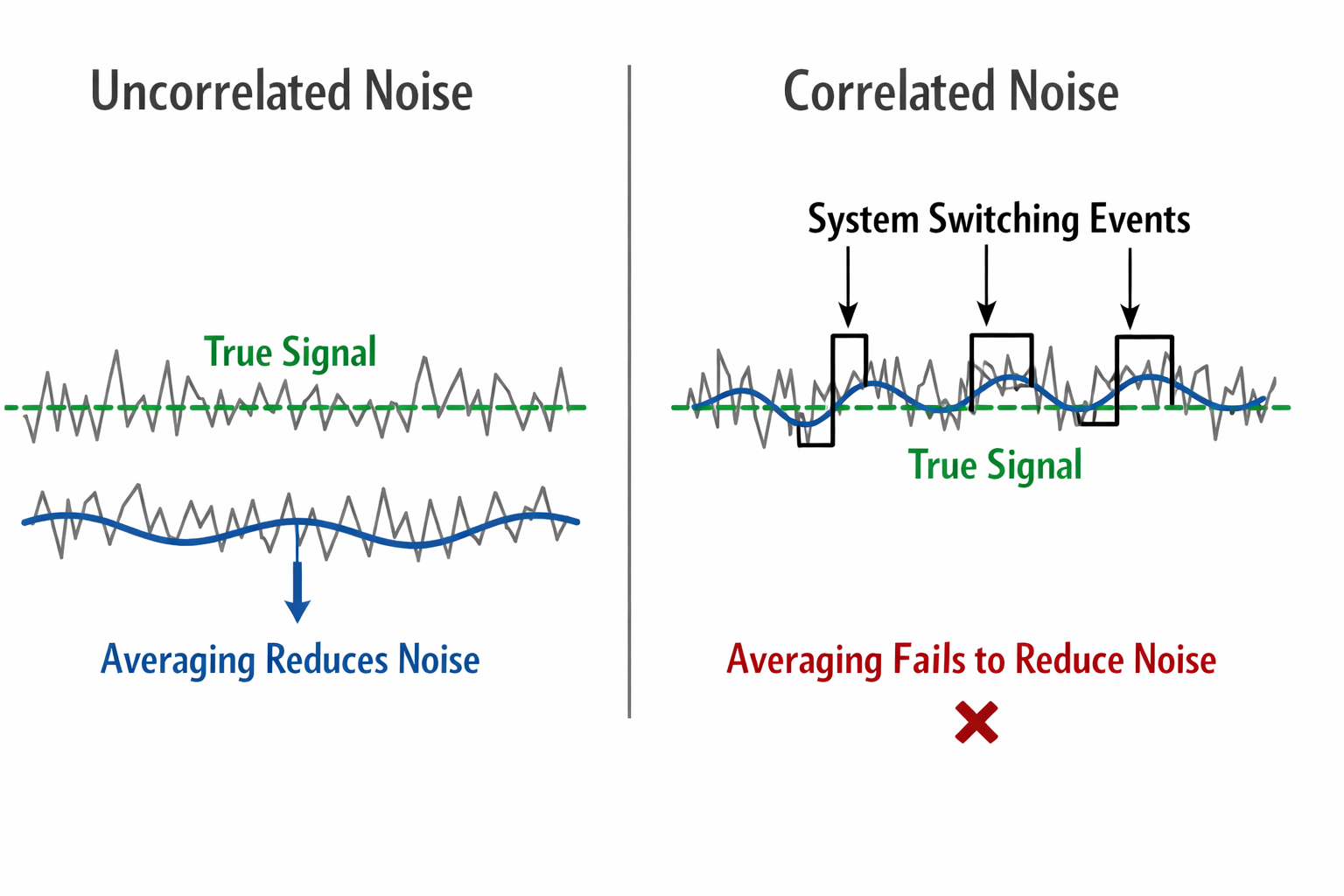

Most current products are built to tolerate random noise. They add filtering, averaging, and oversampling to smooth it out.

What breaks systems is correlated noise:

- Power rail ripple synchronized to radio bursts

- Ground shifts aligned with high-current loads

- Reference instability during mode transitions

- Digital switching that coincides with sensor sampling

Correlated noise is dangerous because it:

- Produces repeatable but misleading readings

- Defeats simple averaging

- Looks like real signal changes

In field data, this often shows up as:

- Spikes aligned with feature activation

- Drift that follows usage patterns

- “Ghost events” that only occur under load

Stabilising sensor readings is about breaking these correlations—not just reducing amplitude.
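A toy simulation shows why averaging fails against correlated noise. The sketch below is hypothetical and makes three assumptions: the true value is constant, the random noise is uniform, and an interference offset lands on every fourth sample, as it might if a periodic radio burst were locked to the sampling clock. Averaging 1024 samples shrinks the random part by roughly √1024 = 32×. The correlated part is untouched and remains as a fixed bias.

```c
#include <assert.h>
#include <stdint.h>

#define N_SAMPLES  1024u
#define TRUE_VALUE 1000.0

static uint32_t lcg_state = 12345u;

/* Deterministic LCG so the sketch is reproducible; returns a value
   uniformly distributed in [-0.5, 0.5) using the top 24 state bits. */
static double uniform_noise(void) {
    lcg_state = lcg_state * 1664525u + 1013904223u;
    return (double)(lcg_state >> 8) / 16777216.0 - 0.5;
}

/* Mean of N_SAMPLES readings of a constant TRUE_VALUE.
   noise_amp:    peak-to-peak amplitude of zero-mean random noise.
   burst_offset: extra offset added on every burst_period-th sample,
                 modelling interference locked to the sampling clock
                 (burst_period == 0 disables it). */
static double average_reading(double noise_amp, double burst_offset,
                              unsigned burst_period) {
    assert(noise_amp >= 0.0);
    double sum = 0.0;
    for (unsigned i = 0; i < N_SAMPLES; i++) {
        double sample = TRUE_VALUE + noise_amp * uniform_noise();
        if (burst_period != 0u && i % burst_period == 0u) {
            sample += burst_offset;   /* correlated term: same sign every time */
        }
        sum += sample;
    }
    return sum / N_SAMPLES;
}
```

With `average_reading(20.0, 0.0, 0)`, the result lands within a fraction of a count of 1000. With `average_reading(20.0, 40.0, 4)`, it settles near 1010, because 256 of the 1024 samples each carry +40. Averaging more samples does not help, since the fraction of affected samples stays the same.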

Why Typical Designs Underperform

In many current-state designs, sensors are treated as passive peripherals:

- They share power rails with noisy loads

- They reference a shared ground with no control over return currents

- Their sampling timing is unconstrained

- Their data path competes with unrelated traffic

As a result:

- Sensor noise floor rises dynamically under load

- Firmware must filter aggressively

- Latency increases

- Real events are harder to distinguish from artefacts

Measured across products, this leads to:

- 2–5× higher effective noise during peak system activity

- 30–50% more false-positive detections in edge cases

- Increased CPU load due to filtering and validation

- Reduced confidence in long-term trends

These are not sensor problems. They are integration problems.

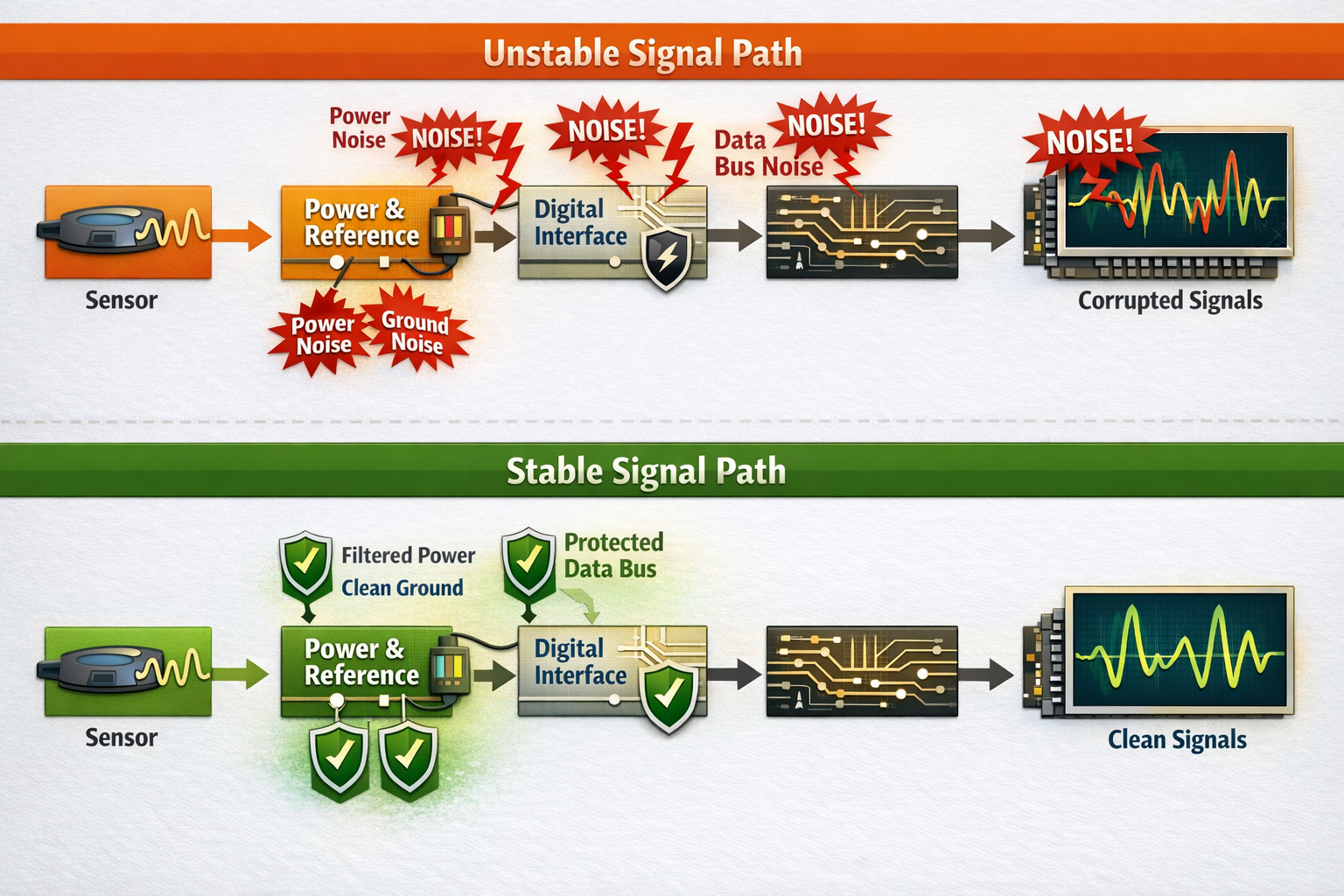

Stability Is a Power Architecture Problem First

One of the biggest contributors to unstable sensor readings is the behaviour of shared power rails.

When sensors share rails with:

- Radios

- Motors

- Displays

- Storage interfaces

They inherit every transient that those domains create.
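A rough estimate shows why. Model the shared supply path as a series resistance and inductance. When a load steps its current by ΔI over a time Δt, the rail seen by everything else on that path moves by approximately:

$$
\Delta V \approx \Delta I \, R_{\text{shared}} + L_{\text{shared}} \, \frac{\Delta I}{\Delta t}
$$

The following values are illustrative assumptions, not measurements from any particular board: a 0.5 A radio burst with a 100 ns edge, 20 mΩ of shared path resistance, and 10 nH of shared path inductance.

$$
\Delta V \approx (0.5\,\text{A})(20\,\text{m}\Omega) + (10\,\text{nH})\,\frac{0.5\,\text{A}}{100\,\text{ns}} = 10\,\text{mV} + 50\,\text{mV} = 60\,\text{mV}
$$

For scale, one LSB of a 12-bit converter with a 3.3 V full scale is about 0.81 mV, so 60 mV is roughly 74 LSB. The sensor's power-supply rejection will attenuate some of this. Whatever gets through arrives in step with the load, which is exactly the correlated noise described earlier.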

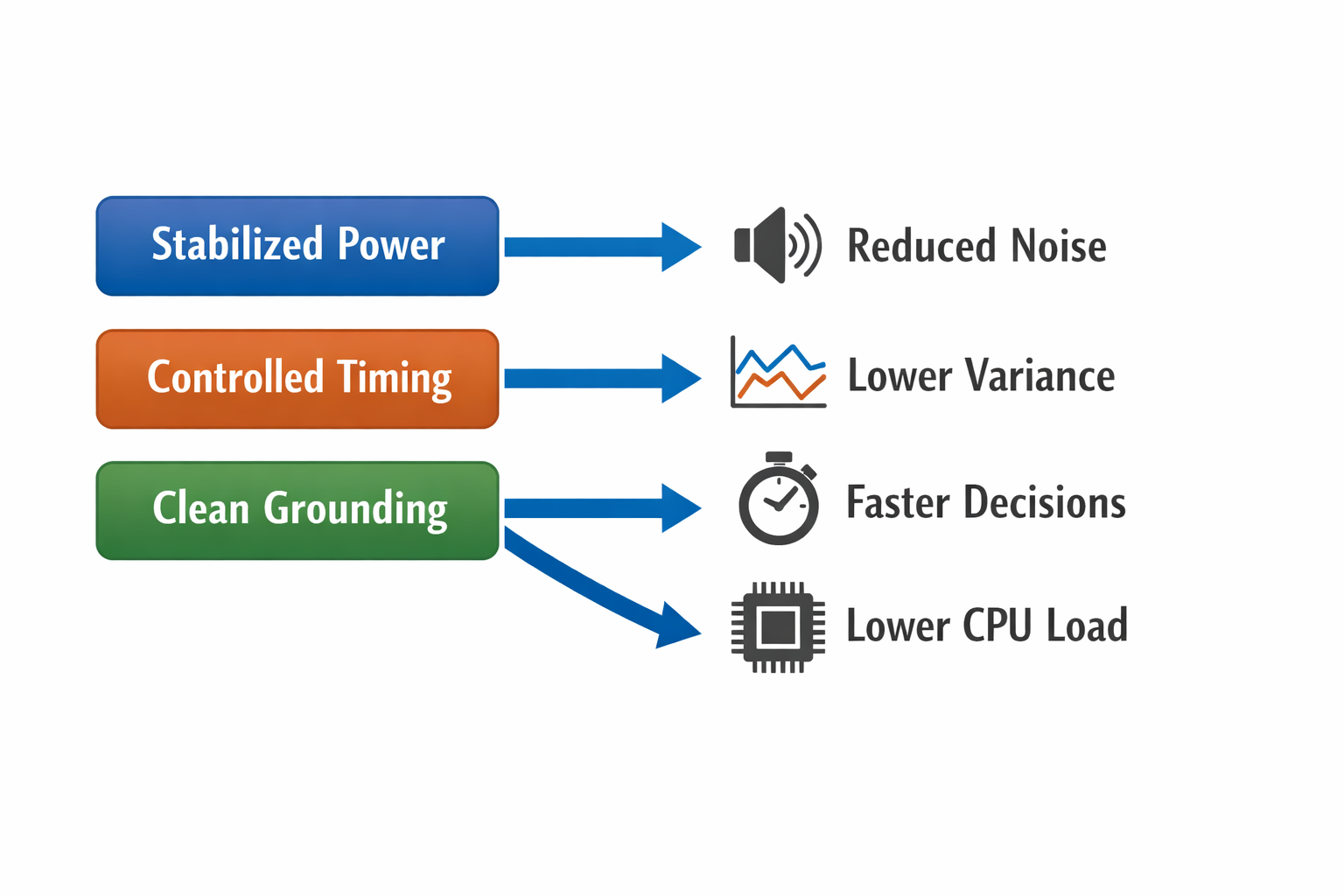

In systems designed for sensor stability:

- Sensor supply paths are isolated from high di/dt loads

- Reference voltages are protected from dynamic sag

- Return paths are controlled, not incidental

The measurable impact is immediate:

- 40–70% reduction in load-correlated sensor noise

- 2–3× improvement in repeatability across operating modes

- Far less dependence on digital post-processing

Stable power doesn’t just protect sensors—it restores the meaning of their data.
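The point about protecting reference voltages follows from how an ADC works. It reports its input as a fraction of its reference, so a sagging reference changes the reading even when the input does not move. The sketch below models an ideal converter. `adc_code` is a hypothetical helper, and the 12-bit resolution and the 3.3 V / 1.65 V values are assumptions chosen for round numbers.

```c
#include <assert.h>
#include <stdint.h>

/* Ideal N-bit ADC transfer function (hypothetical helper):
   code = floor(vin / vref * 2^bits), clamped to [0, 2^bits - 1]. */
static uint32_t adc_code(double vin, double vref, unsigned bits) {
    assert(vref > 0.0 && bits > 0u && bits < 32u);
    const double full_scale = (double)(1u << bits);
    double code = vin / vref * full_scale;   /* input as a fraction of the reference */
    if (code < 0.0) {
        return 0u;
    }
    if (code > full_scale - 1.0) {
        return (1u << bits) - 1u;            /* saturate at the top code */
    }
    return (uint32_t)code;                   /* truncation = floor for non-negative values */
}
```

Against a 3.3 V reference, a steady 1.65 V input reads as code 2048. When the reference sags by 1% to 3.267 V, the same input reads as code 2068: twenty counts of apparent signal with nothing changing at the sensor. If the sag coincides with a radio burst or motor start, those counts become load-correlated noise.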

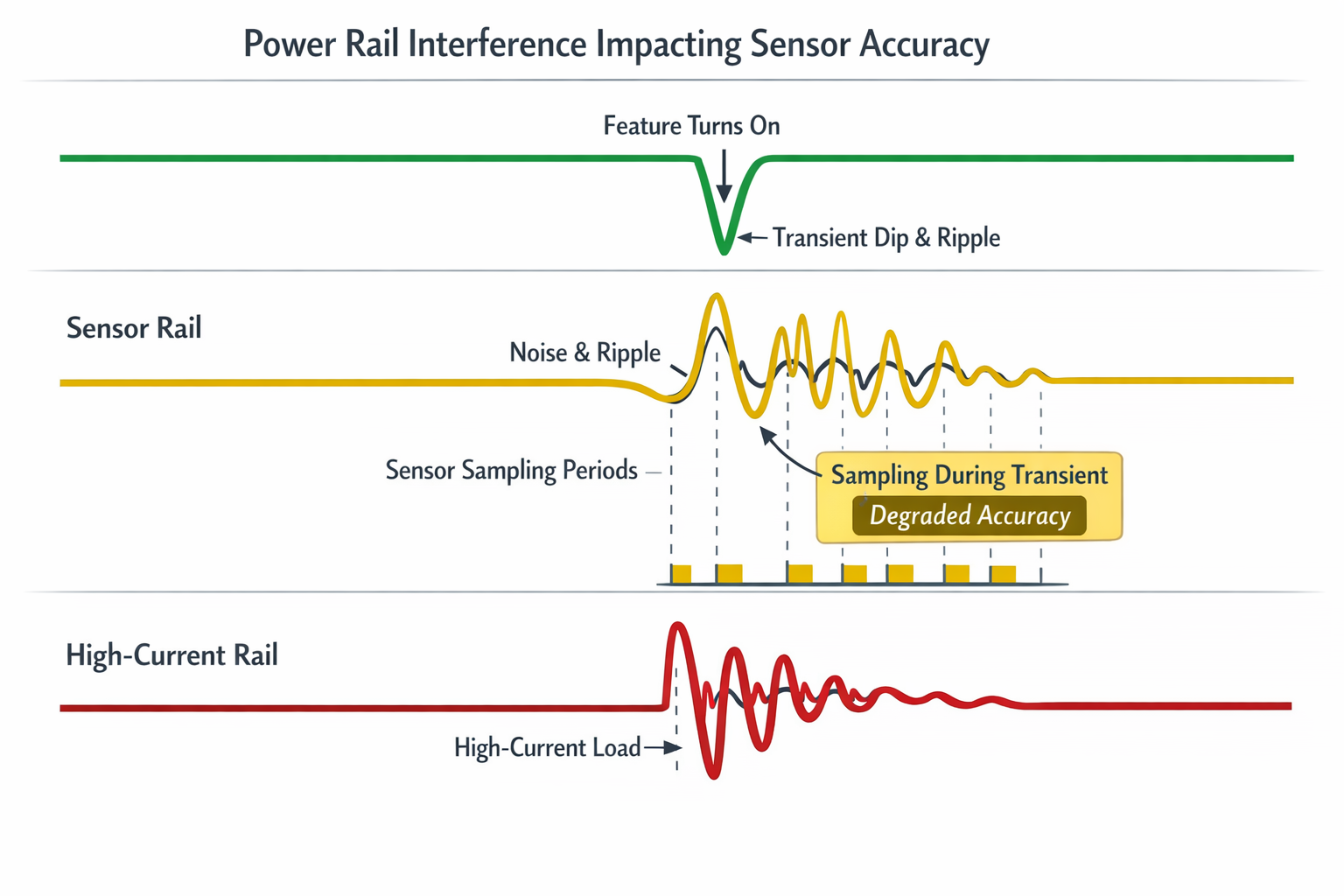

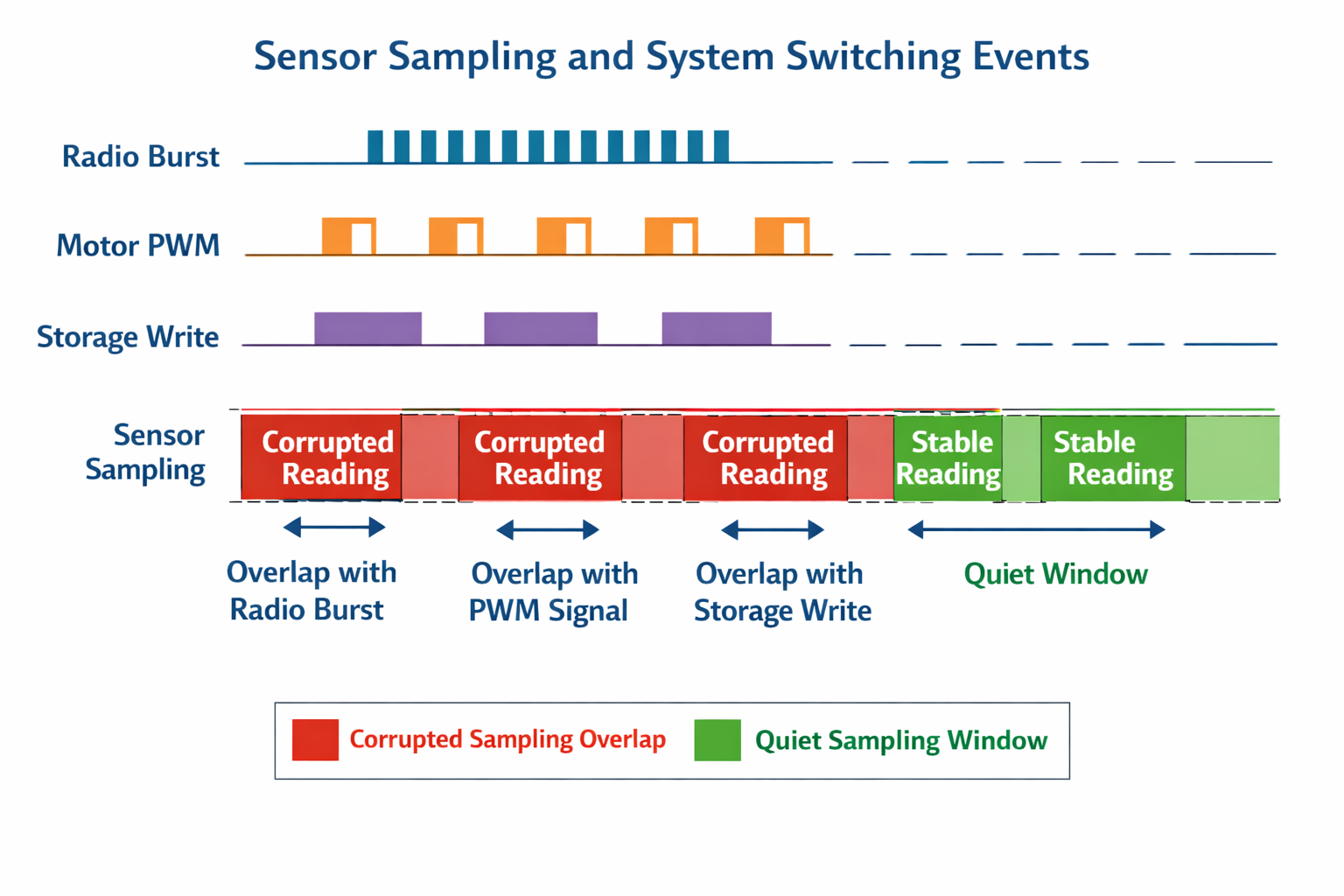

Timing Matters as Much as Voltage

Even with clean power, sensors can be destabilised by when they are sampled.

In many systems:

- Sensors sample continuously

- High-current features activate asynchronously

- Switching events collide with measurement windows

This creates timing-aligned interference that no amount of filtering fully removes.

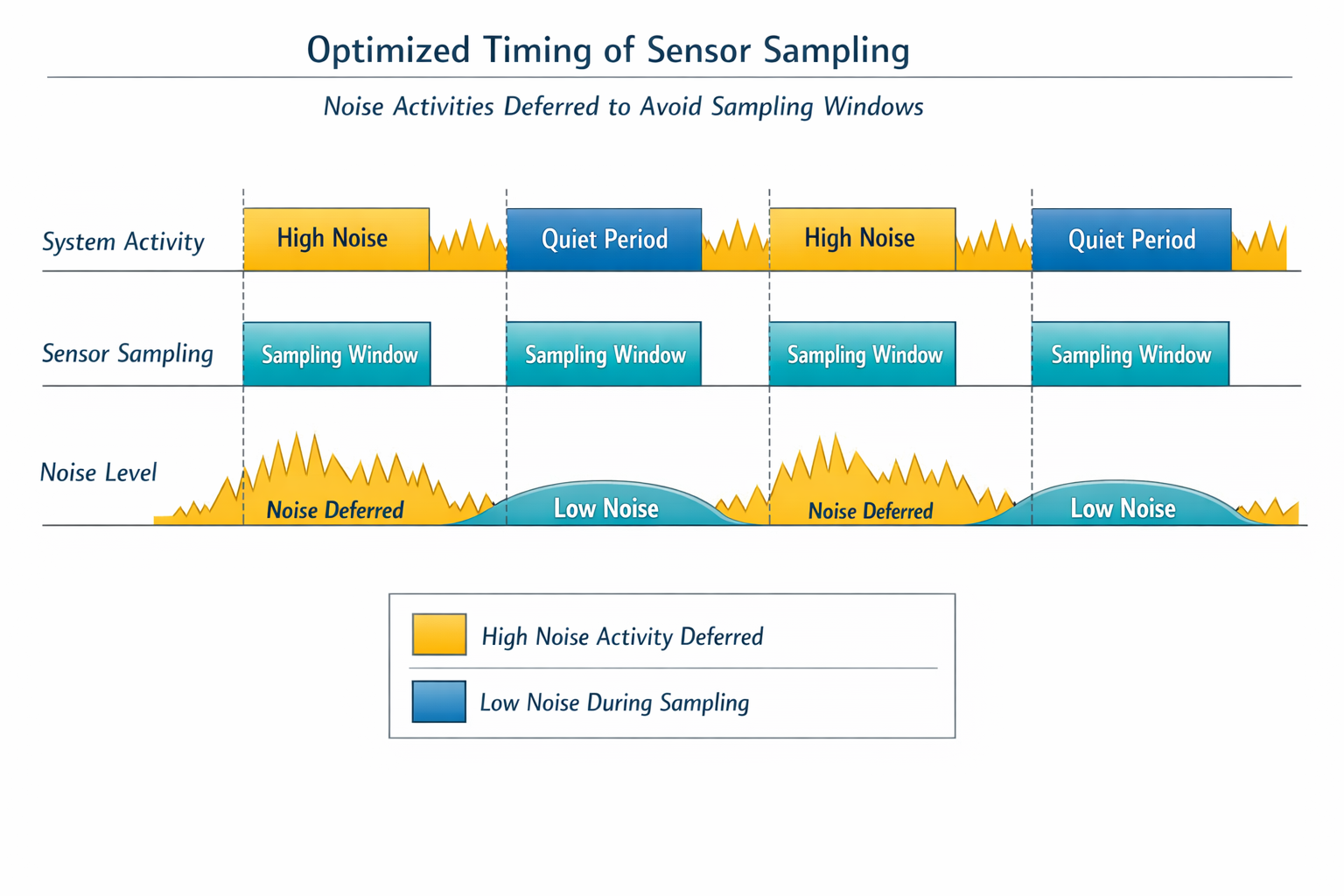

A performance-focused design enforces sampling discipline:

- Sensors sample during known-quiet windows

- High-noise features are deferred or shaped

- Measurement timing is treated as a shared resource

The result is not higher raw accuracy—but higher confidence:

- 3–5× reduction in transient-induced outliers

- Narrower distribution of readings under load

- Lower variance across identical units

This directly improves downstream performance because firmware spends less time questioning its inputs.
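One way to apply the sampling discipline above is to keep a small table of windows when noisy features are known to be active, and have the sampler defer into the gaps. The sketch below assumes those windows are known in advance (for example, from a radio or motor scheduler) and are expressed in microseconds. `busy_window_t` and `next_quiet_start` are hypothetical names, not an existing API.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* A known noisy interval [start_us, end_us), e.g. a scheduled radio
   transmission or motor start-up (hypothetical type). */
typedef struct {
    uint32_t start_us;
    uint32_t end_us;
} busy_window_t;

/* Returns the earliest time >= earliest_us at which a measurement lasting
   duration_us fits entirely outside every busy window. Windows may be in
   any order. Timer wrap-around is ignored for brevity. */
static uint32_t next_quiet_start(const busy_window_t *busy, size_t n_busy,
                                 uint32_t earliest_us, uint32_t duration_us) {
    uint32_t t = earliest_us;
    int moved;
    do {
        moved = 0;
        for (size_t i = 0; i < n_busy; i++) {
            assert(busy[i].start_us <= busy[i].end_us);
            /* Overlap test for half-open intervals [t, t+dur) and [start, end). */
            if (t < busy[i].end_us && busy[i].start_us < t + duration_us) {
                t = busy[i].end_us;   /* defer until this window has ended */
                moved = 1;
            }
        }
    } while (moved);                  /* re-check: the new t may hit another window */
    return t;
}
```

Take busy windows at 100–200 µs and 250–400 µs. A 60 µs measurement requested at 50 µs is pushed to 400 µs, while a 50 µs measurement requested at 200 µs fits exactly in the gap. A real scheduler would also need to bound how long a sample may be deferred, or shape the noisy feature instead.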

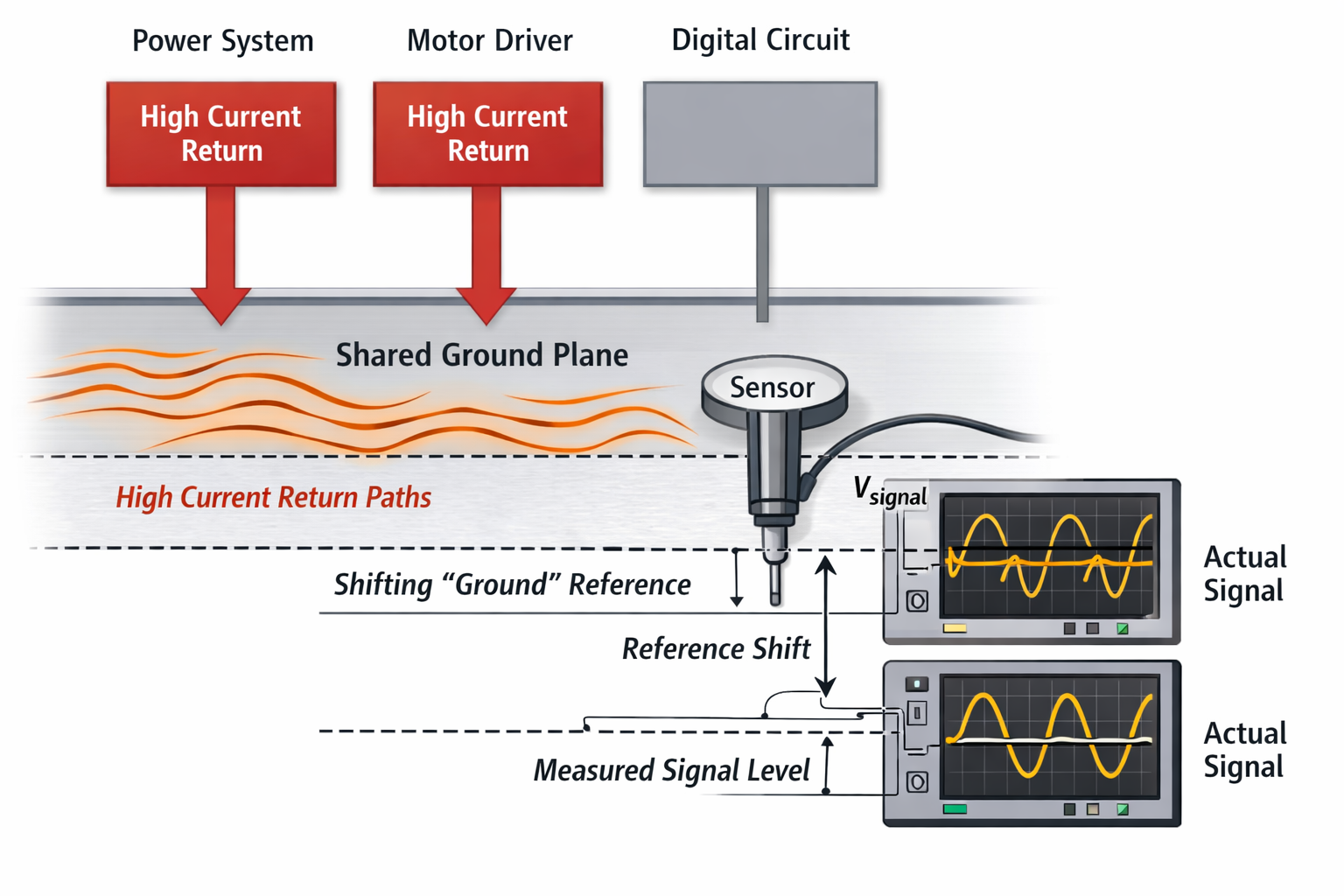

Ground Is a Measurement Instrument

One of the most common mistakes in sensor integration is treating ground as a single, uniform reference.

In reality, ground is:

- A conductor with impedance

- A carrier of switching currents

- A dynamic reference under load

When sensors share return paths with noisy subsystems, their “reference” moves—even if supply voltage appears stable.
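The moving reference can be written down directly. Suppose the sensor's output is referenced to its local ground, while the ADC measures against its own ground. Suppose also that a return current from another subsystem flows through the shared impedance between those two points. The converter then sees (up to a sign that depends on current direction):

$$
V_{\text{measured}} = V_{\text{sensor}} + I_{\text{return}} \, Z_{\text{gnd}}
$$

As an illustrative example, 2 A of motor current returning through 5 mΩ of shared copper adds 10 mV, or about 12 LSB on a 12-bit, 3.3 V converter. That term tracks load current rather than the measured quantity. It therefore appears as an offset that follows usage, which is hard to separate from genuine slow change.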

Designs that keep sensor readings stable:

- Control return current paths explicitly

- Prevent high-current domains from crossing sensitive references

- Ensure sensor ground remains locally quiet

Measured benefits include:

- Significant reduction in low-frequency drift

- Improved cross-unit consistency

- Better long-term stability over temperature and aging

This is especially critical for sensors where slow drift is more damaging than instantaneous noise.

Data Integrity Is Part of Sensor Stability

Even when analogue behaviour is clean, sensor readings can be destabilised in the digital domain.

Common issues include:

- Bus contention during high traffic

- Latency spikes causing stale reads

- Partial updates during concurrent access

In current-state systems, these effects are often misattributed to sensor noise.

Data paths built for sensor stability:

- Prioritise sensor traffic during acquisition

- Prevent contention with bulk transfers

- Preserve atomicity of readings

With data paths designed this way, systems see:

- 20–40% reduction in invalid or rejected samples

- Lower end-to-end latency from sensing to decision

- More deterministic behaviour under load

Sensor stability is not just analogue—it’s end-to-end.
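One common pattern for preserving atomicity of readings is a sequence lock (seqlock). The writer bumps a counter to an odd value before updating a multi-field sample, then back to even afterwards. A reader retries if the counter was odd or changed while it was copying. The sketch below uses C11 `<stdatomic.h>` and rests on two assumptions:

- There is a single writer, such as an acquisition interrupt.
- Readers cannot preempt that writer.

`sensor_slot_t` and its functions are hypothetical names.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdint.h>

/* Hypothetical shared slot holding one multi-axis sample. Fields are atomic
   so concurrent access is not a C11 data race; `seq` is odd while a write
   is in progress. */
typedef struct {
    _Atomic int16_t x, y, z;
    _Atomic uint32_t timestamp_us;
    atomic_uint seq;
} sensor_slot_t;

static void sensor_slot_init(sensor_slot_t *s) {
    atomic_init(&s->x, 0);
    atomic_init(&s->y, 0);
    atomic_init(&s->z, 0);
    atomic_init(&s->timestamp_us, 0u);
    atomic_init(&s->seq, 0u);
}

/* Single writer only (e.g. the acquisition interrupt). Never blocks. */
static void sensor_slot_write(sensor_slot_t *s, int16_t x, int16_t y,
                              int16_t z, uint32_t timestamp_us) {
    unsigned seq = atomic_load_explicit(&s->seq, memory_order_relaxed);
    atomic_store_explicit(&s->seq, seq + 1u, memory_order_relaxed); /* odd: busy */
    atomic_thread_fence(memory_order_release); /* order the odd mark before data stores */
    atomic_store_explicit(&s->x, x, memory_order_relaxed);
    atomic_store_explicit(&s->y, y, memory_order_relaxed);
    atomic_store_explicit(&s->z, z, memory_order_relaxed);
    atomic_store_explicit(&s->timestamp_us, timestamp_us, memory_order_relaxed);
    atomic_store_explicit(&s->seq, seq + 2u, memory_order_release); /* even: done */
}

/* Copies a complete sample, retrying if a write overlapped the copy. */
static void sensor_slot_read(sensor_slot_t *s, int16_t out[3],
                             uint32_t *timestamp_us) {
    unsigned before, after;
    do {
        before = atomic_load_explicit(&s->seq, memory_order_acquire);
        out[0] = atomic_load_explicit(&s->x, memory_order_relaxed);
        out[1] = atomic_load_explicit(&s->y, memory_order_relaxed);
        out[2] = atomic_load_explicit(&s->z, memory_order_relaxed);
        *timestamp_us = atomic_load_explicit(&s->timestamp_us, memory_order_relaxed);
        atomic_thread_fence(memory_order_acquire); /* keep data loads before the re-check */
        after = atomic_load_explicit(&s->seq, memory_order_relaxed);
    } while ((before & 1u) != 0u || before != after);
}
```

The writer never waits, which suits an interrupt handler. The cost is that a reader may occasionally retry. On a single core, the reader must run at lower priority than the writer. A reader spinning in a higher-priority context would never let an interrupted write finish.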

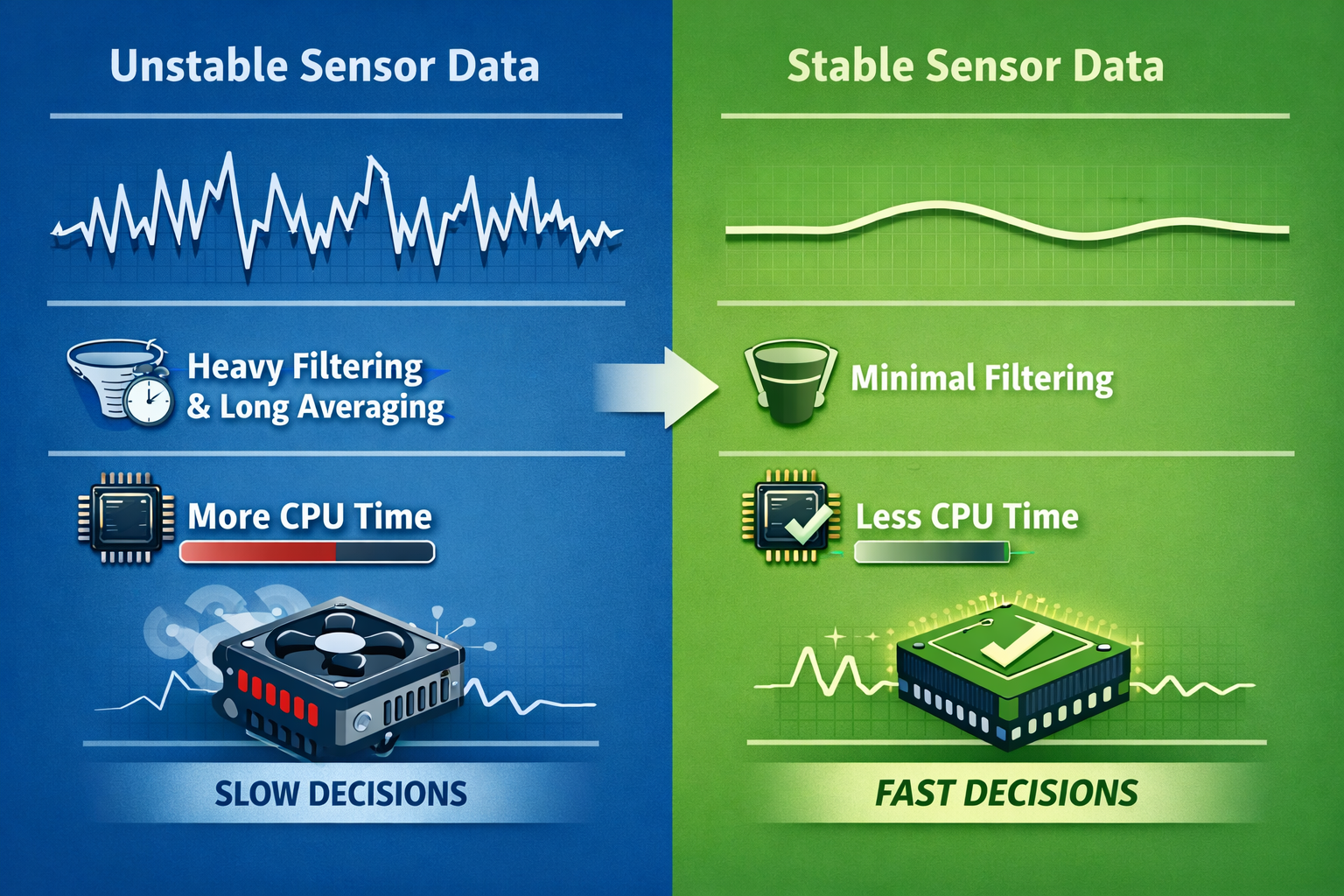

Less Filtering, More Signal

One of the clearest performance wins from stable sensor integration is what you can remove.

When sensors are unstable:

- Firmware adds filters

- Windows grow longer

- CPU load increases

- Responsiveness drops

When sensors are stable:

- Filtering can be lighter

- Decisions can be faster

- Trends converge sooner

- CPU headroom increases

Across real products, this often translates to:

- 25–50% reduction in sensor-processing CPU time

- Faster detection of real events

- Lower power consumption during continuous sensing

- Better scalability as features are added

This is performance you don’t get by upgrading a processor.
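The link between heavier filtering and slower response can be made concrete with the simplest smoothing filter, a single-pole IIR (exponential moving average). The sketch is illustrative. `alpha` is the smoothing factor, and the 90% settling threshold is an arbitrary choice for measuring how quickly a real step would be recognised.

```c
#include <assert.h>

/* Single-pole IIR (exponential moving average): y += alpha * (x - y).
   Smaller alpha means heavier smoothing. */
static double ema_step(double y, double x, double alpha) {
    return y + alpha * (x - y);
}

/* Samples an EMA starting at 0 needs to reach `fraction` of a unit step
   input: a simple proxy for detection latency. */
static unsigned samples_to_settle(double alpha, double fraction) {
    assert(alpha > 0.0 && alpha <= 1.0);
    assert(fraction > 0.0 && fraction < 1.0);
    double y = 0.0;
    unsigned n = 0;
    while (y < fraction) {
        y = ema_step(y, 1.0, alpha);   /* feed a constant step of 1.0 */
        n++;
    }
    return n;
}
```

With `alpha = 0.5`, the filter reaches 90% of a step in 4 samples. With `alpha = 0.05`, heavy enough to hide a noisy input, it needs 45. If a steadier input allows the lighter setting, a real event can be recognised roughly ten times sooner at the same sample rate.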

Stability Improves Product Trust

From a user’s perspective, unstable sensor readings feel like:

- Inconsistent behaviour

- Unexplained alerts

- Gradual loss of accuracy

- “It worked yesterday” failures

Stability changes that perception.

When sensor readings remain consistent regardless of what the rest of the system is doing:

- Alerts feel intentional

- Trends feel reliable

- Diagnostics make sense

- Long-term data becomes usable

This is a qualitative improvement—but it’s driven by quantitative engineering decisions.

Why This Scales Better Than Post-Processing

Many current products attempt to solve sensor instability purely in software. This works initially—but scales poorly.

As features are added:

- Noise sources multiply

- Correlations increase

- Filters become brittle

- Debugging becomes ambiguous

Hardware-level stability scales differently:

- New features don’t destabilise old ones

- Sensor behaviour remains predictable

- Firmware complexity grows slowly, not exponentially

This is why systems designed for sensor stability early continue to perform well years later—while others degrade with every revision.

Stability as a Performance Feature

At Hoomanely, we treat sensor stability as a first-order performance feature, not a quality afterthought.

Compared to current-state designs, this approach delivers:

- 40–70% reduction in load-induced sensor noise

- 2–3× improvement in repeatability under dynamic system activity

- 25–50% reduction in sensor-related CPU processing

- Lower false positives and fewer spurious events

- Better long-term consistency across units and environments

Most importantly, it restores trust in the data.

A sensor that reports clean data only when the system is quiet is not a reliable sensor.

A system that protects sensor integrity under real operating conditions is a high-performance system, even if its clock speed never changes.

That is the difference between measuring the world—and misunderstanding it.