Learning Meals with Image Similarity

Intro

One of the hardest problems in smart pet devices isn’t sensing - it’s understanding context. At Hoomanely, our EverBowl feeding system can reliably detect when a dog is eating using weight sensors, proximity sensing, and on‑device vision. But knowing what the dog is eating is a very different challenge.

Pet parents feed a wide variety of meals: dry kibble, wet food, home‑cooked mixes, toppers, and rotating combinations. Hard‑coding food classes or forcing users to label every meal forever leads to friction and poor adoption. We needed a system that starts simple, learns gradually, and eventually works automatically - without sacrificing accuracy or trust.

This post walks through how we designed a progressive, robust meal recognition system using image similarity search. We begin with explicit user classification during onboarding and transition to automated meal detection once enough visual data is collected. The result is a system that feels personalized, low‑effort, and increasingly intelligent over time.

Problem

Traditional image classification assumes a fixed, global label space: kibble A, kibble B, wet food, and so on. That assumption breaks down immediately in real homes.

Key challenges we faced:

- Highly personalized meals: Each household defines its own meal types

- Small data per user: Only a handful of images per meal initially

- Visual variance: Lighting, camera angle, bowl placement, food mixing

- Cold start: No training data on day one

A global classifier would either overfit badly or require unrealistic amounts of labeled data. What we needed was a system that could learn per user, adapt continuously, and improve without constant retraining.

Approach

We designed the system around a simple principle:

Let the user teach the system first - then let the system take over.

Instead of predicting meal labels immediately, EverBowl follows a two‑phase approach:

- Onboarding Phase (Human‑in‑the‑Loop)

Users manually classify meals from a list they create. - Learning Phase (Similarity‑Based Automation)

The system uses image embeddings and similarity search to auto‑detect meals.

This avoids premature automation while ensuring that every prediction is grounded in real, user‑validated data.

Process

Step 1: Meal Definition During Onboarding

When a user sets up EverBowl, the app asks them to define meals they commonly feed:

- “Morning Kibble”

- “Chicken + Rice”

- “Evening Wet Food”

These labels are user‑specific, not global categories. At this stage, there is no computer vision involved - only intent capture.

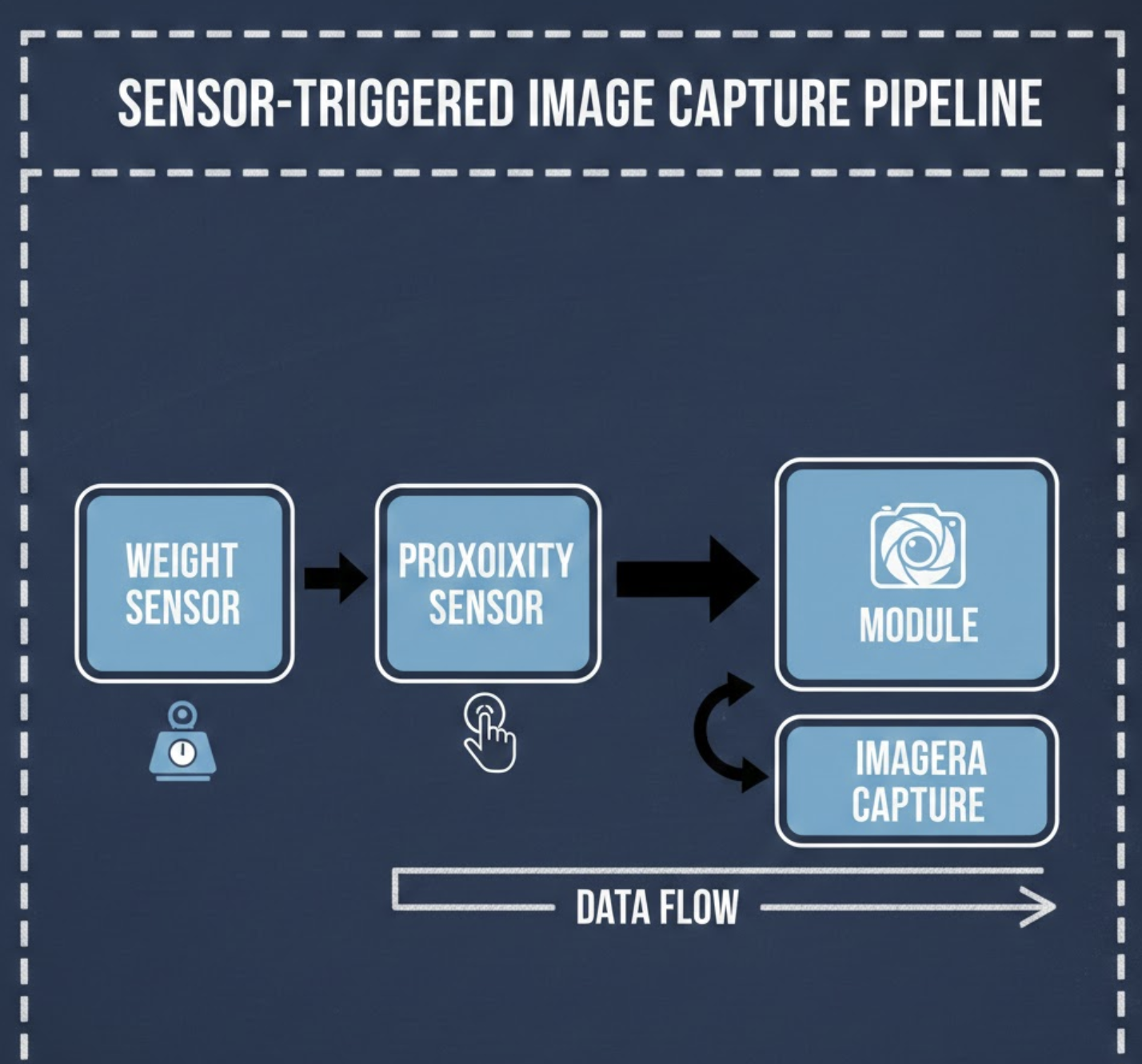

Step 2: Triggering Meal Capture

Every feeding event is detected using the weight sensor:

- Bowl weight increases → food added

- Proximity confirms dog presence

- RGB image is captured at peak stability

This ensures images are captured only when food is present, reducing noise and unnecessary storage.

Step 3: User‑Assisted Classification

For the first few weeks, the app prompts the user:

“We detected a meal. Which one did you serve?”

The selected meal label is stored alongside:

- Captured image

- Timestamp

- Bowl weight delta

Over time, this builds a small but high‑quality dataset per meal, per household.

Step 4: Image Embedding Generation

Instead of training a classifier, we convert each image into a fixed‑length embedding vector using a lightweight vision model.

Key properties we care about:

- Similar meals cluster together

- Robust to lighting and angle changes

- Small footprint for edge or near‑edge inference

Each meal label becomes a set of embeddings, not a single reference image.

Step 5: Similarity Search at Inference Time

When a new meal is detected:

- Generate an embedding for the new image

- Compare it against stored embeddings for that user

- Retrieve the top‑K nearest neighbors

- Aggregate similarity scores per meal label

If confidence crosses a threshold, the system auto‑labels the meal. Otherwise, it falls back to asking the user - preserving accuracy and trust.

Why Similarity Search Is the Right Abstraction

Similarity search is not just a convenient choice - it is a robust design decision aligned with personalization and real‑world behavior.

Personalized Label Spaces by Default

Meals are user‑defined concepts, not universal classes. Similarity search naturally supports this:

- Each user maintains their own embedding space

- No global ontology or label harmonization

- Adding a new meal does not disturb existing ones

Classifiers, by contrast, assume shared labels and fixed decision boundaries - a brittle assumption in personalized settings.

Few‑Shot Learning Without Retraining

During onboarding, we may only have 3–5 images per meal. Training or fine‑tuning classifiers at this scale is unstable.

With similarity search:

- Every labeled image is immediately usable

- Performance improves monotonically with data

- No retraining cycles or deployment delays

The system responds to user behavior in real time, not model release schedules.

Robustness to Visual Drift and Mixing

Meals evolve. Brands change. Ingredients get mixed. Lighting varies across days and seasons.

Embedding‑based similarity handles this gracefully:

- New variants naturally expand clusters

- Partial similarity still retrieves the correct meal

- Mixed meals land near their dominant components

Instead of forcing hard decisions, the system reasons in degrees of similarity, which better matches how food actually appears in bowls.

Graceful Failure Modes

A critical advantage of similarity search is that it knows when it is uncertain.

If no stored embeddings are sufficiently close:

- The system defers to the user

- No incorrect label is silently logged

- The correction becomes new training data

Mistakes improve the system rather than eroding trust.

Interpretability Builds Trust

When EverBowl predicts a meal, we can explain it:

“This looks similar to the last 3 times you served Chicken + Rice.”

Nearest‑neighbor explanations are intuitive and transparent - something softmax‑based classifiers struggle to provide.

Lower Operational Complexity

From an engineering standpoint, similarity search simplifies production ML:

- No per‑user model training

- No model version explosion

- No scheduled retraining pipelines

The system grows by adding data, not rebuilding models.

Progressive Automation by Design

Similarity search enables a smooth transition:

- Manual → assisted → mostly automatic

- Confidence‑based thresholds control automation

- Users remain in control during early learning

This gradual progression is difficult to achieve with traditional classifiers.

Results

After sufficient data collection:

- Users stop seeing meal classification prompts

- Meal detection becomes automatic and consistent

- Occasional corrections further refine the embedding space

Observed outcomes:

- Faster meal logging

- Higher user trust due to explainability

- Reduced cognitive load during feeding routines

The system improves silently in the background - without explicit training cycles.

Learnings

Key insights from building this system:

- Start with user intent, not automation

- Few high‑quality examples beat large noisy datasets

- Similarity search reduces ML and ops complexity

- Progressive automation builds long‑term trust

Most importantly, this approach respects how real people feed real dogs - messy, personal, and constantly evolving.