Leveraging OpenSearch for Production RAG

Ask any pet parent about their anxious dog, and they'll give you a novel. Ask an AI assistant the same question without proper context, and you'll get generic advice that could apply to any animal, anywhere.

Here's what we learned building Hoomanely's AI assistant: the quality of an AI's response is directly proportional to the relevance of the context it receives. A pet owner asking "Why is my dog panting heavily?" needs different answers depending on whether their dog just finished a run, is elderly with a heart condition, or ate something unusual. The LLM can reason brilliantly—but only with the right information in front of it.

This is where Retrieval-Augmented Generation (RAG) becomes less of a trendy acronym and more of a production necessity. For Hoomanely—a platform helping pet parents make informed decisions about their companions' health—getting context right isn't just about better answers. It's about trust, accuracy, and sometimes, the difference between catching a health issue early or missing it entirely.

The RAG Implementation Landscape

The promise of RAG is elegant: instead of hoping your LLM memorised everything during training, you retrieve relevant information at query time and inject it into the prompt. Simple in theory. Complex in production.

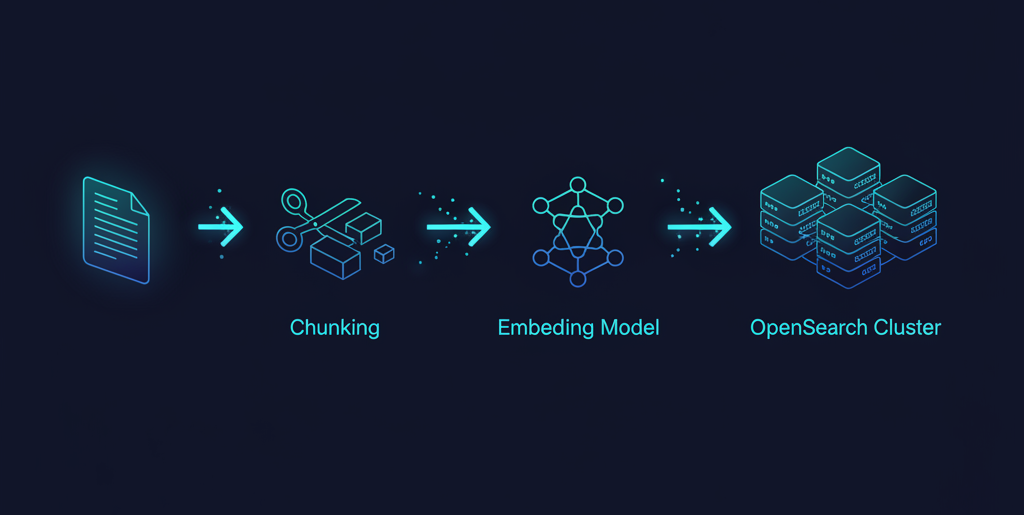

The naive approach involves chunking your documents, embedding them, storing vectors in a database, and retrieving the top-k most similar chunks when a query comes in. This works for demos and impresses stakeholders. It falls apart when users ask "What should I feed my senior dog with kidney disease?" and your system returns chunks about pet nutrition because "feed" and "diet" triggered semantic similarity.

We explored three architectural patterns before settling on our current approach:

Dense retrieval only: Pure vector similarity using embeddings. Fast, semantic, but prone to missing exact matches. A user searching for "FLUTD" (Feline Lower Urinary Tract Disease) might get results about general urinary health instead of the specific condition.

Hybrid search: Combining keyword matching with vector similarity. Better precision, especially for medical terms and specific conditions. When someone types "antifreeze poisoning," you want exact terminology matches, not semantically similar "toxic substance" content.

Reranking pipelines: Retrieve a larger candidate set (say, 50 chunks) using hybrid search, then use a cross-encoder model to rerank based on actual relevance to the query. More computationally expensive, but significantly better at understanding nuanced queries.

Each approach trades off latency, accuracy, and infrastructure complexity. For a production pet care system handling thousands of daily queries about everything from behavioural issues to emergency symptoms, we needed something that could scale horizontally, support hybrid search natively, and integrate cleanly with our existing AWS infrastructure.

Why OpenSearch Became Our Vector Store of Choice

OpenSearch entered our pipeline as the orchestrator of our retrieval layer, and it's proven to be one of the better architectural decisions we've made.

The indexing foundation: We maintain a corpus of a significantly large number of chunks spanning veterinary guides, research papers, breed-specific health information, etc. Each chunk is about 512 tokens—small enough for focused retrieval, large enough to maintain coherent context.

Here's what the indexing architecture looks like practically: documents arrive, get chunked with a small token overlap to preserve context boundaries, embedded via batch processing, and pushed to OpenSearch indices.

The retrieval mechanics: When a query like "my 12-year-old golden retriever is limping on his front left leg" comes in, here's what happens under the hood:

- Query processing: We generate the embeddings for the question asked.

- Index routing: Query gets routed to medical and senior-care indices based on intent classification

- Hybrid search execution: OpenSearch runs k-NN vector searches, combining scores using a weighted formula.

- Metadata filtering: Results get filtered for relevance to dogs, senior age range, and musculoskeletal issues

- Top-k selection: We retrieve the top k chunks.

The retrieved chunks then get formatted into a structured prompt template. This goes to our LLM along with the original query, conversation history and pet context.

The Competitive Landscape

Being honest about alternatives: we evaluated Pinecone, Weaviate, Qdrant, and Milvus before committing to OpenSearch.

Pinecone offers the smoothest developer experience—true serverless vector search with minimal configuration. We ran a two-week proof-of-concept and loved the simplicity. The dealbreaker: cost at scale and vendor lock-in. For our query volume, we were looking at $800-1,200/month, and metadata filtering felt like an afterthought bolted onto a pure vector database.

Weaviate impressed us with its GraphQL API and built-in vectorization modules. Solid hybrid search, good performance. The learning curve was steep, and operating it ourselves meant another complex service to maintain. We're a small team; operational overhead matters.

Qdrant and Milvus both excel at pure vector performance—if you need million-vector-per-second throughput, these are serious contenders. But we needed the hybrid search capabilities more than raw vector speed, and both felt like bringing a Formula 1 car to a cross-country road trip.

OpenSearch won on pragmatics: it's not the fastest pure vector search (Milvus wins there), not the easiest to start with (Pinecone), but it offered the best balance of hybrid search quality, operational maturity, AWS ecosystem integration, and cost efficiency. When you're running a production system with real users and real stakes, "good enough with low operational overhead" beats "theoretically optimal but operationally complex."

What We Learned About Context in Production

Building this system reinforced a few hard truths about RAG in production environments:

Chunking strategy matters more than embeddings model choice: We obsessed over which embedding model to use (tried OpenAI's, Cohere's, several open-source options). Switching models gave us marginal gains. Rethinking how we chunk documents—respecting logical boundaries, maintaining sufficient context, optimising chunk size for our use case—improved retrieval quality significantly.

Metadata is your secret weapon: Structured filters (pet type, age range, severity level) cut through semantic ambiguity. A vector might think "excited" and "anxious" are similar; metadata filters know the user said "dog" not "cat."

Operational simplicity compounds: Every additional service is another potential failure point, another monitoring dashboard, another on-call burden. OpenSearch being part of AWS's managed services meant one less thing to worry about at 3 AM.

Key Takeaways

For teams building similar systems:

- Invest heavily in metadata structure and chunk quality before optimising embeddings

- Choose infrastructure that matches your team's operational capacity, not just the theoretical best performance

- Measure retrieval precision separately from overall response quality; they're different problems

- Cost scales with query volume faster than you expect; understand the economics early

For teams evaluating OpenSearch specifically:

- Best fit if you need hybrid search, already use AWS, and value operational maturity over cutting-edge features

- Not the right choice if you need pure vector performance, want managed services with zero configuration, or require real-time updates at massive scale

- Seriously underrated for production RAG systems where reliability and debuggability matter more than impressive benchmarks

The real lesson: RAG systems succeed or fail on the quality of retrieval, and retrieval quality depends on understanding your domain deeply enough to structure both data and queries appropriately. The vector database is infrastructure. The intelligence is in how you use it.