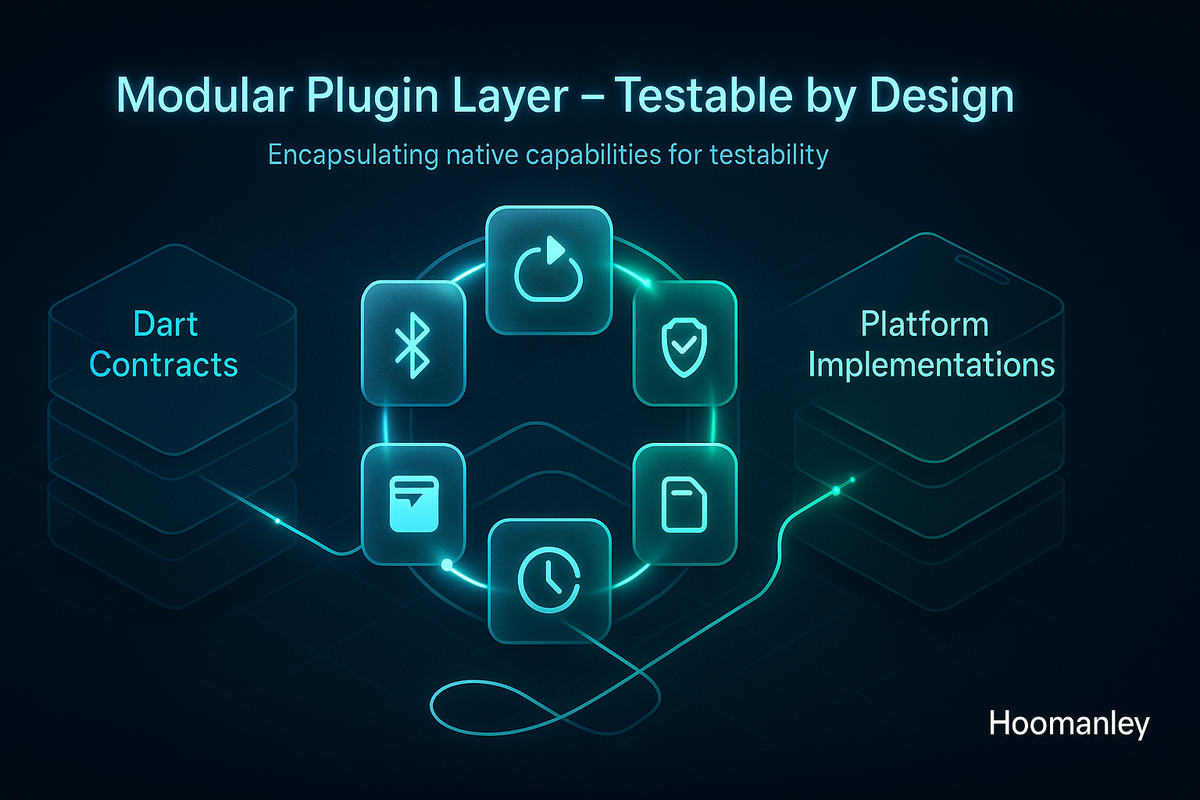

Modular Plugin Layer, Testable by Design

Cross-platform apps move fast—until native features start leaking into product code. BLE streaming, notifications, file access, analytics: all must behave consistently across iOS and Android without tangling UI logic with platform quirks or slowing tests to a crawl. Our current setup uses direct native bridges from a view-model into platform entry points for analytics and BLE. That choice shipped value quickly and kept incident response nimble—but it also makes isolation, swapping, and automation harder than they should be.

This article lays out a realistic blueprint to evolve that foundation into a modular, testable plugin layer without a big-bang rewrite. We’ll keep delivery speed, carve crisp seams, and layer in observability and tests—step by step. At Hoomanely, the guiding principle is simple: product code should depend on capabilities, not operating systems.

Problem

Today’s trade-offs (effective, but limiting):

- Direct bridges in feature logic. Native calls for analytics and BLE streaming live close to a view-model and platform entry points, not in dedicated packages. This is fast to ship and debug, but platform details bleed upward and swapping is difficult.

- DI that stops short of the platform. A service locator wires high-level services, yet platform bridges remain concrete; inversion behind clear interfaces is minimal. That constrains test doubles and runtime swaps.

- Sparse automation. One integration test and one unit test exist—enough for smoke checks, not enough for regression confidence.

- Log-first observability. Readable logs are helpful, but there are no structured metrics or SLIs to quantify latency and success rates.

- Versioning via app releases. Native behavior changes ship only with the whole app; independent rollbacks are not possible.

Where this pinches: platform quirks creep upward, analytics and BLE handshakes are harder to mock, and adding reconnect or background behaviors risks spreading OS-specific assumptions across features.

Approach

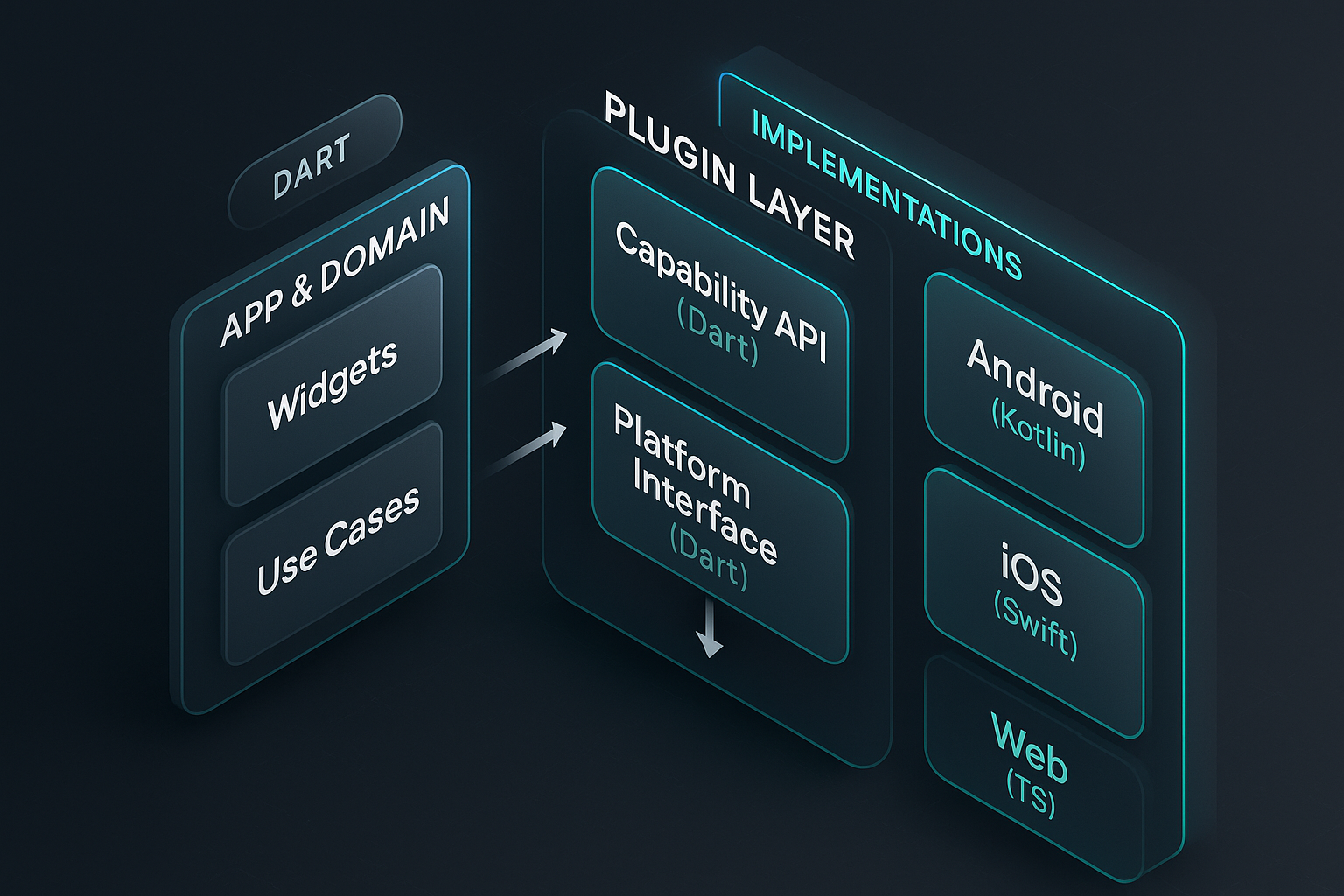

Goal: a federated, modular plugin layer—delivered incrementally.

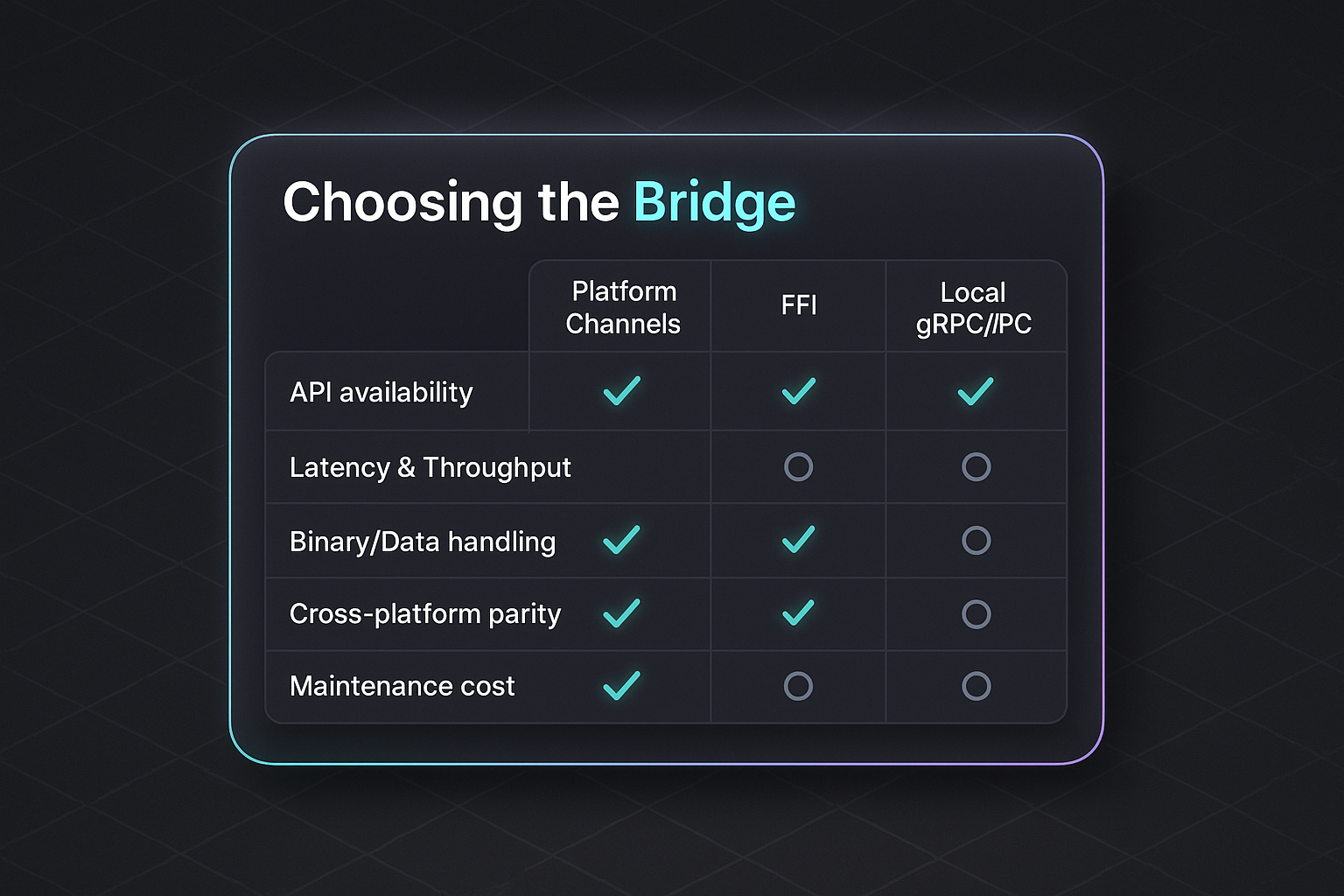

- Contracts first, channels later. Introduce small capability contracts (e.g., “analytics sink,” “BLE session,” “notifier”). Hide bridge details behind adapters that implement those contracts.

- Keep DI, formalize the seam. Continue using the locator, but register interfaces instead of concrete types. Current adapters can keep calling the bridges under the hood.

- Observability at the boundary. Emit structured, low-cardinality metrics at capability entry/exit; keep human-readable logs for forensics.

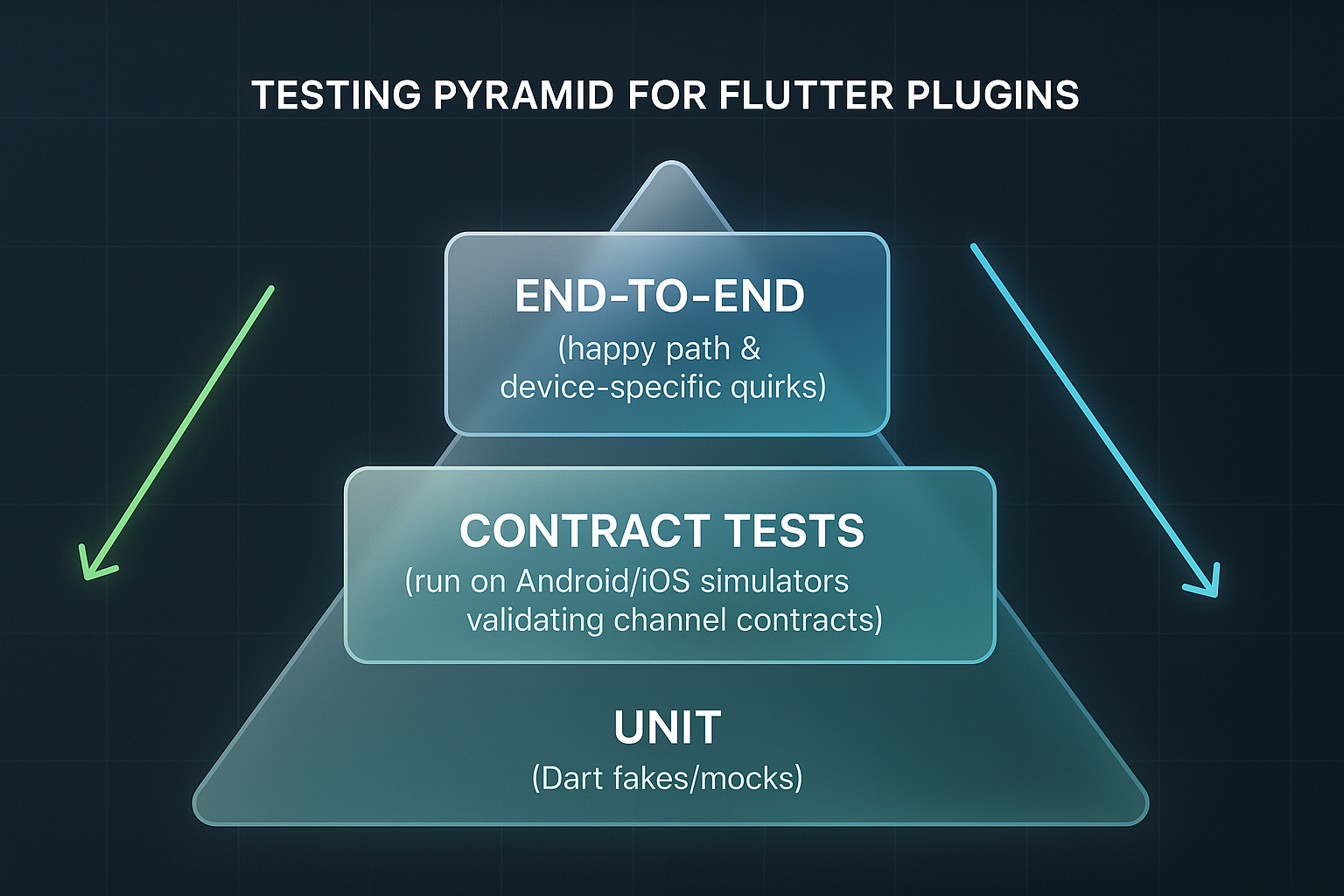

- Layered testing:

  - Unit tests with fakes (fast, pure Dart).

  - Contract tests per platform (behavior parity).

  - A tiny, deterministic E2E set (confidence without flakiness).

- Versionable boundaries (later). When contracts stabilize, split them into platform-interface and per-OS implementations, following semantic versioning.
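The contract-first bullets above can be made concrete with a small sketch, written in TypeScript for brevity (in the app the same shape would be a Dart abstract class registered in the service locator). All names here (`AnalyticsSink`, `NativeBridge`, `BridgeAnalyticsSink`, `FakeAnalyticsSink`, `Locator`) are hypothetical, and the bridge is assumed to expose a single `invoke(method, args)` entry point. Product code asks the locator for the interface; only the adapter knows the method name and argument layout.

```typescript
// Capability contract: the only thing product code depends on (hypothetical name).
interface AnalyticsSink {
  track(event: string, props?: Record<string, string>): Promise<void>;
}

// Assumed shape of the existing native bridge: one method-call entry point.
interface NativeBridge {
  invoke(method: string, args: Record<string, unknown>): Promise<unknown>;
}

// Adapter: the only place that knows the bridge method name and argument layout.
class BridgeAnalyticsSink implements AnalyticsSink {
  private readonly bridge: NativeBridge;

  constructor(bridge: NativeBridge) {
    this.bridge = bridge;
  }

  async track(event: string, props: Record<string, string> = {}): Promise<void> {
    await this.bridge.invoke("analytics.track", { name: event, props });
  }
}

// In-memory fake for unit tests: records events instead of crossing the bridge.
class FakeAnalyticsSink implements AnalyticsSink {
  readonly events: string[] = [];

  async track(event: string): Promise<void> {
    this.events.push(event);
  }
}

// Minimal locator keyed by one token per interface, not per concrete class.
class Locator {
  private readonly services = new Map<symbol, unknown>();

  register<T>(token: symbol, service: T): void {
    this.services.set(token, service);
  }

  get<T>(token: symbol): T {
    if (!this.services.has(token)) {
      throw new Error(`No registration for ${token.toString()}`);
    }
    return this.services.get(token) as T;
  }
}

const ANALYTICS = Symbol("AnalyticsSink");

// Production wiring: the adapter keeps calling the existing bridge underneath.
// `stubBridge` stands in for the real platform bridge in this sketch.
const stubBridge: NativeBridge = { invoke: async () => null };
const appLocator = new Locator();
appLocator.register<AnalyticsSink>(ANALYTICS, new BridgeAnalyticsSink(stubBridge));
```

Swapping the fake in for tests is a one-line change to the registration, which is exactly the seam the plan is after.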

This plan preserves today’s velocity while creating the seams needed for tomorrow’s modularity.

Process

- BLE streaming handshake and monitoring. A single orchestrator coordinates connect → discover → subscribe, with timers for idle detection and backoff on transient errors. Centralization reduced regressions and made incident triage sane.

- Notification permissions. A view-model checks status, triggers the OS prompt via a bridge, and records outcomes for UX follow-ups.

- Post-frame initialization. Early capability checks (notification channels, adapter state watchers, analytics boot) run after the first frame, preventing startup jank.

- Analytics events. An app-level sink forwards events through a native bridge with lightweight retries and error reporting.
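The handshake orchestrator described above could look roughly like the TypeScript sketch below: one object runs connect → discover → subscribe in order and, as a re-entrancy guard, hands a second caller the in-flight handshake rather than starting an overlapping one. `BleTransport`, `HandshakeOrchestrator`, and the state names are hypothetical; idle timers and backoff are omitted to keep the guard visible.

```typescript
type HandshakeState =
  | "idle" | "connecting" | "discovering" | "subscribing" | "streaming" | "failed";

// Hypothetical low-level BLE steps, normally implemented by the BLE adapter.
interface BleTransport {
  connect(deviceId: string): Promise<void>;
  discover(deviceId: string): Promise<string[]>; // returns service UUIDs
  subscribe(deviceId: string, serviceId: string): Promise<void>;
}

class HandshakeOrchestrator {
  private state: HandshakeState = "idle";
  private inFlight: Promise<void> | null = null;
  private readonly transport: BleTransport;
  private readonly serviceId: string;

  constructor(transport: BleTransport, serviceId: string) {
    this.transport = transport;
    this.serviceId = serviceId;
  }

  get current(): HandshakeState {
    return this.state;
  }

  // Re-entrancy guard: a second caller joins the in-flight handshake
  // instead of starting an overlapping connect → discover → subscribe run.
  start(deviceId: string): Promise<void> {
    if (this.inFlight) return this.inFlight;
    const run = this.run(deviceId);
    this.inFlight = run;
    const release = () => { this.inFlight = null; };
    run.then(release, release); // clear the guard on success or failure
    return run;
  }

  private async run(deviceId: string): Promise<void> {
    try {
      this.state = "connecting";
      await this.transport.connect(deviceId);
      this.state = "discovering";
      const services = await this.transport.discover(deviceId);
      if (!services.includes(this.serviceId)) {
        throw new Error(`service ${this.serviceId} not found on ${deviceId}`);
      }
      this.state = "subscribing";
      await this.transport.subscribe(deviceId, this.serviceId);
      this.state = "streaming";
    } catch (err) {
      this.state = "failed"; // callers decide whether to back off and retry
      throw err;
    }
  }
}
```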

One quiet improvement is already in place: thin adapters around the bridges unify call sites today and create the seam for later inversion.

What we tightened without rewrites

- Guarded transitions. The handshake is fenced to prevent re-entrancy and race conditions during state transitions.

- One place for timers. Connection monitoring cadence is standardized to avoid drift between features.

- Locator-registered services. Cross-feature access routes through the locator, removing scattered bridge calls.

- Capability contracts (incremental). Introduce minimal interfaces for analytics, BLE session, and notifications, while adapters continue to use current bridges underneath.

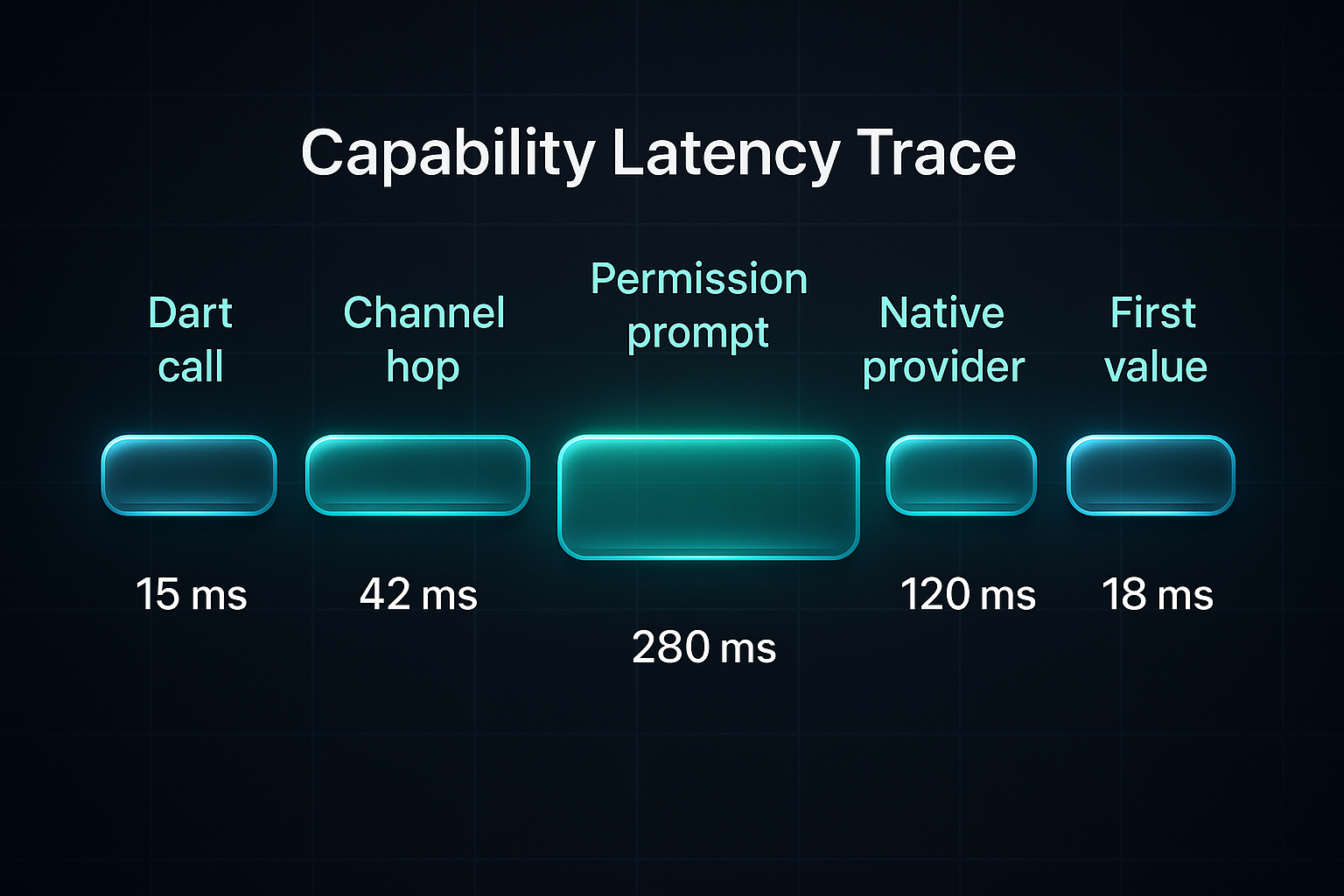

- Structured telemetry at boundaries. Add counters and timers such as `handshake.start/success/error`, `event.enqueue.duration`, and `permission.prompt.result` (names illustrative).

- Connection monitor refinement. Consolidate backoff and health checks into a single helper to keep reconnect logic consistent across flows.
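A minimal way to add boundary telemetry is a wrapper that every capability call passes through. This TypeScript sketch emits `<op>.start`, then `<op>.success` or `<op>.error`, plus an `<op>.duration` timing tagged with the outcome. `MetricSink`, `instrument`, and the tag keys are assumptions rather than an existing API; the clock is injectable so tests stay deterministic.

```typescript
// Low-cardinality tags only: a few known keys with small, bounded value sets.
type Tags = Readonly<{ os: "ios" | "android"; appVersion: string; flag?: string }>;
type Outcome = "success" | "error";

// Hypothetical metrics port; a real one would forward to the metrics backend.
interface MetricSink {
  count(metric: string, tags: Tags): void;
  timing(metric: string, ms: number, tags: Tags & { outcome: Outcome }): void;
}

// Wraps one capability call with start/success/error counters and a duration.
async function instrument<T>(
  sink: MetricSink,
  op: string,
  tags: Tags,
  call: () => Promise<T>,
  now: () => number = Date.now,
): Promise<T> {
  const startedAt = now();
  sink.count(`${op}.start`, tags);
  let result: T;
  try {
    result = await call();
  } catch (err) {
    sink.count(`${op}.error`, tags);
    sink.timing(`${op}.duration`, now() - startedAt, { ...tags, outcome: "error" });
    throw err; // telemetry never swallows the failure
  }
  // Success metrics sit outside the try so a sink failure is not counted as a call error.
  sink.count(`${op}.success`, tags);
  sink.timing(`${op}.duration`, now() - startedAt, { ...tags, outcome: "success" });
  return result;
}
```

Because tags are a closed type with a few keys, per-user or per-device identifiers cannot slip in and inflate dashboard cardinality.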

Suggestions

- Move platform details fully behind adapters. Callers use capability contracts; channel names, arguments, and parsing live in adapters only.

- Contract tests per platform. A shared Dart test suite validates timeouts, error mapping, and stream semantics on both iOS and Android.

- Small, reliable E2E suite. Two or three seeded flows—e.g., “pair + stream,” “capture + upload,” “analytics on cold start”—run deterministically.

- SLIs & dashboards. Track P95 time-to-first-value, reconnect success within N attempts, and analytics enqueue latency and error rate, with conservative, actionable alerts.

- Versionable packages (when stable). Split contracts and implementations; feature flags for safe, fast rollouts and reversions.
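A shared contract suite can be written once against the capability interface and run against each implementation. The TypeScript sketch below assumes a hypothetical `BleSession` contract and checks one rule: `read` rejects with a shared `SessionTimeout` error when nothing arrives. In practice the same suite would be pointed at the fake and at the iOS and Android adapters; the leaky implementation shows the kind of error-mapping drift the suite is meant to catch.

```typescript
// Hypothetical capability contract shared by the fake and both platform adapters.
interface BleSession {
  read(timeoutMs: number): Promise<Uint8Array>;
}

// The error every implementation must map "no data in time" to.
class SessionTimeout extends Error {
  constructor(ms: number) {
    super(`no data within ${ms} ms`);
    this.name = "SessionTimeout";
  }
}

interface ContractCheck {
  description: string;
  // `makeSilent` builds a session whose device sends nothing (an assumption of this sketch).
  run(makeSilent: () => BleSession): Promise<void>;
}

// One shared suite: each check pins down behavior both platforms must agree on.
const bleSessionContract: ContractCheck[] = [
  {
    description: "read rejects with SessionTimeout when no data arrives",
    async run(makeSilent) {
      try {
        await makeSilent().read(10);
      } catch (err) {
        if (err instanceof Error && err.name === "SessionTimeout") return;
        throw new Error(`expected SessionTimeout, got ${String(err)}`);
      }
      throw new Error("expected read to reject");
    },
  },
];

// Runs the whole suite against one implementation; returns failing check descriptions.
async function runContract(label: string, makeSilent: () => BleSession): Promise<string[]> {
  const failures: string[] = [];
  for (const check of bleSessionContract) {
    try {
      await check.run(makeSilent);
    } catch {
      failures.push(`${label}: ${check.description}`);
    }
  }
  return failures;
}

// Compliant fake: never receives data and maps the wait to SessionTimeout.
class SilentFakeSession implements BleSession {
  read(timeoutMs: number): Promise<Uint8Array> {
    return new Promise<Uint8Array>((_, reject) =>
      setTimeout(() => reject(new SessionTimeout(timeoutMs)), timeoutMs));
  }
}

// Non-compliant example: leaks a raw platform error instead of the shared type.
class LeakyErrorSession implements BleSession {
  async read(): Promise<Uint8Array> {
    throw new Error("raw platform error");
  }
}
```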

Results

- Speed without chaos. Direct bridges enabled fast delivery of BLE streaming and analytics; central orchestration and standardized timers contained complexity.

- Cleaner seams. Thin adapters reduced duplicate call sites and paved an easy path to inversion behind interfaces.

- Smoother startup. Post-frame initialization kept the first render responsive while still preparing observers and capability state.

- Better incident posture. Consistent logs around handshake order, permission flows, and key timing points shortened triage.

Compounding benefit: the team increasingly thinks in capabilities—not OS APIs. That mindset makes future extraction safe and predictable.

Observability Improvements

- Current: clear, human-readable logs at key steps on both sides of the bridge.

- Improvements:

  - Structured events with durations and outcome enums at capability boundaries.

  - Low-cardinality tags (OS, app version, feature flag) for stable dashboards.

  - SLIs with conservative alerts: P95 time-to-ready, reconnect success within N attempts, analytics enqueue latency/error rate.
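Two of the SLIs named above can be computed from raw samples as follows. This is a TypeScript sketch under our own assumptions: P95 uses the nearest-rank method (no interpolation), and a reconnect "episode" is assumed to record its attempt count and whether the link recovered. In production a metrics backend would usually compute these over a time window.

```typescript
// Nearest-rank percentile: smallest sample with at least p% of samples at or below it.
function percentile(samples: readonly number[], p: number): number {
  if (samples.length === 0) throw new Error("no samples");
  if (!(p > 0 && p <= 100)) throw new RangeError("p must be in (0, 100]");
  const sorted = samples.slice().sort((a, b) => a - b);
  const rank = Math.ceil((p * sorted.length) / 100); // 1-based rank
  return sorted[rank - 1];
}

// One reconnect episode: attempts made and whether the link came back.
interface ReconnectEpisode {
  attempts: number;
  recovered: boolean;
}

// SLI: share of episodes that recovered within `maxAttempts` attempts.
function reconnectSuccessWithin(episodes: readonly ReconnectEpisode[], maxAttempts: number): number {
  if (episodes.length === 0) throw new Error("no episodes");
  const ok = episodes.filter((e) => e.recovered && e.attempts <= maxAttempts).length;
  return ok / episodes.length;
}
```

With only ten samples, the nearest-rank P95 is simply the largest value, so the indicator is only meaningful over windows with enough traffic.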

Testing

Broaden unit tests with fakes for capability contracts; add contract tests that run on both platforms; keep E2E small, seeded, and deterministic.
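As an example of the unit layer, the permission journey (status → rationale → prompt → follow-up) can be written against a notification contract and driven entirely by an in-memory fake, with no device and no OS dialog. `Notifier`, `NotifierStatus`, `requestNotifications`, and the status values are hypothetical names in this TypeScript sketch; in particular, `permanentlyDenied` is assumed to mean the OS will no longer show the prompt.

```typescript
type NotifierStatus = "granted" | "denied" | "permanentlyDenied" | "notDetermined";

// Hypothetical notification capability contract.
interface Notifier {
  status(): Promise<NotifierStatus>;
  prompt(): Promise<NotifierStatus>; // shows the OS permission dialog
}

type PermissionOutcome = "alreadyGranted" | "granted" | "declinedRationale" | "denied" | "openSettings";

// status → rationale → prompt → follow-up; the returned outcome is what gets recorded.
async function requestNotifications(
  notifier: Notifier,
  showRationale: () => Promise<boolean>, // true if the user chose to continue
): Promise<PermissionOutcome> {
  const current = await notifier.status();
  if (current === "granted") return "alreadyGranted";
  if (current === "permanentlyDenied") return "openSettings"; // assumed: no prompt possible
  if (!(await showRationale())) return "declinedRationale";
  const answer = await notifier.prompt();
  if (answer === "granted") return "granted";
  return answer === "permanentlyDenied" ? "openSettings" : "denied";
}

// In-memory fake: scripted initial status and prompt answer, plus a prompt counter.
class FakeNotifier implements Notifier {
  prompts = 0;
  private current: NotifierStatus;
  private readonly answer: NotifierStatus;

  constructor(initial: NotifierStatus, answer: NotifierStatus) {
    this.current = initial;
    this.answer = answer;
  }

  async status(): Promise<NotifierStatus> {
    return this.current;
  }

  async prompt(): Promise<NotifierStatus> {
    this.prompts += 1;
    this.current = this.answer;
    return this.answer;
  }
}
```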

Versioning & Release Strategy

Interfaces and platform implementations become separately versioned packages. Contract changes are deliberate; implementations ship fixes independently; feature flags at call-sites enable staged rollouts and quick reverts.
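Call-site flags for staged rollouts can be sketched as stable bucketing: hash a flag key plus user ID into one of 100 buckets and compare against the rollout percentage, with an `enabled` switch for instant reverts. The TypeScript below uses 32-bit FNV-1a as the hash, which is our choice rather than anything the source specifies; `RolloutFlag`, `selectHandshake`, the flag key, and the two strategy objects are hypothetical stand-ins.

```typescript
// Hypothetical contract with two interchangeable implementations (real steps elided).
interface HandshakeStrategy {
  readonly id: string;
}

const legacyHandshake: HandshakeStrategy = { id: "legacy" };
const fastHandshake: HandshakeStrategy = { id: "fast-reconnect" };

interface RolloutFlag {
  enabled: boolean; // kill switch: false reverts everyone immediately
  percent: number;  // share of users in the rollout, 0 to 100
}

// 32-bit FNV-1a over UTF-16 code units; stable across launches and devices.
function fnv1a(input: string): number {
  let hash = 0x811c9dc5;
  for (let i = 0; i < input.length; i++) {
    hash ^= input.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193);
  }
  return hash >>> 0;
}

// Same user + same flag key always lands in the same bucket (0 to 99).
function inRollout(flagKey: string, userId: string, flag: RolloutFlag): boolean {
  if (!flag.enabled) return false;
  return fnv1a(`${flagKey}:${userId}`) % 100 < flag.percent;
}

// The call site picks an implementation; the UI never sees which one.
function selectHandshake(flags: Readonly<Record<string, RolloutFlag>>, userId: string): HandshakeStrategy {
  const key = "ble.fastHandshake";
  const flag = flags[key];
  return flag !== undefined && inRollout(key, userId, flag) ? fastHandshake : legacyHandshake;
}
```

Because bucketing is deterministic, raising the percentage only adds users; nobody flips back and forth between strategies across launches.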

Real Scenarios

- Streaming handshake coordination: connect → discover → subscribe, guarded against overlap and tuned with backoff.

- Connection health: heartbeats, idle detection, and retry cadence from a single source of truth.

- Permission UX: status → rationale → prompt → follow-up actions, with outcomes recorded for UX improvements.

- Startup hygiene: essential observers and validation deferred until after the first frame to keep the app responsive.
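For connection health, one helper can own both the retry cadence and idle detection so that every flow reads the same numbers. This TypeScript sketch uses capped exponential backoff with full jitter (a uniform random delay between zero and the capped exponential ceiling) and compares the time since the last packet against an idle threshold. `ConnectionHealth` and every configuration value are illustrative assumptions, not the app's tuned settings; the clock and random source are injectable for deterministic tests.

```typescript
interface HealthConfig {
  baseDelayMs: number;   // backoff ceiling for the first retry
  maxDelayMs: number;    // cap on any single retry delay
  maxAttempts: number;   // retries allowed before giving up
  idleTimeoutMs: number; // silence at least this long counts as idle
}

// Single source of truth for retry cadence and idle detection.
class ConnectionHealth {
  private attempts = 0;
  private lastDataAt: number;
  private readonly config: HealthConfig;
  private readonly now: () => number;
  private readonly random: () => number;

  constructor(config: HealthConfig, now: () => number = Date.now, random: () => number = Math.random) {
    this.config = config;
    this.now = now;
    this.random = random;
    this.lastDataAt = now();
  }

  // Call on every packet or notification; a healthy link also resets the retry budget.
  recordData(): void {
    this.lastDataAt = this.now();
    this.attempts = 0;
  }

  isIdle(): boolean {
    return this.now() - this.lastDataAt >= this.config.idleTimeoutMs;
  }

  // Capped exponential backoff with full jitter; null means stop retrying.
  nextRetryDelayMs(): number | null {
    if (this.attempts >= this.config.maxAttempts) return null;
    const ceiling = Math.min(this.config.maxDelayMs, this.config.baseDelayMs * Math.pow(2, this.attempts));
    this.attempts += 1;
    return Math.floor(this.random() * ceiling);
  }
}
```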

Notes to remember

- Draw the boundary first. Introduce capability contracts before moving any code; adapters can keep calling existing bridges under the hood while the app talks only to interfaces.

- Centralize lifecycles. Keep handshake, timers, and reconnect logic in one orchestrator; guard transitions to prevent re-entrancy and race conditions.

- Normalize async semantics. Futures for one-shots; Streams for continuous data; always include timeouts and cancellation paths.

- Instrument the seams. Turn key boundary steps into measurable events (start/success/error + duration). Low-cardinality tags beat verbose logs when you need dashboards.

- Prefer small, reliable tests over many flaky ones. Expand unit tests with fakes, add shared contract tests per platform, and cap E2E at a few deterministic journeys.

- Keep DI simple but meaningful. Register interfaces in the locator now; swap implementations later without touching the UI.

- Feature-flag risky paths. Gate new handshake strategies or codecs so you can roll forward and back without UI changes.

- Delay package splits until stable. Extract federated packages only when the contracts stop churning; then adopt semantic versioning and independent release trains.

- Document error taxonomy. Distinguish user-denied, timeout, transient I/O, and unknown; route each to clear UX or retry behavior.

- Protect the first frame. Defer heavy capability checks post-frame; hydrate quietly and surface actionable state changes to the UI when ready.
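Two of these notes fit together: a timeout wrapper produces one category of the error taxonomy, and a classifier maps everything else to a single route. The four categories come from the text above; `withTimeout`, `classify`, `ROUTES`, and the raw error codes are assumptions for this TypeScript sketch.

```typescript
// The four categories named in the error-taxonomy note.
type ErrorKind = "userDenied" | "timeout" | "transientIo" | "unknown";

class CapabilityTimeout extends Error {
  constructor(ms: number) {
    super(`capability call timed out after ${ms} ms`);
    this.name = "CapabilityTimeout";
  }
}

// Stops waiting after `ms` and always clears the timer. It does not cancel the
// underlying native operation; that needs its own cancellation path in the adapter.
function withTimeout<T>(call: Promise<T>, ms: number): Promise<T> {
  let timer: ReturnType<typeof setTimeout> | undefined;
  const expired = new Promise<never>((_, reject) => {
    timer = setTimeout(() => reject(new CapabilityTimeout(ms)), ms);
  });
  return Promise.race([call, expired]).finally(() => clearTimeout(timer));
}

// Assumed raw shape: adapters attach a short string `code` to bridge errors.
const TRANSIENT_CODES = new Set(["disconnected", "busy", "io"]);

function classify(err: unknown): ErrorKind {
  if (err instanceof Error && err.name === "CapabilityTimeout") return "timeout";
  const code = typeof err === "object" && err !== null ? (err as { code?: unknown }).code : undefined;
  if (code === "permission_denied") return "userDenied";
  if (typeof code === "string" && TRANSIENT_CODES.has(code)) return "transientIo";
  return "unknown";
}

// Each kind gets exactly one route: retry quietly, explain to the user, or report.
const ROUTES: Record<ErrorKind, "retry" | "explain" | "report"> = {
  userDenied: "explain",
  timeout: "retry",
  transientIo: "retry",
  unknown: "report",
};
```

Keeping the mapping in one table makes the taxonomy reviewable: teaching the app a new raw code is a data change, not a new branch in every feature.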

At Hoomanely, we build pet-health experiences spanning wearables, smart feeders, and a mobile companion app. A modular plugin layer lets us evolve BLE, notifications, and analytics independently, test them reliably, and keep the customer experience predictable as native stacks evolve.

Takeaways

You don’t need a rewrite to gain modularity—draw the seams now, extract when stable. Make product code depend on capabilities, not OS details. Test in layers: fast unit, parity-keeping contract, and a tiny E2E set. Promote logs to structured metrics and SLIs so you can see latency and success clearly. When interfaces settle, version the boundaries so fixes ship faster and roll back safely—without touching the UI.