Privacy by Design: How Edge Segmentation Protects Pet Parents

The Unspoken Tension of In-Home AI

When we talk about smart home devices, the conversation inevitably drifts toward a single, uncomfortable word: privacy.

At Hoomanely, we build technology that lives in the spaces of a pet parent's home. Our flagship product, Everbowl, is a smart feeding system that tracks a dog's hydration, feeding patterns, and ambient conditions, all of which are critical data for preventive pet healthcare. But here's the catch: to understand when and how a dog interacts with its bowl, we need a camera.

The moment you place a camera inside someone's home, you inherit a responsibility that no amount of disclaimers can offset. Even if your system only captures images when a dog is detected, what else is in the frame? A family photo on the counter. A prescription bottle. A calendar with appointments. The background of any pet image is, by definition, a window into someone's life.

This post explores how we approached this challenge—not by avoiding cameras altogether, but by rethinking what a camera sees.

The Problem: Useful Data vs. Private Data

Everbowl uses proximity-triggered imaging. When a dog approaches the bowl, a low-power sensor activates the camera to capture a snapshot.

The challenge is that the signal we need (the dog) occupies a small fraction of the image. The rest—the kitchen counter, the hallway, the living room—is noise from a machine learning perspective but highly sensitive from a privacy perspective.

Traditional approaches to this problem fall into two common categories:

| Approach | Limitation |
|---|---|
| Crop to bounding box | Still includes background context around the dog |
| Blur entire image | Destroys the signal we need to analyze |

Neither felt right. We needed a solution that preserved the dog with full fidelity while treating everything else as if it didn't exist.

The Approach: Segment, Isolate, Obscure

The core insight was simple: if we can precisely separate the dog from the background at the pixel level, we can apply different policies to each region.

This is the domain of instance segmentation—a computer vision technique that goes beyond bounding boxes to produce a detailed mask of each object. Instead of saying "there's a dog somewhere in this rectangle," segmentation says "these exact pixels belong to the dog."

Our pipeline works in two stages:

- Segmentation: A lightweight neural network runs on-device and produces a binary mask—every pixel is labeled as either "dog" or "not dog."

- Selective Processing: The dog region is preserved at full resolution. The background region is heavily blurred using a Gaussian kernel, rendering it unrecognizable while maintaining the overall scene structure.

The result is an image that looks like a professional portrait photo: a sharp subject against a soft, intentionally defocused background. Except in our case, the "artistic blur" is a privacy mechanism.
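The two stages can be sketched in a few lines. This is a minimal illustration rather than Everbowl's actual implementation: it assumes the segmentation model has already produced a boolean mask, and the names `obscure_background`, `dog_mask`, and `sigma` are hypothetical. The Gaussian blur is written out in plain NumPy to keep the example self-contained; a production pipeline would more likely call an optimized library routine.

```python
import numpy as np


def gaussian_kernel_1d(sigma: float) -> np.ndarray:
    """Normalized 1-D Gaussian kernel covering roughly +/- 3 sigma."""
    radius = max(1, int(round(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    kernel = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return kernel / kernel.sum()


def _blur_axis(img: np.ndarray, kernel: np.ndarray, axis: int) -> np.ndarray:
    """Convolve along one axis, replicating edge pixels so the size is kept."""
    radius = len(kernel) // 2
    pad = [(0, 0)] * img.ndim
    pad[axis] = (radius, radius)
    padded = np.pad(img, pad, mode="edge")
    return np.apply_along_axis(
        lambda v: np.convolve(v, kernel, mode="valid"), axis, padded
    )


def obscure_background(image: np.ndarray, dog_mask: np.ndarray,
                       sigma: float = 8.0) -> np.ndarray:
    """Keep pixels where dog_mask is True; Gaussian-blur everything else.

    image:    H x W x 3 uint8 array
    dog_mask: H x W boolean array (True = dog), assumed to come from the
              on-device segmentation model
    """
    img = image.astype(np.float64)
    kernel = gaussian_kernel_1d(sigma)
    # A Gaussian is separable: blur rows, then columns.
    blurred = _blur_axis(_blur_axis(img, kernel, 0), kernel, 1)
    keep = dog_mask.astype(bool)[..., None]  # broadcast over colour channels
    out = np.where(keep, img, blurred)
    return np.clip(np.rint(out), 0, 255).astype(np.uint8)
```

A larger `sigma` trades scene structure for stronger obscuring. Which value makes the background "unrecognizable" is a judgment call that would need to be validated on real captures.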

Why Segmentation, Not Detection?

A fair question: why not just crop tightly around the detected dog?

The answer lies in edge precision. Object detection produces rectangles. Rectangles include corners. Corners include background. Even a "tight" crop around a sitting dog will capture floor tiles, furniture legs, or nearby objects.

Segmentation, by contrast, follows the exact contour of the subject. Fur edges, ear tips, tail curves—the mask hugs the dog's silhouette with pixel-level accuracy. When we blur "everything outside the mask," we're blurring everything that isn't biologically part of the dog.

This distinction matters enormously at scale. Across thousands of daily captures, even small background leakage accumulates into a meaningful privacy exposure. Segmentation removes that leakage at the source, limited only by the accuracy of the mask.
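To make the rectangle-versus-contour argument concrete, the sketch below measures how much of a tight bounding-box crop is background. The function names are hypothetical and the mask is synthetic; it is only meant to illustrate the geometry, not to reproduce any measurement from our pipeline.

```python
import numpy as np


def tight_bbox(mask: np.ndarray) -> tuple[int, int, int, int]:
    """Return (top, bottom, left, right) of the smallest box containing the mask."""
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    if rows.size == 0:
        raise ValueError("mask contains no foreground pixels")
    return int(rows[0]), int(rows[-1]) + 1, int(cols[0]), int(cols[-1]) + 1


def background_fraction_in_crop(mask: np.ndarray) -> float:
    """Fraction of pixels inside the tight crop that are NOT part of the subject."""
    top, bottom, left, right = tight_bbox(mask)
    crop = mask[top:bottom, left:right]
    return float(1.0 - crop.mean())


# Synthetic example: a disk-shaped "subject" in a 64 x 64 frame.
yy, xx = np.mgrid[0:64, 0:64]
disk = (yy - 32) ** 2 + (xx - 32) ** 2 <= 20 ** 2
leak = background_fraction_in_crop(disk)
```

For an ideal circle inscribed in its bounding square, the background share of the crop is 1 − π/4, a little over a fifth. Real dog silhouettes are far less compact than a circle, so a tight crop would typically retain more background than that, whereas a mask-based approach retains only what the mask gets wrong.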

The Engineering Strategy: Giant Teachers, Tiny Students

Running segmentation on edge devices isn't free. The models that produce the best masks—large transformer-based architectures like Grounding DINO and Segment Anything (SAM)—require GPUs with gigabytes of VRAM. They're research-grade tools, not embedded software.

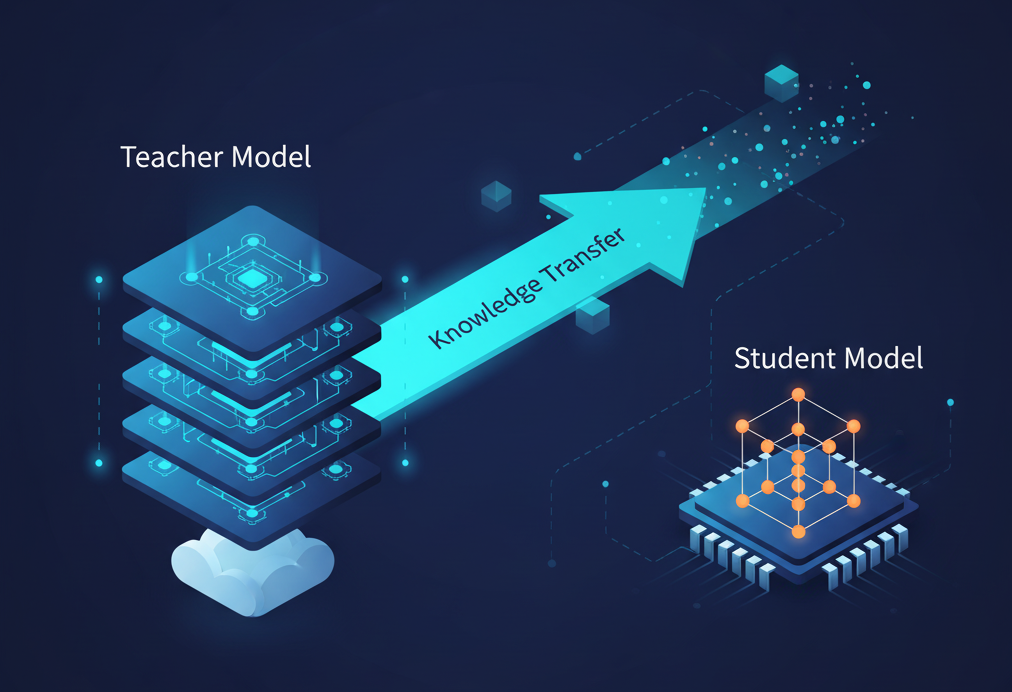

But here's the insight: you don't need a giant model at inference time if a giant model helped you train a small one.

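Our approach borrows from what is often called knowledge distillation, or teacher-student learning. Large "teacher" models label the data offline, and a compact "student" model is trained on those labels: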

- Offline Labeling with Transformers: We ran Grounding DINO (for open-vocabulary detection) combined with SAM (for precise mask generation) on thousands of unlabeled dog images. These large models, running on cloud GPUs, produced high-quality segmentation masks automatically, with no manual pixel-level annotation required.

- Dataset Curation: The auto-generated labels were reviewed and curated using a lightweight quality-control tool. Images with poor masks or edge cases were removed, ensuring the training data was clean.

- Edge Model Training: With a high-quality labeled dataset in hand, we trained a compact segmentation model, small enough to run on our target embedded hardware in real time. The edge model learned to mimic the outputs of the large transformers without needing their computational overhead.

- Post-processing Refinement: We applied morphological operations to clean up mask edges—filling small holes, smoothing contours, and ensuring the final mask looks natural rather than algorithmic.
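The post-processing step can be illustrated with two classic morphological operations: closing (dilate, then erode) fills small holes, and opening (erode, then dilate) removes isolated specks. The sketch below is an assumption about what such a cleanup might look like, using a fixed 3 x 3 structuring element and hypothetical function names. It is written in plain NumPy for self-containment; dedicated image-processing libraries provide equivalent, faster routines.

```python
import numpy as np


def _neighborhoods(mask: np.ndarray, pad_value: bool) -> np.ndarray:
    """Stack the nine 3 x 3-shifted views of the mask along a new first axis."""
    h, w = mask.shape
    padded = np.pad(mask, 1, mode="constant", constant_values=pad_value)
    return np.stack(
        [padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)]
    )


def dilate(mask: np.ndarray) -> np.ndarray:
    """A pixel becomes foreground if any pixel in its 3 x 3 neighborhood is."""
    return _neighborhoods(mask.astype(bool), False).any(axis=0)


def erode(mask: np.ndarray) -> np.ndarray:
    """A pixel stays foreground only if its whole 3 x 3 neighborhood is.

    Out-of-frame pixels count as foreground, so a subject touching the
    image border is not eaten away from that side.
    """
    return _neighborhoods(mask.astype(bool), True).all(axis=0)


def clean_mask(mask: np.ndarray) -> np.ndarray:
    """Closing to fill pinholes, then opening to drop isolated specks."""
    closed = erode(dilate(mask))
    return dilate(erode(closed))
```

With a 3 x 3 element this only repairs defects a pixel or two wide. Larger holes or rougher contours would call for a bigger structuring element or repeated passes, at the cost of rounding off fine detail such as ear tips.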

The result? A model that runs comfortably on constrained hardware while producing masks that, in subjective evaluations, are nearly indistinguishable from those generated by models 100x its size.

Measuring Success: Beyond Accuracy Metrics

How do you evaluate a privacy-preserving system? Traditional ML metrics—precision, recall, IoU—tell you how well the model segments the dog. But they don't tell you whether the privacy goal was achieved.

We developed a complementary evaluation framework:

- Segmentation quality (IoU > 0.5): Does the mask capture the dog accurately enough that the blurred output looks natural?

- Background obscurity: Can a human reviewer identify any specific object in the blurred region? We sampled processed images and asked reviewers to describe what they saw in the background. The target: "nothing identifiable."

- Behavioral signal preservation: Can our downstream analytics—feeding duration, posture detection—still operate on the blurred images? The answer needed to be yes.

Across our validation set, over 95% of predicted masks cleared the IoU > 0.5 threshold, and reviewers were unable to identify background objects in 98% of processed images. Critically, our behavioral models showed no degradation in performance on the processed images compared to the originals.
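For readers less familiar with the metric, IoU (intersection over union) is the number of pixels shared by the predicted and reference masks divided by the number of pixels covered by either. The sketch below shows how a pass rate at a threshold could be computed; the function names and the list-of-pairs input format are assumptions for illustration, not our evaluation harness.

```python
import numpy as np


def iou(pred: np.ndarray, truth: np.ndarray) -> float:
    """Intersection over union of two boolean masks of the same shape."""
    pred = pred.astype(bool)
    truth = truth.astype(bool)
    union = np.logical_or(pred, truth).sum()
    if union == 0:
        return 1.0  # both masks empty: treat as perfect agreement
    return float(np.logical_and(pred, truth).sum() / union)


def pass_rate(pairs, threshold: float = 0.5) -> float:
    """Share of (pred, truth) pairs whose IoU is strictly above the threshold."""
    scores = [iou(pred, truth) for pred, truth in pairs]
    return sum(score > threshold for score in scores) / len(scores)
```

Note that a pass rate says nothing about how far above the threshold each mask lands, which is one reason the human review of blurred backgrounds is a useful complement.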

The Bigger Picture: Privacy as a Product Feature

It's tempting to treat privacy engineering as a compliance checkbox—something you do because regulations require it. But that framing misses the point.

For Hoomanely, privacy preservation isn't a constraint on our technology. It's a feature of our product. When a pet parent sees that their Everbowl captures focused images of their dog with no identifiable background detail, they understand immediately: this device respects my home.

That understanding translates into trust. Trust translates into longer retention, more engaged users, and ultimately, better health outcomes for pets—because users who trust their devices share more data, more consistently.

Key Takeaways

- The privacy challenge in smart home devices isn't theoretical. Any camera in a home captures context that users didn't consent to share.

- Segmentation offers a surgical solution. By isolating subjects at the pixel level, you can apply different policies to foreground and background.

- Edge deployment is viable with the right trade-offs. Compact models, curated training data, and post-processing can achieve production-quality results on constrained hardware.

- Privacy engineering is product engineering. Users notice when you've designed with their comfort in mind—and they reward it.