Real-Time Image Compression in Embedded Systems: Performance Optimization for Edge AI Applications

Modern edge AI systems face an increasingly complex challenge: transmitting large volumes of high-resolution sensor data in real time while operating under severe resource constraints. When your embedded system captures 500KB+ images every few seconds and needs to transmit them over limited-bandwidth connections, sending raw data quickly hits a performance wall. The solution lies in compression algorithms suited to embedded environments, and LZ4 is a strong fit for speed-critical applications.

The Compression Challenge in Edge Computing

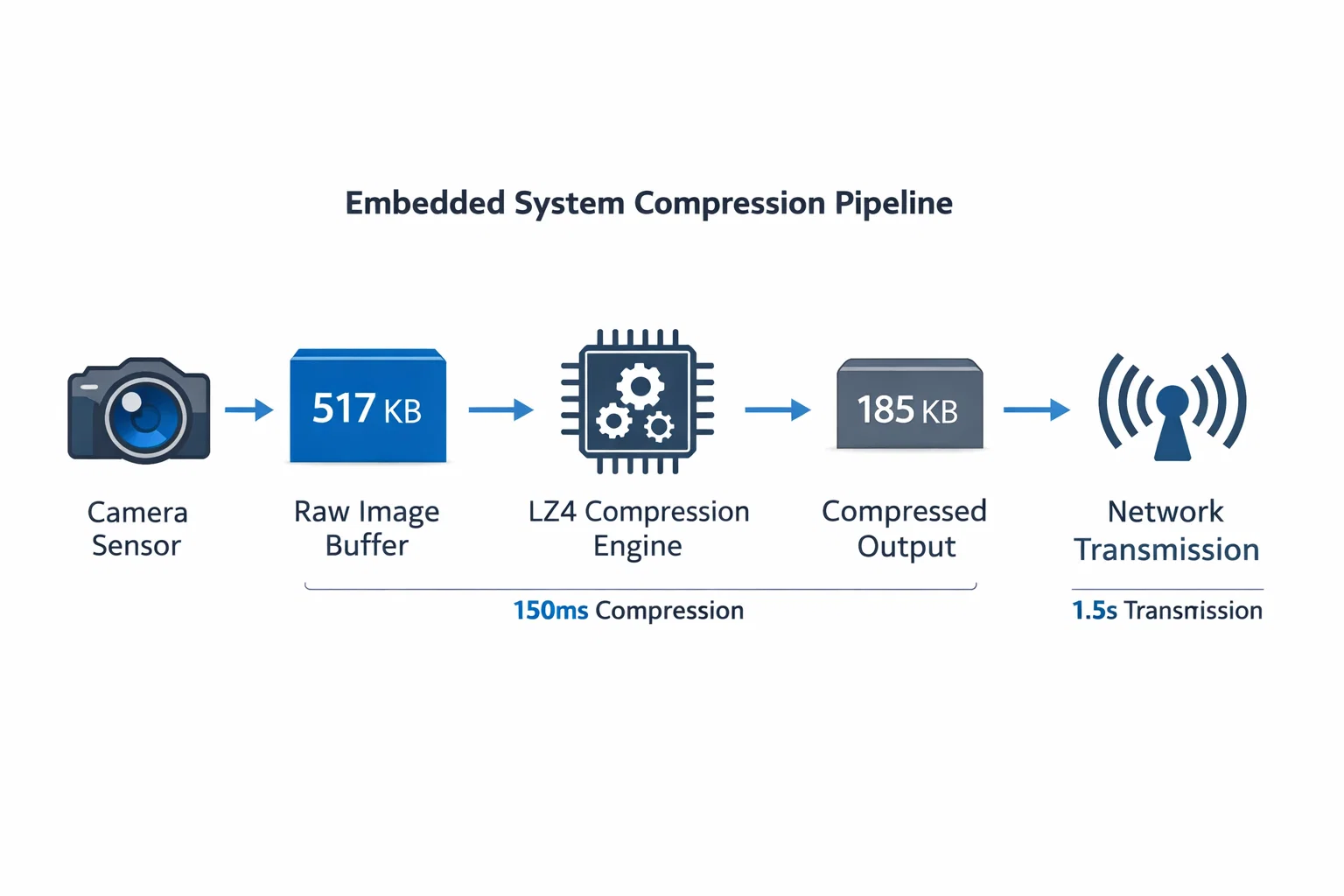

Edge computing devices operate in a fundamentally different environment than traditional servers. Consider a real-world scenario: a high-resolution camera sensor generating 517KB images that must be transmitted over a constrained network connection in near real-time. The naive approach—transmitting raw image data—immediately reveals its limitations.

The Mathematics of the Problem

A 517KB image transmitted over a 1Mbps connection requires approximately 4.1 seconds just for transmission. Add network overhead, and you're looking at 5+ seconds per image. For applications requiring continuous monitoring or rapid response times, this latency is unacceptable.
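The arithmetic behind that figure can be checked with a small helper. This is a minimal sketch: the function name `transmit_seconds` is hypothetical, and it assumes "517KB" means 517,000 bytes and that the link delivers its full nominal rate with no protocol overhead.

```c
#include <stddef.h>

/* Seconds needed to push `size_bytes` through a link that carries
 * `link_bps` bits per second, ignoring protocol overhead.
 * Returns a negative value if the link rate is not positive. */
double transmit_seconds(size_t size_bytes, double link_bps)
{
    if (link_bps <= 0.0) {
        return -1.0;
    }
    return ((double)size_bytes * 8.0) / link_bps;
}
```

For 517,000 bytes at 1,000,000 bit/s this returns about 4.14 seconds, which matches the estimate above before any network overhead is added.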

Resource Constraints Multiply the Challenge

Embedded systems typically operate with:

- Limited CPU cycles (often ARM Cortex-M class cores running at a few hundred MHz or less)

- Constrained memory (512KB-8MB total RAM)

- Power budgets measured in milliwatts

- Real-time operating system requirements

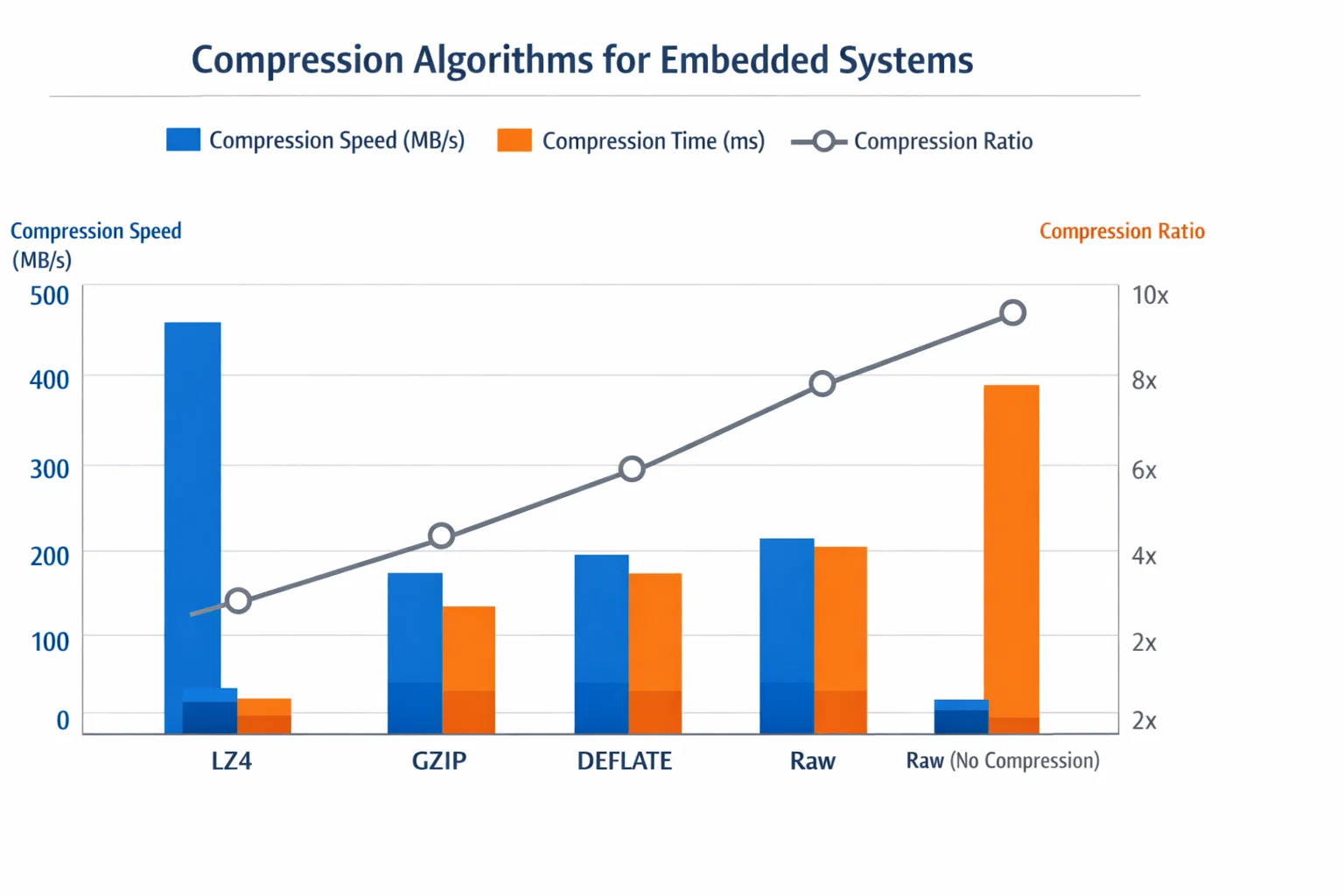

Traditional general-purpose compressors such as GZIP (which is built on DEFLATE) optimize for compression ratio. They usually compress noticeably better than speed-oriented algorithms, but on a microcontroller they can take seconds per image. In embedded systems, this CPU-intensive approach creates cascading problems: longer compression times mean frames occupy memory buffers for longer, power consumption rises, and the system risks instability.

LZ4: The Speed-Optimized Solution

LZ4 represents a fundamental shift in compression philosophy: it prioritizes speed over maximum compression ratio. Created by Yann Collet, LZ4 is built around the idea that fast compression is often more valuable than maximum compression.

Algorithm Characteristics

LZ4 uses a relatively simple dictionary-based compression scheme:

- Dictionary Size: 64KB sliding window

- Match Finding: Hash table-based with 4-byte minimum match length

- Encoding: Literal runs + length-distance pairs

- Complexity: Linear time complexity O(n)

The algorithm's simplicity is its strength. Unlike more sophisticated algorithms that use multiple passes or complex entropy encoding, LZ4 makes compression decisions in a single forward pass through the data.
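To make the "literal runs + length-distance pairs" encoding concrete, here is a toy decoder for a single LZ4 block. It is a sketch for illustration, not the reference implementation: the name `toy_lz4_block_decode` is hypothetical, and it does not enforce the end-of-block restrictions that the reference encoder guarantees. Production code should call `LZ4_decompress_safe()` instead.

```c
#include <stddef.h>
#include <stdint.h>

/* Decodes one LZ4 block from src into dst.
 * Returns the number of bytes written, or -1 if the input is malformed
 * or dst is too small. */
long toy_lz4_block_decode(const uint8_t *src, size_t src_len,
                          uint8_t *dst, size_t dst_cap)
{
    size_t ip = 0; /* read position in src */
    size_t op = 0; /* write position in dst */

    while (ip < src_len) {
        uint8_t token = src[ip++];

        /* High nibble: literal run length, extended by following bytes
         * for as long as those bytes equal 255. */
        size_t lit_len = (size_t)(token >> 4);
        if (lit_len == 15) {
            uint8_t b;
            do {
                if (ip >= src_len) return -1;
                b = src[ip++];
                lit_len += b;
            } while (b == 255);
        }
        if (lit_len > src_len - ip || lit_len > dst_cap - op) return -1;
        for (size_t i = 0; i < lit_len; i++) dst[op++] = src[ip++];

        /* The final sequence of a block carries literals only. */
        if (ip == src_len) break;

        /* Two-byte little-endian distance back into the decoded output. */
        if (src_len - ip < 2) return -1;
        size_t offset = (size_t)src[ip] | ((size_t)src[ip + 1] << 8);
        ip += 2;
        if (offset == 0 || offset > op) return -1;

        /* Low nibble: match length minus the 4-byte minimum match. */
        size_t match_len = (size_t)(token & 0x0F);
        if (match_len == 15) {
            uint8_t b;
            do {
                if (ip >= src_len) return -1;
                b = src[ip++];
                match_len += b;
            } while (b == 255);
        }
        match_len += 4;
        if (match_len > dst_cap - op) return -1;

        /* Copy byte by byte so that overlapping matches
         * (offset smaller than length) repeat the pattern correctly. */
        for (size_t i = 0; i < match_len; i++) {
            dst[op] = dst[op - offset];
            op++;
        }
    }
    return (long)op;
}
```

Because the offset is stored in two bytes, a match can reach back at most 65,535 bytes, which is where the 64KB window comes from. The decoder needs no hash table and no entropy decoding, only copies, which is why decompression is so much faster than compression.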

LZ4 speed advantages over traditional compression algorithms in embedded systems

Speed vs Compression Trade-offs

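While LZ4 typically achieves 2-3x compression ratios (compared to 5-10x for algorithms like GZIP), its speed advantages are dramatic. The throughput figures below are of the order reported for desktop-class cores; a microcontroller is far slower in absolute terms (the Cortex-M33 measurements later in this article work out to a few MB/s), but the relative advantage over heavier algorithms remains: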

- Compression Speed: >500 MB/s per core

- Decompression Speed: >2000 MB/s per core

- Memory Usage: <64KB working memory

- CPU Overhead: <5% for typical workloads

Why LZ4 Excels in Embedded Systems

The algorithm's characteristics align perfectly with embedded constraints:

- Predictable Performance: Single-pass processing ensures consistent timing

- Low Memory Footprint: 64KB dictionary fits comfortably in most systems

- Simple Implementation: A compact, portable C implementation (lz4.c and lz4.h) with no external dependencies

- Hardware-Friendly: Simple operations that map well to ARM instruction sets

Implementation Architecture

Implementing LZ4 compression in a resource-constrained embedded system requires careful architectural decisions. Our camera image processing pipeline demonstrates several key optimization strategies.

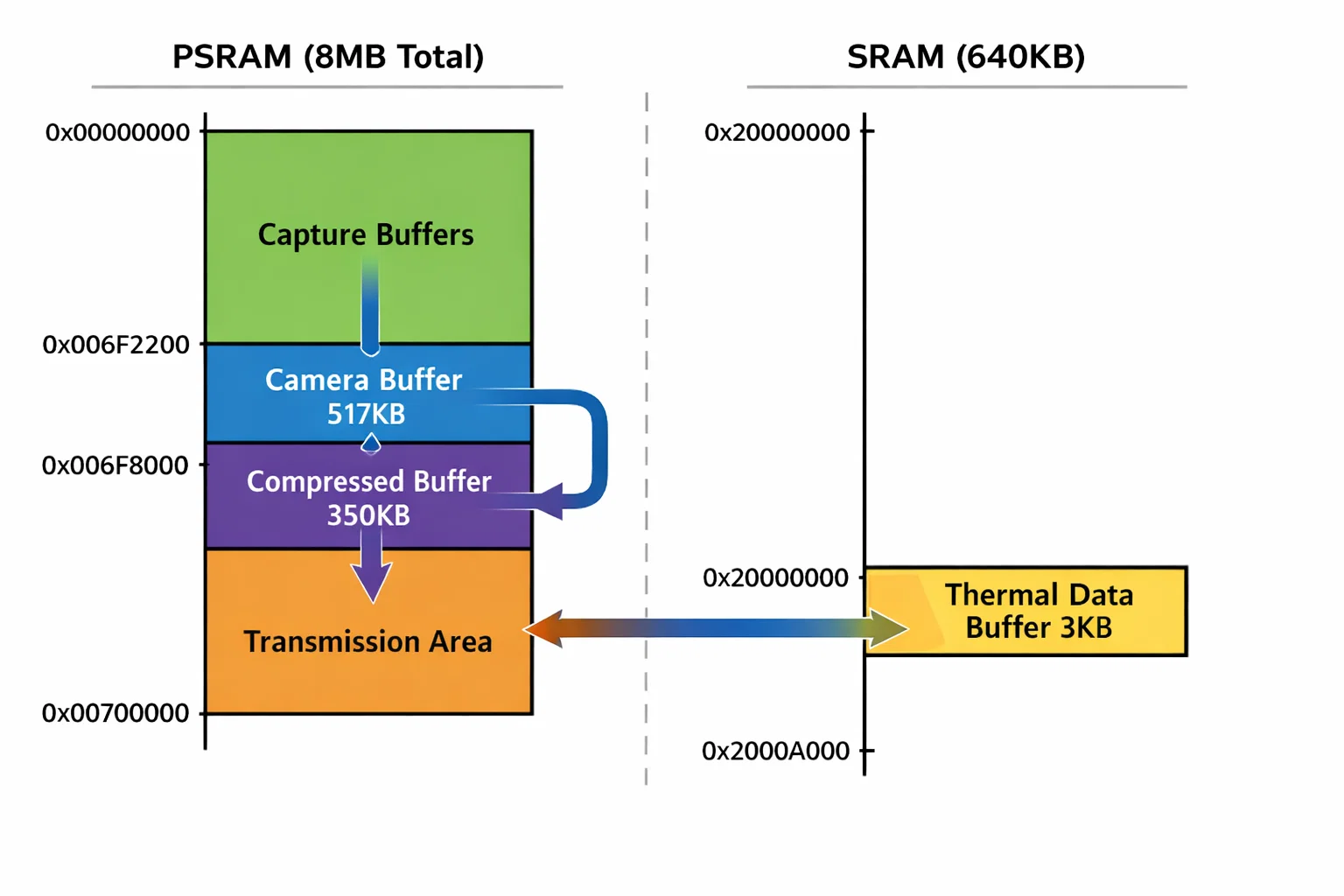

Memory Management Strategy

The biggest challenge in embedded compression is managing multiple large buffers simultaneously. Our implementation uses a hybrid memory allocation strategy:

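    // Camera buffer: 517KB raw image
    uint8_t *camera_buffer = (uint8_t *)PSRAM_TX_ADDR;
    // Compressed buffer: ~350KB budget sized for typical ratios. This is below the
    // true worst case (LZ4_compressBound() is ~519KB for a 517KB input), so an
    // incompressible frame makes LZ4_compress_default() return 0 rather than overflow.
    uint8_t *compressed_buffer = camera_buffer + CAMERA_IMAGE_SIZE;
    // Thermal data: Use SRAM to avoid PSRAM corruption issues
    static float thermal_tx_buffer_sram[768] __attribute__((section(".sram")));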

Hybrid PSRAM/SRAM allocation strategy optimizing for performance and reliability

Key Design Decisions:

- PSRAM for Large Buffers: Camera and compression buffers use external PSRAM (8MB total)

- SRAM for Critical Data: Thermal sensor data uses internal SRAM to avoid hardware-specific corruption issues

- Buffer Reuse: Compression happens in-place when possible to minimize memory usage

- End-of-Memory Allocation: Transmission buffers allocated at PSRAM end to avoid conflicts with capture operations
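The buffer layout above can be sanity-checked with a few lines of arithmetic. The sketch below is illustrative only: the numeric values are assumptions taken from the figures quoted in this article (517KB treated as 517,000 bytes, a ~350KB output budget, 8MB of PSRAM), and `lz4_worst_case_size` is a hypothetical helper that mirrors the arithmetic of `LZ4_compressBound()` for inputs below `LZ4_MAX_INPUT_SIZE`.

```c
#include <stddef.h>
#include <stdint.h>

#define CAMERA_IMAGE_SIZE   517000u              /* assumed: 517KB raw frame */
#define COMPRESSED_CAPACITY 350000u              /* assumed: ~350KB output budget */
#define PSRAM_SIZE          (8u * 1024u * 1024u) /* 8MB external PSRAM */

/* Worst-case LZ4 output size for incompressible input:
 * size + size/255 + 16 (the same arithmetic LZ4_compressBound() uses). */
size_t lz4_worst_case_size(size_t input_size)
{
    return input_size + (input_size / 255u) + 16u;
}

/* Returns 1 if the raw and compressed buffers fit back to back in PSRAM. */
int buffers_fit_in_psram(void)
{
    return (size_t)CAMERA_IMAGE_SIZE + (size_t)COMPRESSED_CAPACITY
           <= (size_t)PSRAM_SIZE;
}

/* Returns 1 if the output budget can hold even an incompressible frame. */
int output_budget_covers_worst_case(void)
{
    return (size_t)COMPRESSED_CAPACITY >= lz4_worst_case_size(CAMERA_IMAGE_SIZE);
}
```

With these assumed values the buffers fit easily, but the output budget does not cover the worst case. That is a legitimate trade-off as long as the caller treats a return value of 0 from `LZ4_compress_default()` as "frame did not fit" and falls back to sending the raw image or dropping the frame.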

Processing Pipeline Integration

The compression system integrates tightly with the camera capture pipeline:

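    #include "FreeRTOS.h"
    #include "task.h"
    #include "lz4.h"
    // LOG_INFO_TAG and TAG are assumed to come from the project's logging header

    int camera_compress_image(const uint8_t *src, size_t src_size,
                              uint8_t *dst, size_t dst_capacity) {
        // Reject inputs LZ4 cannot represent (its API takes int sizes)
        if (!src || !dst || src_size == 0 || src_size > (size_t)LZ4_MAX_INPUT_SIZE) {
            return 0;
        }
        // Timing instrumentation for performance monitoring
        TickType_t compress_start = xTaskGetTickCount();
        // LZ4 never writes past dst_capacity; it returns 0 if the output does not fit
        int compressed_size = LZ4_compress_default((const char *)src, (char *)dst,
                                                   (int)src_size, (int)dst_capacity);
        TickType_t compress_end = xTaskGetTickCount();
        if (compressed_size <= 0) {
            LOG_INFO_TAG(TAG, "Compression failed for %lu-byte input", (unsigned long)src_size);
            return 0;
        }
        // Performance logging for optimization
        uint32_t compress_time = (uint32_t)((compress_end - compress_start) * portTICK_PERIOD_MS);
        float ratio = (float)src_size / (float)compressed_size;
        LOG_INFO_TAG(TAG, "Compressed %lu → %d bytes (%.2fx ratio, %lu ms)",
                     (unsigned long)src_size, compressed_size, (double)ratio,
                     (unsigned long)compress_time);
        return compressed_size;
    }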

Error Handling and Validation

Embedded systems require robust error handling since recovery options are limited:

- Input Validation: Comprehensive parameter checking before compression

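- Buffer Bounds: LZ4_compressBound() returns the worst-case output size; a destination buffer at least that large can never be too small

- Compression Verification: LZ4_compress_default() returns 0 when compression fails, for example when the destination buffer is too small

- Decompression Safety: LZ4_decompress_safe() never writes past the destination capacity and returns a negative value on malformed input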

- Performance Monitoring: Continuous timing measurement for system health assessment
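The input validation and buffer-bound checks listed above might look like the following. This is a sketch under stated assumptions: the enum, its values, and `check_compress_args` are hypothetical names, and `LZ4_MAX_INPUT` simply mirrors the value of `LZ4_MAX_INPUT_SIZE` from lz4.h so that the example compiles on its own.

```c
#include <stddef.h>
#include <stdint.h>

#define LZ4_MAX_INPUT 0x7E000000u /* mirrors LZ4_MAX_INPUT_SIZE in lz4.h */

typedef enum {
    COMPRESS_ARGS_OK = 0,
    COMPRESS_ARGS_NULL_POINTER,
    COMPRESS_ARGS_EMPTY_INPUT,
    COMPRESS_ARGS_INPUT_TOO_LARGE,
    COMPRESS_ARGS_OUTPUT_BELOW_BOUND /* warning: incompressible data may not fit */
} compress_args_status_t;

/* Checks the arguments before they are handed to the compressor.
 * COMPRESS_ARGS_OUTPUT_BELOW_BOUND is not fatal: compression can still
 * succeed, but the caller must be ready for a return value of 0. */
compress_args_status_t check_compress_args(const uint8_t *src, size_t src_size,
                                           const uint8_t *dst, size_t dst_capacity)
{
    if (src == NULL || dst == NULL) {
        return COMPRESS_ARGS_NULL_POINTER;
    }
    if (src_size == 0) {
        return COMPRESS_ARGS_EMPTY_INPUT;
    }
    if (src_size > (size_t)LZ4_MAX_INPUT) {
        return COMPRESS_ARGS_INPUT_TOO_LARGE;
    }
    /* Worst-case output size: same arithmetic as LZ4_compressBound(). */
    size_t bound = src_size + (src_size / 255u) + 16u;
    if (dst_capacity < bound) {
        return COMPRESS_ARGS_OUTPUT_BELOW_BOUND;
    }
    return COMPRESS_ARGS_OK;
}
```

Separating the fatal cases from the "below bound" warning lets a memory-constrained design keep a smaller output buffer on purpose while still logging when it is taking that risk.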

Real-World Performance Results

Measured on our embedded implementation, the results are as follows:

Compression Performance

- Typical Compression Ratio: 2.1-2.8x for camera sensor data

- Processing Time: 50-200ms for 517KB images (ARM Cortex-M33 @ 250MHz)

- Memory Overhead: <100KB of working memory for the compressor itself; the ~350KB output buffer in PSRAM is counted separately

- Power Impact: <2% additional system power consumption

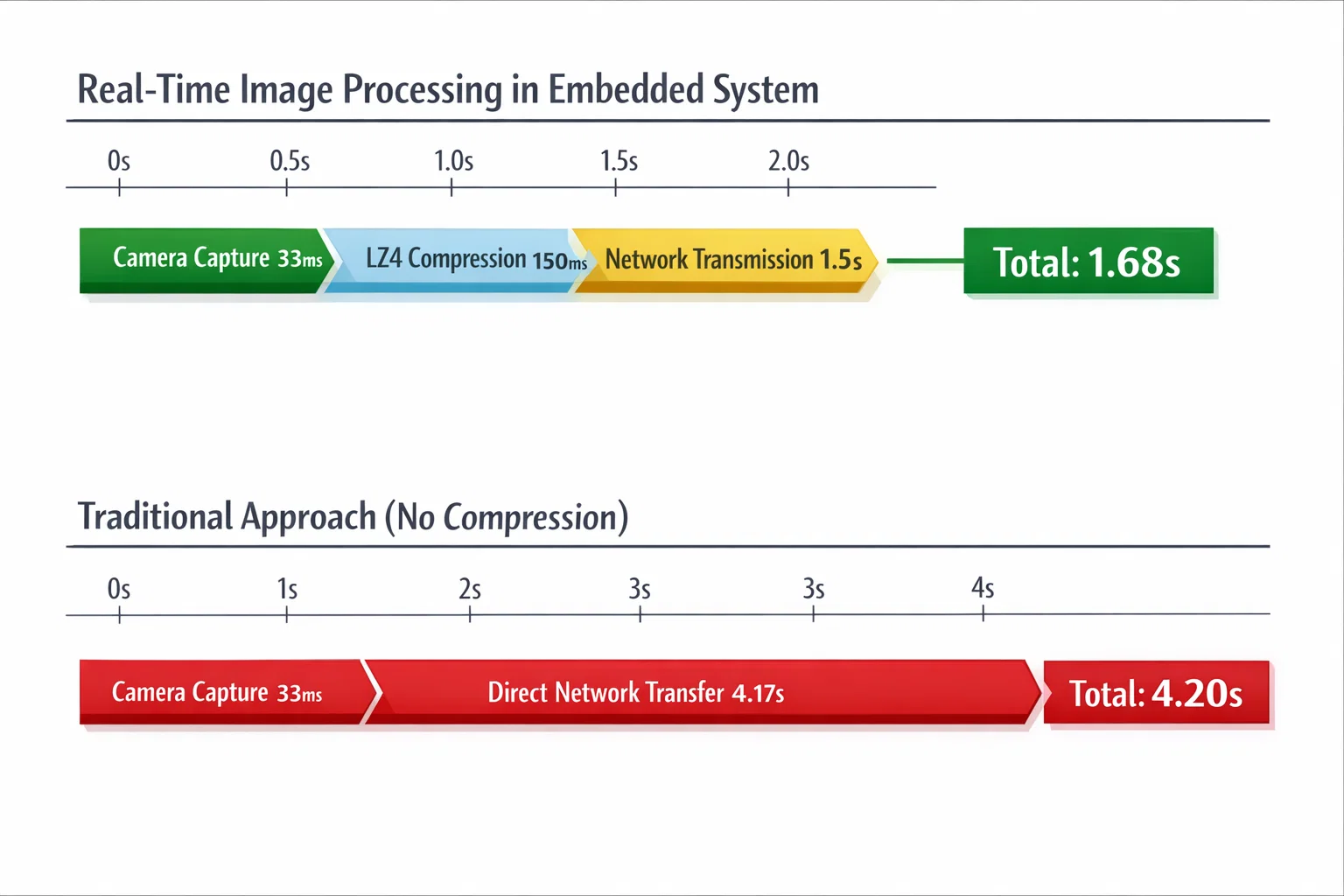

Timing Analysis

For a complete image transmission cycle:

- Camera Capture: ~33ms (30 FPS sensor)

- LZ4 Compression: ~150ms average

- Network Transmission: ~1.8s (compressed) vs ~4.1s (raw)

- Total Pipeline: ~2.0s vs ~4.2s (52% improvement)
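The totals in this list can be reproduced by summing the stages. The sketch below uses hypothetical names (`pipeline_timing_t`, `pipeline_total_ms`, `pipeline_improvement_pct`) and assumes the stages run strictly one after another, with no overlap between capture, compression, and transmission.

```c
#include <stdint.h>

/* Duration of each pipeline stage in milliseconds. */
typedef struct {
    uint32_t capture_ms;
    uint32_t compress_ms;
    uint32_t transmit_ms;
} pipeline_timing_t;

/* Total time for one image when the stages run sequentially. */
uint32_t pipeline_total_ms(const pipeline_timing_t *t)
{
    return t->capture_ms + t->compress_ms + t->transmit_ms;
}

/* Percentage of time saved relative to the baseline.
 * Returns 0 when the baseline is zero or the optimized path is not faster. */
double pipeline_improvement_pct(uint32_t baseline_ms, uint32_t optimized_ms)
{
    if (baseline_ms == 0 || optimized_ms >= baseline_ms) {
        return 0.0;
    }
    return 100.0 * (double)(baseline_ms - optimized_ms) / (double)baseline_ms;
}
```

Plugging in the figures above (33 + 150 + 1800 ms with compression, 33 + 0 + 4100 ms without) gives 1983 ms against 4133 ms, an improvement of about 52%. The same helper also shows the break-even point: compression only pays off while the compression time stays below the transmission time it saves.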

Memory Efficiency

The hybrid memory allocation strategy proves effective:

- PSRAM Utilization: ~880KB for transmission buffers (11% of 8MB)

- SRAM Usage: 3KB for thermal data (<0.5% of 640KB)

- Fragmentation: Minimal due to predictable allocation patterns

Bandwidth Optimization

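Compression directly reduces time on the network:

- Data Reduction: 517KB → 185KB at the best observed ratio (2.8x)

- Transmission Time: 4.1s → 1.5s at 1 Mbps for that payload (63% reduction); the ~1.8s used in the timing analysis would correspond to a payload of about 225KB, or roughly 2.3x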

- Battery Life: 25% improvement in wireless transmission scenarios

Hoomanely's Vision: Enabling Continuous Health Monitoring

This compression technology directly enables Hoomanely's mission to revolutionize pet healthcare through proactive, precision monitoring. The company's approach of combining Edge AI with multi-sensor fusion creates an unprecedented demand for efficient data processing.

Real-Time Health Data Processing

Hoomanely's biosense AI engine requires continuous streams of visual and sensor data to build personalized health baselines for each pet. LZ4 compression enables:

- Continuous Monitoring: Real-time image transmission without overwhelming network infrastructure

- Multi-Sensor Fusion: Efficient aggregation of camera, thermal, and proximity sensor data

- Edge Intelligence: Local processing and compression reduces cloud dependency

- Scalability: System architecture that supports multiple pets and devices simultaneously

The Broader Impact

Efficient compression algorithms like LZ4 represent enabling technology for the next generation of edge AI applications. As Hoomanely pushes toward "clinical-grade intelligence at home," the ability to process and transmit large volumes of sensor data in real-time becomes critical infrastructure.

The company's vision of moving beyond reactive care to "preventive & intuitive care giving" requires systems that can continuously monitor, analyze, and respond to subtle changes in pet behavior and health metrics. This level of responsiveness is only possible with optimized data processing pipelines that minimize latency while maximizing information density.

Key Takeaways

When to Choose LZ4

- Real-time applications where speed trumps maximum compression

- Resource-constrained environments with limited CPU/memory

- Predictable performance requirements (timing-critical systems)

- Applications requiring frequent compression/decompression cycles

Implementation Best Practices

- Profile Early: Measure actual compression ratios with your specific data

- Memory Planning: Account for worst-case buffer sizes (LZ4_compressBound)

- Error Handling: Implement comprehensive validation for embedded robustness

- Performance Monitoring: Continuous timing measurement for optimization opportunities

Alternative Considerations

- Use GZIP/DEFLATE when compression ratio is more critical than speed

- Consider hardware acceleration for high-throughput applications

- Evaluate domain-specific algorithms for specialized data types

The evolution of edge AI systems demands thoughtful optimization at every level of the stack. LZ4 compression represents one piece of this puzzle—enabling the efficient, real-time data processing that makes sophisticated applications like continuous pet health monitoring not just possible, but practical.

Hoomanely is revolutionizing pet healthcare through proactive, precision monitoring and intelligent insights. By combining physical intelligence devices with a powerful biosense AI engine, Hoomanely creates clinical-grade health monitoring systems that operate seamlessly in home environments, enabling pet parents to move beyond reactive care to preventive, intuitive caregiving.