Seeing Smarter: How ArUco Markers Inspired Our Fast Logo Detection

Intro: Vision That Starts with a Square

At Hoomanely, we’re building smart systems that see and understand pets - from their eyes and movements to the bowls they eat from. To make this happen, our camera pipeline needs to detect key visual cues with precision and speed - even on small edge devices like the Broadcom-based ARM7 MPU. One piece of technology that has inspired our visual detection stack is something deceptively simple: the ArUco marker.

These black-and-white squares are everywhere in robotics and AR systems, acting as visual anchors for machines to localize themselves in space. But what makes them so fast and reliable? And how did the same principles help us create a Hoomanely logo detection system that runs in real-time on embedded devices?

Let’s break it down.

1. What Are ArUco Markers?

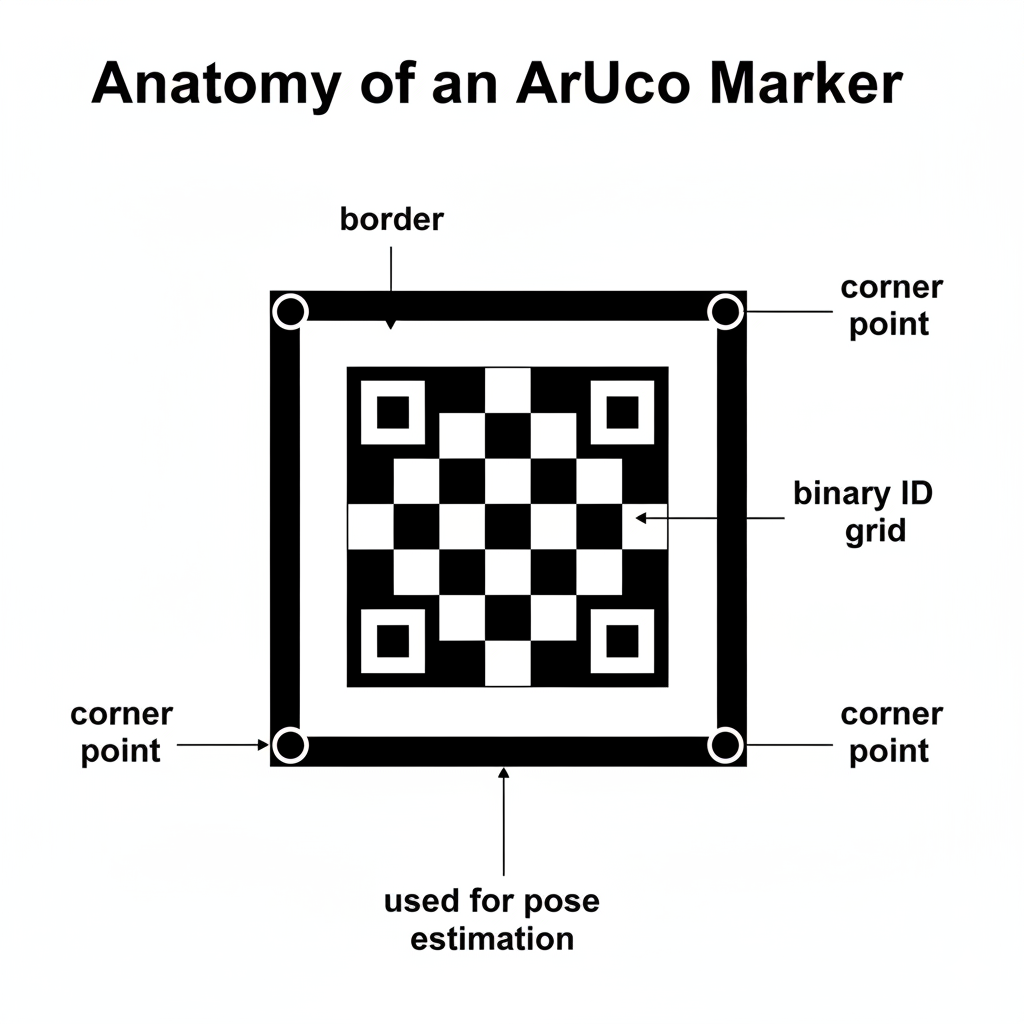

Imagine a QR code, but leaner and smarter. ArUco markers are binary square patterns designed to be easily detectable by computer vision systems.

Each marker encodes a small binary ID inside a thick black border. Unlike QR codes, they aren’t meant to store data - instead, they serve as unique identifiers for positioning and tracking.

They are used in:

- AR applications for overlaying virtual objects on physical surfaces.

- Robotics for localization and mapping.

- Drones for autonomous landing and alignment.

When you point a camera at an ArUco marker, the system instantly identifies which marker it is and where it lies in 3D space.

2. How Do ArUco Markers Work?

At its core, ArUco detection is a pattern-recognition pipeline optimized for speed and robustness.

Let’s walk through the process step-by-step:

Step 1: Grayscale Conversion

The input image is converted to grayscale, reducing computational load by focusing only on intensity.

Step 2: Adaptive Thresholding

This step converts the image into a binary map (black and white) using adaptive thresholds, making detection robust under changing lighting.
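To make Steps 1 and 2 concrete, here is a minimal NumPy sketch of grayscale conversion followed by a mean-based adaptive threshold. It is an illustration rather than the code OpenCV runs internally: the function names, the 5-pixel block size, and the offset `c` are assumptions chosen for the example.

```python
import numpy as np

def to_gray(rgb):
    """Collapse an (H, W, 3) RGB image to intensity using BT.601 luma weights."""
    weights = np.array([0.299, 0.587, 0.114])
    return rgb.astype(np.float64) @ weights

def adaptive_threshold(gray, block=5, c=2.0):
    """Mark a pixel white (1) when it is brighter than its local mean minus c.

    `block` is the (odd) side length of the neighbourhood; both `block` and
    `c` are illustrative defaults, not tuned values.
    """
    pad = block // 2
    padded = np.pad(gray, pad, mode="edge")
    # An integral image lets us read any block sum with four lookups.
    integral = np.pad(padded, ((1, 0), (1, 0))).cumsum(axis=0).cumsum(axis=1)
    h, w = gray.shape
    sums = (integral[block:block + h, block:block + w]
            - integral[:h, block:block + w]
            - integral[block:block + h, :w]
            + integral[:h, :w])
    local_mean = sums / (block * block)
    return (gray > local_mean - c).astype(np.uint8)
```

Because every pixel is compared with its own neighbourhood rather than one global cutoff, adding a constant brightness offset to the whole image leaves the binary map unchanged.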

Step 3: Contour Detection

Contours (closed curves around white regions) are extracted. The algorithm keeps only the contours that approximate convex quadrilaterals - these are the potential marker candidates - and discards everything else.
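The quadrilateral test can be sketched in a few lines of plain Python. This assumes each contour has already been simplified to a short list of corner points (in OpenCV that is typically done with polygon approximation); the function name and the `min_area` value are hypothetical.

```python
def cross(o, a, b):
    """Z-component of the cross product of vectors o->a and o->b."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

def is_marker_candidate(polygon, min_area=100.0):
    """Keep only convex four-sided polygons that are large enough to decode."""
    if len(polygon) != 4:
        return False
    # A polygon is convex when every corner turns in the same direction.
    turns = [cross(polygon[i], polygon[(i + 1) % 4], polygon[(i + 2) % 4])
             for i in range(4)]
    convex = all(t > 0 for t in turns) or all(t < 0 for t in turns)
    # Shoelace formula for the enclosed area.
    area = 0.5 * abs(sum(
        polygon[i][0] * polygon[(i + 1) % 4][1]
        - polygon[(i + 1) % 4][0] * polygon[i][1]
        for i in range(4)))
    return convex and area >= min_area
```

Cheap rejections like this are a large part of why the pipeline stays fast: most contours in a frame are thrown away before any pixel sampling happens.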

Step 4: Perspective Correction

Each candidate quadrilateral is perspective-corrected (using a homography computed from its four corners) and warped to a fixed-size square grid.

Step 5: Binary Code Extraction

The interior grid is sampled to decode the binary pattern. This bit matrix corresponds to a specific marker ID from a predefined dictionary.
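As a rough illustration of Step 5, the sketch below samples an already-warped binary marker into a bit matrix and looks it up under all four rotations. The two 4x4 patterns in `toy_dictionary` are made up for this example and are not entries from any real ArUco dictionary; the function names are likewise assumptions.

```python
import numpy as np

# Hypothetical 4x4 payloads, invented for illustration only.
toy_dictionary = {
    0: np.array([[1, 0, 1, 1], [0, 1, 0, 0], [0, 0, 1, 0], [1, 0, 0, 0]], dtype=np.uint8),
    1: np.array([[0, 1, 0, 0], [1, 1, 0, 1], [0, 0, 0, 1], [0, 1, 1, 0]], dtype=np.uint8),
}

def read_bits(warped, grid=6):
    """Sample a square 0/1 image into a grid x grid bit matrix.

    A cell becomes 1 when most of its pixels are white.
    """
    cell = warped.shape[0] // grid
    bits = np.zeros((grid, grid), dtype=np.uint8)
    for r in range(grid):
        for c in range(grid):
            patch = warped[r * cell:(r + 1) * cell, c * cell:(c + 1) * cell]
            bits[r, c] = 1 if patch.mean() > 0.5 else 0
    return bits

def identify(bits, dictionary):
    """Return (marker_id, rotation) or None when no dictionary entry matches.

    `rotation` is the number of counterclockwise quarter turns applied to
    the observed payload before it matched.
    """
    border = np.concatenate([bits[0], bits[-1], bits[:, 0], bits[:, -1]])
    if border.any():  # the outer ring of cells must be entirely black
        return None
    payload = bits[1:-1, 1:-1]
    for rotation in range(4):
        candidate = np.rot90(payload, rotation)
        for marker_id, pattern in dictionary.items():
            if np.array_equal(candidate, pattern):
                return marker_id, rotation
    return None
```

The lookup is nothing more than array comparisons, which is why decoding adds almost no cost once a candidate square has been found.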

Step 6: Pose Estimation (Optional)

Using the 2D corner coordinates and known physical size, OpenCV’s solvePnP() estimates the marker’s 3D position and orientation relative to the camera.

Why It’s So Fast:

- The binary design minimizes data to process.

- Thresholding + contour-based detection avoids deep learning altogether.

- Corner-based pose estimation is pure geometry - quick matrix math, no neural nets needed.

In practice, ArUco detection runs at 30–60 FPS even on small CPUs - perfect for drones, AR glasses, or our CM4-based smart bowl.

3. The Math Behind the Magic

ArUco relies on two main mathematical building blocks:

PnP (Perspective-n-Point)

Once corner correspondences are known, the camera’s extrinsic parameters (rotation R, translation t) are estimated using:

s [u, v, 1]ᵀ = K [R | t] [X, Y, Z, 1]ᵀ

Here K is the camera’s intrinsic matrix (focal lengths, optical center) and s is a scale factor. This step enables 3D tracking - you can literally know how far and at what angle the marker lies from the camera.
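The projection equation is easy to check numerically. The sketch below applies it in the forward direction (known pose in, pixels out); solvePnP() solves the inverse problem, recovering R and t from the pixel corners. The intrinsics, marker size, and pose are assumed values picked for round numbers, not our calibration.

```python
import numpy as np

def project(points_3d, K, R, t):
    """Apply s [u, v, 1]^T = K [R | t] [X, Y, Z, 1]^T to each 3D point."""
    Rt = np.hstack([R, t.reshape(3, 1)])                 # 3x4 extrinsic matrix
    homogeneous = np.hstack([points_3d, np.ones((len(points_3d), 1))])
    projected = (K @ Rt @ homogeneous.T).T               # each row is s * [u, v, 1]
    return projected[:, :2] / projected[:, 2:3]          # divide out the scale s

# Assumed values for illustration only.
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])
half = 0.025  # a 5 cm marker; corners are in metres in the marker's own frame
corners = np.array([[-half, half, 0.0], [half, half, 0.0],
                    [half, -half, 0.0], [-half, -half, 0.0]])
R = np.eye(3)                    # marker facing the camera head-on
t = np.array([0.0, 0.0, 0.5])    # half a metre in front of the camera

pixels = project(corners, K, R, t)
# The four corners land at (280, 280), (360, 280), (360, 200), (280, 200).
```

The marker spans 80 pixels at 0.5 m; at 1 m it would span 40. That inverse relationship between apparent size and distance is what lets a single camera recover depth from a marker of known physical size.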

Homography Estimation

Each point (X, Y) on the marker’s plane is mapped to its 2D image location (x, y) using:

[x, y, 1]ᵀ ≈ H [X, Y, 1]ᵀ

where H is the 3×3 homography matrix, estimated from the four corner correspondences and defined only up to scale.
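One standard way to compute H from four or more point pairs is the Direct Linear Transform. The NumPy sketch below maps plane points (X, Y) to image points (x, y); it is a textbook formulation with hypothetical function names, and it skips the coordinate normalization a production implementation would usually add for numerical stability.

```python
import numpy as np

def estimate_homography(src, dst):
    """Direct Linear Transform: find H such that dst ~ H @ src.

    `src` holds plane points (X, Y), `dst` the matching image points (x, y).
    At least four pairs are needed, with no three points collinear.
    """
    rows = []
    for (X, Y), (x, y) in zip(src, dst):
        rows.append([-X, -Y, -1, 0, 0, 0, x * X, x * Y, x])
        rows.append([0, 0, 0, -X, -Y, -1, y * X, y * Y, y])
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(np.array(rows, dtype=np.float64))
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]  # fix the free scale so that H[2, 2] == 1

def apply_homography(H, points):
    """Map 2D points through H, dividing out the homogeneous coordinate."""
    pts = np.hstack([points, np.ones((len(points), 1))])
    mapped = (H @ pts.T).T
    return mapped[:, :2] / mapped[:, 2:3]
```

For example, passing the unit square `[[0, 0], [1, 0], [1, 1], [0, 1]]` as `src` and four detected corners as `dst` gives an H that places any point of the marker grid in the image; inverting H does the opposite and unwarps the marker for sampling.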

The entire operation is algebraic - no neural network inference latency, just matrix multiplications and bit comparisons.

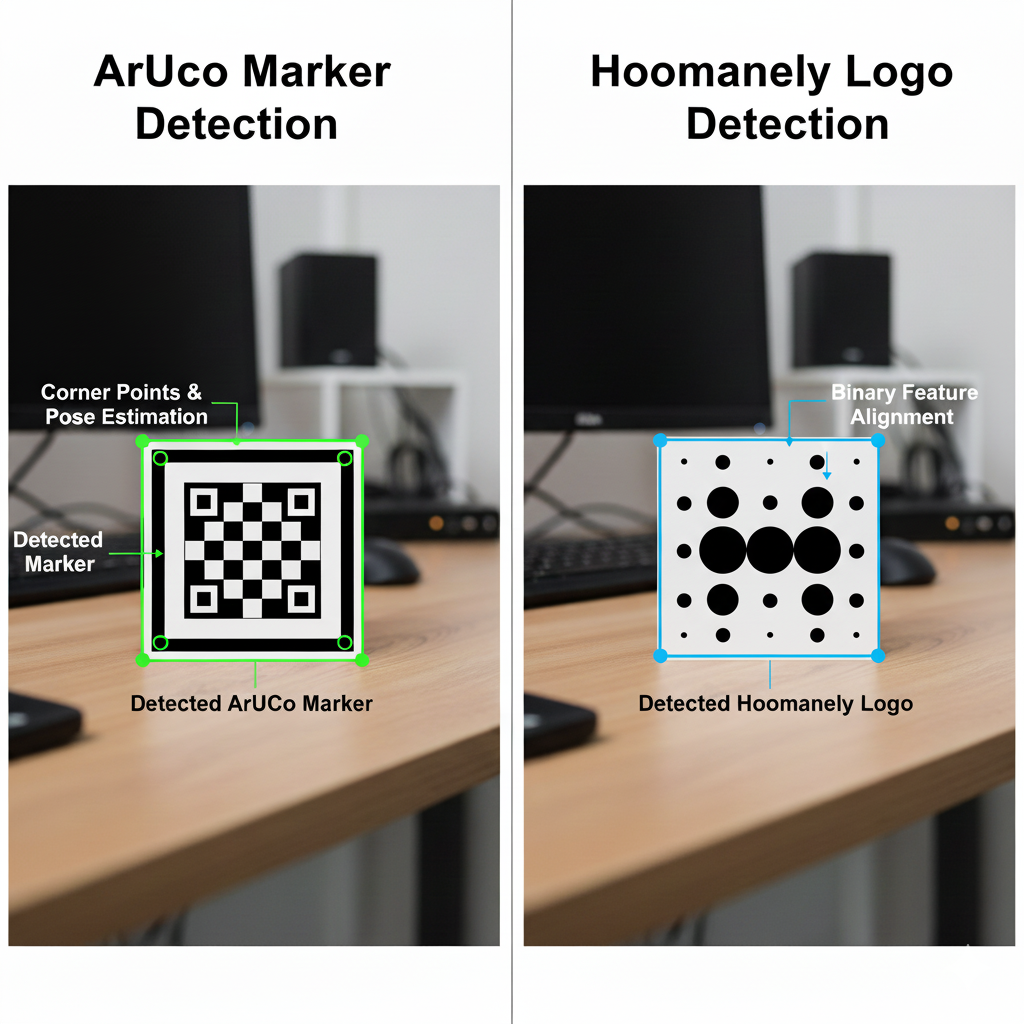

4. From ArUco to Hoomanely: Logo Detection at the Edge

At Hoomanely, we needed to detect our logo on bowls and packaging to align multi-sensor readings (RGB, thermal, proximity) in real-time. The challenge:

- The logo isn’t a binary square.

- Lighting varies (indoor, outdoor, shadowed bowls).

- The camera runs on an ARM7 MPU, a low-power ARM chip.

We took inspiration from ArUco markers’ binary encoding and threshold-based speed to build a custom logo detector.

Our Adapted Approach:

- Edge Simplification: Convert RGB → grayscale → adaptive threshold to isolate the logo outline.

- Shape Filtering: Instead of perfect squares, we use template matching + contour filters to detect the curved logo shape.

- Binary Feature Hashing: Similar to how ArUco encodes bits, we compute a binary hash of key logo regions (high-contrast zones) to quickly reject false positives.

- Pose Alignment: Once detected, the logo corners are used for homography estimation - aligning overlays like temperature readings or motion vectors.
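To give a feel for the binary feature hashing idea, here is a simplified sketch: divide a candidate patch into a grid, set one bit per cell depending on whether the cell is brighter than the patch average, and compare against a reference hash by Hamming distance. This is an illustrative stand-in, not the production detector; the 4x4 grid, the function names, and the `max_distance` tolerance are all assumptions.

```python
import numpy as np

def region_hash(gray, grid=4):
    """Pack a patch into grid*grid bits: 1 where a cell beats the patch mean."""
    h, w = gray.shape
    ch, cw = h // grid, w // grid
    # Average each cell by reshaping into (grid, ch, grid, cw) blocks.
    cells = gray[:ch * grid, :cw * grid].reshape(grid, ch, grid, cw).mean(axis=(1, 3))
    bits = (cells > gray.mean()).flatten()
    return int("".join("1" if b else "0" for b in bits), 2)

def hamming(a, b):
    """Number of bit positions in which two hashes differ."""
    return bin(a ^ b).count("1")

def matches(candidate, reference_hash, max_distance=2):
    """Accept a candidate patch when its hash is close to the reference."""
    return hamming(region_hash(candidate), reference_hash) <= max_distance
```

Since each bit is relative to the patch's own mean, a uniformly dimmer or brighter view of the same pattern yields the same hash, while an unrelated region tends to differ in many bits and is rejected after a single XOR and bit count.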

This hybrid classical pipeline processes a frame in 40–50 ms on the ARM7 MPU (roughly 20–25 FPS) - close to ArUco speeds, but with more flexible logo shapes.

5. Why We Didn’t Just Use Deep Learning

You might wonder - why not just use YOLO or a CNN?

Good question. While CNNs are powerful, they come with trade-offs:

- Latency: A CNN can take 300–700 ms per frame on the ARM7 MPU.

- Energy: Power draw increases with heavy inference cycles.

- Data: Training requires hundreds of labeled logo samples under different lighting conditions.

ArUco-inspired classical CV offers a lightweight, deterministic alternative - perfect for embedded edge setups where we need to:

- Detect the bowl logo reliably.

- Align thermal and RGB frames in sync.

- Conserve battery life.

In short, deep learning helps us detect complex things (like eyes or faces), but classical vision wins for known patterns - it’s simpler, faster, and explainable.

6. Field Results

In real-world testing across multiple bowl units:

- Detection Accuracy: ~92% under varying light conditions.

- Latency: ~40–50 ms per frame (vs ~500 ms for YOLOv8).

These results allowed us to keep the overlays between RGB and thermal frames consistently aligned - ensuring accurate eye-temperature readings even when the bowl moves slightly.

7. Key Takeaways

- Simplicity scales. ArUco’s power lies in its simplicity - binary encoding + geometric math = speed.

- Edge efficiency matters. Lightweight algorithms can outperform deep models in real-time, power-constrained systems.

- Adapt old tricks to new challenges. By combining classical techniques (thresholding, homography) with tailored design, we achieved robust logo detection for Hoomanely.

Author’s Note

At Hoomanely, we’re building intelligent pet systems that can see, hear, and understand the world around your pet - safely, locally, and efficiently. Our logo detection isn’t just a branding element - it’s part of a larger vision system that ensures every measurement, from eye temperature to eating behavior, is spatially aligned and meaningful.

Sometimes, innovation starts not with the newest algorithm - but with a smart reuse of old ideas. ArUco markers are proof that even simple geometry can power the future of perception.