SPI vs. QSPI vs. OctoSPI: When the Extra Pins Are Worth the Routing Complexity

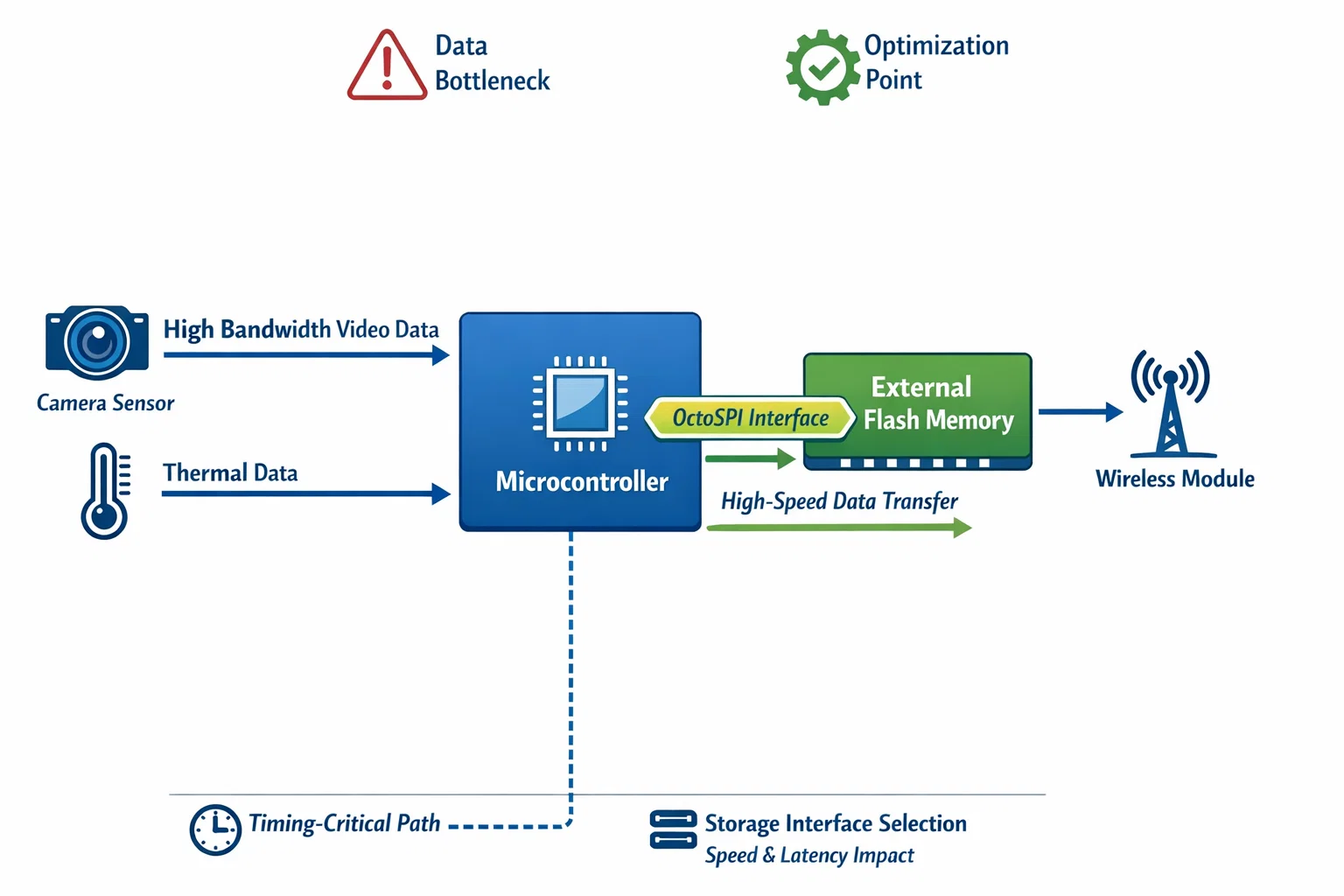

When designing embedded systems with significant data storage requirements, the choice between SPI, QSPI, and OctoSPI interfaces often comes down to a single question: are the extra pins worth the routing complexity? After implementing a high-performance storage system for a multi-sensor camera platform, we discovered that the answer isn't as straightforward as the datasheets suggest.

In real-world applications handling megabytes of sensor data, the theoretical bandwidth improvements of wider interfaces can be overshadowed by implementation challenges, protocol overhead, and system-level bottlenecks that no amount of extra data lines can solve.

The Promise vs. Reality of Parallel Flash Interfaces

Understanding the Theoretical Advantage

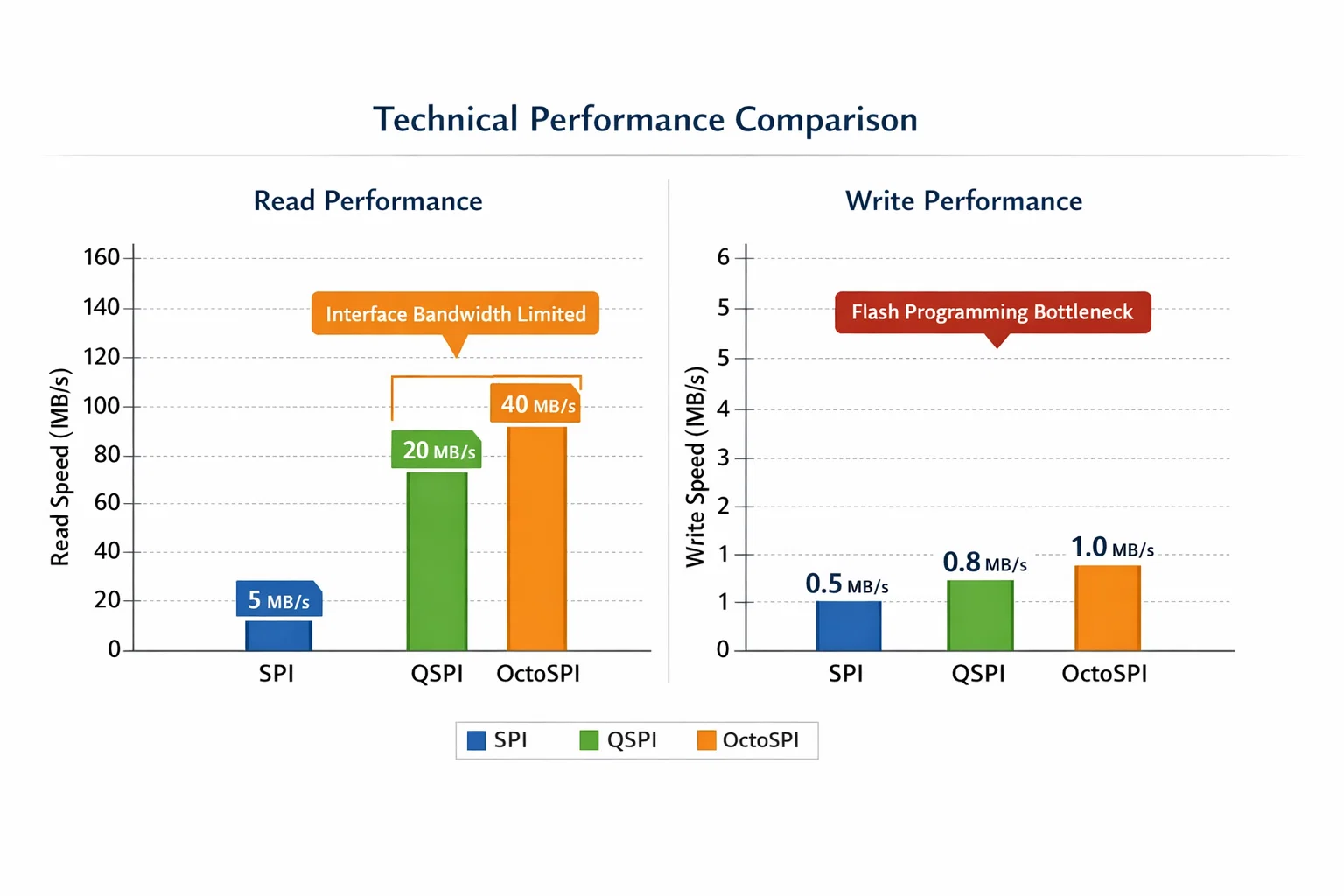

The progression from SPI to QSPI to OctoSPI represents a clear evolution in parallel data transfer capability. Traditional SPI moves one bit per clock cycle in each direction over dedicated MOSI and MISO lines. QSPI uses four bidirectional data lines, theoretically providing 4x the bandwidth. OctoSPI takes this further with eight data lines, promising an 8x improvement over standard SPI.

The Math Looks Compelling: At identical clock frequencies, an OctoSPI interface should deliver dramatically higher throughput than its predecessors. For applications processing large image files or continuous sensor streams, this bandwidth multiplication appears to be exactly what's needed.
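The arithmetic behind that claim can be sketched in a few lines. This is a best-case estimate only: it counts one bit per data line per clock cycle and ignores command, address, and dummy cycles. The function name and the choice of 83 MHz as the example clock are illustrative.

```python
# Peak (best-case) bus throughput: one bit per data line per clock cycle.
# Command, address, and dummy cycles are ignored, so real transfers are slower.

DATA_LINES = {"SPI": 1, "QSPI": 4, "OctoSPI": 8}

def peak_mbytes_per_s(data_lines: int, clock_hz: float) -> float:
    """Return peak throughput in MB/s, where 1 MB = 10**6 bytes."""
    bits_per_s = data_lines * clock_hz
    return bits_per_s / 8 / 1e6

for name, lines in DATA_LINES.items():
    print(f"{name:8s} {peak_mbytes_per_s(lines, 83e6):7.3f} MB/s peak at 83 MHz")
```

The ratio between any two rows is simply the ratio of their data-line counts, which is where the 4x and 8x figures come from.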

The Reality Is More Complex: When implementing these interfaces in production systems, the theoretical advantages quickly encounter practical limitations that textbooks rarely mention.

Real-World Performance: When More Pins Don't Equal More Speed

The 83 MHz Reality Check

Our implementation revealed a critical insight: higher interface complexity doesn't automatically translate to proportional performance gains. Testing with a high-speed flash memory controller capable of OctoSPI operation, we discovered that increasing the clock speed from 83 MHz to 125 MHz provided no measurable improvement in write performance.

The Bottleneck: Flash memory write operations are fundamentally limited by the physical page programming time, not the interface speed. Once a page of data has been shifted in, the flash spends a fixed internal time programming its cells, and a faster or wider bus cannot shorten that step. While read operations benefit significantly from wider interfaces, write performance, often the critical path for sensor data storage, remains constrained by the flash memory's internal characteristics.

This finding challenges the common assumption that interface bandwidth is the primary performance limiter in storage systems.

System-Level Performance Analysis

When benchmarking 517 KB camera image writes (a realistic workload for vision systems), the results were surprising:

- OctoSPI at 83 MHz: Delivered consistent performance with excellent stability

- OctoSPI at 125 MHz: No performance improvement, with occasional stability issues

- QSPI equivalent: Would theoretically provide similar performance for this write-limited workload

Key Insight: For write-intensive applications, the additional complexity of OctoSPI may not justify the routing challenges, especially when system performance is limited by flash memory characteristics rather than interface speed.
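A rough model shows why a write-limited workload behaves this way. The sketch below splits the write time into bus transfer and internal page programming. The 256-byte page size and 200 µs page program time are placeholder assumptions, not figures from our hardware, and the model assumes transfer and programming do not overlap; substitute values from your own flash datasheet.

```python
import math

IMAGE_BYTES = 517 * 1024  # the 517 KB image, assuming 1 KB = 1024 bytes

def write_time_s(total_bytes, data_lines, clock_hz,
                 page_bytes=256, page_program_s=200e-6):
    """Estimate the time to write total_bytes one page at a time.

    page_bytes and page_program_s are placeholder assumptions. The model
    treats bus transfer and internal programming as strictly sequential.
    """
    pages = math.ceil(total_bytes / page_bytes)
    transfer_s = total_bytes * 8 / (data_lines * clock_hz)  # shifting data over the bus
    program_s = pages * page_program_s                      # internal page programming
    return transfer_s + program_s

for label, lines, clock in [("QSPI    @  83 MHz", 4, 83e6),
                            ("OctoSPI @  83 MHz", 8, 83e6),
                            ("OctoSPI @ 125 MHz", 8, 125e6)]:
    print(f"{label}: {write_time_s(IMAGE_BYTES, lines, clock) * 1e3:.1f} ms")
```

With these placeholder values the three configurations land within a few percent of each other, because the programming term dwarfs the transfer term. The numbers are illustrative and are not a substitute for measuring the real device.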

The Routing Complexity Tax: Hidden Costs of Wide Interfaces

Signal Integrity Challenges

As interface width increases, so do the signal integrity requirements. OctoSPI demands careful attention to:

Matched Trace Lengths: Eight data lines plus clock and control signals require precise length matching to maintain timing relationships. Small variations in trace length become significant at high frequencies.

Crosstalk Management: More parallel traces increase the potential for crosstalk between adjacent signals. This necessitates larger spacing between traces or additional ground planes.

Power Distribution: High-speed digital switching across multiple pins simultaneously creates significant current spikes, requiring robust power delivery and decoupling.
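To put rough numbers on the length-matching point above, the sketch below converts a trace-length mismatch into timing skew and expresses it as a fraction of the clock period. The default of 6.7 ps/mm is a rule-of-thumb assumption for an FR-4 inner layer, not a measured value from our board; the real figure depends on stackup and dielectric, and the acceptable fraction depends on the setup and hold margins of your controller and flash.

```python
def skew_fraction(length_mismatch_mm: float, clock_hz: float,
                  delay_ps_per_mm: float = 6.7) -> float:
    """Return trace-length skew as a fraction of one clock period.

    delay_ps_per_mm is an assumed propagation delay (rule of thumb for FR-4);
    replace it with the value for your own stackup.
    """
    skew_s = length_mismatch_mm * delay_ps_per_mm * 1e-12
    period_s = 1.0 / clock_hz
    return skew_s / period_s

for clock_hz in (83e6, 125e6):
    fraction = skew_fraction(length_mismatch_mm=5.0, clock_hz=clock_hz)
    print(f"{clock_hz / 1e6:.0f} MHz: 5 mm mismatch uses {fraction:.2%} of the period")
```

The same physical mismatch consumes a larger share of the period as the clock rises, and a double-data-rate mode would halve the available window again.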

PCB Real Estate Impact

The transition from SPI to OctoSPI dramatically affects board layout:

- SPI: 4 pins (CLK, MOSI, MISO, CS)

- QSPI: 6 pins (CLK, IO0-IO3, CS)

- OctoSPI: 11 pins (CLK, IO0-IO7, CS, DQS)

In space-constrained designs, the additional pins can force layout compromises that impact other system functions. The pin count increase also affects microcontroller selection and package requirements.
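One way to weigh the pin counts above is to ask how many data bits each routed signal buys per clock cycle. The sketch below encodes the three signal lists and computes that ratio; the metric itself is an illustrative convenience, not a standard figure of merit.

```python
SIGNALS = {
    "SPI": ["CLK", "MOSI", "MISO", "CS"],
    "QSPI": ["CLK", "IO0", "IO1", "IO2", "IO3", "CS"],
    "OctoSPI": ["CLK"] + [f"IO{i}" for i in range(8)] + ["CS", "DQS"],
}

# Data bits moved per clock cycle in one direction.
DATA_LINES = {"SPI": 1, "QSPI": 4, "OctoSPI": 8}

def bits_per_clock_per_signal(interface: str) -> float:
    """Data bits per clock cycle divided by the number of routed signals."""
    return DATA_LINES[interface] / len(SIGNALS[interface])

for name in SIGNALS:
    print(f"{name:8s} {len(SIGNALS[name]):2d} signals, "
          f"{bits_per_clock_per_signal(name):.2f} bits/clock/signal")
```

By this measure the jump from SPI to QSPI is large, while the step from QSPI to OctoSPI adds five signals for a comparatively small gain per signal.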

When the Extra Pins Pay Off: Read-Intensive Applications

The Read Performance Advantage

While write performance showed minimal improvement, read operations demonstrated the clear advantages of wider interfaces. Testing revealed significant benefits for read-intensive workloads:

Burst Read Operations: Large sequential reads, such as loading stored images for transmission, showed dramatic improvements with wider interfaces. The ability to transfer multiple bits per clock cycle directly translates to faster data retrieval.

Random Access Performance: Applications requiring frequent access to stored configuration data or lookup tables benefit substantially from the reduced latency of parallel interfaces.
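How much of the ideal speedup a given read actually sees depends on its size, because every read also pays a fixed cost in command, address, and dummy cycles. The sketch below models that. The overhead values are assumptions: 40 cycles for SPI corresponds to an 8-bit command, 24-bit address, and 8 dummy cycles, and the 20 cycles used for the wide interface is a placeholder; actual counts depend on the flash device and its configured mode.

```python
def read_cycles(data_bytes: int, data_lines: int, overhead_cycles: int) -> int:
    """Clock cycles for one read: fixed overhead plus the data phase."""
    data_cycles = (data_bytes * 8 + data_lines - 1) // data_lines  # ceiling division
    return overhead_cycles + data_cycles

def speedup_over_spi(data_bytes: int, data_lines: int,
                     wide_overhead_cycles: int,
                     spi_overhead_cycles: int = 40) -> float:
    """Ratio of SPI cycles to wide-interface cycles for the same read."""
    spi = read_cycles(data_bytes, 1, spi_overhead_cycles)
    wide = read_cycles(data_bytes, data_lines, wide_overhead_cycles)
    return spi / wide

for size in (16, 256, 4096):
    ratio = speedup_over_spi(size, data_lines=8, wide_overhead_cycles=20)
    print(f"{size:5d}-byte read: {ratio:.2f}x over SPI on eight data lines")
```

Large bursts approach the full 8x, while small reads recover only part of it, so it is worth checking the typical transfer size of a lookup-heavy workload before counting on the headline figure.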

Application-Specific Considerations

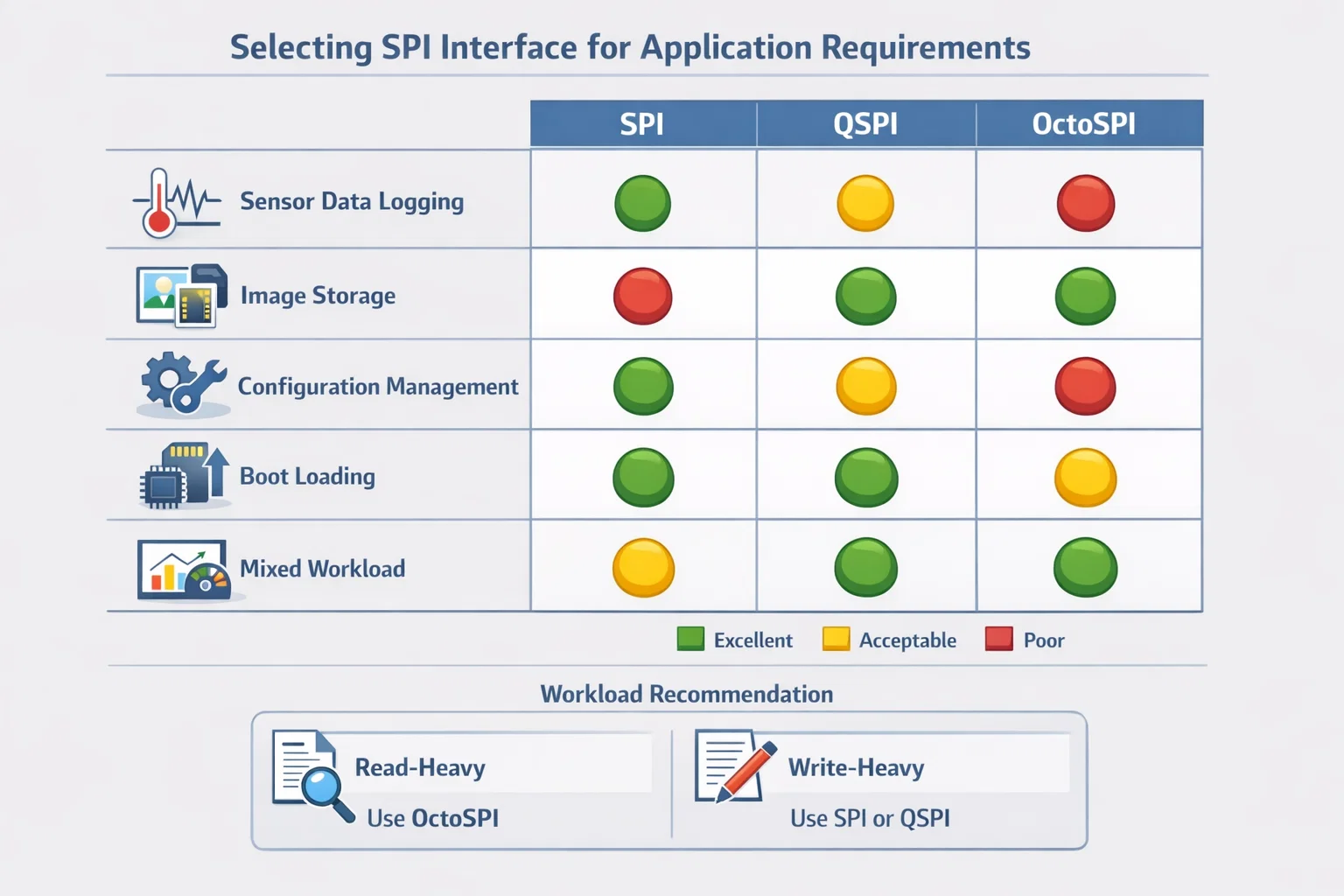

The decision between SPI variants should align with application requirements:

Write-Heavy Workloads: Continuous sensor logging, image capture, or data recording applications may see minimal benefit from wide interfaces due to flash programming limitations.

Read-Heavy Workloads: Applications that frequently access stored data, such as boot loaders, configuration managers, or data playback systems, can fully exploit the bandwidth advantages of wider interfaces.

Mixed Workloads: Systems with balanced read/write requirements need careful analysis to determine if the read performance gains justify the implementation complexity.

Implementation Strategy: Balancing Performance and Complexity

The Pragmatic Approach

Based on real-world implementation experience, consider this decision framework:

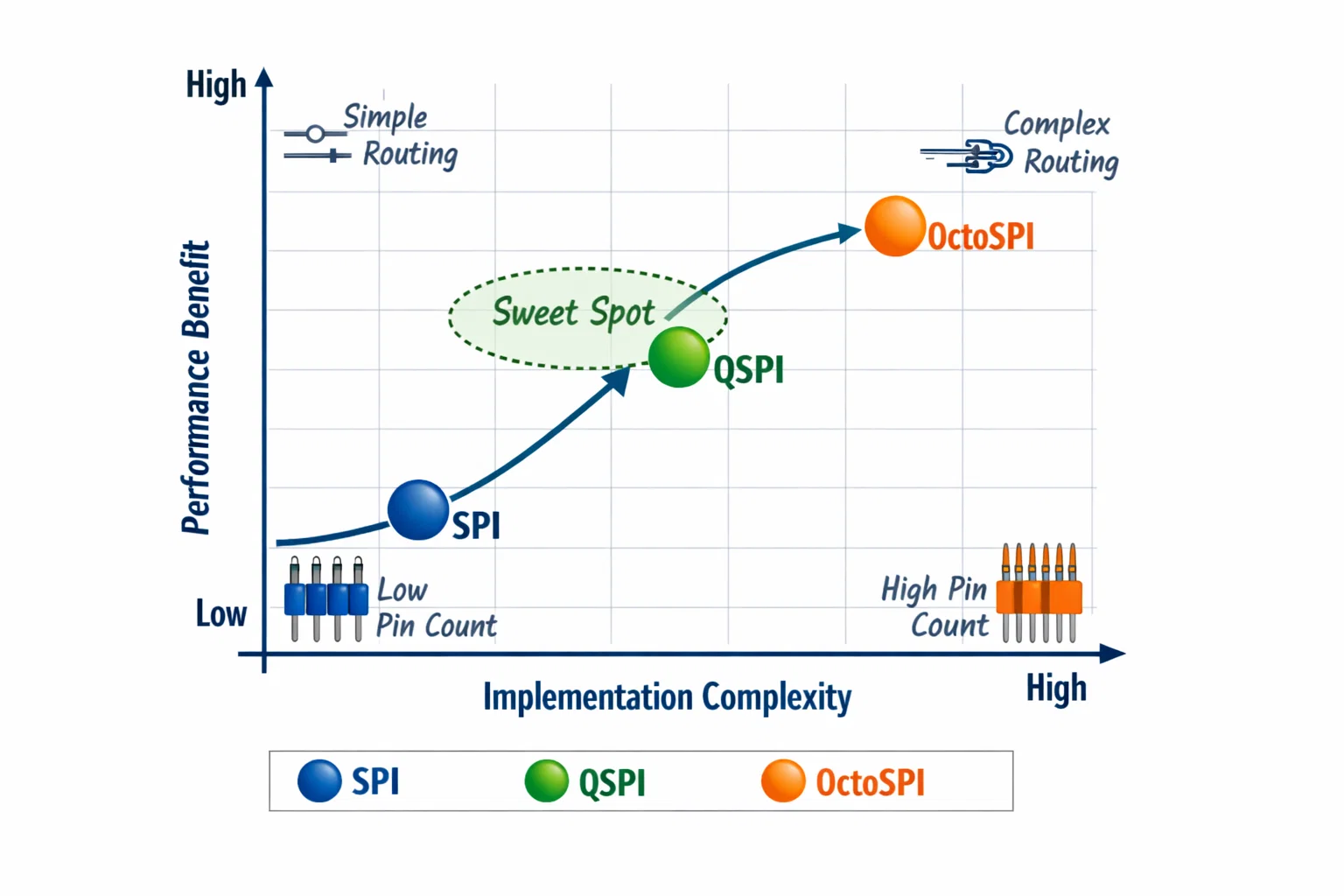

Start with QSPI: For most applications, QSPI provides an excellent balance of performance improvement and implementation complexity. The four data lines offer substantial benefits over SPI while keeping routing manageable.

Choose OctoSPI When: Read performance is critical, board space allows for proper routing, and the application can justify the additional complexity. High-performance computing applications or systems with large, frequently accessed datasets are ideal candidates.

Stick with SPI If: Board space is severely constrained, cost is paramount, or the application has minimal storage performance requirements.
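The three rules above can be written down as a small decision function. This is one possible encoding, not a definitive procedure: the field names are hypothetical, and the order in which the rules are checked (SPI constraints first, then OctoSPI, with QSPI as the default) is an assumption about how to resolve conflicts.

```python
from dataclasses import dataclass

@dataclass
class Requirements:
    # All field names are illustrative; they mirror the criteria in the text.
    read_performance_critical: bool = False
    board_space_allows_routing: bool = False
    complexity_justified: bool = False
    severely_space_constrained: bool = False
    cost_paramount: bool = False
    minimal_storage_performance: bool = False

def recommend_interface(req: Requirements) -> str:
    """Return 'SPI', 'QSPI', or 'OctoSPI' for the given requirements."""
    # Hard constraints win first (assumed precedence).
    if (req.severely_space_constrained or req.cost_paramount
            or req.minimal_storage_performance):
        return "SPI"
    # OctoSPI only when every condition for it holds.
    if (req.read_performance_critical and req.board_space_allows_routing
            and req.complexity_justified):
        return "OctoSPI"
    # Otherwise start with QSPI.
    return "QSPI"

print(recommend_interface(Requirements(read_performance_critical=True)))
```

A function like this is mainly useful as a checklist during design review; the real decision still rests on measurements of the target workload.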

Design Validation Recommendations

Before committing to a wider interface:

- Profile Your Workload: Measure the actual read/write ratio of your application under realistic conditions

- Prototype Early: Build test boards with the proposed interface to validate signal integrity and performance

- Consider Alternative Optimizations: Sometimes better flash memory selection or improved software algorithms provide greater benefits than interface changes
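Profiling the workload can start with something as simple as tallying bytes read and written from a storage access trace. The sketch below assumes a hypothetical trace format of `(kind, num_bytes)` tuples; how you capture that trace (driver hooks, logging wrappers) is outside its scope.

```python
from collections import Counter

def read_fraction(ops) -> float:
    """Return the share of transferred bytes that were reads (0.0 to 1.0).

    ops is an iterable of (kind, num_bytes) tuples, where kind is
    'read' or 'write'. This trace format is an assumption for illustration.
    """
    totals = Counter()
    for kind, num_bytes in ops:
        if kind not in ("read", "write"):
            raise ValueError(f"unknown operation kind: {kind!r}")
        totals[kind] += num_bytes
    total = totals["read"] + totals["write"]
    if total == 0:
        raise ValueError("trace contains no transferred bytes")
    return totals["read"] / total

trace = [("write", 300), ("read", 100)]
print(f"read share of traffic: {read_fraction(trace):.0%}")
```

A trace dominated by writes points toward the flash programming time as the limiter, while a read-dominated trace is where a wider interface has the most room to help.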

At Hoomanely, our mission to revolutionize pet healthcare through precision monitoring technology directly benefits from understanding these storage interface trade-offs. When developing wearable devices that continuously monitor pet health metrics, every design decision impacts the final system's reliability and performance.

Our approach combines Edge AI with multi-sensor fusion to generate clinical-grade intelligence at home. This breakthrough requires sophisticated data management—capturing biosensor readings, processing camera data for behavioral analysis, and maintaining reliable wireless connectivity. The storage system must handle diverse data streams efficiently while operating within the power and space constraints of a pet-wearable device.

By mastering the practical implications of storage interface selection, we ensure that our devices can reliably capture and process the complex data streams necessary for precision health monitoring. The lessons learned from high-performance embedded storage implementation directly enable the technology platform that transforms raw health data into personalized insights for pet care.

The Engineering Trade-off Matrix

Making the Right Choice

The decision between SPI variants isn't just technical—it's strategic. Consider these factors:

Performance Requirements vs. Implementation Cost: Wide interfaces require more sophisticated hardware design, longer development cycles, and potentially higher manufacturing costs.

Signal Integrity vs. Board Complexity: Achieving reliable high-speed operation with multiple data lines demands careful PCB design and may require additional layers or specialized materials.

Future Scalability vs. Current Needs: Selecting a more capable interface than currently required provides upgrade headroom but at the cost of immediate complexity.

Beyond Bandwidth: System-Level Thinking

The most successful implementations consider storage interfaces as part of a complete system optimization strategy. Sometimes, the best performance comes from:

- Better Memory Management: Optimized caching and buffering strategies can reduce storage access requirements

- Data Compression: Reducing the amount of data that needs to be stored often provides greater benefits than faster storage interfaces

- Parallel Processing: Using multiple storage devices with simpler interfaces can outperform single wide interfaces in some applications
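As a small illustration of the compression point, the sketch below compresses a buffer with Python's standard `zlib` module and counts how many flash pages each version would occupy. The 256-byte page size and the synthetic, highly repetitive buffer are assumptions; real sensor or image data will compress far less predictably, and an embedded target would use its own compression library.

```python
import math
import zlib

def pages_needed(payload: bytes, page_bytes: int = 256) -> int:
    """Number of flash pages required to store payload (page size assumed)."""
    return math.ceil(len(payload) / page_bytes)

# Synthetic 16 KiB buffer with a repeating pattern, chosen to compress well.
sample = bytes(range(256)) * 64
compressed = zlib.compress(sample, 6)

print(f"raw: {pages_needed(sample)} pages, "
      f"compressed: {pages_needed(compressed)} pages")
```

Since each page carries a fixed programming cost, fewer pages means less write time regardless of interface width, at the price of CPU time spent compressing.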

Key Takeaways

- Interface bandwidth is often not the limiting factor in storage performance—flash memory write characteristics frequently dominate system throughput.

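- Routing complexity rises sharply with interface width, as the signal count grows from 4 to 6 to 11 and every added line must be length-matched and protected from crosstalk, requiring careful cost-benefit analysis for each application.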

- Read and write workloads respond differently to interface improvements—characterize your specific application thoroughly.

- Signal integrity challenges increase significantly with parallel interfaces—factor this into development timeline and cost estimates.

- System-level optimization often trumps interface optimization—consider the complete data path before defaulting to the widest available interface.

This analysis draws from hands-on experience implementing high-performance storage systems in resource-constrained embedded environments. The performance data and design insights reflect real-world challenges that extend beyond theoretical specifications. When evaluating storage interface options, remember that the "fastest" interface on paper may not deliver the best system-level performance for your specific application.

The intersection of hardware capabilities and software optimization demands a nuanced approach that considers both immediate requirements and long-term system evolution. Whether developing healthcare monitoring devices or other data-intensive embedded systems, the storage interface decision should align with overall system architecture and performance priorities.