The Hidden Cost of Bad Labels: Why dataset curation is 80% of the ML job

The Uncomfortable Truth About ML Projects

Every machine learning project has a dirty secret: the model is usually not the problem.

When a model underperforms, the instinct is to reach for a bigger architecture, more layers, or fancier training tricks. But in our experience building machine learning systems at Hoomanely, the culprit is almost never the model. It's the data.

Bad labels are insidious. They don't throw errors. They don't crash your pipeline. They quietly poison your training set, teaching your model to learn patterns that don't exist—or worse, to ignore patterns that do. And by the time you notice, you've burned weeks of compute and engineering time chasing phantom bugs.

What "Bad Labels" Actually Means

When we talk about bad labels, we're not just talking about obvious mistakes like mislabeling a cat as a dog. Those are easy to catch. The dangerous errors are subtler:

| Error Type | Description | Impact |
|---|---|---|
| Boundary slop | Mask edges include background pixels | Model learns to include irrelevant context |
| Partial coverage | Annotation misses part of the object | Model learns incomplete representations |
| Inconsistent standards | Different annotators apply different rules | Model receives conflicting supervision |
| Silent omissions | Objects present but not labeled | Model learns to ignore valid instances |

In segmentation tasks—like the dog masking pipeline we built for Everbowl—boundary quality matters enormously. A mask that includes floor tiles teaches the model that floor tiles are part of dogs. Multiply that by thousands of examples, and you've baked a systematic bias into your weights.
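Boundary slop and partial coverage can be quantified when a small set of hand-checked reference masks is available. The sketch below is a minimal illustration, not our production code: it assumes masks are equal-sized grids of 0/1 values, and the function name `mask_overlap_stats` and the toy masks are hypothetical.

```python
def mask_overlap_stats(proposed, reference):
    """Compare two binary masks given as equal-sized lists of 0/1 rows."""
    tp = fp = fn = 0
    for prop_row, ref_row in zip(proposed, reference):
        for p, r in zip(prop_row, ref_row):
            if p and r:
                tp += 1  # pixel labeled as object in both masks
            elif p and not r:
                fp += 1  # labeled as object, actually background (boundary slop)
            elif r and not p:
                fn += 1  # object pixel the proposed mask missed (partial coverage)
    union = tp + fp + fn
    labeled = tp + fp
    actual = tp + fn
    return {
        "iou": tp / union if union else 1.0,
        "background_fraction": fp / labeled if labeled else 0.0,
        "missed_fraction": fn / actual if actual else 0.0,
    }


# Toy example: the proposed mask spills one column past the object.
reference = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
sloppy = [
    [0, 0, 0, 0],
    [0, 1, 1, 1],
    [0, 1, 1, 1],
    [0, 0, 0, 0],
]
stats = mask_overlap_stats(sloppy, reference)
print(stats)
```

Here a third of the labeled pixels are background, which is exactly the kind of error that looks harmless on a single image and becomes a systematic bias across thousands.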

How Bad Labels Accumulate

The frustrating thing about label quality is that it degrades gradually. No single bad label ruins a model. It's the accumulation that kills you.

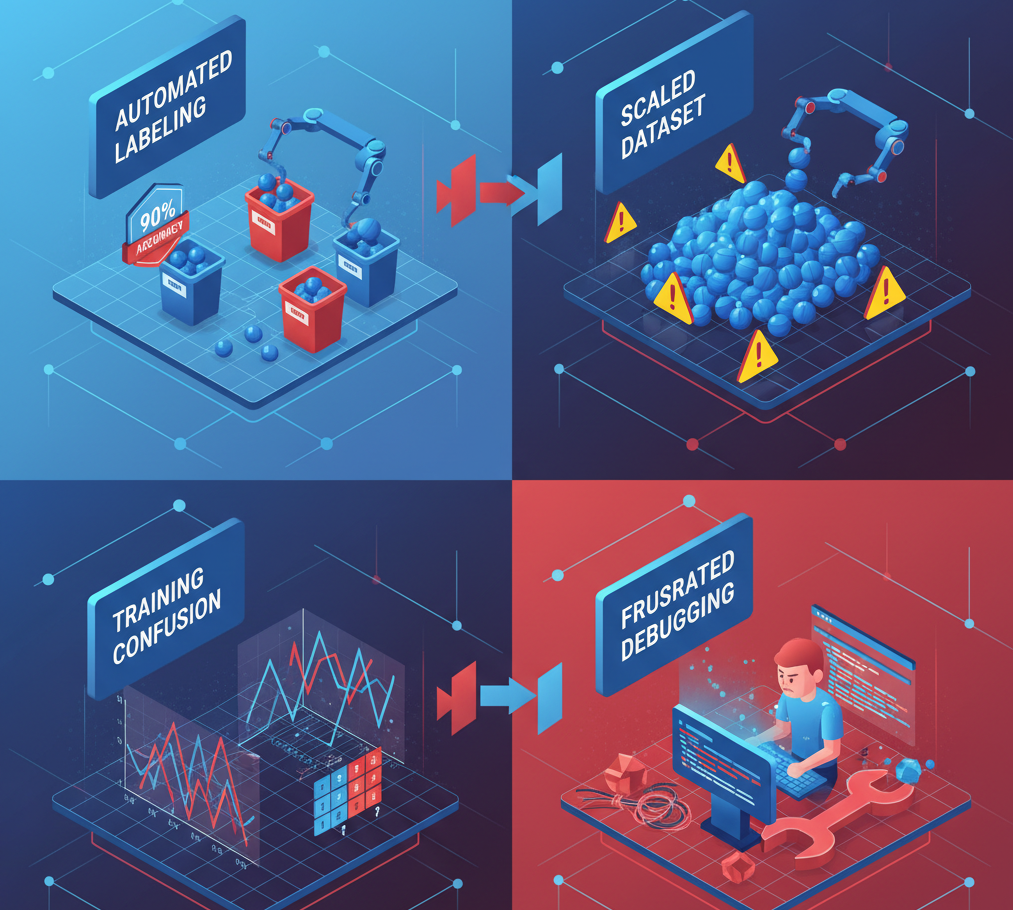

Stage 1: Optimistic automation. You use a pretrained model to generate initial labels. The outputs look pretty good—maybe 90% accurate. You move on.

Stage 2: Scale without review. You run auto-labeling on your full dataset. Ten thousand images, ten thousand labels. Nobody checks them all.

Stage 3: Training proceeds. Metrics look reasonable. You ship something.

Stage 4: Edge cases surface. Users report weird behavior. You debug for days before realizing the training data was teaching the wrong thing all along.

We've lived this cycle. The lesson was clear: automation without curation is just automated garbage.

The 80/20 Reality

There's a saying in ML that data work is 80% of the job. For production systems, that's an understatement.

The counterintuitive insight is that spending more time on data lets you spend less time on everything else. A clean dataset trains faster, converges reliably, and produces a model that just works—without debugging spirals that eat weeks.

We've seen cases where a 10% improvement in label quality translated to a 30% improvement in model accuracy, with zero architecture changes. The model wasn't the bottleneck. The signal-to-noise ratio was.

This mirrors what we've observed across the industry: teams that treat data as an afterthought struggle endlessly with model performance, while teams that prioritize data quality ship faster and iterate more confidently. The math is simple—if your training data contains 15% label errors, you're essentially asking your model to learn from noise 15% of the time. No amount of architectural sophistication can overcome that fundamental handicap.

Building Curation Into the Pipeline

After getting burned, we redesigned our workflow to treat curation as a core engineering task.

1. Automated labeling with bounded trust. We use foundation models (Grounding DINO, SAM) to generate initial annotations—but treat them as proposals, not ground truth. Every auto-generated label is suspect until reviewed.

2. Visual review tooling. We built lightweight tools that let engineers quickly scan labeled images, flag problems, and make corrections. Speed is key: if reviewing takes more than 2 seconds per image, people won't do it. Our curator shows the image, the mask overlay, and two buttons: "Keep" or "Delete."

The interface design matters more than you'd think. We tried several iterations before landing on this minimal approach. Early versions included fields for detailed error categorization and confidence ratings—nobody used them. Friction kills adoption. The final version is almost brutally simple, and that's exactly why it works.

3. Quality metrics as first-class outputs. We track label quality alongside model metrics. What percentage of auto-labels passed review? What are the common failure modes? This feeds back into improving auto-labeling itself.

These metrics serve double duty: they guide where to focus manual review efforts and they help us tune our foundation model prompts. When we noticed that auto-labeling consistently struggled with certain dog breeds, we adjusted our prompting strategy and saw immediate improvements in proposal quality.

4. Stratified sampling. You can't review every image, but you can review strategically. We sample across lighting conditions, angles, breeds, and background complexity to ensure representative coverage.

We maintain a "diversity score" for our dataset that tracks coverage across these dimensions. When the score dips in any category, that becomes our priority for the next review session. This systematic approach prevents the common trap of over-optimizing for common cases while edge cases accumulate unnoticed.
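The workflow above can be sketched in a few lines. This is an illustration under assumptions, not our actual tooling: each auto-generated label is a dict with a hypothetical `status` field (`"proposed"` until a reviewer marks it `"kept"` or `"deleted"`), and the strata fields `breed` and `lighting` stand in for whatever dimensions a dataset tracks. It does not reproduce the diversity score itself, only per-group pass rates and a stratified draw of unreviewed proposals.

```python
import random
from collections import defaultdict


def pass_rate_by(proposals, key):
    """Share of reviewed proposals that were kept, grouped by `key`."""
    kept = defaultdict(int)
    reviewed = defaultdict(int)
    for p in proposals:
        if p["status"] in ("kept", "deleted"):
            reviewed[p[key]] += 1
            if p["status"] == "kept":
                kept[p[key]] += 1
    return {group: kept[group] / reviewed[group] for group in reviewed}


def stratified_review_sample(proposals, strata_keys, per_stratum, seed=0):
    """Pick up to `per_stratum` unreviewed proposals from each stratum."""
    rng = random.Random(seed)  # fixed seed keeps review batches reproducible
    buckets = defaultdict(list)
    for p in proposals:
        if p["status"] == "proposed":
            buckets[tuple(p[k] for k in strata_keys)].append(p)
    sample = []
    for stratum in sorted(buckets):
        items = buckets[stratum]
        rng.shuffle(items)
        sample.extend(items[:per_stratum])
    return sample


# Made-up records for illustration only.
proposals = [
    {"id": 1, "breed": "pug", "lighting": "dim", "status": "kept"},
    {"id": 2, "breed": "pug", "lighting": "dim", "status": "deleted"},
    {"id": 3, "breed": "pug", "lighting": "bright", "status": "proposed"},
    {"id": 4, "breed": "husky", "lighting": "bright", "status": "kept"},
    {"id": 5, "breed": "husky", "lighting": "bright", "status": "proposed"},
    {"id": 6, "breed": "husky", "lighting": "bright", "status": "proposed"},
]

rates = pass_rate_by(proposals, "breed")
to_review = stratified_review_sample(proposals, ["breed", "lighting"], per_stratum=1)
print(rates)
print([p["id"] for p in to_review])
```

A low pass rate for one group (pugs, in this toy data) is a hint about where to look at the auto-labeling prompts, and the stratified draw keeps rare combinations from being crowded out of the review queue.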

What Could Go Wrong

A few mistakes worth sharing:

Trusting confidence scores. Auto-labeling models output confidence scores, and it's tempting to filter on them. But a high confidence score is not a reliable proxy for correctness: models can be confidently wrong. Visual review is irreplaceable.
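One way to check how far confidence can be trusted on a given dataset is to compare it against review outcomes. The sketch below assumes each reviewed label is a `(confidence, kept)` pair; the function name, the bin edges, and the sample values are all made up for illustration.

```python
def keep_rate_by_confidence(reviewed, bin_edges=(0.0, 0.5, 0.8, 0.9, 1.0)):
    """Observed keep rate of reviewed labels within each confidence bin.

    Returns rows of (low, high, count, keep_rate); empty bins are skipped.
    """
    rows = []
    last_index = len(bin_edges) - 2
    for i, (low, high) in enumerate(zip(bin_edges, bin_edges[1:])):
        in_bin = [
            kept
            for conf, kept in reviewed
            # The final bin is closed on the right so conf == 1.0 is counted.
            if low <= conf < high or (i == last_index and conf == high)
        ]
        if in_bin:
            rows.append((low, high, len(in_bin), sum(in_bin) / len(in_bin)))
    return rows


# Made-up review outcomes: (model confidence, reviewer kept the label?)
reviewed = [
    (0.95, True), (0.97, False), (0.92, True), (0.99, False),
    (0.85, True), (0.60, True), (0.55, False), (0.30, False),
]
rows = keep_rate_by_confidence(reviewed)
for low, high, count, rate in rows:
    print(f"confidence {low:.1f}-{high:.1f}: n={count}, keep rate={rate:.2f}")
```

If the keep rate in the top bin is not clearly higher than in the bins below it, a confidence threshold is filtering on something other than correctness.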

Treating curation as one-time. Teams initially curate, train, and move on. But data distributions shift. New edge cases emerge. Curation needs to be continuous.

Ignoring annotator fatigue. Teams often push for marathon review sessions, but quality plummets after the first hour. Limit sessions to 45 minutes with breaks, and track per-annotator accuracy to catch when someone needs to step away.
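Tracking per-annotator accuracy needs something to measure against. A minimal sketch, assuming a few items with known answers ("gold" items) are mixed into each review session and that every decision records how many minutes into the session it was made; the field names and the 15-minute bucket size are hypothetical.

```python
from collections import defaultdict


def accuracy_by_session_minute(decisions, bucket_minutes=15):
    """Agreement with gold answers per annotator, bucketed by session time."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for d in decisions:
        # Round the timestamp down to the start of its time bucket.
        bucket = int(d["minutes_in"] // bucket_minutes) * bucket_minutes
        key = (d["annotator"], bucket)
        totals[key] += 1
        if d["decision"] == d["gold"]:
            hits[key] += 1
    return {key: hits[key] / totals[key] for key in sorted(totals)}


# Made-up decisions on gold items from a single session.
decisions = [
    {"annotator": "a1", "minutes_in": 5, "decision": "keep", "gold": "keep"},
    {"annotator": "a1", "minutes_in": 12, "decision": "delete", "gold": "delete"},
    {"annotator": "a1", "minutes_in": 50, "decision": "keep", "gold": "delete"},
    {"annotator": "a1", "minutes_in": 55, "decision": "keep", "gold": "keep"},
]
fatigue = accuracy_by_session_minute(decisions)
print(fatigue)
```

A drop in the later buckets is the signal to end the session, whatever the nominal time limit says.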

Key Takeaways

Bad labels are silent killers. They don't crash training—they make your model subtly, persistently wrong.

Automation is a starting point. Foundation models generate labels at scale, but those labels are proposals that need human oversight before they count as ground truth.

Curation is engineering. Treat it with the same rigor as code. Version your datasets. Track quality metrics. Build tools that make the work sustainable.

The ROI is real. Time invested in data quality pays dividends in faster training, better accuracy, and less debugging. In our experience, every hour spent on curation saves three to five hours downstream.

The next time your model underperforms, resist the urge to reach for a bigger architecture. Look at your labels first. The answer is probably hiding there.