Why Transfer Learning Failed For Our Audio Noise Cancellation Pipeline

Introduction

Transfer learning usually feels like a shortcut that just works. Pretrain on a large dataset, fine-tune on your task, and let scale do the heavy lifting. That approach worked well for us in vision - but audio broke that assumption in a very specific, painful way.

At Hoomanely, we were trying to build a privacy-preserving dog audio pipeline: detect eating sounds, suppress human speech, and later enable anomaly detection — all on edge devices. We relied heavily on a prompt-based audio separation transformer as a teacher model, assuming its outputs would be “good enough” to fine-tune downstream models.

They weren’t.

This post explains why transfer learning failed in our audio setup, how a seemingly small 20% noisy output completely derailed training, and why a smaller, cleaner dataset + diffusion-based augmentation + YAMNet fine-tuning outperformed teacher–student distillation by a wide margin.

Context: Audio at Hoomanely

Hoomanely’s mission is to convert raw pet signals into daily, actionable intelligence - responsibly and privately. Audio is one of the hardest modalities in that equation.

Our constraints shaped everything:

- Always-on microphones in homes

- Strict privacy requirements (human speech must not leak)

- Edge inference (limited compute, low latency)

- Extremely subtle target signals (chewing, licking, bowl movement)

Unlike vision, audio errors are invisible. You don’t see what went wrong — you hear it, and humans are notoriously bad at auditing thousands of clips.

The Original Plan

The original pipeline looked reasonable:

- Collect raw bowl audio

- Use an audio separation transformer with prompts like

“dog eating, chewing, drinking” - Treat the separated output as clean ground truth

- Fine-tune downstream models (Conv-TasNet / classifiers)

- Deploy on edge

This was essentially teacher–student distillation, with the transformer acting as a high-capacity oracle.

On paper, it made sense.

Where Things Started Breaking

The 20% Problem

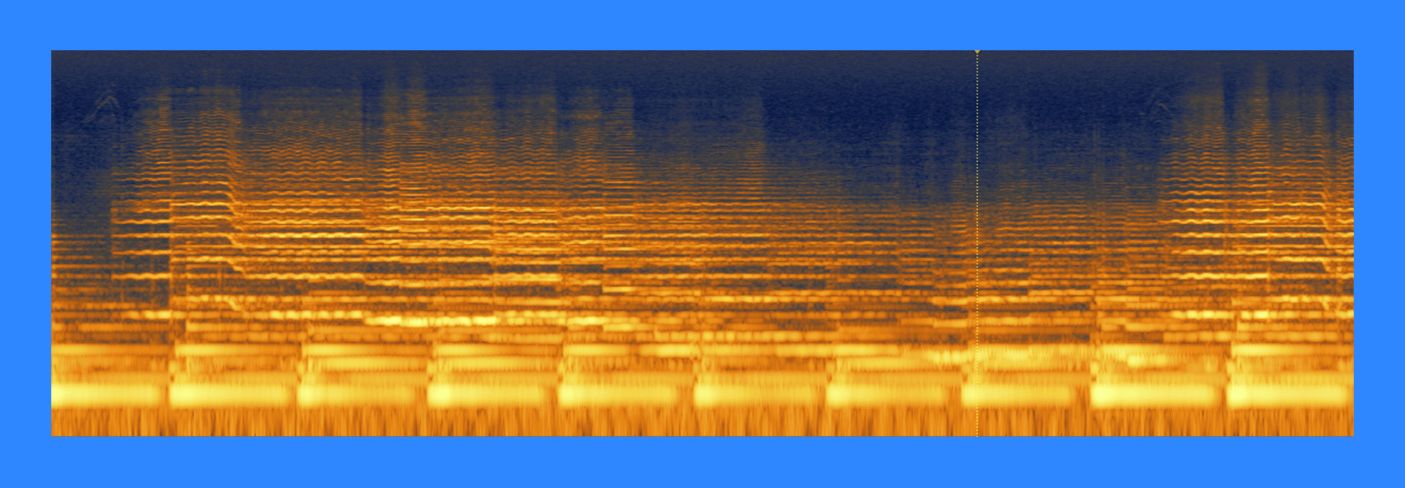

Roughly 20% of the transformer outputs were bad.

Not catastrophically bad - just subtly wrong:

- Residual human speech buried under chewing

- Over-suppressed transients

- Artificial gating artifacts

- Frequency smearing that didn’t exist in real data

The issue wasn’t the percentage.

The issue was verification.

Why Audio Is Harder to Verify Than Vision

In vision pipelines:

- A bad label is visible instantly

- You can scroll through 500 images in minutes

- Annotation errors are obvious

In audio:

- Each clip takes 5–10 seconds

- Artifacts are subtle

- Fatigue sets in quickly

- Humans disagree on what “clean” means

We could not reliably filter that 20% out.

And the model definitely learned from it.

Transformer artifacts often look plausible but distort critical frequency patterns.

Why Transfer Learning Failed (Specifically Here)

1. Teacher Errors Became Student Bias

Teacher–student learning assumes:

“Teacher errors are rare and random.”

Ours were systematic.

The model learned:

- Transformer artifacts as “normal”

- Speech leakage as acceptable background

- Over-smoothed chewing as the target texture

The student didn’t generalize — it overfit the teacher’s mistakes.

2. AudioSep Outputs Were Not Auditable at Scale

We couldn’t:

- Confidently label good vs bad outputs

- Assign trust scores

- Weight samples correctly

So every epoch reinforced noise.

In hindsight, this wasn’t a modeling failure - it was a data trust failure.

3. Distillation Multiplied the Damage

Teacher–student setups amplify bias:

- Teacher mistakes → student representations

- Student trained longer → stronger bias

- Downstream tasks inherit both

Each iteration moved us further from real dog audio.

The Pivot: Smaller, Cleaner, Verifiable Data

Instead of asking “How do we fix the model?”, we asked:

“What data can we actually trust?”

The new approach

- Manually curate a small, clean audio dataset

- Fewer samples, but 100% verified

- Real recordings, no separation artifacts

This dataset was tiny compared to our generated corpus — but it was honest.

Data Strategy Shift

| Old Strategy | New Strategy |

|---|---|

| Large synthetic dataset | Small real dataset |

| Transformer-generated targets | Human-verified audio |

| Teacher–student learning | Direct supervised learning |

| Hard to audit | Easy to trust |

This change alone stabilized training.

Augmenting with Diffusion Models (The Right Way)

The obvious concern was scale.

So instead of trusting separation transformers again, we used audio diffusion models to augment, not generate labels.

Key difference:

- Conditioned on clean dog audio

- Used for variation, not truth

- Human-verified seed data only

Augmentations included:

- Temporal stretching

- Subtle pitch variance

- Environmental noise layering

- Room impulse responses

Crucially, we could still recognize the sound.

Diffusion adds variation without inventing structure.

Fine-Tuning YAMNet (Why It Worked)

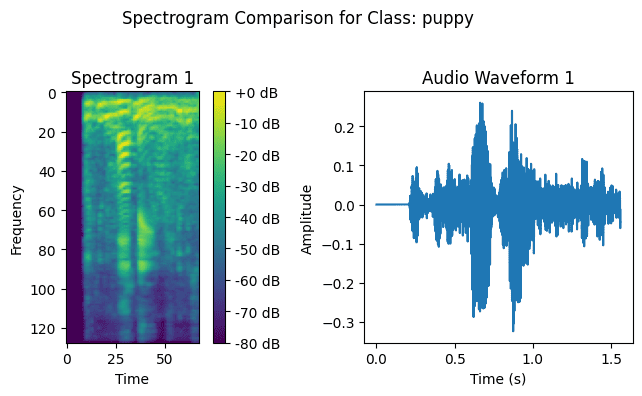

With clean + augmented data, we fine-tuned YAMNet for dog-specific sounds.

Why YAMNet?

- Lightweight

- Strong general audio priors

- Stable embeddings

- Designed for environmental audio (not speech separation)

We fine-tuned only the upper layers, keeping lower representations intact.

Results vs Teacher–Student Distillation

The results were unambiguous:

- Higher classification accuracy

- Lower false positives

- No speech hallucinations

- More stable embeddings

- Better edge performance

Most importantly, this setup outperformed the AudioSep-based teacher–student pipeline — despite using far less data.

Clean data + diffusion augmentation beat noisy distillation.

Why This Worked

1. Clean Data Beats Large Data

A small dataset you trust will always outperform a large one you don’t — especially in audio.

2. Diffusion Preserves Semantics

Diffusion models add variation, not structure. That distinction matters.

3. YAMNet Matched the Domain Better

Environmental audio ≠ speech separation. Using the right prior mattered more than model size.

How This Strengthens Hoomanely

This shift directly improved Hoomanely’s platform:

- Stronger privacy guarantees (no speech leakage learned)

- Better edge stability

- More reliable downstream anomaly detection

- Faster iteration cycles (less debugging invisible errors)

It reinforced an internal principle we now follow strictly:

Never scale audio data you cannot confidently audit.

Key Takeaways

- Transfer learning can fail due to data trust, not model choice

- Audio separation transformers are powerful — but risky as teachers

- Manual verification is harder in audio than vision

- Clean seed data + diffusion augmentation scales better

- YAMNet fine-tuning beat teacher–student distillation in our case

Final Thought

Transfer learning didn’t fail because the models were weak.

It failed because we trusted the wrong data source.

Once we treated audio like the fragile signal it is - and optimized for trust over scale - everything else fell into place.