GraphQL Waterfalls in Next.js App Router

We were building internal engineering tools at Hoomanely to visualize telemetry from our IoT devices. Trackers capture motion and position data. EverBowls measure weight and behavior signals. EverHubs aggregate sensor data at the edge before cloud upload. All of this flows through GraphQL APIs into dashboards built on Next.js App Router.

A few months after migrating from Pages Router, we noticed performance degrading. TTFB was creeping up on pages that should be fast. GraphQL server logs showed duplicate queries - sometimes the same query firing three or four times per page load. The code looked clean. Components were async, data fetching was straightforward. But something fundamental was broken.

The Problem

We were fetching data inside nested Server Components:

    async function DashboardPage() {
      return (
        <>
          <DeviceList />
          <TelemetryPanel />
          <EventLog />
          <HealthStatus />
        </>
      );
    }

    async function DeviceList() {
      const devices = await fetchDevices();
      return <List items={devices} />;
    }

    async function TelemetryPanel() {
      const telemetry = await fetchTelemetry();
      return <Panel data={telemetry} />;
    }

This pattern came from Pages Router thinking. Each component owns its data dependencies. Seemed reasonable - separation of concerns, component encapsulation, all that.

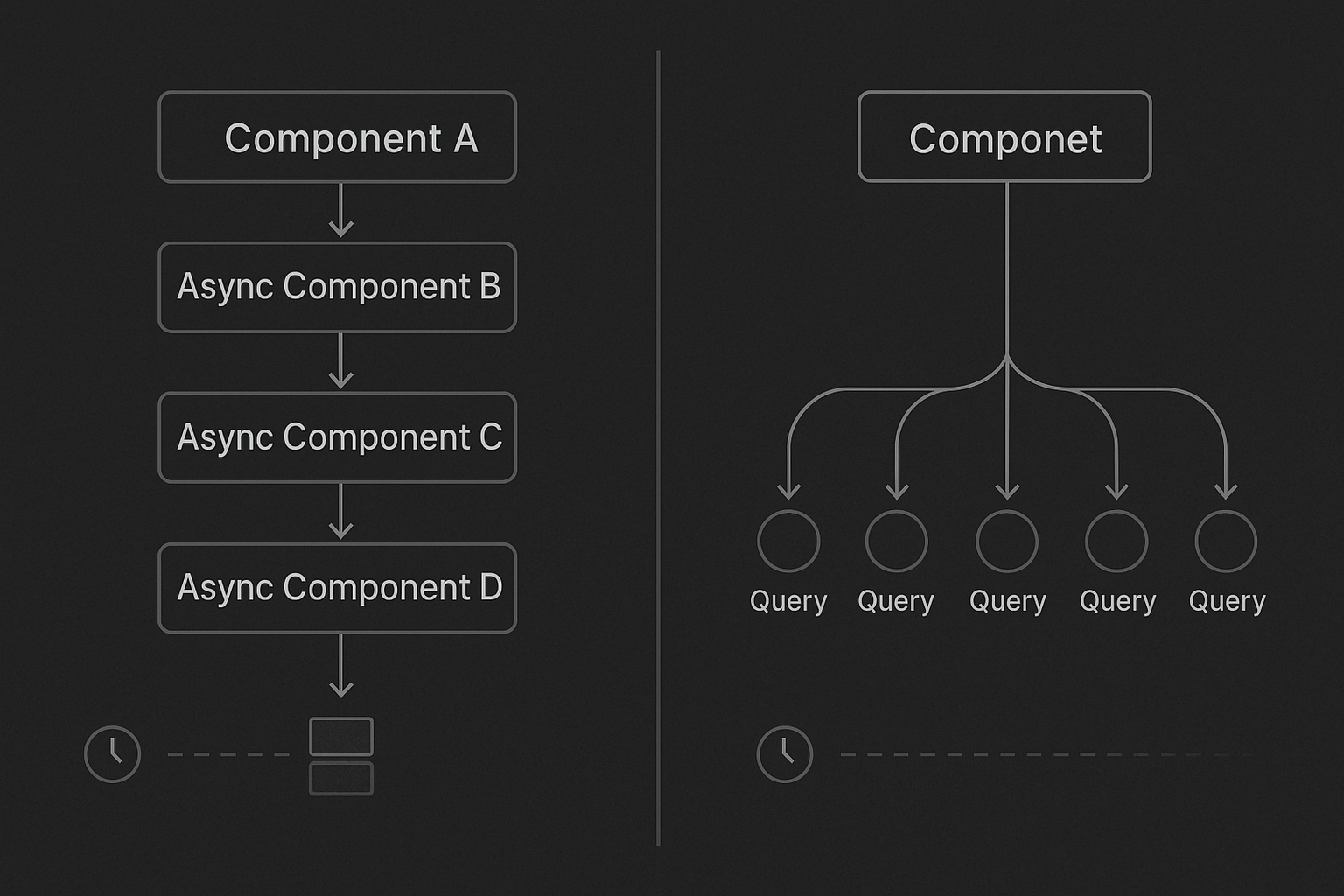

But in App Router, each async Server Component creates a network boundary. React Server Components don't automatically parallelize these calls. They execute in the order the component tree renders. Our four independent queries were running sequentially: fetch devices, wait for response, fetch telemetry, wait for response, fetch events, wait for response.

Worse, our GraphQL client was deduplicating queries only within a single component's execution context. If two components requested the same data, both queries hit the network. The client's request cache didn't span component boundaries.

The component tree structure was dictating fetch order. This wasn't a bug; it's how RSC works. Component composition became request composition.

Why This Happens

Pages Router had a clear data-fetching boundary: getServerSideProps or getStaticProps. One function, one place, explicit control over parallelism. You could write:

    export async function getServerSideProps() {
      const [devices, telemetry, events] = await Promise.all([
        fetchDevices(),
        fetchTelemetry(),
        fetchEvents(),
      ]);
      return { props: { devices, telemetry, events } };
    }

Parallelism was obvious and guaranteed.

App Router distributes fetching across the component tree. This enables powerful patterns: streaming, partial hydration, granular caching, progressive rendering. But it requires understanding that if you nest components that fetch, you're nesting network requests.

There's no implicit query planner. No automatic batching across component boundaries. No framework magic that parallelizes independent async calls in different components. The structure you write is the execution order you get.

Our dashboards were loading device metadata, then telemetry streams, then event logs, then health signals - all in sequence. Not because these queries had data dependencies, but because the components were nested and each contained an async fetch.
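The cost of that sequencing is easy to see in isolation. The sketch below is not our dashboard code: the three fetchers are stand-ins that simply resolve after an assumed 50 ms delay, and `loadSequentially` / `loadInParallel` are hypothetical names used only for illustration.

```typescript
// Simulated fetchers (assumption): each resolves after `ms` milliseconds.
const delay = <T>(value: T, ms: number): Promise<T> =>
  new Promise((resolve) => setTimeout(() => resolve(value), ms));

const fetchDevices = () => delay(["tracker-1"], 50);
const fetchTelemetry = () => delay({ samples: 3 }, 50);
const fetchEvents = () => delay(["boot"], 50);

// Waterfall: each await blocks the next call from even starting,
// so the total time is roughly the sum of the three delays.
async function loadSequentially(): Promise<number> {
  const start = Date.now();
  await fetchDevices();
  await fetchTelemetry();
  await fetchEvents();
  return Date.now() - start;
}

// Parallel: all three calls start immediately,
// so the total time tracks the slowest single call.
async function loadInParallel(): Promise<number> {
  const start = Date.now();
  await Promise.all([fetchDevices(), fetchTelemetry(), fetchEvents()]);
  return Date.now() - start;
}
```

With independent queries, the sequential version pays for every round trip in turn, while the parallel version pays only for the slowest one.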

What We Changed

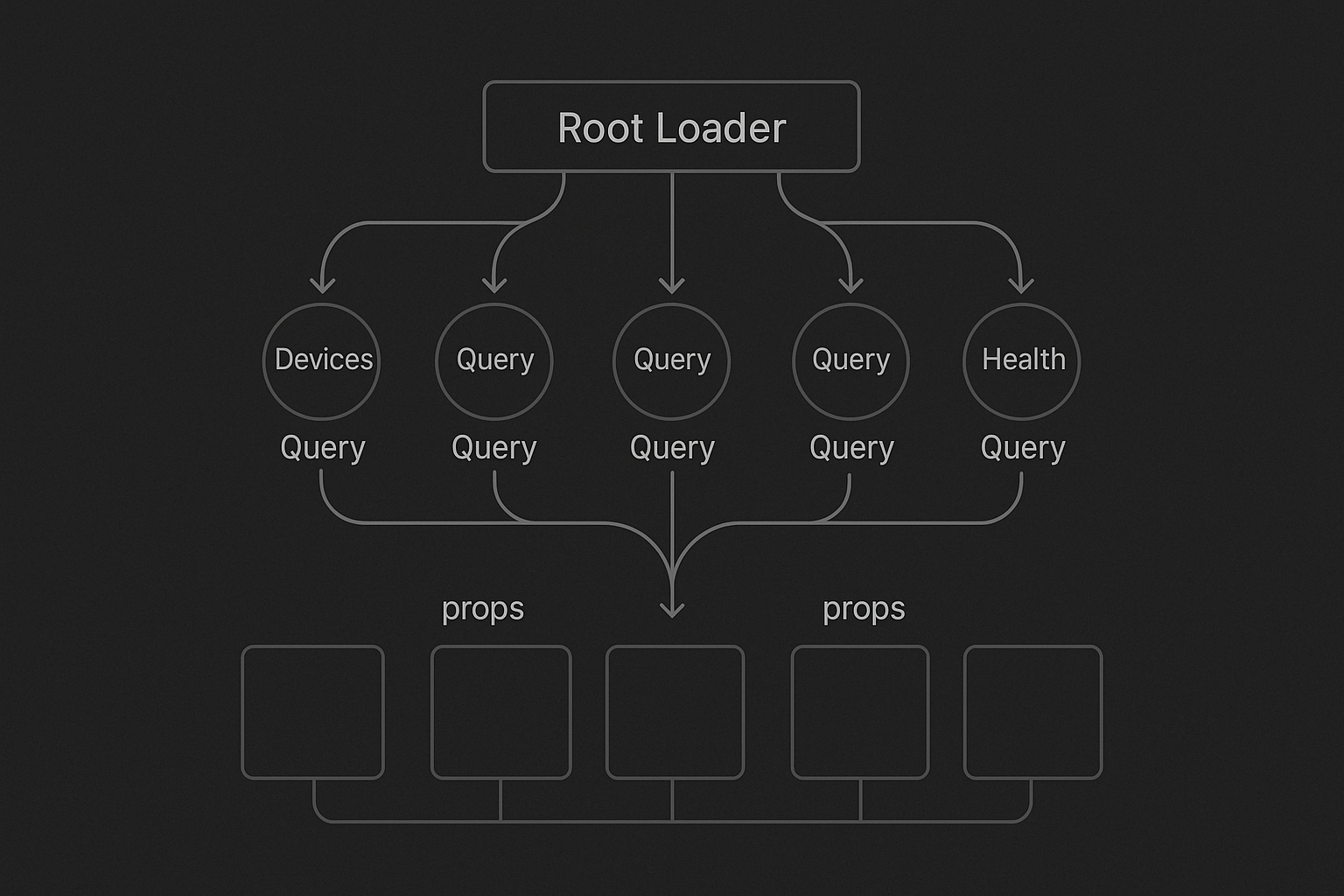

Root-Level Query Orchestration

We stopped fetching in nested components. All data fetching moved to the root:

    async function DashboardPage() {
      const [devices, telemetry, events, health] = await Promise.all([
        fetchDevices(),
        fetchTelemetry(),
        fetchEvents(),
        fetchHealthStatus(),
      ]);

      return (
        <Dashboard
          devices={devices}
          telemetry={telemetry}
          events={events}
          health={health}
        />
      );
    }

Single execution scope. Explicit parallelism with Promise.all. No hidden waterfalls. Child components became pure—they receive data as props and render.

This pattern mirrors how we structure embedded systems. Data pipelines should be explicit. Dependencies should be visible. Execution order shouldn't emerge from component hierarchy.

GraphQL Client Configuration

We reconfigured for server-side rendering with request-level deduplication:

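    import { ApolloClient, InMemoryCache } from '@apollo/client';
    import { BatchHttpLink } from '@apollo/client/link/batch-http';

    const serverClient = new ApolloClient({
      ssrMode: true,
      cache: new InMemoryCache({
        typePolicies: {
          Query: {
            fields: {
              // Field-level cache policies
            },
          },
        },
      }),
      link: new BatchHttpLink({
        uri: process.env.GRAPHQL_ENDPOINT,
        batchMax: 10,      // at most 10 operations per batched request
        batchInterval: 20, // wait up to 20 ms to collect operations
        credentials: 'include',
      }),
    });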

The BatchHttpLink batches multiple queries into a single HTTP request. If several queries are issued within the same batch window (here 20 ms, with at most 10 operations per batch), they go out together. This relies on the GraphQL server accepting batched requests. The cache deduplicates identical queries across the entire request lifecycle, not just per-component.

For pages with many small queries, this dramatically reduces network round trips. Instead of ten sequential HTTP requests, we get one or two batched requests.
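To make "request-scoped deduplication" concrete, here is a minimal sketch of the idea, independent of any GraphQL library. It is not how Apollo implements it internally; `createRequestScope` and the `Fetcher` type are hypothetical names, and the sketch assumes one scope is created per incoming request so that nothing leaks between users.

```typescript
type Fetcher<T> = (query: string, variables: Record<string, unknown>) => Promise<T>;

// Hypothetical helper: create one scope per incoming request.
function createRequestScope<T>(fetcher: Fetcher<T>) {
  // In-flight and completed promises for this request only.
  const inFlight = new Map<string, Promise<T>>();

  return function dedupedQuery(
    query: string,
    variables: Record<string, unknown> = {},
  ): Promise<T> {
    // Note: JSON.stringify is sensitive to key order, so { a, b } and { b, a }
    // produce different keys. Good enough for a sketch.
    const key = query + "::" + JSON.stringify(variables);
    let pending = inFlight.get(key);
    if (!pending) {
      pending = fetcher(query, variables);
      inFlight.set(key, pending);
    }
    return pending; // identical callers share a single network call
  };
}
```

Two components that ask for the same query with the same variables during one request receive the same promise, so only one call reaches the network.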

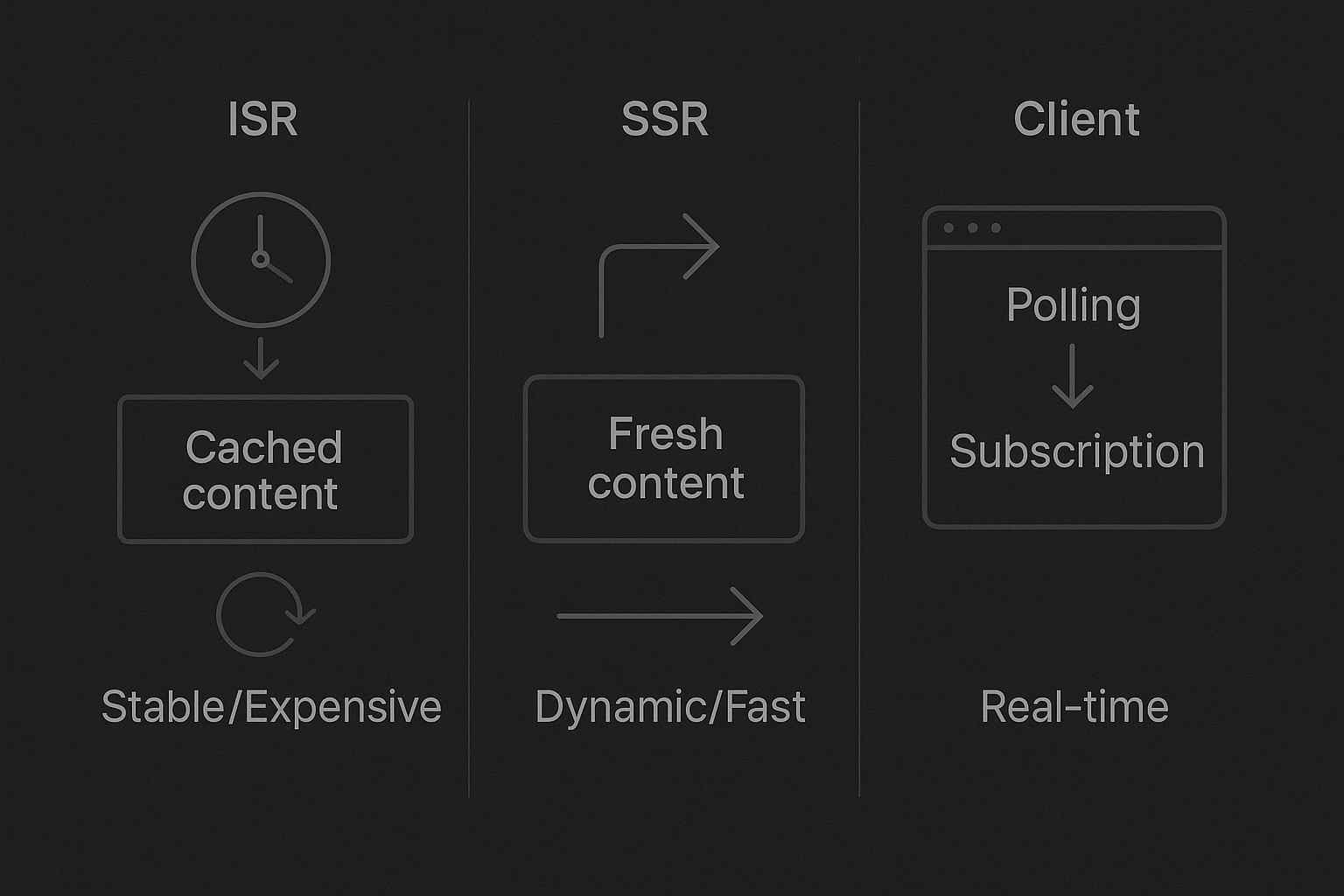

Separate Data by Volatility

We stopped treating all data the same. Different data changes at different rates. The caching strategy should match.

For stable data (device metadata, firmware versions, historical aggregates) we use ISR:

    export const revalidate = 60;

    async function FleetOverview() {
      const aggregates = await fetchFleetAggregates();
      return <StatsView data={aggregates} />;
    }

With revalidate set to 60, this page regenerates at most once every sixty seconds. Expensive aggregation queries run once, and the cached result serves many requests. For data that changes hourly or daily, this is the right pattern.

For personalized data (user-specific device lists, permissions) we use SSR:

    export const dynamic = 'force-dynamic';

    async function MyDevices({ userId }) {
      const devices = await fetchUserDevices(userId);
      return <DeviceGrid devices={devices} />;
    }

Fast queries that return user-specific data. No caching—every request gets fresh results. These queries resolve quickly (under 100ms) so the per-request cost is acceptable.

For real-time data—live sensor readings, connection status—we moved to client-side:

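    'use client';

    import { useQuery } from '@apollo/client';

    // LIVE_SENSOR_DATA is the query document, defined elsewhere.
    function LiveTelemetry({ deviceId }) {
      const { data } = useQuery(LIVE_SENSOR_DATA, {
        variables: { deviceId },
        pollInterval: 2000, // re-fetch every 2 seconds
      });
      return <TelemetryChart data={data} />;
    }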

RSC isn't designed for sub-second updates. Constantly revalidating ISR or re-rendering SSR for real-time data creates unnecessary load. Client-side GraphQL with polling or WebSocket subscriptions handles this better. The initial page load comes from RSC with stable context data. Live updates happen in the browser.

Server-Side Cache Layer

We built a cache between Next.js and our GraphQL upstream:

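    import { createHash } from 'node:crypto';

    interface CacheEntry {
      data: any;
      query: string;     // kept so invalidate() can match on query text
      expiresAt: number; // epoch milliseconds
    }

    class GraphQLCache {
      private cache = new Map<string, CacheEntry>();

      // Key on query text plus variables; hashing keeps keys short.
      private hash(query: string, variables: object): string {
        return createHash('sha256')
          .update(query + JSON.stringify(variables))
          .digest('hex');
      }

      // ttl is in seconds. upstreamClient is the GraphQL client, defined elsewhere.
      async query(query: string, variables: object, ttl: number) {
        const key = this.hash(query, variables);
        const entry = this.cache.get(key);
        if (entry && entry.expiresAt > Date.now()) {
          return entry.data;
        }

        const data = await upstreamClient.query({ query, variables });
        this.cache.set(key, {
          data,
          query,
          expiresAt: Date.now() + ttl * 1000,
        });
        return data;
      }

      // Clear everything, or only entries whose query text contains `pattern`.
      // Matching against the key itself would never hit: keys are SHA-256 digests.
      invalidate(pattern?: string) {
        if (!pattern) {
          this.cache.clear();
          return;
        }
        for (const [key, entry] of this.cache) {
          if (entry.query.includes(pattern)) {
            this.cache.delete(key);
          }
        }
      }
    }

    export const graphqlCache = new GraphQLCache();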

This cache sits above the GraphQL client. It prevents redundant upstream queries even when Next.js ISR revalidates. If a page regenerates while a cached entry is still within its TTL, we serve from the cache instead of hitting GraphQL resolvers.

We coordinate cache TTLs with GraphQL schema directives:

    type Device {
      id: ID!
      name: String!
      firmwareVersion: String! @cacheControl(maxAge: 3600)
      lastSeen: DateTime! @cacheControl(maxAge: 15)
      telemetry: TelemetrySnapshot @cacheControl(maxAge: 5)
    }

Cache behavior lives with type definitions. This keeps strategy consistent across all API consumers—not just Next.js, but mobile apps, background jobs, anything hitting GraphQL.
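One way to turn field-level hints into a single TTL for a cached response is to take the smallest maxAge among the fields a query selects, since a response is only as fresh as its most volatile field. The sketch below assumes the hints have been copied into a lookup table by hand; `maxAgeHints`, `ttlForFields`, and the `defaultMaxAge` parameter are hypothetical and not part of any library.

```typescript
// maxAge hints in seconds, mirroring the schema above (hand-maintained, hypothetical).
const maxAgeHints: Record<string, number> = {
  "Device.firmwareVersion": 3600,
  "Device.lastSeen": 15,
  "Device.telemetry": 5,
};

// Use the minimum hint across the selected fields.
// Fields without a hint fall back to `defaultMaxAge` (an assumed parameter).
function ttlForFields(fields: string[], defaultMaxAge = 0): number {
  if (fields.length === 0) return defaultMaxAge;
  return Math.min(...fields.map((field) => maxAgeHints[field] ?? defaultMaxAge));
}

// A query selecting firmwareVersion and lastSeen can be cached for 15 seconds.
const ttl = ttlForFields(["Device.firmwareVersion", "Device.lastSeen"]);
```

The resulting value can then be passed as the ttl argument when the query goes through the server-side cache.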

For distributed deployments, we swap the Map for Redis or Vercel KV. Same interface, different backing store.
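A rough sketch of what "same interface, different backing store" can look like follows. The `CacheStore` interface, `MemoryStore`, and `cached` helper are hypothetical names for illustration; a Redis or KV adapter would implement the same two methods with that client's own get and set-with-expiry calls, which are not shown here.

```typescript
// Hypothetical minimal store interface. Values are serialized strings so that
// in-memory and remote stores behave the same way.
interface CacheStore {
  get(key: string): Promise<string | null>;
  set(key: string, value: string, ttlSeconds: number): Promise<void>;
}

// In-memory implementation, suitable for a single server process.
class MemoryStore implements CacheStore {
  private entries = new Map<string, { value: string; expiresAt: number }>();

  async get(key: string): Promise<string | null> {
    const entry = this.entries.get(key);
    if (!entry || entry.expiresAt <= Date.now()) {
      this.entries.delete(key); // drop expired entries lazily
      return null;
    }
    return entry.value;
  }

  async set(key: string, value: string, ttlSeconds: number): Promise<void> {
    this.entries.set(key, { value, expiresAt: Date.now() + ttlSeconds * 1000 });
  }
}

// Read-through helper: serve from the store, otherwise load and remember.
async function cached<T>(
  store: CacheStore,
  key: string,
  ttlSeconds: number,
  load: () => Promise<T>,
): Promise<T> {
  const hit = await store.get(key);
  if (hit !== null) return JSON.parse(hit) as T;
  const fresh = await load();
  await store.set(key, JSON.stringify(fresh), ttlSeconds);
  return fresh;
}
```

Because callers only see `CacheStore`, swapping the backing store is a change at the point where the store is constructed rather than at every call site.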

How We Actually Use This

Our internal tools serve different teams with different needs.

Firmware engineers reviewing device behavior need comprehensive telemetry views. These pages use root-level orchestration with SSR. Fresh data every load, predictable performance, no stale cache surprises.

Hardware designers analyzing trends across device fleets need historical aggregates. These use ISR with longer revalidation windows. Expensive queries (joining telemetry across thousands of devices, computing percentiles) run once and serve many requests.

Operations teams monitoring live device status need instant visibility. These pages combine ISR for device metadata (which changes rarely) with client-side GraphQL for connection status and live readings (which change constantly). Stable context, dynamic data.

The pattern stays consistent: batch queries at the root, match caching to data characteristics, separate real-time updates to the client.

What Changed

TTFB stabilized. Pages that were taking 800ms+ to first byte now respond in 150-200ms. GraphQL query volume dropped—no more duplicate queries within a request. Cache hit rates improved because we're caching the right things at the right granularity.

More importantly, refactoring stopped breaking performance. Moving a component no longer accidentally serializes queries. The architecture makes parallelism explicit. If queries should run concurrently, they're in a Promise.all at the root. If not, the dependency is visible.
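When a query really does depend on another, the dependency can be written out so it is obvious which await is intentional. The sketch below uses simulated fetchers; `fetchTelemetryFor` and `loadDashboardData` are hypothetical names, and the assumption is that telemetry needs device IDs while events depend on nothing.

```typescript
type Device = { id: string };

// Simulated fetchers (assumption); real ones would call the GraphQL client.
const wait = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));

async function fetchDevices(): Promise<Device[]> {
  await wait(20);
  return [{ id: "hub-1" }, { id: "bowl-7" }];
}

async function fetchTelemetryFor(ids: string[]): Promise<Record<string, number>> {
  await wait(20);
  const readings: Record<string, number> = {};
  for (const id of ids) readings[id] = id.length; // placeholder reading
  return readings;
}

async function fetchEvents(): Promise<string[]> {
  await wait(20);
  return ["boot"];
}

async function loadDashboardData() {
  const [deviceData, events] = await Promise.all([
    // Real dependency: telemetry needs device IDs, so this chain is sequential on purpose.
    fetchDevices().then(async (devices) => ({
      devices,
      telemetry: await fetchTelemetryFor(devices.map((d) => d.id)),
    })),
    // Independent of the device list, so it runs alongside the chain above.
    fetchEvents(),
  ]);
  return { ...deviceData, events };
}
```

The only serial step left is the one the data actually requires, and it sits in one place where a reviewer can see it.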

Takeaways

Component nesting becomes request ordering. In App Router, if you nest async Server Components that fetch data, you're creating serial network calls. This isn't a framework bug—it's how RSC works. Be explicit about fetch order.

Batch at the root. Use Promise.all for independent queries. Don't rely on the framework to magically parallelize fetches across component boundaries. It won't.

Configure GraphQL properly. Use batching links, enable request-scoped deduplication, set up type policies. The client should batch queries within the same tick and deduplicate identical operations.

Match caching to data. ISR for expensive, stable data. SSR for fast, personalized queries. Client-side for real-time updates. One strategy doesn't fit all data types.

Cache above Next.js. A server-side cache between Next.js and GraphQL prevents redundant resolver hits across ISR revalidations. Coordinate TTLs with schema directives to keep behavior consistent.

App Router is fast when structured correctly. But it demands understanding how component composition affects execution order. The framework won't prevent waterfalls—you have to design around them.