Grounded Monocular Depth for Eye-Level Thermal Alignment

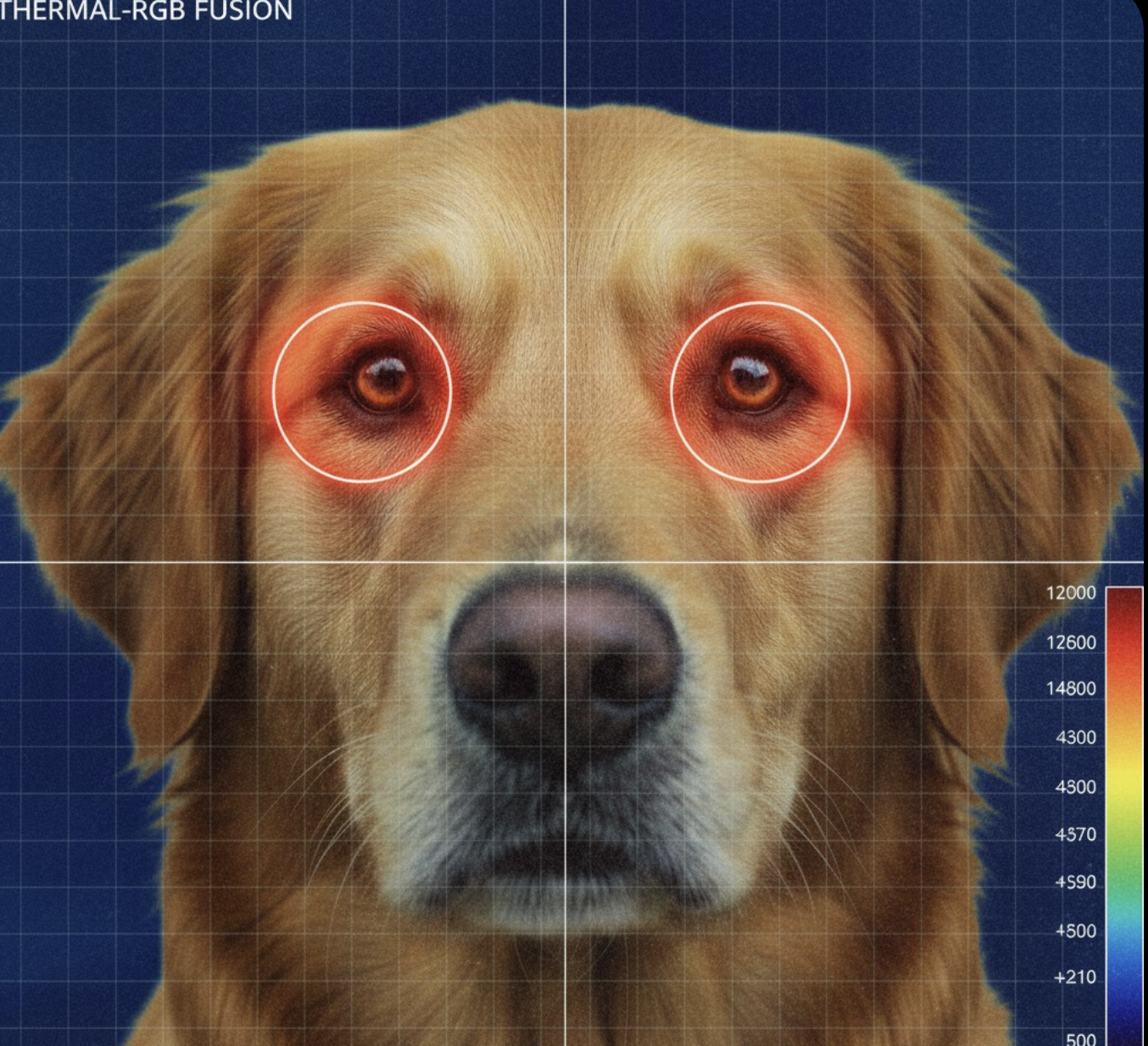

We wanted centimeter-level alignment between our RGB camera and a low-resolution thermal sensor to measure eye temperature accurately.

At a working distance of 30–50 cm, small geometric errors translate into large thermal overlay shifts. A 3–4 cm depth misestimate can move the projected thermal blob by several pixels, enough to shift it from the cornea to the snout.
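To make the sensitivity concrete, here is a rough back-of-the-envelope sketch. It assumes two roughly parallel sensors and uses the standard parallax relation (displacement = focal length × baseline / depth). The 600 px focal length and 4 cm RGB-to-thermal baseline are hypothetical values chosen for illustration, not measurements from our rig.

```python
def overlay_shift_px(focal_px: float, baseline_m: float,
                     true_depth_m: float, assumed_depth_m: float) -> float:
    """Pixel shift of a reprojected point when its depth is misestimated.

    For two roughly parallel cameras separated by `baseline_m`, a point at
    depth Z is displaced by focal_px * baseline_m / Z between the two views.
    Reprojecting with the wrong Z shifts the overlay by the difference.
    """
    return focal_px * baseline_m * (1.0 / true_depth_m - 1.0 / assumed_depth_m)


# Hypothetical rig: 600 px focal length, 4 cm baseline (assumed values).
# The eye is really at 36 cm, but the pipeline believes it is at 40 cm.
shift = overlay_shift_px(focal_px=600.0, baseline_m=0.04,
                         true_depth_m=0.36, assumed_depth_m=0.40)
print(f"overlay shift: {shift:.1f} px")
```

Under these assumed numbers, a 4 cm depth error produces a shift of several pixels, which is a large fraction of the eye region on a low-resolution thermal map.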

Monocular depth alone was not stable.

A single-channel IR proximity sensor alone was not spatially aware.

So we fused them.

The Geometry Problem

Our stack:

- RGB camera (segmentation + eye landmarks)

- Thermal sensor (low-resolution IR map)

- Single-channel IR proximity sensor (absolute distance scalar)

- Working distance: 30–50 cm

Monocular depth models (state-of-the-art, open-source, transformer-based architectures) give dense depth maps, but only up to an unknown scale.

The proximity sensor gives true metric distance — but only along one axis.

Individually:

- Monocular → spatially dense, metrically ambiguous

- Proximity → metrically accurate, spatially blind

The overlay required both.

Why Monocular Depth Was Not Enough

Modern monocular depth models produce consistent relative structure. However, they suffer from scale ambiguity:

D_metric = alpha * D_mono

Here alpha is an unknown scale factor that varies from frame to frame.

In practice this caused:

- 5–10 cm absolute drift

- Overlay instability across head tilt

- Snout dominance (closer geometry biasing projection)

For a deformable object like a dog face, pose variation made it worse.

Why Proximity Alone Failed

The IR proximity sensor provided accurate scalar distance.

But it could not:

- Distinguish eyes from snout

- Handle head tilt

- Resolve spatial depth gradients

If the snout moved forward, the proximity reading shortened. Overlay shifted incorrectly toward the nose.

We needed spatial structure grounded in metric reality.

The Hybrid Depth Grounding Strategy

Step 1 — Generate Dense Relative Depth

RGB frame → monocular depth model → dense depth map D_mono(x,y)

This provides geometry but not scale.

Step 2 — Extract Sensor-Aligned ROI

We compute the average relative depth over the region corresponding to the proximity sensor's effective field of view:

D_mono_ROI = mean of D_mono(x,y) over the ROI

Step 3 — Compute Scale Factor

alpha = D_prox / D_mono_ROI

Where:

- D_prox = absolute proximity reading (metric distance)

- D_mono_ROI = average relative depth over the sensor-aligned ROI from Step 2

Step 4 — Rescale Entire Depth Map

D_metric(x,y) = alpha * D_mono(x,y)

The depth field is now metrically grounded.

Because alpha is recomputed for every frame, the per-frame scale drift is absorbed by the rescaling.
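Steps 2 through 4 can be sketched in a few lines of NumPy. This is a minimal illustration, not our production code: the function name `ground_depth`, the rectangular `roi` window, and the validity filter are assumptions, and it takes the model output to be proportional to depth, as in D_metric = alpha * D_mono. Models that output inverse depth or disparity would need converting first.

```python
import numpy as np


def ground_depth(d_mono: np.ndarray, roi: tuple, d_prox_m: float) -> np.ndarray:
    """Rescale a relative depth map so its ROI matches the proximity reading.

    d_mono   : (H, W) relative depth from the monocular model (larger = farther).
    roi      : (y0, y1, x0, x1) pixel window assumed to cover the sensor's FOV.
    d_prox_m : absolute distance from the proximity sensor, in metres.
    """
    y0, y1, x0, x1 = roi
    patch = d_mono[y0:y1, x0:x1]
    valid = patch[np.isfinite(patch) & (patch > 0)]  # drop NaN/inf/non-positive
    if valid.size == 0:
        raise ValueError("ROI contains no valid depth values")
    d_mono_roi = float(valid.mean())   # Step 2: average depth over the ROI
    alpha = d_prox_m / d_mono_roi      # Step 3: per-frame scale factor
    return alpha * d_mono              # Step 4: rescale the whole map


# Synthetic example: a flat relative map of 2.0 and a 0.40 m proximity reading.
d_mono = np.full((4, 6), 2.0)
d_metric = ground_depth(d_mono, roi=(1, 3, 2, 4), d_prox_m=0.40)
```

The ROI mean is sensitive to outliers, so a median or a trimmed mean over the same window may be a safer choice when the ROI straddles a depth edge.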

Eye-Level Refinement

After segmentation:

- Extract eye mask

- Compute metric depth distribution inside mask

- Fit local eye-plane approximation

This keeps snout geometry out of the depth estimate used for projection, which removes the snout bias.

Overlay projection then uses eye-plane depth instead of global center depth.
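One way to implement the local eye-plane approximation is an ordinary least-squares plane fit over the masked pixels. The sketch below is an assumption about how such a fit could look, not a description of our exact implementation: the function name `eye_plane_depth` is hypothetical, and the plane is parameterised in pixel coordinates as z = a*x + b*y + c.

```python
import numpy as np


def eye_plane_depth(d_metric: np.ndarray, eye_mask: np.ndarray) -> float:
    """Fit z = a*x + b*y + c to metric depth inside the eye mask and
    return the fitted plane's depth at the mask centroid."""
    ys, xs = np.nonzero(eye_mask)          # pixel coordinates inside the mask
    if xs.size < 3:
        raise ValueError("need at least 3 eye pixels to fit a plane")
    z = d_metric[ys, xs]                   # metric depth samples
    A = np.column_stack([xs, ys, np.ones_like(xs)]).astype(float)
    (a, b, c), *_ = np.linalg.lstsq(A, z, rcond=None)
    return float(a * xs.mean() + b * ys.mean() + c)


# Synthetic example: depth increases by 1 mm per pixel along x.
d_metric = 0.40 + 0.001 * np.arange(8)[None, :] * np.ones((6, 1))
eye_mask = np.zeros((6, 8), dtype=bool)
eye_mask[2:5, 3:6] = True
eye_depth = eye_plane_depth(d_metric, eye_mask)
```

A plain least-squares fit is not robust to stray pixels (fur, eyelid, mask leakage), so in practice a robust variant or a simple median over the mask may be worth comparing.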

Depth-Aware Thermal Projection

With metric depth available:

- Apply calibrated intrinsics for RGB and thermal sensors

- Apply extrinsic transform (R, T)

- Perform depth-aware reprojection

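p_rgb = K_rgb * (R * P_thermal + T)

Where P_thermal is the 3D point obtained by back-projecting a thermal pixel to the grounded metric depth, and p_rgb is in homogeneous coordinates (divide by its last component to get pixel coordinates).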

This ensures thermal rays intersect the correct 3D eye location.
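The reprojection can be sketched as follows for a single thermal pixel. All calibration values below are hypothetical placeholders. The sketch also assumes pinhole intrinsics with a last row of [0, 0, 1], and treats the eye-plane depth as the z-coordinate in the thermal frame, which is only approximately true when the two sensors sit close together and are nearly parallel.

```python
import numpy as np


def thermal_to_rgb(uv_thermal, depth_m, K_thermal, K_rgb, R, T):
    """Map one thermal pixel to RGB pixel coordinates at a given metric depth."""
    u, v = uv_thermal
    ray = np.linalg.inv(K_thermal) @ np.array([u, v, 1.0])  # ray with z = 1
    P_thermal = depth_m * ray          # 3D point in the thermal camera frame
    p = K_rgb @ (R @ P_thermal + T)    # homogeneous RGB pixel
    return p[:2] / p[2]                # perspective divide -> (u, v) in RGB


# Hypothetical calibration (illustrative values only).
K_thermal = np.array([[100.0, 0.0, 16.0],
                      [0.0, 100.0, 12.0],
                      [0.0, 0.0, 1.0]])
K_rgb = np.array([[600.0, 0.0, 320.0],
                  [0.0, 600.0, 240.0],
                  [0.0, 0.0, 1.0]])
R = np.eye(3)                          # sensors assumed parallel
T = np.array([0.04, 0.0, 0.0])         # 4 cm horizontal offset, in metres

# Thermal principal-point pixel, eye plane at 0.40 m.
uv_rgb = thermal_to_rgb((16, 12), 0.40, K_thermal, K_rgb, R, T)
```

Running the same pixel through with a different `depth_m` shows how much the overlay moves when the depth estimate is off, which is the reason the eye-plane depth is used here rather than a global one.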

Quantitative Impact

Before grounding:

- Overlay drift: 6–10 pixels

- Snout-dominant projection errors

- High instability during motion

After grounding:

- Overlay drift: 1–2 pixels

- Stable across 30–50 cm

- Head tilt robustness improved significantly

Metric alignment error reduced by ~60–75%.

Why This Works in Controlled Edge Systems

This approach benefits from:

- Fixed camera installation

- Constrained working distance

- Known bowl geometry

- Repeated dog interaction patterns

In uncontrolled mobile scenarios, results would degrade.

But in a fixed smart-bowl system, hybrid depth grounding is extremely effective.

Key Insight

Monocular depth gives spatial structure.

Proximity sensing gives metric truth.

The fusion removes scale ambiguity while preserving dense geometry.

Instead of replacing hardware with ML, we anchored ML with hardware.

That shift enabled precise, stable eye-level thermal extraction at close range.

And that was the difference between a demo and a production system.