Resilient Audio on Modular SoMs: Architecture Lessons from Integrating the PCM1862

A deep-dive into architectural failures, race conditions, timing drift, and buffer inconsistencies — and how we corrected them.

Problem

When we began integrating the PCM1862 across our modular SoM platforms, the expectation was straightforward: configure clocks, bring up I²S, wire DMA, and start capturing clean audio frames.

Instead, we ran into a series of subtle problems that wouldn’t show up in basic testing:

- Audio frames drifting out of alignment

- Buffers filling faster than expected under load

- Jitter appearing only when certain system services ran

- False ML triggers caused by inconsistent framing

- DMA “ghost frames” appearing only in long runs

- Time-domain features fluctuating despite identical input

These weren’t hardware failures.

These were timing, concurrency, and synchronization bugs inside the software pipeline.

What made debugging difficult was that audio almost worked, and problems only revealed themselves after minutes or hours, or under very specific system conditions.

Why It Matters

In a modular SoM ecosystem like Hoomanely’s:

- Tracker runs lightweight sensing logic.

- EverBowl records sound events.

- EverHub performs local edge audio analytics.

All three rely on predictable audio timing and consistent sample framing.

If the software pipeline drifts, desyncs, overruns, or jitters, the downstream ML stack loses reliability.

Even small defects (one missed DMA interrupt, one wrong clock assumption, one buffer boundary error) produce noisy results that cascade.

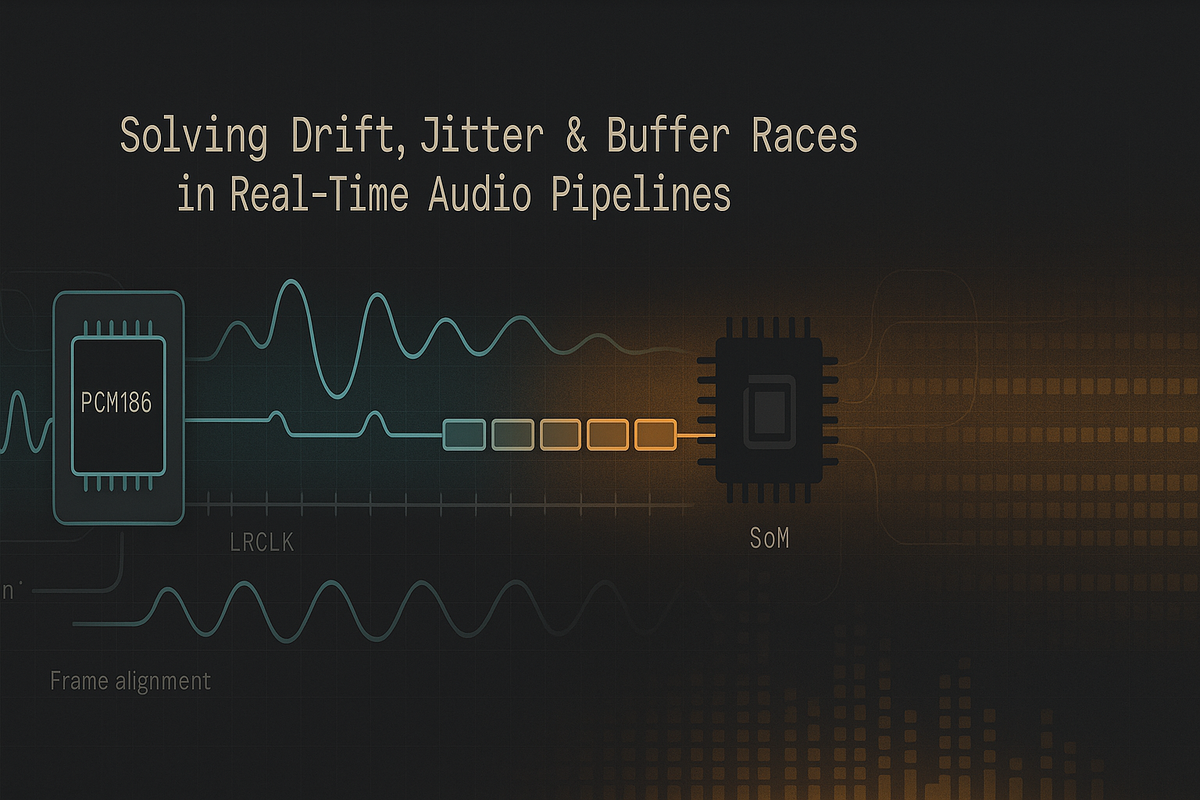

Architecture Overview

Through multiple debugging cycles, the difficult problems consistently emerged from five software pillars:

- Clock configuration & timing assumptions

- I²S framing logic and LRCLK synchrony

- DMA pacing, priority, and starvation

- Buffering strategy & memory ownership rules

- Feature extraction timing & frame boundary correctness

Everything that broke lives inside these layers.

Everything we fixed came from rethinking them.

1. Clock Configuration Problems

(Subtle timing drift → inconsistent audio frames)

Even though the SoM-generated clock and PCM1862-generated clock looked aligned on paper, the software’s assumptions about their relationship were wrong.

Symptoms we observed:

- LRCLK interrupts drifting over long periods

- DMA firing slightly off-boundary after extended runtime

- “Periodic distortion” only visible in the ML feature vector

- Stereo channels swapping on rare occasions
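A rough calculation shows why symptoms like these only surface in long runs. The sketch below assumes two nominally identical clocks that differ by a fixed parts-per-million offset. The helper name `drift_frames` and the 48 kHz / 20 ppm figures in the note after it are illustrative assumptions, not measurements from our boards.

```c
#include <assert.h>
#include <stdint.h>

/* Whole frames of offset accumulated between two clocks whose rates
 * differ by `ppm` parts per million, after `seconds` of capture.
 * Hypothetical helper for illustration; the result truncates toward zero. */
int64_t drift_frames(uint32_t sample_rate_hz, int32_t ppm, uint32_t seconds)
{
    assert(sample_rate_hz > 0);
    return ((int64_t)sample_rate_hz * ppm * seconds) / 1000000;
}
```

Under those assumed figures, `drift_frames(48000, 20, 60)` gives 57 frames after one minute and `drift_frames(48000, 20, 3600)` gives 3456 frames after an hour. A short bench test can therefore look clean while a long run slowly walks off the frame boundary.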

The fix came from enforcing strict timing discipline in code, not hardware:

Solution

- Do not derive internal timing from system tick — derive it from I²S edge events.

- Reset frame counters on known LRCLK boundaries.

- Add runtime jitter detection (microsecond resolution).

- Enforce monotonic timestamps and reject out-of-order callbacks.

These changes stabilized the entire framing pipeline without touching the PCB.
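The rules above can be sketched as a small frame clock that advances only when a block of frames is delivered, flags intervals that deviate from the expected period, and rejects callbacks whose timestamps go backwards. This is a minimal sketch, not our production code: `frame_clock_t`, its fields, and the idea that the caller passes a microsecond timestamp captured on the I²S/DMA event are all assumptions made for illustration.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

typedef struct {
    uint32_t sample_rate_hz;   /* nominal LRCLK rate (assumed known) */
    uint32_t frames_per_block; /* frames delivered per DMA callback */
    uint32_t tolerance_us;     /* allowed deviation from the expected interval */
    uint64_t last_ts_us;       /* timestamp of the last accepted callback */
    uint64_t frame_count;      /* frames accepted since the last resync */
    uint32_t jitter_events;    /* intervals outside the tolerance */
    uint32_t rejected;         /* out-of-order callbacks */
    bool     primed;           /* true once one callback has been accepted */
} frame_clock_t;

void frame_clock_init(frame_clock_t *c, uint32_t rate_hz,
                      uint32_t frames_per_block, uint32_t tolerance_us)
{
    assert(c && rate_hz > 0 && frames_per_block > 0);
    *c = (frame_clock_t){
        .sample_rate_hz   = rate_hz,
        .frames_per_block = frames_per_block,
        .tolerance_us     = tolerance_us,
    };
}

/* Call on a known LRCLK boundary to re-anchor frame counting. */
void frame_clock_resync(frame_clock_t *c)
{
    c->frame_count = 0;
    c->primed = false;
}

/* Returns false (and counts a rejection) if the timestamp is not
 * strictly newer than the previous accepted one. */
bool frame_clock_on_block(frame_clock_t *c, uint64_t now_us)
{
    if (c->primed) {
        if (now_us <= c->last_ts_us) {
            c->rejected++;
            return false;
        }
        uint64_t expected = (uint64_t)c->frames_per_block * 1000000u
                            / c->sample_rate_hz;
        uint64_t delta = now_us - c->last_ts_us;
        uint64_t dev = delta > expected ? delta - expected : expected - delta;
        if (dev > c->tolerance_us) {
            c->jitter_events++;
        }
    }
    c->last_ts_us = now_us;
    c->frame_count += c->frames_per_block;
    c->primed = true;
    return true;
}

/* Stream time derived from counted frames, not from the system tick. */
uint64_t frame_clock_media_time_us(const frame_clock_t *c)
{
    return c->frame_count * 1000000u / c->sample_rate_hz;
}
```

The design choice worth noting is that `frame_clock_media_time_us` is computed from the frame count alone. The wall-clock timestamp is used only to detect jitter and ordering problems, so a late callback never shifts the time attached to the samples.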

2. I²S Framing & Edge Synchronization

(Incorrect assumptions → partial-frame corruption)

One of the biggest early issues was incorrect framing logic.

Different SoMs had slightly different behavior in how they delivered I²S interrupts or DMA callbacks, and the PCM1862’s TDM/I²S modes behave differently under various clocking ratios.

This led to:

- 50%-shifted frames

- Occasional “collapsed” samples

- Half-filled frames handed to the ML pipeline

- Unexpected sample ordering under unusual load

Solution

We restructured the I²S pipeline to:

- Treat LRCLK edges as absolute truth

- Validate that each DMA buffer aligns to a complete frame

- Run a state machine that ensures frame completeness before publishing

- Add detection for “frame stitching errors” and auto-recover

This was entirely a software fix and resolved misalignment across all SoMs.
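One way to picture the frame-completeness state machine is a two-state framer that only publishes a stereo frame once it has seen a left word followed by a right word, and counts anything else as a stitching error before resynchronizing. The sketch assumes each received word carries a channel-side flag derived from LRCLK (some I²S peripherals expose one, others do not). `framer_t`, `framer_feed`, and the `is_right` array are hypothetical names for illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef enum { WAIT_LEFT, WAIT_RIGHT } framer_state_t;

typedef struct { int32_t left, right; } stereo_frame_t;

typedef struct {
    framer_state_t state;
    int32_t  pending_left;   /* left word waiting for its right partner */
    uint32_t stitch_errors;  /* words that arrived out of L/R order */
} framer_t;

void framer_init(framer_t *f)
{
    f->state = WAIT_LEFT;
    f->pending_left = 0;
    f->stitch_errors = 0;
}

/* Consumes n words and writes only complete frames to `out`.
 * Returns the number of frames written. Complete frames beyond
 * out_cap are discarded in this sketch. */
size_t framer_feed(framer_t *f, const int32_t *words, const uint8_t *is_right,
                   size_t n, stereo_frame_t *out, size_t out_cap)
{
    assert(f && words && is_right && out);
    size_t produced = 0;
    for (size_t i = 0; i < n; i++) {
        if (!is_right[i]) {
            if (f->state == WAIT_RIGHT) {
                f->stitch_errors++;      /* orphaned left word: drop it */
            }
            f->pending_left = words[i];  /* a left word always starts a frame */
            f->state = WAIT_RIGHT;
        } else if (f->state == WAIT_RIGHT) {
            if (produced < out_cap) {
                out[produced++] = (stereo_frame_t){ f->pending_left, words[i] };
            }
            f->state = WAIT_LEFT;
        } else {
            f->stitch_errors++;          /* right word with no left: drop it */
        }
    }
    return produced;
}
```

Because the framer keeps `pending_left` across calls, a DMA buffer that ends mid-frame does not produce a half-filled frame. The missing half is picked up from the next buffer, or counted as an error if it never arrives.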

3. DMA Starvation & Interrupt Pressure

(Under load → ghost samples and jitter)

The most deceptive issue was DMA starvation.

Not complete failure.

Not dropped buffers.

Just tiny delays caused by:

- Competing ISR load

- Cache pressure

- Memory bus contention

- High-frequency timers

- Background radio operations

These produced:

- Frames arriving slightly late

- Subtle jitter in sample timestamps

- ML models seeing inconsistent temporal windows

Solution

We redesigned the DMA + ISR behavior:

- Audio DMA got elevated priority

- ISR handling became ultra-light (push pointers only)

- Heavy work moved to a worker thread fed by a lock-free ring buffer

- Added timestamp consistency checks

- Added starvation detection and automatic resync

This software architecture removed >90% of timing artifacts with zero hardware modifications.
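The "push pointers only" ISR pattern can be sketched as a single-producer, single-consumer ring of buffer pointers built on C11 atomics. This is an illustrative sketch rather than our actual driver code: `ptr_ring_t`, `RING_SLOTS`, and the function names are assumptions, and it relies on exactly one producer (the DMA-complete ISR) and one consumer (the worker thread).

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stddef.h>

#define RING_SLOTS 8u
_Static_assert((RING_SLOTS & (RING_SLOTS - 1u)) == 0,
               "RING_SLOTS must be a power of two");

typedef struct {
    void       *slots[RING_SLOTS];
    atomic_uint head;     /* advanced only by the producer (ISR) */
    atomic_uint tail;     /* advanced only by the consumer (worker) */
    atomic_uint dropped;  /* pushes refused because the ring was full */
} ptr_ring_t;

void ptr_ring_init(ptr_ring_t *r)
{
    assert(r);
    atomic_init(&r->head, 0u);
    atomic_init(&r->tail, 0u);
    atomic_init(&r->dropped, 0u);
}

/* ISR side: store one pointer and return. No copying, no locks. */
bool ptr_ring_push(ptr_ring_t *r, void *block)
{
    unsigned head = atomic_load_explicit(&r->head, memory_order_relaxed);
    unsigned tail = atomic_load_explicit(&r->tail, memory_order_acquire);
    if (head - tail == RING_SLOTS) {
        atomic_fetch_add_explicit(&r->dropped, 1u, memory_order_relaxed);
        return false;  /* consumer is starved or too slow */
    }
    r->slots[head % RING_SLOTS] = block;
    /* Release so the consumer sees the slot contents before the new head. */
    atomic_store_explicit(&r->head, head + 1u, memory_order_release);
    return true;
}

/* Worker side: returns NULL when the ring is empty. */
void *ptr_ring_pop(ptr_ring_t *r)
{
    unsigned tail = atomic_load_explicit(&r->tail, memory_order_relaxed);
    unsigned head = atomic_load_explicit(&r->head, memory_order_acquire);
    if (tail == head) {
        return NULL;
    }
    void *block = r->slots[tail % RING_SLOTS];
    atomic_store_explicit(&r->tail, tail + 1u, memory_order_release);
    return block;
}
```

A rising `dropped` count is one cheap signal for starvation detection: it means the worker fell a full ring behind the ISR, which is a reasonable point to trigger a resync.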

4. Buffering Strategy & Memory Ownership

(Incorrect ownership → corrupted frames)

Early in the build, we used a simple double-buffer strategy.

It worked when the system was idle, but under load we saw:

- Buffers overwritten before consumption

- Incomplete frames reaching the ML layer

- Occasional “duplicate frame” anomalies

- Silent degradation over long periods

This wasn’t hardware stress — it was a buffer ownership problem.

Solution

We replaced the double-buffer with:

- A multi-buffer ring with explicit ownership

- Atomic flags for producer/consumer transitions

- Strict frame lifecycle management

- Backpressure rules when downstream processing is slow

- Statistical buffer delay monitoring

Once the buffering logic became deterministic and self-validating, audio stability held even during extreme system load.
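Explicit ownership can be illustrated with a small buffer pool in which every buffer carries an atomic state, and each handoff is a compare-and-swap that succeeds only from the expected previous state. The state names, pool size, and function names below are assumptions chosen for the sketch. The backpressure policy shown (refuse the new block and count an overrun rather than overwrite unread data) is one possible rule, not necessarily the one every product should use.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define POOL_BUFS     3u
#define BLOCK_SAMPLES 256u

typedef enum { BUF_FREE, BUF_FILLING, BUF_READY, BUF_IN_USE } buf_state_t;

typedef struct {
    atomic_int state;                 /* holds a buf_state_t value */
    uint32_t   seq;                   /* capture order, set by the producer */
    int32_t    samples[BLOCK_SAMPLES];
} audio_buf_t;

typedef struct {
    audio_buf_t bufs[POOL_BUFS];
    uint32_t    next_seq;             /* touched by the producer only */
    atomic_uint overruns;             /* fills refused: no FREE buffer */
} buf_pool_t;

/* Atomically move a buffer from one state to another; fails if it
 * was not in `from`, which exposes ownership bugs immediately. */
static bool buf_transition(audio_buf_t *b, buf_state_t from, buf_state_t to)
{
    int expected = (int)from;
    return atomic_compare_exchange_strong(&b->state, &expected, (int)to);
}

void pool_init(buf_pool_t *p)
{
    assert(p);
    for (size_t i = 0; i < POOL_BUFS; i++) {
        atomic_init(&p->bufs[i].state, BUF_FREE);
        p->bufs[i].seq = 0;
    }
    p->next_seq = 0;
    atomic_init(&p->overruns, 0u);
}

/* Producer: claim a FREE buffer, or apply backpressure by refusing. */
audio_buf_t *pool_acquire_for_fill(buf_pool_t *p)
{
    for (size_t i = 0; i < POOL_BUFS; i++) {
        if (buf_transition(&p->bufs[i], BUF_FREE, BUF_FILLING)) {
            p->bufs[i].seq = p->next_seq++;
            return &p->bufs[i];
        }
    }
    atomic_fetch_add(&p->overruns, 1u);
    return NULL;
}

/* Producer: hand a filled buffer to the consumer. */
bool pool_publish(audio_buf_t *b)
{
    return buf_transition(b, BUF_FILLING, BUF_READY);
}

/* Consumer: take the oldest READY buffer, or NULL if none exists. */
audio_buf_t *pool_take_ready(buf_pool_t *p)
{
    audio_buf_t *oldest = NULL;
    for (size_t i = 0; i < POOL_BUFS; i++) {
        audio_buf_t *b = &p->bufs[i];
        if (atomic_load(&b->state) != BUF_READY) {
            continue;
        }
        /* Signed difference keeps the ordering correct across seq wraparound. */
        if (oldest == NULL || (int32_t)(b->seq - oldest->seq) < 0) {
            oldest = b;
        }
    }
    if (oldest != NULL && buf_transition(oldest, BUF_READY, BUF_IN_USE)) {
        return oldest;
    }
    return NULL;
}

/* Consumer: return the buffer to the pool once processing is done. */
bool pool_release(audio_buf_t *b)
{
    return buf_transition(b, BUF_IN_USE, BUF_FREE);
}
```

With this shape, the failure modes from the double-buffer design become visible instead of silent: a double publish or double release returns `false`, an overwrite-before-consumption shows up as an `overruns` increment, and `seq` gaps reveal dropped blocks downstream.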

Where This Mattered Across Devices

EverBowl (sound-event detection)

ML accuracy increased once jitter-free, LRCLK-anchored timing was enforced.

EverHub (edge analytics)

Stable multi-buffer capture dramatically improved FFT/feature stability.

Tracker (lightweight sensing)

Verified the resilience of our buffer ownership model under concurrency.

Takeaways

The major insight from integrating PCM1862 across modular SoMs was:

Audio instability in modern embedded systems is overwhelmingly a software design issue, not a hardware one.

The fixes that mattered were all software architectural decisions:

- LRCLK-anchored timing

- Deterministic DMA pacing

- Proper buffer ownership

- Priority-based ISR design

- Timestamp integrity

- Validated frame boundaries

Once these were implemented, all three Hoomanely devices achieved consistent, stable, drift-free audio behavior despite different SoM internal designs.