Watchdogs and Livelock: Real Failure Patterns and Fixes

Introduction

Many embedded systems behave flawlessly during development. They pass unit tests, survive stress testing, and operate reliably in controlled environments. However, once deployed in the field, they occasionally stop responding without any diagnostic trace. No crash logs, no core dumps, no visible kernel panic. The device simply appears frozen.

In real-world deployments, especially in IoT products, medical wearables, automotive controllers, robotics, and industrial gateways, most field failures we encounter are not caused by system crashes. Instead, they result from livelock conditions: the CPU remains active, interrupts still occur, and tasks continue to run, yet the system stops making forward progress. Traditional watchdogs fail to detect these conditions, which leads to silent failures that often go unnoticed until users report operational issues.

This article explores real failure patterns involving watchdogs and livelocks, explains why they are often missed, and presents modern recovery strategies that significantly increase reliability in production environments.

The Problem: When Watchdogs Provide a False Sense of Security

Watchdog timers are often treated as a checkbox reliability solution:

- Hardware watchdog enabled: yes

- Kick performed in main loop: yes

- Deployment: approved

This mindset creates a dangerous assumption.

A watchdog only detects that the code responsible for servicing it has stopped running. Most field failures occur when code continues executing but is trapped in non-productive loops. This is the defining characteristic of a livelock.

Example

```c
while (network_retrying()) {
    retry_send();
    kick_watchdog();
}
```

From the system perspective:

- CPU is running

- interrupts are active

- watchdog is being serviced

From the user's perspective:

- the device is unresponsive

The watchdog reports a healthy system. The user observes a failure.

Why Livelock Matters

Livelocks lead to severe functional failures such as:

- missed scheduled operations

- frozen interfaces

- loss of communication

- stalled sensor acquisition

- repeated retries that drain the battery

- blocked control loops

In consumer or safety-critical products, these issues translate into:

- regulatory non-compliance

- customer dissatisfaction

- increased warranty claims

- product returns

- long-term brand impact

At production scale, these silent failures become costly and damaging.

How Livelocks Occur in Real Systems

Based on extensive experience in embedded system design, the most common causes include:

1. Infinite Retry Logic

```c
while (!connected()) {
    connect();
    kick_watchdog();
}
```

If a modem or radio module becomes unresponsive, the loop never exits, and because it keeps kicking the watchdog, no reset ever occurs.
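One way to bound this loop is to make the retry policy explicit. The sketch below assumes a connection task that calls a hypothetical `retry_on_failure()` after each failed `connect()` and sleeps for the returned delay; the limits (5 attempts, 500 ms base delay doubling up to an 8 s cap) are illustrative assumptions, not tuned values. The policy never kicks the watchdog; it returns `RETRY_GIVE_UP` so the caller can escalate.

```c
#include <stdbool.h>
#include <stdint.h>

/* Illustrative limits (assumptions): 5 attempts, 500 ms doubling, 8 s cap. */
#define RETRY_MAX_ATTEMPTS  5u
#define RETRY_BASE_MS     500u
#define RETRY_CAP_MS     8000u

typedef struct {
    uint32_t failures;   /* consecutive failed attempts */
} retry_state_t;

typedef enum { RETRY_AFTER_DELAY, RETRY_GIVE_UP } retry_action_t;

/* Exponential backoff: 500, 1000, 2000, ... capped at RETRY_CAP_MS. */
uint32_t backoff_delay_ms(uint32_t attempt) {
    uint32_t delay = RETRY_BASE_MS;
    for (uint32_t i = 0; i < attempt && delay < RETRY_CAP_MS; i++) {
        delay *= 2u;
    }
    return delay > RETRY_CAP_MS ? RETRY_CAP_MS : delay;
}

/* Call after each failed attempt. On RETRY_AFTER_DELAY, *delay_ms holds the
   wait before the next try; on RETRY_GIVE_UP the caller must escalate
   (e.g. hard-reset the modem) instead of looping. No watchdog kick here. */
retry_action_t retry_on_failure(retry_state_t *s, uint32_t *delay_ms) {
    s->failures++;
    if (s->failures >= RETRY_MAX_ATTEMPTS) {
        return RETRY_GIVE_UP;
    }
    *delay_ms = backoff_delay_ms(s->failures - 1u);
    return RETRY_AFTER_DELAY;
}

/* Call after a successful attempt so the next outage starts fresh. */
void retry_on_success(retry_state_t *s) {
    s->failures = 0;
}
```

On FreeRTOS the caller might sleep with `vTaskDelay(pdMS_TO_TICKS(delay))`. The important design choice is that a give-up path exists at all, so a dead modem ends in a hard reset rather than an endless loop.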

2. Mutex or Lock Contention

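```c
while (xSemaphoreTake(mutex, 0) != pdTRUE) {
    kick_watchdog();
}
```

Polling with a zero block time and kicking the watchdog on every failed attempt traps the task indefinitely if the lock holder never releases the mutex.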
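With FreeRTOS, the usual fix is to pass a nonzero block time to `xSemaphoreTake()` (for example `pdMS_TO_TICKS(50)`) and treat a `pdFALSE` result as an error instead of retrying forever. The host-runnable sketch below shows that control flow using a hypothetical single-threaded stand-in, `sim_mutex_t`, in place of the RTOS mutex; `write_shared_config()`, `lock_timeouts`, and `LOCK_TIMEOUT_MS` are illustrative names, not part of any library.

```c
#include <stdbool.h>
#include <stdint.h>

#define LOCK_TIMEOUT_MS 50u   /* assumed upper bound on a legitimate hold time */

/* Hypothetical single-threaded stand-in for an RTOS mutex. On target this
   would be a SemaphoreHandle_t taken with
   xSemaphoreTake(mutex, pdMS_TO_TICKS(LOCK_TIMEOUT_MS)). */
typedef struct { bool held; } sim_mutex_t;

static bool sim_take(sim_mutex_t *m, uint32_t timeout_ms) {
    (void)timeout_ms;        /* on a host, nothing can release it meanwhile */
    if (m->held) {
        return false;        /* models "still held after timeout_ms" */
    }
    m->held = true;
    return true;
}

static void sim_give(sim_mutex_t *m) { m->held = false; }

typedef enum { CFG_OK, CFG_LOCK_TIMEOUT } cfg_status_t;

uint32_t lock_timeouts;   /* exposed to diagnostics; never used to kick the watchdog */

/* Bounded wait: either the update happens, or the caller gets an error it
   can log, retry later, or escalate. The task never spins on the lock. */
cfg_status_t write_shared_config(sim_mutex_t *m, int *shared, int value) {
    if (!sim_take(m, LOCK_TIMEOUT_MS)) {
        lock_timeouts++;
        return CFG_LOCK_TIMEOUT;
    }
    *shared = value;
    sim_give(m);
    return CFG_OK;
}
```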

3. Interrupt Flooding

Examples:

- noisy GPIO inputs

- misconfigured SPI interrupt lines

- CAN bus flooding

- wireless driver interrupt storms

The main loop starves and never progresses.
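A common mitigation is to give each interrupt source an event budget per time window and mask it when the budget is exceeded, so the rest of the system can keep running. The sketch below assumes one hypothetical `irq_guard_t` per source: the ISR calls `irq_guard_on_interrupt()`, and a periodic timer or task calls `irq_guard_end_window()` and enables or masks the source based on the result. The threshold and hold-off values are placeholders that would need to be sized from the real worst-case interrupt rate.

```c
#include <stdbool.h>
#include <stdint.h>

/* Placeholder limits; size them from the source's real worst-case rate. */
#define STORM_MAX_PER_WINDOW   200u   /* interrupts allowed per window */
#define STORM_HOLDOFF_WINDOWS   50u   /* windows to keep a stormy source masked */

typedef struct {
    volatile uint32_t count;   /* incremented by the ISR */
    uint32_t holdoff;          /* windows left before re-enabling */
    uint32_t storms;           /* storms detected, for diagnostics */
} irq_guard_t;

/* Call first thing in the ISR. */
void irq_guard_on_interrupt(irq_guard_t *g) {
    g->count++;
}

/* Call once per window (e.g. every 10 ms) from a timer or task.
   Returns true if the source should be enabled for the next window,
   false if it should be (or stay) masked. */
bool irq_guard_end_window(irq_guard_t *g) {
    /* On target, read and clear with this interrupt masked (or use an
       atomic exchange) so no ISR increment is lost between the two steps. */
    uint32_t n = g->count;
    g->count = 0;

    if (g->holdoff > 0u) {
        g->holdoff--;
        return g->holdoff == 0u;   /* re-enable once the hold-off expires */
    }
    if (n > STORM_MAX_PER_WINDOW) {
        g->storms++;
        g->holdoff = STORM_HOLDOFF_WINDOWS;
        return false;              /* mask: lets the main loop make progress */
    }
    return true;
}
```

If the fault persists, the source is masked again on the next storm and `storms` keeps growing, which gives the recovery logic a concrete signal to reinitialize or reset the offending peripheral.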

4. Busy Waiting

```c
while (!adc_ready);
```

Polling a flag without event signaling or a timeout creates non-productive execution: if the conversion never completes, the task spins forever.
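The busy-wait can at least be bounded by comparing elapsed ticks against a timeout. The sketch below assumes a hypothetical millisecond counter `tick_ms` incremented by a timer ISR (on FreeRTOS, `xTaskGetTickCount()` would fill the same role) and shows the wraparound-safe unsigned comparison that keeps the timeout correct when the counter overflows. Where the RTOS supports it, blocking on an event such as a task notification from the ADC ISR avoids spinning entirely, and the same timeout still applies.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical signals: tick_ms is incremented by a 1 ms timer ISR and
   adc_ready is set by the ADC end-of-conversion ISR. Both are volatile so
   the compiler re-reads them on every loop iteration. */
volatile uint32_t tick_ms;
volatile bool adc_ready;

/* Unsigned subtraction yields the elapsed ticks even after tick_ms
   wraps past 0xFFFFFFFF, so this stays correct across overflow. */
bool timeout_expired(uint32_t start, uint32_t now, uint32_t timeout_ms) {
    return (uint32_t)(now - start) >= timeout_ms;
}

/* Bounded replacement for `while (!adc_ready);`. Returns false on timeout
   so the caller can reinitialize the ADC instead of spinning forever. */
bool wait_adc_ready(uint32_t timeout_ms) {
    uint32_t start = tick_ms;
    while (!adc_ready) {
        if (timeout_expired(start, tick_ms, timeout_ms)) {
            return false;
        }
    }
    adc_ready = false;   /* consume the event */
    return true;
}
```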

5. Dead Peripherals

Issues such as:

- frozen I2C slaves

- misclocked SPI devices

- unresponsive modems

cause software to wait forever for a state change that never occurs.
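For a frozen I2C slave that holds SDA low, the I2C-bus specification (NXP UM10204) describes a bus-clear procedure: the master sends up to nine clock pulses on SCL so the slave can finish shifting out its byte and release SDA, then issues a STOP. The sketch below assumes SCL and SDA can be temporarily driven as open-drain GPIOs through hypothetical hooks in `i2c_pins_t`; the simulated slave at the bottom exists only so the logic can be exercised on a host.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical pin hooks: on target, SCL and SDA would be switched from the
   I2C peripheral to open-drain GPIOs for the duration of the recovery. */
typedef struct {
    void (*scl_set)(bool high);
    void (*sda_set)(bool high);
    bool (*sda_read)(void);
    void (*delay_us)(uint32_t us);
} i2c_pins_t;

/* Bus clear: pulse SCL up to 9 times until the slave releases SDA, then send
   a STOP. Returns false if SDA is still low, meaning the slave needs a
   hardware reset or power cycle. 5 us half-periods give roughly 100 kHz. */
bool i2c_bus_clear(const i2c_pins_t *p) {
    p->scl_set(true);
    p->sda_set(true);                 /* release SDA from the master side */
    for (int i = 0; i < 9 && !p->sda_read(); i++) {
        p->scl_set(false); p->delay_us(5);
        p->scl_set(true);  p->delay_us(5);
    }
    if (!p->sda_read()) {
        return false;
    }
    /* STOP condition: SDA goes low-to-high while SCL is high. */
    p->scl_set(false); p->delay_us(5);
    p->sda_set(false); p->delay_us(5);
    p->scl_set(true);  p->delay_us(5);
    p->sda_set(true);  p->delay_us(5);
    return p->sda_read();
}

/* ---- Host-only simulation of a slave stuck mid-byte (illustration). ---- */
int sim_edges_left;          /* SCL falling edges before the slave lets go */
static bool sim_scl = true;
static bool sim_sda_out = true;

static void sim_scl_set(bool high) {
    if (sim_scl && !high && sim_edges_left > 0) {
        sim_edges_left--;    /* slave shifts out one more bit */
    }
    sim_scl = high;
}
static void sim_sda_set(bool high) { sim_sda_out = high; }
static bool sim_sda_read(void) { return sim_sda_out && sim_edges_left == 0; }
static void sim_delay_us(uint32_t us) { (void)us; }

const i2c_pins_t sim_pins = { sim_scl_set, sim_sda_set, sim_sda_read, sim_delay_us };
```

When `i2c_bus_clear()` returns false, the next escalation step is the slave's reset input or its supply rail, which is exactly the "assuming peripherals self-recover" trap to avoid.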

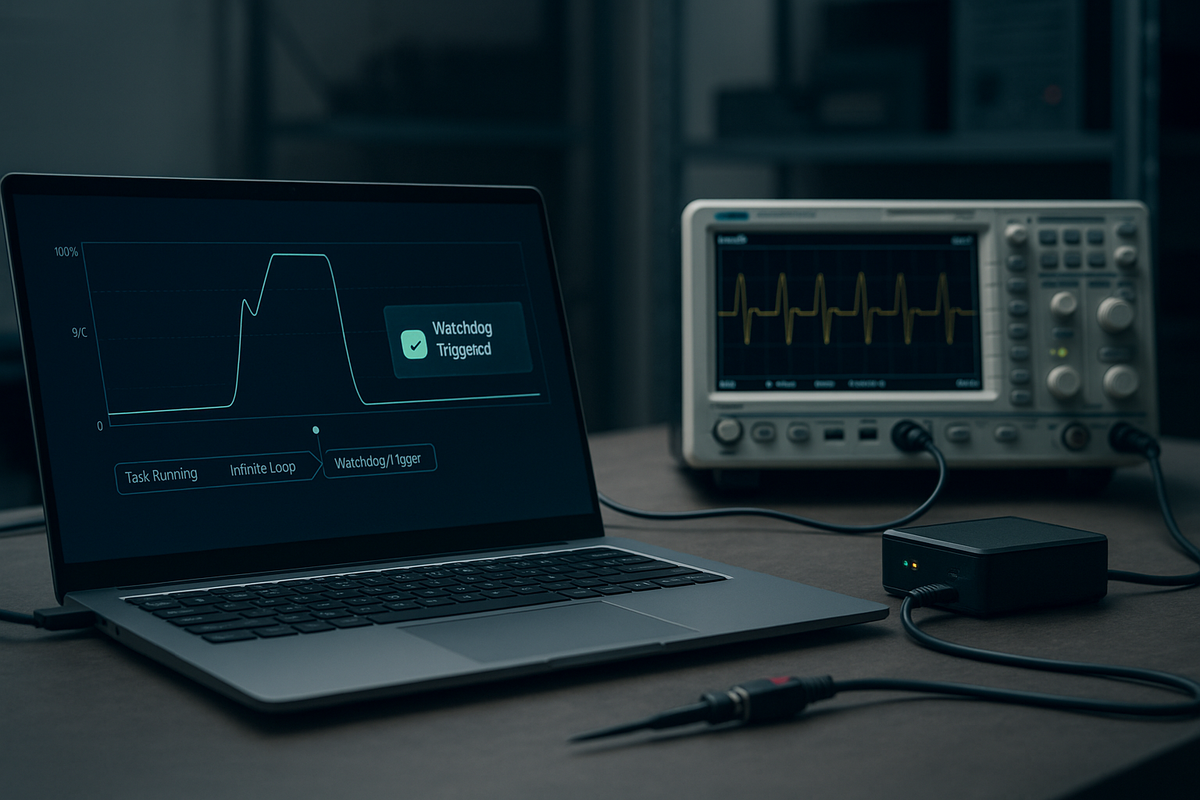

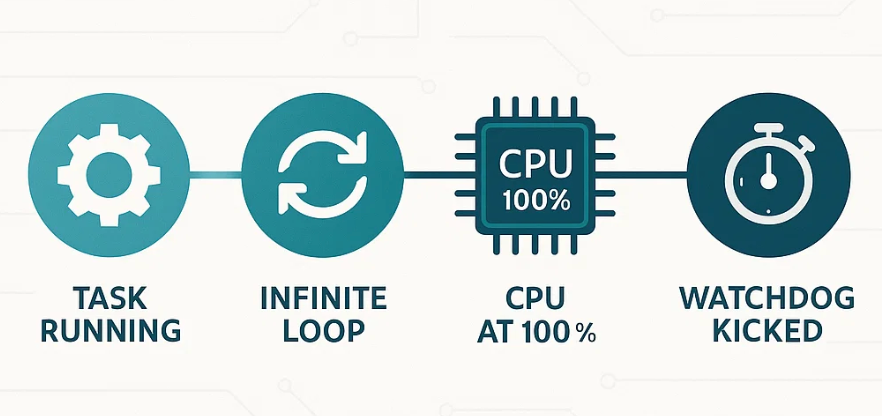

Why Traditional Watchdogs Fail

Traditional watchdogs detect:

- halted execution

- total software hangs

They do not detect:

- infinite loops that still service the watchdog

- repeated retries

- stalled state machines

- blocked queues

- starvation conditions

The system appears active and keeps servicing the watchdog, so no corrective action is ever triggered.

Solution: Progress-Based Watchdogs

The core principle is:

Only kick the watchdog when measurable progress has been made.

Examples of progress:

- new data acquired

- successful communication

- state transition

- processed queue element

- completed control loop iteration

Implementation Example (RTOS)

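```c
#include <stdint.h>

extern volatile uint32_t progress_counter;  /* incremented by tasks on real work */
void reset_system(void);
void hw_wdt_kick(void);

/* Runs once per supervision window, e.g. from a periodic RTOS task. */
void watchdog_task(void) {
    static uint32_t last_counter;
    uint32_t now = progress_counter;     /* read once to avoid a race */

    if (now == last_counter) {
        reset_system();                  /* no progress this window; assumed not to return */
    }
    last_counter = now;
    hw_wdt_kick();                       /* reached only after progress was observed */
}
```

This approach ensures the watchdog monitors forward motion rather than raw CPU activity.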
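A single counter can be kept alive by one healthy task while another is stalled. A common extension is a per-task check-in: each monitored task sets its own bit through a hypothetical `report_progress()` after completing useful work, and the watchdog task calls `supervisor_check()` once per window, kicking the hardware watchdog only if every bit was set. This sketch assumes C11 `<stdatomic.h>` is available on the target toolchain; the task IDs are illustrative.

```c
#include <stdatomic.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical monitored tasks. */
enum { TASK_SENSOR, TASK_NETWORK, TASK_CONTROL, TASK_COUNT };
#define ALL_TASKS_MASK ((1u << TASK_COUNT) - 1u)

/* One bit per task, set when that task completes a unit of real work. */
static _Atomic uint32_t progress_bits;

/* Called by a task after useful work: a sample stored, a message sent,
   a control-loop iteration finished. Never called from a retry path. */
void report_progress(unsigned task_id) {
    atomic_fetch_or(&progress_bits, 1u << task_id);
}

/* Called once per supervision window by the watchdog task. Returns true if
   every task made progress, i.e. the hardware watchdog may be kicked.
   *missing receives the bits of tasks that did not report. */
bool supervisor_check(uint32_t *missing) {
    uint32_t seen = atomic_exchange(&progress_bits, 0u);  /* read and reset */
    *missing = ALL_TASKS_MASK & ~seen;
    return *missing == 0u;
}
```

The watchdog task would then do something like `if (supervisor_check(&missing)) hw_wdt_kick();`. Withholding the kick, rather than calling a reset function directly, lets the hardware watchdog perform the reset even if the supervisor itself is wedged, and logging `missing` first tells the post-mortem which task stalled.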

Case Study: Production Failure and Resolution (Hoomanely Context)

Hoomanely develops modular IoT pet-care systems where timely and reliable operation is essential. One deployed Hoomanely Everbowl experienced intermittent connectivity due to poor network conditions. During modem firmware freezes, the networking stack entered a state similar to:

```
connect -> fail -> retry -> fail -> retry
```

The watchdog continued to be serviced during each retry. As a result, the device remained unresponsive for several hours and missed feeding schedules.

Implemented Fix

The following enhancements were deployed:

- progress counters for successful data transmission

- retry limits with backoff

- modem hard reset triggers

- progress-aware watchdog supervision

After deployment:

- the failure rate decreased by a significant margin

- no missed feeding cycles were reported

- watchdog resets dropped significantly

This improvement reinforced Hoomanely's mission to deliver reliable preventive healthcare devices for pet households. It also strengthened our platform architecture across all product lines.

Modern Recovery Pipelines

A robust recovery system must:

- detect livelock conditions

- attempt automated recovery

- escalate recovery in controlled stages

Typical sequence:

- detect stall

- soft subsystem restart

- hardware peripheral reset

- module power cycle

- full system reboot

- safe mode boot

- failure reporting and logging

Example

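```c
/* Each check re-evaluates progress after the previous recovery step
   has had time to take effect. */
if (no_network_progress()) {
    restart_network_stack();
    if (still_no_progress()) {
        power_cycle_modem();
        if (still_no_progress()) {
            system_reboot();
        }
    }
}
```

This structured recovery avoids unnecessary system resets and maximizes uptime.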
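The nested checks can also be expressed as a small state machine that climbs one stage of the typical sequence on each failed progress check and drops back to the start once progress resumes. The stage names in `recovery_stage_t` mirror the sequence listed earlier; `recovery_step()` is a hypothetical helper, and actually executing each returned stage (restarting the stack, toggling a reset line, and so on) is left to the caller.

```c
#include <stdbool.h>

/* Mirrors the typical escalation sequence; declaration order matters. */
typedef enum {
    STAGE_NONE,            /* healthy: nothing to do */
    STAGE_RESTART_STACK,   /* soft subsystem restart */
    STAGE_RESET_PERIPH,    /* hardware peripheral reset */
    STAGE_POWER_CYCLE,     /* module power cycle */
    STAGE_REBOOT,          /* full system reboot */
    STAGE_SAFE_MODE        /* safe mode boot, then report */
} recovery_stage_t;

typedef struct {
    recovery_stage_t stage;   /* last stage attempted */
} recovery_t;

/* Call once per check interval. `made_progress` says whether the subsystem
   produced measurable progress since the previous call. Returns the action
   to perform now; STAGE_NONE means no action. */
recovery_stage_t recovery_step(recovery_t *r, bool made_progress) {
    if (made_progress) {
        r->stage = STAGE_NONE;                        /* recovered: de-escalate */
        return STAGE_NONE;
    }
    if (r->stage < STAGE_SAFE_MODE) {
        r->stage = (recovery_stage_t)(r->stage + 1);  /* escalate one level */
    }
    return r->stage;
}
```

Stages from `STAGE_REBOOT` onward only work if the current stage survives the reset, for example in retained RAM or flash; otherwise every reboot starts again at the first stage and safe mode is never reached.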

Common Implementation Errors

Avoid the following practices:

- kicking the watchdog in every loop

- missing timeouts on semaphores or locks

- infinite retry loops

- ignoring hardware reset controls

- assuming peripherals self-recover

- servicing watchdogs inside interrupt handlers

- relying solely on hardware watchdogs

These patterns almost guarantee field failures.

Blueprint for Production-Grade Watchdog Strategy

Key principles:

Detect Progress

Track counters or state transitions.

Limit Retries

Use exponential backoff with maximum attempts.

Reset Peripherals

Power cycle or reinitialize modules when required.

Escalate Recovery

Move from soft resets to full system recovery when needed.

Log Failures

Enable post-mortem analysis.
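One lightweight way to enable post-mortem analysis is a small record written just before a supervised reset and read back at boot. The sketch below assumes the record lives in RAM that the startup code does not zero (how that is arranged depends on the linker script; a `.noinit` section is a common convention with GCC toolchains) and protects it with a magic number and an FNV-1a checksum so power-on garbage is not mistaken for a real log. All names are illustrative.

```c
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

#define CRASHLOG_MAGIC 0xC0FFEE01u

/* Written just before a supervised reset, read back at boot. On target it
   would be placed in RAM that startup code does not zero (linker-script
   specific), so it survives a warm reset but not a power loss. */
typedef struct {
    uint32_t magic;
    uint32_t reset_count;    /* consecutive supervised resets */
    uint32_t last_stage;     /* recovery stage that triggered the reset */
    uint32_t missing_tasks;  /* progress bits that were missing */
    uint32_t checksum;       /* rejects power-on garbage and torn writes */
} crashlog_t;

/* FNV-1a (32-bit) over every field except the checksum. */
static uint32_t crashlog_hash(const crashlog_t *c) {
    const uint32_t words[4] = { c->magic, c->reset_count,
                                c->last_stage, c->missing_tasks };
    uint32_t h = 2166136261u;
    for (int i = 0; i < 4; i++) {
        for (int b = 0; b < 4; b++) {
            h ^= (words[i] >> (8 * b)) & 0xFFu;
            h *= 16777619u;
        }
    }
    return h;
}

bool crashlog_valid(const crashlog_t *c) {
    return c->magic == CRASHLOG_MAGIC && c->checksum == crashlog_hash(c);
}

/* Call immediately before triggering the reset. */
void crashlog_record(crashlog_t *c, uint32_t stage, uint32_t missing) {
    uint32_t count = crashlog_valid(c) ? c->reset_count + 1u : 1u;
    c->magic = CRASHLOG_MAGIC;
    c->reset_count = count;
    c->last_stage = stage;
    c->missing_tasks = missing;
    c->checksum = crashlog_hash(c);
}

/* Call at boot once the record has been reported or persisted. */
void crashlog_clear(crashlog_t *c) {
    memset(c, 0, sizeof *c);
}
```

At boot, firmware might check `crashlog_valid()`, upload or store the record, and use `reset_count` to decide when repeated resets should send the device into safe mode.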

Key Takeaways

- Most embedded failures result from livelocks, not crashes

- Traditional watchdogs cannot detect livelocks

- Progress-aware watchdogs provide significant reliability improvements

- Structured recovery pipelines increase system resilience

- Reliability requires design discipline, not luck

At Hoomanely, these principles have directly improved system uptime and customer trust across our ecosystem.

A missing timeout or an incorrectly placed watchdog kick can lead to widespread product failures. Investing in robust detection and recovery strategies protects both customers and engineering teams.